fastai v2 walk-thru #3

Chapters

0:06:32 Default Device

16:5 Source Links

20:30 Normalization

29:48 Tab Completion

32:10 Tab-Completion on Change Commands

34:18 Add Props

36:15 Daily Loader

36:39 Data Loader

49:57 Non Indexed or Iterable Data Sets

53:40 Infinite Lists

54:12 Itertools

57:10 Create Item

63:37 Worker Init Function

65:54 Create Batch

66:11 Language Model Data Loader

71:5 Retain Types

00:00:00.000 | Hey, folks, can you hear me okay?

00:00:12.220 | Can you see everything okay?

00:00:20.760 | Hello, Pedro, hello, everybody.

00:00:33.200 | Okay, so, if you hadn't noticed yet, I just pinned the daily code.

00:00:50.480 | And at the top of it, I'm just going to keep the video and notes for each one.

00:01:02.280 | And so, special extra big thanks to Elena, again, for notes, and also to Vishnu, so we've

00:01:14.480 | got two sets of notes at the moment, which is super helpful, I think.

00:01:25.580 | So let's see.

00:01:29.200 | And if anybody has any questions about previous lessons, after they've had a chance to look

00:01:34.120 | at them, feel free to ask during the calls as well, of course, or feel free to ask about

00:01:39.200 | pretty much anything, although I reserve the right to not answer.

00:01:45.320 | But I will certainly answer if I think it might be of general interest to people.

00:01:50.640 | So we were looking at data core yesterday.

00:01:58.680 | Before we look at that, I might point out 08.

00:02:13.080 | I made some minor changes to one of the 08 versions, which specifically is the Siamese

00:02:20.480 | model dataset one.

00:02:22.480 | I moved the Siamese image type up to the top, and I then removed the Siamese image.create

00:02:38.380 | method, because we weren't really using it for anything.

00:02:43.120 | And instead, I realized, you know, we can actually just make the pipeline say Siamese

00:02:47.560 | image.

00:02:48.560 | So that's going to call Siamese image as a function at the end.

00:02:53.440 | In other words, it's going to pass, it's going to call its constructor, so it's going to

00:02:57.560 | create the Siamese image from the tuple.

00:03:01.640 | I also, rather than deriving from tuple transform to create open and resize, this is actually

00:03:11.520 | an easier way to do it, is you can just create a tuple transform and pass in a function.

00:03:18.920 | So in this case, you just have to make sure that the function resized image is defined

00:03:25.280 | to such that the first parameter has a type, if you want your transform to be typed.

00:03:37.680 | Because we're using these kind of mid-level things, it's a little bit more complicated

00:03:45.760 | than usual.

00:03:46.760 | Normally, you don't have to create a tuple transform.

00:03:49.320 | If you create a data source or a transformed list or a transformed dataset, then it will

00:03:57.880 | automatically turn any functions into the right kind of transform.

00:04:02.720 | So we're doing more advanced stuff than probably most users would have to worry about.

00:04:08.760 | But I think that people who are on this call are interested in learning about the more

00:04:15.840 | advanced stuff.

00:04:17.720 | But if you look at the versions after that, we didn't have to do anything much in terms

00:04:28.760 | of making this all work.

00:04:33.400 | Okay, so yeah, we didn't have to worry about tuple transforms or item transforms or whatever,

00:04:41.280 | because these things like tf.ms know how to handle different parts of the pipeline appropriately.

00:04:47.520 | Okay, so that's something I thought I would mention.

00:04:52.740 | And you can also see that the segmentation, again, we didn't have to do it much at all.

00:04:58.080 | We just defined normal functions and stuff like that.

00:05:05.440 | Okay, so we were looking at O5, and I think we did, we finished with MNIST, right?

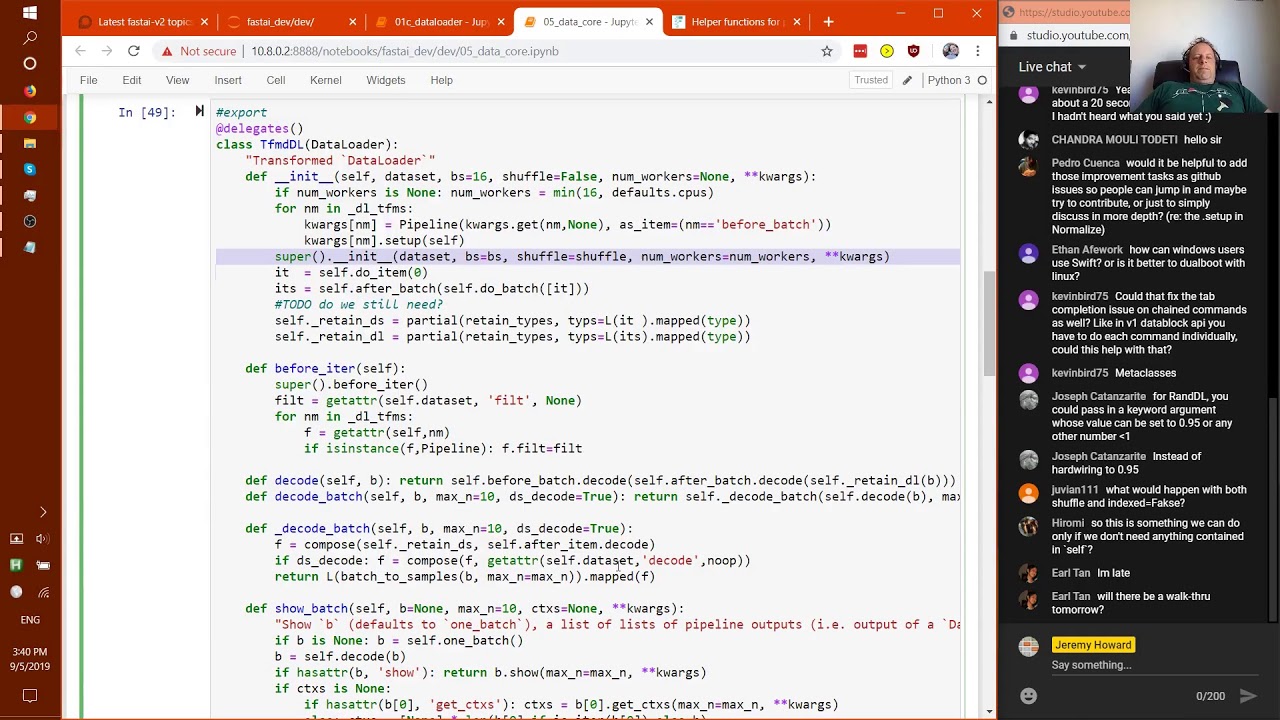

00:05:21.200 | And then we were starting to look at tf.mdl.

00:05:25.920 | And so rather than going into the details of tf.mdl, let's just look at some examples

00:05:30.320 | of things that can use tf.mdl.

00:05:31.920 | So in data core, we have to find a few more transforms.

00:05:40.880 | As you can see, here's an example of a transform, which is CUDA.

00:05:48.020 | So CUDA is a transform, which will move a batch to some device, which defaults this is

00:06:02.060 | now incorrect documentation.

00:06:04.480 | So let's fix it.

00:06:08.480 | Default device is what it defaults to.

00:06:28.960 | So this might be interesting to some of you.

00:06:33.840 | Default device, as you can see, returns a device.

00:06:39.280 | And so I share a server with some other people.

00:06:42.000 | So we've each decided like which GPU is going to be our device.

00:06:46.600 | So I'm using device number five.

00:06:49.560 | You can change the default device by just passing in a device.

00:06:58.600 | So in fact, you can just say forch.cuda.setDevice.

00:07:10.720 | And then that will change it.

00:07:13.600 | The reason we have a default device function is that you can pass in useCuda true or false.

00:07:21.440 | And so it's kind of handy, you can just pass in useCuda equals false.

00:07:27.800 | And it will set the default device to CPU.

00:07:34.280 | Otherwise it will set the default device to whatever you put into torch.cuda.setDevice.

00:07:41.480 | This is a really nice, easy way to cause all of fast AI to go between CUDA and non-Cuda

00:07:47.720 | and also to ensure that the same device is used everywhere, if you wish to be used everywhere.

00:07:54.880 | You never have to use the default device.

00:07:56.760 | You can also always just pass in a specific device to the transform.

00:08:02.720 | But if you pass in none, then it's going to use the default.

00:08:10.080 | So I mentioned transforms look a lot like functions.

00:08:18.040 | As you can see here, we're creating the CUDA transform and then we call it just like a

00:08:22.080 | function.

00:08:25.760 | And when we do call them like a function, they're going to call in codes.

00:08:30.260 | And the reasons that we don't just use a function, there's a couple.

00:08:34.200 | The first is that you might want to have some state.

00:08:36.880 | So in this case, we wanted some state here.

00:08:39.400 | Now, of course, you could do that with a with a partial instead.

00:08:45.600 | But this is just a nice simple way to consistently do it.

00:08:49.800 | And then the second reason is because it's nice to have this decodes method.

00:08:54.760 | And so this is quite nice that we now have a CUDA method that automatically puts things

00:08:58.160 | back on the CPU.

00:08:59.160 | When you're done, it's going to really help to avoid memory leaks and stuff like that.

00:09:09.280 | So like a lot of the more advanced functionality in fast AI tends to be stuff which is like

00:09:17.400 | pretty optional, right?

00:09:18.840 | So you don't have to use a CUDA transform.

00:09:22.880 | You could just put in a pipeline to device as a function.

00:09:32.120 | And that'll work absolutely fine.

00:09:35.080 | But it's not going to do the extra stuff of making sure that you don't have memory leaks

00:09:39.760 | and stuff by automatically calling decodes for you.

00:09:42.920 | So most of the kind of more advanced functionality in fast AI is designed to both be optional

00:09:51.400 | and also have like intuitive behavior, like do what you would expect it to do.

00:09:58.480 | Okay.

00:09:59.560 | So that's the CUDA transform.

00:10:03.720 | One interesting feature that you might have noticed we've seen before is there's a decorator

00:10:08.440 | called at docs that we use quite a lot.

00:10:12.520 | And what does at docs do?

00:10:14.880 | Well, you can see it underneath when I say show doc CUDA.decodes.

00:10:21.640 | It's returning this doc string.

00:10:24.800 | And the doc string is over here.

00:10:27.240 | So basically what at docs is going to do is it's going to try and find an attribute called

00:10:31.540 | underscore docs, which is going to be a dictionary.

00:10:34.440 | And it's going to use that for the doc strings.

00:10:37.760 | You don't have to use it for all the doc strings.

00:10:39.960 | But we very, very often because our code is so simple, we very, very often have one liners

00:10:44.800 | which, you know, if you add a doc string to that, it's going to become a three-liner,

00:10:49.000 | which is just going to take up a whole lot of stuff.

00:10:53.640 | So this way, yeah, you can have kind of much more concise little classes.

00:10:58.120 | I also kind of quite like having all the documentation in one place, personally.

00:11:03.660 | So Joseph asks, what is B?

00:11:06.680 | So B is being, so let's look at that from a couple of directions.

00:11:11.720 | The first is, what's being passed to encodes.

00:11:15.960 | Encodes is going to be passed whatever you pass to the callable, which in this case would

00:11:21.040 | be tensor one, just like in an end module forward, you'll get passed whatever is passed

00:11:28.680 | to the callable, specifically B in this case is standing for as Kevin's guest batch.

00:11:37.040 | And so basically, if you put this transform into the data loader in after batch, then

00:11:44.520 | it's going to get a complete batch and it'll pop it onto whatever device you request, which

00:11:51.600 | by default, if you have a GPU will be your first GPU.

00:11:58.120 | You'll see here that the test is passing in a tuple containing a single tensor.

00:12:06.760 | And of course, that's normally what batches contain.

00:12:09.080 | They contain tuples of tensors, normally that have two tensors, X and Y.

00:12:15.000 | And so things like two device and two CPU will work perfectly fine on tuples as well.

00:12:21.280 | They will just move everything in that tuple across to the device or the CPU.

00:12:26.520 | The tuples could also contain dictionaries or lists, it just does it recursively.

00:12:31.200 | So if we have a look at two device, you'll see that what it does is it sets up a function

00:12:40.540 | which calls the .2 function in PyTorch.

00:12:46.040 | And it's going to pass it through to the device, but then it doesn't just call that function,

00:12:51.880 | it calls apply that function to that batch.

00:12:56.120 | And so what apply does is a super handy function for PyTorch is it's going to apply this function

00:13:03.200 | recursively to any lists, dictionaries and tuples inside that argument.

00:13:11.740 | So as you can see, it's basically looking for things that are lists or instances and

00:13:20.320 | calling them appropriately.

00:13:23.160 | PyTorch has an apply as well, but this is actually a bit different because rather than

00:13:27.480 | changing something, it's actually returning the result of applying and it's making every

00:13:32.360 | effort to keep all the types consistent as we do everywhere.

00:13:36.200 | So for example, if your list thing is actually some subclass of tuple or list, it'll make

00:13:41.960 | sure that it keeps that subclass all the way through.

00:13:46.640 | Okay, for those of you that haven't looked at the documentation framework in ClassDiv1,

00:13:55.680 | you might not have seen show_doc before, show_doc is a function which returns, as you see, documentation.

00:14:04.280 | So if we go to the documentation, let's take a look at CUDA, here is, you can see CUDA.encodes

00:14:24.240 | return batch2_CPU, which is obviously the wrong way around, that should say encodes.

00:14:37.320 | Right, nevermind, let's do decodes since I had that the right way around, CUDA.decodes

00:14:49.360 | return batch2_CPU.

00:14:50.360 | So basically, what show_doc does is it creates the markdown that's going to appear in the

00:14:57.480 | documentation.

00:14:58.480 | This is a bit different to how a lot of documentation generators work.

00:15:03.800 | I really like it because it basically allows us to auto include the kind of automatic documentation

00:15:09.640 | anywhere we like, but then we can add our own markdown and code at any point.

00:15:17.440 | So it kind of lets us construct documentation which has auto-generated bits and also manual

00:15:22.800 | bits as well, and we find that super handy.

00:15:27.280 | When you export the notebooks to HTML, it will automatically remove the show_doc line.

00:15:33.520 | So as you can see, you can't actually see it saying show_doc.

00:15:38.040 | The other thing to note is that for classes and functions, you don't have to include the

00:15:44.760 | show_doc, it'll automatically add it for you, but for methods, you do have to put it there.

00:15:55.960 | All right, so that's kind of everything there, and you can see in the documentation, the

00:16:04.080 | show_doc kind of creates things like these source links, which if you look at the bottom

00:16:09.160 | of the screen, you can see that the source link when I'm inside the notebook will link

00:16:14.040 | to the notebook that defines the function, whereas in the documentation, the source link

00:16:21.600 | will link to the -- actually, this isn't quite working yet.

00:16:29.760 | I think once this is working, this should be linking to the GitHub where that's defined.

00:16:38.920 | So that's something I should note down to fix.

00:16:45.360 | It's working in first AI v1, so it should be easy to fix.

00:16:56.240 | All right.

00:16:59.480 | So you can see here our tests, so we create the transform, we call it as if it's a function,

00:17:08.040 | we test that it is a tuple that contains this, and we test that the type is something that's

00:17:19.180 | now putified, and then we should be able to decode that thing we just created, and now

00:17:25.000 | it should be uncutified.

00:17:26.440 | So as I mentioned last time, putting something that's a byte, making it into a float takes

00:17:35.280 | a surprisingly long time on the CPU.

00:17:39.480 | So by doing this as a PyTorch transform, we can automatically have it run on the GPU if

00:17:47.480 | we put it in that part of the pipeline.

00:17:51.840 | And so here you'll see we've got one pair of encodes/decodes for a tensor image, and

00:17:59.360 | one pair of encodes/decodes for a tensor mask.

00:18:02.920 | And the reason why, as you can see, the tensor mask version converts to a long if you're

00:18:25.520 | trying to divide by 255 if you requested it, which makes sense because masks have to be

00:18:36.520 | long.

00:18:41.040 | And then the decodes, if you're decoding a tensor image, then sometimes you end up with

00:18:49.960 | like 1.00001, so we clamp it to avoid those redundant errors, but for masks we don't have

00:18:57.280 | to because it was a long, so we don't have those floating point issues.

00:19:00.840 | So this is kind of, yeah, this is where this auto dispatch stuff is super handy.

00:19:08.160 | And again, we don't have to use any of this, but it makes life a lot easier for a lot of

00:19:14.040 | things if you do.

00:19:16.520 | So Kevin, I think I've answered your question, but let me know if I haven't.

00:19:20.000 | I think the answer is it doesn't need it.

00:19:22.400 | And the reason it shouldn't need it is that it's got a tensor mask coming in.

00:19:28.680 | And so by default, if you don't have a return type and it returns a superclass of the thing

00:19:35.840 | that it gets, it will cast it to the subclass.

00:19:38.920 | So yeah, this is all passing without that.

00:19:43.720 | Okay.

00:19:50.560 | So I think that since it's doing nothing, I don't think we should need a decodes at all

00:19:57.080 | because by definition, decodes does nothing.

00:20:02.720 | Okay.

00:20:05.800 | Great.

00:20:08.640 | That's interesting, there's about a 20-second delay.

00:20:15.120 | I think there's an option in YouTube video to say low latency.

00:20:19.120 | We could try that.

00:20:20.120 | It says that the quality might be a little bit less good.

00:20:22.120 | So we'll try that next time.

00:20:24.280 | Remind me if I forget and we'll see if the quality is still good enough.

00:20:29.400 | Okay.

00:20:31.400 | So normalization, like here's an example of where the transform stuff is just so, so,

00:20:36.840 | so, so, so helpful because it's so annoying in fast AI v1 and every other library having

00:20:43.520 | to remember to denormalize stuff when you want to display it.

00:20:48.920 | But thanks to decodable transforms, we have state containing the main and standard deviation

00:20:55.000 | that we want.

00:20:57.080 | And so now we have decodes.

00:21:00.840 | So that is super helpful, right?

00:21:04.320 | So here's how it works.

00:21:08.480 | Let's see, normalize.

00:21:13.080 | So we can create some data loader transform pipeline.

00:21:17.360 | It does CUDA, then converts to a float.

00:21:21.160 | And then normalizes with some main and standard deviation.

00:21:25.720 | So then we can get a transform data loader from that.

00:21:29.520 | So that's our after batch transform pipeline.

00:21:34.720 | And so now we can grab a batch and we can decode it.

00:21:45.280 | And as you can see, we end up with the right means instead of deviations for everything

00:21:54.440 | and we can display it.

00:21:55.860 | So that is super great.

00:22:01.440 | Okay, so that's a slightly strange test.

00:22:16.800 | I think we're just getting lucky there because X.main -- oh, I see.

00:22:33.280 | It's because we're not using a real main and standard deviation.

00:22:35.800 | So the main ends up being less than zero, if that makes sense.

00:22:44.640 | BroadcastVec is just a minor little convenience function which our main and standard deviation

00:22:51.960 | needs to be broadcast over the batch dimension correctly.

00:22:57.720 | So that's what BroadcastVec is doing, is it's broadcasting to create a rank four tensor

00:23:05.720 | by broadcasting over the first dimension.

00:23:13.600 | And so there's certainly room for us to improve normalize, which we should do I guess by maybe

00:23:18.800 | adding a setup method which automatically calculates a main and standard deviation from

00:23:25.280 | one batch of data or something like that.

00:23:27.560 | For now, as you can see, it's all pretty manual.

00:23:32.120 | Maybe we should make BroadcastVec happen automatically as well.

00:23:36.680 | Add that, inside normalize, adding normalize.setup.

00:23:52.840 | So remember setup's the thing that is going to be passed the data, the training data that

00:23:57.400 | we're using.

00:23:58.400 | So we can use that to automatically set things up.

00:24:04.160 | Okay, so that's normalize.

00:24:13.160 | So one of the things that sometimes you fast AI users complain about is data bunch as being

00:24:24.400 | like an abstraction that gets used quite widely in fast AI and seems like a complex thing

00:24:29.040 | to understand.

00:24:31.280 | Here is the entire definition of data bunch in fast AI version two.

00:24:36.920 | So hopefully we can all agree that this is not something we're going to have to spend

00:24:41.480 | a lot of time getting our heads around.

00:24:43.280 | A data bunch quite literally is simply something that contains a training data loader and a

00:24:51.200 | validation data loader.

00:24:53.480 | So that's literally all it is.

00:24:55.280 | And so if you don't want to use our data bunch class, you could literally just go, you know,

00:25:04.920 | data bunch equals named tuple train dl equals whatever, valid dl equals whatever, and now

00:25:19.720 | you can use that as a data bunch.

00:25:22.960 | But there's no particular reason to because you could just go data bunch equals data bunch

00:25:29.760 | and pass in your training data loader and your validation data loader, and again, you're

00:25:36.160 | all done.

00:25:37.160 | So as long as you've got some kind of object that has a train dl and a valid dl.

00:25:44.080 | But so data bunch is just something which creates that object for you.

00:25:48.240 | There's quite a few little tricks, though, to make it nice and concise, and again, you

00:25:52.720 | don't need to learn all these tricks if you're not interested in kind of finding ways to

00:25:59.360 | write Python in concise ways, but if you are interested, you might like some of these tricks.

00:26:06.720 | The first one is get atra.

00:26:11.400 | So get atra is basically a wrapper around thunder get atra, which is part of Python.

00:26:21.000 | And it's a really handy thing in Python.

00:26:23.880 | What it lets you do is if you define it in a class, and then you call some attribute

00:26:30.000 | of that class that doesn't exist, then Python will call get atra.

00:26:35.400 | So you can Google it, there's lots of information online about that if you want to know about

00:26:39.440 | it.

00:26:40.440 | But defining get atra has a couple of problems.

00:26:44.240 | The first problem is that it's going to grab everything that isn't defined, and that can

00:26:51.680 | hide errors because you might be calling something that doesn't exist by mistake by some typo

00:26:58.640 | or something, and you end up with some like weird exception, or worse still, you might

00:27:03.240 | end up with unexpected behavior and not an exception.

00:27:09.120 | The second problem is that you don't get tab completion.

00:27:15.600 | Because the stuff that's in thunder get atra, Python doesn't know what can be handled there,

00:27:21.520 | so it has no way to do tab completion.

00:27:26.440 | So I will show you a couple of cool things, though.

00:27:29.600 | If we define use the base class get atra, it's going to give us exactly the same behavior

00:27:35.120 | as thunder get atra does in Python, and specifically it's going to look for an attribute called

00:27:41.960 | default in your class, and anything that's not understood, it's going to instead look

00:27:47.160 | for that attribute in default.

00:27:50.720 | And the reason for that we want that in databunch is it's very handy to be able to say, for

00:27:56.160 | example, databunch.traindl, for instance, there's an example of this dataset.

00:28:14.200 | But if you just say databunch.dataset, that would be nice to be able to like assume that

00:28:18.920 | I'm talking about the training set by default.

00:28:24.520 | And so this is where get atra is super handy rather than having to define, you know, another

00:28:31.140 | example would be one batch.

00:28:34.360 | You know, this is the same as traindl.one batch.

00:28:42.680 | Yes, Pedro, that would be very helpful to add those tasks as GitHub issues.

00:28:52.000 | So in this case, they kind of say consider blah, so the issue should say consider blah

00:28:56.080 | rather than do it because I'm not quite sure yet if it's a good idea.

00:29:00.920 | The first one is certainly something to fix, but thanks for that suggestion.

00:29:07.920 | Okay, so, yeah, we'd love to be able to, you know, not have to write all those different

00:29:15.760 | versions.

00:29:16.760 | So thunder get atra is super handy for that, but as I said, you know, you have these problems

00:29:21.600 | of things like if you pass through some typo, so if I accidentally said not one batch but

00:29:28.000 | on batch, I'd get some weird error or it might even give me the wrong behavior.

00:29:32.940 | Get atra, sub class, fixes, all that.

00:29:35.000 | So for example, this correctly gives me an attribute error on batch, so it tells me clearly

00:29:41.800 | that this is an attribute that doesn't exist.

00:29:44.440 | And here's the other cool thing, if I press tab, I get tab completion.

00:29:50.680 | Or press tab, I can see all the possible things.

00:29:54.080 | So get atra fixes the sub class, fixes all of the things I mentioned that I'm aware of

00:29:59.840 | as problems in thunder get atra in Python.

00:30:03.080 | So like this is an example of where we're trying to take stuff that's already in Python

00:30:07.560 | and make it better, you know, more self-documenting, harder to make mistakes, stuff like that.

00:30:18.840 | So the way that this works is you both inherit from get atra, you have to add some attribute

00:30:30.460 | called default, and that's what any unknown attributes will be passed down to.

00:30:37.880 | And then optionally, you can define a special attribute called underscore extra that contains

00:30:43.600 | a list of strings, and that will be only those things will be delegated to.

00:30:50.560 | So it won't delegate to every possible thing.

00:30:55.180 | You don't have to define underscore extra.

00:30:57.320 | So I'll show you, if I comment it out, right?

00:31:00.640 | And then I go data bunch dot, and I press tab, you'll see I've got more things here now.

00:31:10.680 | So what it does by default is that underscore extra by default will actually dynamically

00:31:18.200 | find all of the attributes inside self.default if underscore extra is not defined, and it

00:31:24.680 | will include all of the ones that don't start with an underscore, and they're all going

00:31:28.760 | to be included in your tab completion list.

00:31:31.560 | In this case, we didn't really want to include everything, so we kind of try to keep things

00:31:36.960 | manageable by saying this is the subset of stuff we expect.

00:31:44.400 | Okay.

00:31:45.400 | So that's one nice thing.

00:31:48.080 | You'll see we're defining at docs, which allows us to add documentation to everything.

00:31:54.960 | Ethan's asked a question about Swift.

00:31:59.240 | So it would be best to probably ask that on the Swift forum.

00:32:08.120 | So Kevin's asking about tab completion on change commands.

00:32:13.080 | No, not really.

00:32:17.200 | This is an issue with Jupyter, basically, which is -- it's really an issue with Python.

00:32:25.760 | It can't really do tab completion on something that calls a function, because it would need

00:32:30.120 | to call the function to know how to tab complete it.

00:32:33.680 | So in Jupyter, you just have to create a separate line that calls each function.

00:32:38.360 | But we're doing a lot less change commands in fast.ai version 2, partly for that reason,

00:32:47.420 | and partly because of some of the ideas that came out of the Swift work.

00:32:51.080 | So you'll find it's less of a problem.

00:32:54.760 | But for things that are properties like this, the tab completion does change correctly.

00:33:03.640 | Okay.

00:33:06.000 | So that's what underscore extra is.

00:33:09.220 | So now you understand what under init is.

00:33:11.800 | So important to recognize that a data bunch is super flexible, because you can pass in

00:33:16.360 | as many data loaders as you like.

00:33:18.240 | So you can have not just a training and validation set, but the test set, multiple validation

00:33:22.240 | sets, and so forth.

00:33:24.040 | So then we define done to get item so that you can, as you see, index directly into your

00:33:32.120 | data bunch to get the nth data loader out of it.

00:33:35.860 | So as you can see, it's just returning soft.dls.

00:33:41.320 | And so now that we've defined get item, we basically want to be able to say at property

00:33:46.600 | def train dl self comma I, sorry, self return self zero.

00:34:03.240 | And we wanted to be able to do valid dl, and that would be self one.

00:34:10.560 | And so that's a lot of lines of code for not doing very much stuff.

00:34:16.760 | So in fastai, there's a thing called add props, which stands for add properties.

00:34:22.120 | And that is going to go through numbers from zero to n minus one, which by default is two.

00:34:32.820 | And we'll create a new function with this definition, and it will make it into a property.

00:34:41.560 | So here, we're creating those two properties in one go, and the properties are called train

00:34:45.480 | dl and valid dl.

00:34:47.120 | And they respectively are going to return x zero and x one, but they'll do exactly the

00:34:51.520 | same as this.

00:34:55.000 | And so here's the same thing for getting x zero and x one, but data set as properties.

00:35:01.480 | So again, these are shortcuts, which come up quite a lot in fastai code, because a lot

00:35:06.920 | often we want the train version of something and a validation version of something.

00:35:11.640 | Okay, so we end up with a super little concise bit of code, and that's our data bunch.

00:35:19.880 | We can grab one batch.

00:35:21.120 | In fact, the train dl, we don't need to say train dl, because we have good atra, which

00:35:27.800 | you can see is tested here.

00:35:30.760 | And one batch is simply calling, as we saw last time, next, ita, tdl, and so it is making

00:35:38.760 | sure that they're all the same, which they are.

00:35:41.800 | And then there's the test of get item, and that method should be there.

00:35:53.920 | Okay, all right.

00:35:57.080 | So that is data core.

00:36:04.120 | If people have requests as to where to go next, feel free to tell me.

00:36:11.200 | Otherwise, I think what we'll do is we'll go and have a look at the data loader.

00:36:21.240 | And so we're getting into some deep code now.

00:36:29.120 | Stuff that starts with 01 is going to be deep code.

00:36:32.520 | And the reason I'm going here now is so that we can actually have an excuse to look at

00:36:36.260 | some of the code.

00:36:39.480 | The data loader, which is here, is designed to be a replacement for high torch data loader.

00:36:49.960 | Yes, meta classes, I think we will get to Kevin pretty soon.

00:36:54.920 | We're kind of heading in that direction, but I want to kind of see examples of them first.

00:37:01.360 | So I think we're going to go from here, then we might go to transforms, and we might end

00:37:04.600 | up at meta classes.

00:37:07.760 | So this is designed to be a replacement for the pytorch data loader.

00:37:12.320 | Why replace the pytorch data loader?

00:37:13.800 | There's a few reasons.

00:37:15.520 | The biggest one is that I just kept finding that there were things we wanted to do with

00:37:19.680 | the pytorch data loader that it didn't provide the hooks to do.

00:37:23.920 | So we had to keep creating our own new classes, and so we just had a lot of complicated code.

00:37:30.960 | The second reason is that the pytorch data loader is very -- I really don't like the

00:37:38.480 | code in it.

00:37:39.480 | It's very awkward.

00:37:40.480 | It's hard to understand, and there's lots of different pieces that are all tightly coupled

00:37:44.240 | together and make all kinds of assumptions about each other that's really hard to work

00:37:48.680 | through and understand and fix issues and add things to it.

00:37:53.200 | So it just -- yeah, I was really pretty dissatisfied with it.

00:37:58.480 | So we've created our own.

00:38:01.160 | Having said that, pytorch -- the pytorch data loader is based on a very rock-solid, well-tested,

00:38:12.360 | fast multiprocessing system, and we wanted to use that.

00:38:19.520 | And so the good news is that that multiprocessing system is actually pulled out in pytorch into

00:38:25.240 | something called _multiprocessing_data_loader_idder.

00:38:28.840 | So we can just use that so we don't have to rewrite any of that.

00:38:32.800 | Unfortunately, as I mentioned, pytorch's classes are all kind of tightly coupled and make lots

00:38:40.560 | of assumptions about each other.

00:38:42.120 | So to actually use this, we've had to do a little bit of slightly ugly code, but it's

00:38:47.040 | not too much.

00:38:48.040 | Specifically, it's these lines of code here, but we'll come back to them later.

00:38:54.200 | But the only reason they exist is so that we can kind of sneak our way into the pytorch

00:39:01.080 | data loading system.

00:39:05.080 | So a data loader, you can use it in much the same way as the normal pytorch data loader.

00:39:13.960 | So if we start with a dataset -- and remember, a dataset is anything that has a length and

00:39:23.120 | you can index into.

00:39:25.520 | So for example, this list of letters is a dataset.

00:39:29.840 | It works as a dataset.

00:39:33.360 | So we can say data loader, pass in our dataset, pass in a batch size, say whether or not you

00:39:40.080 | want to drop the last batch if it's not in size 4, and say how many multiprocessing workers

00:39:47.720 | to do, and then if we take that and we run two epochs of it, grab all the elements in

00:39:57.640 | each epoch, and then join each batch together with no separator, and then join each set

00:40:06.440 | of batches together with the space between them, we get back that.

00:40:10.720 | Okay, so there's all the letters with the -- as you can see, the last batch disappears.

00:40:19.080 | If we do it without dropLast equals true, then we do get the last bit, and we can have

00:40:26.240 | more than, you know, as many workers as we like by just passing that in.

00:40:34.040 | You can pass in things that can be turned into tensors, like, for example, ints, and

00:40:41.360 | just like the -- this is all the same as the PyTorch data loader, it will turn those into

00:40:46.120 | batches of tensors, as you can see.

00:40:49.360 | So this is the testing. Test equal type tests that this thing and this thing are the same,

00:40:58.520 | and even have exactly the same types.

00:41:00.760 | Normally test equals just checks that collections have the same contents.

00:41:06.240 | Okay, so that's kind of the basic behavior that you would expect to see in a normal PyTorch

00:41:13.160 | data loader, but we also have some hooks that you can add in.

00:41:21.240 | So one of the hooks is after iter, and so after iter is a hook that will run at the

00:41:28.520 | end of each iteration, and so this is just something that's going to -- let's see what

00:41:37.360 | T3 is.

00:41:38.360 | T3 is just some tensor, and so it's just something that's going to just set T3.f to something,

00:41:46.560 | and so after we run this, T3.f equals that thing, so you can add code that runs after

00:41:53.160 | an iteration.

00:41:55.160 | Then, let's see this one.

00:42:04.720 | That's just the same as before.

00:42:06.960 | You can also pass a generator to a data loader, and it will work fine as well.

00:42:12.840 | Okay, so that's all kind of normal data loader behavior.

00:42:19.720 | Then there's more stuff we can do, and specifically, we can define -- rather than just saying after

00:42:29.640 | iter, there's actually a whole list of callbacks that we can, as you can see, that we can define,

00:42:39.120 | and we use them all over the place throughout fast.ai.

00:42:42.000 | You've already seen after iter, which runs after each iteration.

00:42:45.280 | Sorry, after all the iterations, there's before iter, which will run before all the iterations,

00:42:55.200 | and then if you look at the code, you can see here is the iterator, so here's before

00:43:03.000 | iter, here's after iter.

00:43:05.800 | This is the slightly awkward thing that we need to fit in with the PyTorch multi-processing

00:43:09.880 | data loader, but what it's going to do then is it's going to call -- it's going to basically

00:43:21.480 | use this thing here, which is going to call sampler to get the samples, which is basically

00:43:29.440 | using something very similar to the PyTorch's samplers, and it's going to call create batches

00:43:34.280 | for each iteration, and create batches is going to go through everything in the sampler,

00:43:43.760 | and it's going to map do item over it, and do item will first call create item, and then

00:43:50.480 | it will call after item, and then after that, it will then use a function called chunked,

00:44:06.480 | which is basically going to create batches out of our list, but it's all done lazily,

00:44:14.440 | and then we're going to call do batch, so just like we had do item to create our items,

00:44:18.440 | do batch creates our batches, and so that will call before batch, and then create batch.

00:44:26.240 | This is the thing to retain types, and then finally after batch, and so the idea here

00:44:32.280 | is that you can replace any of those things.

00:44:38.080 | Things like before batch, after batch, and after item are all things which default to

00:44:49.040 | no-op, so all these things default to no operation, so in other words, you can just use them as

00:44:56.760 | callbacks, but you can actually change everything, so we'll see examples of that over time, but

00:45:07.960 | I'll show you some examples, so example number one is here's a subclass of data loader, and

00:45:16.680 | in this subclass of data loader, we override create item, so create item normally grabs

00:45:24.280 | the ith element of a dataset, assuming we have some sample that we want, that's what

00:45:29.240 | create item normally does, so now we're overriding it to do something else, and specifically

00:45:34.560 | it's going to return some random number, and so you can see it's going to return that random

00:45:41.640 | number if it's less than 0.95, otherwise it will stop, what is stop?

00:45:50.360 | Stop is simply something that will raise a stop iteration exception, for those of you

00:45:58.320 | that don't know, in Python, the way that generators, iterators, stuff like that, say they've finished

00:46:06.920 | is they raise a stop iteration exception, so Python actually uses exceptions for control

00:46:14.980 | flow, which is really interesting insight, so in this case, we can create, I mean obviously

00:46:25.520 | this particular example is not something we do in the real world, but you can imagine

00:46:30.000 | creating a create item that keeps reading something from a network stream, for example,

00:46:36.600 | and when it gets some, I don't know, end of network stream error, it will stop, so you

00:46:45.120 | can kind of easily create streaming data loaders in this way, and so here's an example basically

00:46:51.200 | of a simple streaming data loader, so that's pretty fun.

00:47:00.120 | And one of the interesting things about this is you can pass in num workers to be something

00:47:05.160 | other than 0, and what that's going to do is it's going to create, in this case, four

00:47:12.440 | streaming data loaders, which is a kind of really interesting idea, so as you can see,

00:47:18.480 | you end up with more batches than the zero num workers version, because you've got more

00:47:26.200 | streaming workers and they're all doing this job, so they're all doing it totally independently.

00:47:33.920 | Okay, so that's pretty interesting, I think.

00:47:41.200 | So if you don't set a batch size, so here I've got batch size equals four, if you don't

00:47:44.960 | set a batch size, then we don't do any batching, and this is actually something which is also

00:47:55.760 | built into the new PyTorch 1.2 data loader, this idea of, you know, you can have a batch

00:48:02.000 | size of none, and so if you don't pass a batch size in, so here I remember that letters is

00:48:08.720 | all the letters of the alphabet, lowercase, so if I just do a data loader on letters,

00:48:18.280 | then if I listify that data loader, I literally am going to end up with exactly the same thing

00:48:25.840 | that I started with because it's not turning them into batches at all, so I'll get back

00:48:30.240 | 26 things. I can shuffle a data loader, of course, and if I do that, I should end up

00:48:39.280 | with exactly the same thing that I started with, but in a shuffled version, and so we

00:48:43.760 | actually have a thing called test shuffled that checks that the two arguments have the

00:48:48.600 | same contents in different orders.

00:48:56.480 | For randio, you could pass in a keyword argument whose value can be set to 0.95 or any other

00:49:01.920 | number less than 1. I'm not sure I understand that, sorry. Okay, so that's what that is.

00:49:16.760 | Something else that you can do is, you can see I'm passing in a data set here to my data

00:49:22.280 | loader, which is pretty normal, but sometimes your data set might not be, might not have

00:49:29.960 | a done-to-get item, so you might have a data set that only has a next, and so this would

00:49:34.640 | be the case if, for example, your data set was some kind of infinite stream, like a network

00:49:40.000 | link, or it could be like some coming from a file system containing like hundreds of

00:49:47.280 | millions of files, and it's so big you don't want to enumerate the whole lot, or something

00:49:51.400 | like that.

00:49:52.400 | And again, this is something that's also in PyTorch 1.2, is the idea of kind of non-indexed

00:49:58.920 | or iterable data sets.

00:50:01.760 | By default, our data loader will check whether this has a done-to-get item, and if it doesn't,

00:50:09.360 | it'll treat it as iterable, if it does, it'll treat it as indexed, but you can override

00:50:13.520 | that by saying indexed equals false.

00:50:16.520 | So this case, it's going to give you exactly the same thing as before, but it's doing it

00:50:20.160 | by calling next rather than done-to-get item.

00:50:25.640 | But this is useful, as I say, if you've got really, really, really huge data sets, or

00:50:29.440 | data sets that are coming over the network, or something like that.

00:50:36.640 | Okay, so that is some of that behavior.

00:50:43.600 | Yes, absolutely, you can change it to something that comes as a keyword argument, sure.

00:50:56.680 | Okay, so these are kind of more just interesting tests, rather than additional functionality,

00:51:02.880 | but in a data loader, when you have multiple workers, one of the things that's difficult

00:51:08.400 | is ensuring that everything comes back in the correct order.

00:51:11.960 | So here, I've created a data loader that simply returns the item from a list, so I've overwritten

00:51:22.000 | list, that adds a random bit of sleep each time, and so there are just some tests here,

00:51:27.760 | as you can see, that check that even if we have multiple workers, that we actually get

00:51:32.520 | back the results in the correct order.

00:51:34.440 | And you can also see by running percent time, we can observe that it is actually using additional

00:51:40.840 | workers to run more quickly.

00:51:47.000 | And so here's a similar example, about this time, I've simulated a queue, so this is an

00:51:54.120 | example that only has done to iter, doesn't have done to get item, so if I put this into

00:51:59.120 | my data loader, then it's only able to iterate.

00:52:03.520 | So here's a really good example of what it looks like to work with an iterable only queue

00:52:14.880 | with variable latency.

00:52:16.480 | This is kind of like what something streaming over a network, for example, might look like.

00:52:22.520 | And so here's a test that we get back the right thing.

00:52:27.040 | And now in this case, because our sleepy queue only has it done to iter, it doesn't have

00:52:32.560 | a done to get item, that means there's no guarantee about what order things will come

00:52:36.200 | back in.

00:52:37.200 | So we end up with something that's shuffled this time.

00:52:41.960 | Which I think answers the question of Juvian 111, which is what would happen if shuffle

00:52:47.600 | and index are both false, so it's a great question.

00:52:51.720 | So if indexed is false, then shuffle doesn't do anything.

00:52:56.880 | And specifically what happens is, in terms of how it's implemented, the sampler -- here

00:53:08.100 | is the sampler -- if you have indexed, then it returns an infinite list of integers.

00:53:18.680 | And if it's not indexed, then it returns an infinite list of nones.

00:53:24.000 | So when you shuffle, then you're going to end up with an infinite list of nones in shuffled

00:53:29.400 | order.

00:53:30.400 | So it doesn't actually do anything interesting.

00:53:37.200 | And so this is an interesting little class, which we'll get to, but basically for creating

00:53:40.920 | infinite lists.

00:53:41.920 | You'll see a lot of the stuff we're doing is much more functional than a lot of normal

00:53:46.560 | Python programming, which makes it easier to write, easier to understand, more concise.

00:53:52.960 | But we had to create some things to make it easier.

00:53:54.880 | So things like infinite lists are things which are pretty important in this style of programming,

00:54:00.220 | so that's why we have a little class to easily create these two main types of infinite lists.

00:54:08.760 | And so if you haven't done much more functional style programming in Python, definitely check

00:54:13.040 | out the iter tools standard library package, because that contains lots and lots and lots

00:54:18.640 | of useful things for this style of programming.

00:54:23.020 | And as you can see, for example, it has a way to slice into potentially infinite iterators.

00:54:29.080 | This is how you get the first N things out of a potentially infinitely sized list.

00:54:34.600 | In this case, it definitely is an infinitely sized list.

00:54:41.800 | Okay.

00:54:42.800 | So let's look at some interesting things here.

00:54:47.480 | One is that randl doesn't have to be written like this.

00:54:54.780 | What we could do is we could create a function called create, or we could say a function

00:55:05.120 | called rand item, like so.

00:55:12.680 | So we're now not inheriting from the data loader, we're just creating a function called

00:55:16.160 | rand item.

00:55:17.520 | And instead of creating a randl, we could create a normal data loader.

00:55:24.140 | But then we're going to pass in create item equals underscore randitem, and so that's

00:55:42.480 | now not going to take a cell.

00:55:47.080 | Great, okay.

00:55:50.840 | So as you can see, this is now doing exactly the same thing.

00:55:55.760 | That and that are identical.

00:56:00.840 | And the reason why is we've kind of added this really nice functionality that we use

00:56:05.680 | all over the place where nearly anywhere that you -- we kind of add callbacks or customise

00:56:12.280 | ability through inheritance.

00:56:14.740 | You can also do it by passing in a parameter with the name of the method that you want

00:56:23.680 | to override.

00:56:26.560 | And we use this quite a lot because, like, if you've just got some simple little function

00:56:32.680 | that you want to change, overriding is just, you know, more awkward than we would like

00:56:39.200 | you to force you to do, and particularly for newer users to Python, we don't want them to

00:56:44.760 | force them to have to understand, you know, inheritance and OO and stuff like that.

00:56:50.880 | So this is kind of much easier for new users for a lot of things.

00:56:55.000 | So for a lot of our documentation and lessons and stuff like that, we'll be able to do a

00:56:59.440 | lot of stuff without having to first teach OO inheritance and stuff like that.

00:57:07.000 | The way it works behind the scenes is super fun.

00:57:09.960 | So let's have a look at create item, and, yes, great question from Haromi.

00:57:16.960 | When you do it this way, you don't get to use any state because you don't get passed

00:57:21.400 | in self.

00:57:23.240 | So if you want state, then you have to inherit.

00:57:26.880 | If you don't care about state, then you don't.

00:57:31.140 | So let's see how create item works.

00:57:34.600 | Create item is one of the things in this long list of underscore methods.

00:57:41.480 | As you can see, it's just a list of strings.

00:57:46.000 | Underscore methods is a special name, and it's a special name that is going to be looked

00:57:49.800 | at by the funx quags decorator.

00:57:55.560 | The funx quags decorator is going to look for this special name, and it's going to say,

00:58:02.480 | "The quags in this init are not actually unknown.

00:58:08.200 | The quags is actually this list."

00:58:13.400 | And so what it's going to do is it's going to automatically grab any quags with these

00:58:21.680 | names, and it's going to replace the methods that we have with the thing that's passed

00:58:30.760 | to that quag.

00:58:32.880 | So again, one of the issues with quags, as we've talked about, is that they're terrible

00:58:37.720 | for kind of discoverability and documentation, because you don't really know what you can

00:58:42.480 | pass to quags.

00:58:44.080 | But pretty much everything we use in quags, we make sure that we fix that.

00:58:49.260 | So if we look at dataloader and hit Shift+Tab, you'll see that all the methods are listed.

00:59:02.240 | You'll also see that we have here assert not quags.

00:59:08.360 | And the reason for that is that @funxquags will remove all of these methods from quags

00:59:16.620 | once it processes them.

00:59:18.560 | So that way, you can be sure that you haven't accidentally passed in something that wasn't

00:59:23.080 | recognized.

00:59:24.120 | So if instead of, for example, doing create batch equals, we do create back equals, then

00:59:30.840 | we will get that assertion error so we know we've made a mistake.

00:59:35.440 | So again, we're kind of trying to get the best of both worlds, concise, understandable

00:59:39.760 | code, but also discoverability and avoiding nasty bugs.

00:59:49.240 | Okay, so, all right, another tiny little trick that one that I just find super handy is store

01:00:00.760 | atra.

01:00:02.860 | Here is something that I do all the time.

01:00:05.720 | I'll go, like, self.dataset, comma, self.bs, comma, et cetera equals data set, comma, bs,

01:00:16.280 | et cetera.

01:00:17.280 | So like, very, very often when we're setting up a class, we basically store a bunch of

01:00:22.440 | parameters inside self.

01:00:24.600 | The problem with doing it this way is you have to make sure that the orders match correctly.

01:00:29.040 | If you add or remove stuff from the parameter list, you have to remember to add or remove

01:00:32.360 | it here.

01:00:33.360 | You have to make sure the names are the same.

01:00:35.600 | So there's a lot of repetition and opportunity for bugs.

01:00:39.820 | So store atra does exactly what I just described, but you only have to list the things to store

01:00:49.160 | once.

01:00:50.160 | So it's a minor convenience, but it definitely avoids a few bugs that I come across and a

01:00:57.360 | little bit less typing, a little bit less reading to have to do, but I think that's

01:01:04.920 | it.

01:01:05.920 | Okay.

01:01:06.920 | Let me know if anybody sees anything else here that looks interesting.

01:01:14.740 | Because we're doing a lot of stuff lazily, you'll see a lot more yields and yield froms

01:01:20.040 | than you're probably used to.

01:01:22.240 | So to understand fast.ai version 2 foundations better, you will need to make sure that you

01:01:28.880 | understand how those work in Python pretty well.

01:01:31.440 | If anybody has confusions about that, please ask on the forums.

01:01:37.840 | But I did find that by kind of using this lazy approach, a function approach, we did

01:01:47.960 | end up with something that I found much easier to understand, had much less bugs, and also

01:01:52.960 | was just much less code to write.

01:01:54.560 | So it was definitely worth doing.

01:01:56.840 | Okay, so then there's this one ugly bit of code, which is basically we create an object

01:02:07.800 | of type fake loader, and the reason for that is that PyTorch assumes that it's going to

01:02:20.680 | be working with an object which has this specific list of things in it.

01:02:25.560 | So we basically had to create an object with that specific list of things and set them

01:02:28.880 | to the values that it expected, and then it calls iter on that object, and so that's where

01:02:37.840 | we then pass it back to our data loader.

01:02:41.100 | So that's what this little bit of ugly code is.

01:02:44.400 | It does mean, unfortunately, that we have also tied our code to some private things

01:02:50.440 | inside PyTorch, which means that from version to version, that might change, and so if you

01:02:56.880 | start using master and you get errors on things like, I don't know, underscore autocollation

01:03:02.720 | doesn't exist, it probably means they've changed the name of some internal thing.

01:03:06.640 | So we're going to be keeping an eye on that.

01:03:08.720 | Maybe we can even encourage the PyTorch team to make stuff a little bit less coupled, but

01:03:16.000 | for now, that's a bit of ugliness that we have to deal with.

01:03:24.600 | Something else to mention is PyTorch, I think they added it to 1.2, maybe it was earlier,

01:03:34.200 | they've added a thing called the worker init function, which is a handy little callback

01:03:41.680 | in their data loader infrastructure, which will be called every time a new process is

01:03:48.360 | fired off.

01:03:50.560 | So we've written a little function for the worker information function here that calls

01:03:55.760 | PyTorch's getWorkerInfo, which basically will tell us what dataset is this process working

01:04:02.520 | with, how many workers are there, and what's the ID of this worker into that list of workers,

01:04:09.040 | and so we do some, you know, so this way we can ensure that each worker gets a separate

01:04:16.080 | random seed, so we're not going to have horrible things with random seeds being duplicated.

01:04:22.640 | We also use this information to parallelize things automatically inside the data loader.

01:04:30.400 | As you can see, we've got, yes, we set the offset and the number of workers.

01:04:42.520 | And then we use that here in our sampler to ensure that each process handles a contiguous

01:04:49.880 | set of things which is different to each other process.

01:04:56.800 | Okay, so PyTorch, by default, calls something called default-collate to take all the items

01:05:21.760 | from your datasets and turn them into a batch, if you have a batch size.

01:05:27.880 | Otherwise it calls something called default-convert.

01:05:36.520 | So we have created our own fastAI-convert and fastAI-collate, which handle a few types

01:05:45.760 | which PyTorch does not handle, so it doesn't really change any behavior, but it means more

01:05:52.880 | things should work correctly.

01:05:55.160 | So create batch by default simply uses collate or convert as appropriate, depending on whether

01:06:03.440 | your batch size is none or not.

01:06:06.360 | But again, you can replace that with something else, and later on you'll see a place where

01:06:10.680 | we do that.

01:06:11.680 | The language model dataloader does that.

01:06:16.200 | In fact, that might be an interesting thing just to briefly mention.

01:06:18.640 | For those of you that have looked at language models before, the language model dataloader

01:06:25.360 | in fastAI version 1, and most libraries, tends to be big, confusing, horrible things.

01:06:35.160 | But look at this.

01:06:36.600 | This is the entire language model dataloader in fastAI version 2, and it's because we were

01:06:41.560 | able to inherit from our new dataloader and use some of these callbacks.

01:06:46.120 | But this actually has all of the advanced behavior of approximate shuffling and stuff

01:06:56.440 | like that, which you want in a modern language model dataloader.

01:07:00.240 | We'll look at this in more detail later, but as an example of the huge wins that we get

01:07:05.960 | from this new dataloader, things get so, so much easier.

01:07:20.360 | Now I think we can go back and look at what is a transform dataloader in more detail,

01:07:25.820 | because a transform dataloader is something which inherits the dataloader.

01:07:33.560 | It gets a bunch of quags, which as we mentioned, thanks to @delegates, passed to the dataloader,

01:07:41.520 | in this case is the superclass, but they're all going to be correctly documented and they're

01:07:47.280 | all going to be tab-completed and everything, thanks to @delegates.

01:07:50.880 | So what's the additional stuff that TufemDL does that dataloader doesn't do?

01:07:55.360 | Well, the first thing it does is this specific list of callbacks turned into transform pipelines.

01:08:07.680 | So basically after you grab a single item from the dataset, there will be a pipeline.

01:08:16.920 | After you've got all of the items together, but before you collate them, there will be

01:08:25.320 | this callback, and after everything's been turned into a collated batch, there will be

01:08:30.200 | this callback and these are all going to be pipelines.

01:08:33.000 | So you can see here, these are all in _deautofems, so we're going to go through each of those

01:08:39.920 | and we're going to turn them into a pipeline, okay?

01:08:43.440 | So it's just going to grab it and turn it into a pipeline.

01:08:51.800 | Remember how I mentioned before that there are item transforms and tuple transforms,

01:08:58.640 | and tuple transforms are the only ones that have the special behavior that it's going

01:09:02.280 | to be applied to each element of a tuple.

01:09:06.920 | And I mentioned that kind of stuff, all the TufemDL and TufemDS and everything will handle

01:09:12.080 | all that for you, and here it is doing it here, right?

01:09:15.800 | Basically before batch is the only one that's going to be done as a whole item and the other

01:09:27.000 | ones will be done as tuple transforms.

01:09:35.080 | And then I also mentioned that it handles setup, so this is the place where it's going

01:09:38.320 | to call setup for us if you have a setup.

01:09:41.960 | We'll be talking more about setup when we look at pipelines in more detail.

01:09:45.600 | But the main thing to recognize is that TufemDL is a data loader.

01:09:50.760 | It has the callbacks, these same three callbacks, but these three callbacks are now actual transformation

01:09:57.680 | pipelines, which means they handle decodes and all that kind of thing.

01:10:04.520 | So a TufemDL we're going to call under init needs to be able to decode things.

01:10:14.580 | So how is it going to decode things?

01:10:16.160 | So one of the things it's going to need to know is what types to decode to.

01:10:23.260 | And this is tricky for inference, for example, because for inference, in production all you're

01:10:37.000 | getting back from your model is a tensor, and that tensor could represent anything.

01:10:42.640 | So our TufemDL actually has to remember what types it has.

01:10:49.440 | And so it actually stores them -- the types that come out of the dataset are stored away

01:10:54.400 | in retainDS, and the types that come out of the data at the end of the batching is stored

01:10:59.600 | away in retainDL.

01:11:02.120 | And they're stored as partial functions.

01:11:05.160 | And we'll see this function called retain types later, but this is the function that

01:11:08.440 | knows how to ensure that things keep their types.

01:11:11.800 | And so we just grab -- so we get one item out of our batch, just in order that we can find

01:11:20.160 | out what types it has in it, and then we turn that into a related batch in order to find

01:11:26.280 | what types are in that.

01:11:28.280 | So that way, when we go decode, we can put back the types that we're meant to have.

01:11:38.120 | So that's what's going on there.

01:11:41.320 | So basically what that means, then, is that when we say show batch, we can say decode.

01:11:49.680 | Decode will put back the correct types, and then call the decode bits of all of our different

01:11:58.720 | pipelines, and then allow us to show it.

01:12:04.120 | If we can show the whole batch at once, which would be the case for something like tabular

01:12:10.760 | or for things like most other kinds of data sets, we're going to have to display each

01:12:16.920 | part of the tuple separately, which is what this is doing here.

01:12:22.520 | So we end up with something where we can show batch, decode batch, et cetera.

01:12:36.720 | So L. Tan's asking, will there be a walkthrough tomorrow?

01:12:41.840 | Yes, there's a walkthrough every day.

01:12:44.080 | You just need to look on the fast.ai version 2 daily code walkthroughs where we will keep

01:12:50.080 | the information updated.

01:12:51.080 | Oh, wait.

01:12:52.080 | But today's Friday.

01:12:53.080 | No, today's Thursday.

01:12:54.080 | Yeah.

01:12:55.080 | So it's going to be every weekday, so I'm turning tomorrow.

01:13:02.760 | So let me know if you have any requests for where you would like me to head next.

01:13:14.560 | Is that all of that one?

01:13:25.040 | So if I look where we've done, we've kind of, we've done 01c, we've done 08, we've done

01:13:34.160 | 05.

01:13:37.600 | So maybe we should look at pipeline.

01:13:43.920 | So that's going to be a big rabbit hole.

01:13:45.920 | So what I suggest we do is we wrap up for today.

01:13:49.880 | And tomorrow, let's look at pipeline, and then that might get us into transforms.

01:13:56.920 | And that's going to be a lot of fun.

01:13:59.520 | All right.

01:14:00.520 | Thanks, everybody.

01:14:01.520 | I will see you all tomorrow.

01:14:04.320 | Bye-bye.

01:14:05.320 | Bye-bye.

01:14:05.320 | Bye-bye.

01:14:06.320 | Bye-bye.

01:14:07.320 | Bye-bye.

01:14:08.320 | Bye-bye.

01:14:09.320 | Bye-bye.

01:14:09.320 | [BLANK_AUDIO]