fastai v2 walk-thru #7

Chapters

09:53 Delegates

13:08 Data Loader

28:13 Data Blocks

28:41 Datablocks

36:26 Data Block

39:08 Merge Transforms

40:01 Data Augmentation

40:23 Create a Data Source

41:58 Examples

42:53 Multi-Label Classification

47:16 Inheritance

47:25 Static Method

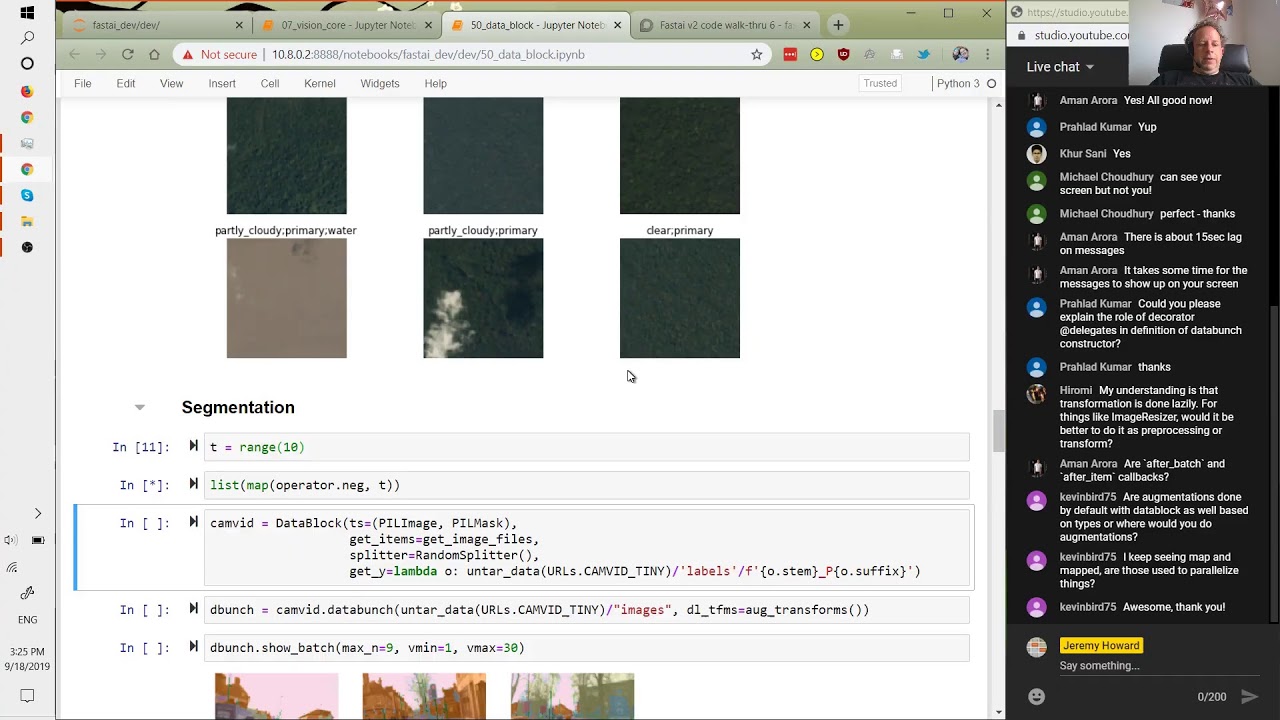

51:00 Segmentation

00:00:00.000 | Hi, can you see me and hear me okay?

00:00:18.260 | Test, test, one, two.

00:00:43.200 | Okay, sorry for the slight delay, where did we get to last time?

00:01:00.740 | We looked at data source, did we finish looking at data source?

00:01:12.100 | But also, did anybody have any questions about yesterday?

00:01:14.900 | No, it doesn't look like we quite finished data source.

00:01:30.500 | Let's see what the notes say, okay, so we've done some, okay, we looked at filter, okay.

00:01:49.180 | So all right, so we were looking at data source.

00:01:55.020 | Yes, that's right, we looked at subsets.

00:02:02.020 | So I think the only bit we haven't looked at was data bunch.

00:02:16.020 | So if we look at the tests, yes, I remember doing that test, yeah, okay, so I don't think

00:02:27.180 | we did this one.

00:02:28.780 | So one of the interesting things, oh, my video's not on, no, it's not, why's that?

00:02:46.460 | Yes, Michael, that's because it's trying to use a device that's not there.

00:03:04.660 | And then my computer's going really slowly.

00:03:30.660 | Okay.

00:03:46.660 | Okay, can you see me now?

00:04:15.620 | All right, so one of the interesting things about data source is that the input could

00:04:32.580 | be, instead of being a list or an L or whatever, it can be a data frame or a NumPy array.

00:04:41.300 | And one of the nice things is that it will use the pandas or NumPy or whatever kind of

00:04:48.420 | accelerated indexing methods to index into that to grab the training set and the test

00:04:53.900 | set.

00:04:54.900 | So this is kind of a test that that's working correctly.

00:05:02.220 | And so here's an example of a transform, which should grab the first column.

00:05:11.940 | And then this subset is going to be the training set.

00:05:20.420 | And in this case, the data source, we haven't passed any filters into it.

00:05:24.500 | So this is going to be exactly the same as the unsubsetted version.

00:05:29.860 | So that's all that test's doing.

00:05:32.700 | So here's how filters work, they don't have to be tensors, but here's the example with

00:05:39.220 | a tensor and a list just to show you.

00:05:49.860 | So here we've got two filters and we can check the zeroth subset and the first subset.

00:06:00.940 | Yeah, so that 15 second lag, I think is, that's just what YouTube does.

00:06:06.940 | It has a super low latency version, but if you choose a super low latency version, I

00:06:11.660 | think that significantly impacts the quality.

00:06:16.100 | So I think the 15 second lag is probably the best compromise.

00:06:23.780 | Just a reminder that the reason that we get things back as tuples is because that's what

00:06:27.460 | we expect to see for a data set or a data loader mini batch is that we do get tuples

00:06:37.420 | of x and y or even if it's just an x, it'll be a tuple of an x, so it keeps things more

00:06:41.900 | consistent that way.

00:06:55.340 | So here's a test of adding a filter to a transform, which we talked about last time.

00:07:13.380 | So this transform will only be applied to subset one, which in this case will be these

00:07:19.820 | ones, so it encodes x times two.

00:07:25.520 | So if we start with range 0 through 4, then the training set is going to be

00:07:31.340 | 1, 2, but the validation set will be doubled because it's filter number one.

00:07:38.740 | So that's an example of the thing we were talking about: using filters.
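
The filtered transform just described can be sketched in plain Python. This is only an illustration, not the library's code: `subset`, `tfm_filt` and the validation indices are assumptions made for the example (the talk only states that the training set is 1, 2).

```python
def subset(items, splits, i, tfm, tfm_filt=None):
    "Return subset `i`, applying `tfm` only if `tfm_filt` is None or equals `i`."
    active = tfm_filt is None or tfm_filt == i
    return [tfm(items[j]) if active else items[j] for j in splits[i]]

items = list(range(5))
splits = [[1, 2], [0, 3, 4]]   # assumed: only the training indices are given in the talk
double = lambda x: x * 2       # the "x times two" encoding

train = subset(items, splits, 0, double, tfm_filt=1)   # transform skipped for subset 0
valid = subset(items, splits, 1, double, tfm_filt=1)   # transform applied to subset 1
print(train, valid)
```

Here the training subset comes back untouched, while the validation subset is doubled, because the transform is restricted to filter 1.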

00:07:43.420 | So then data bunch is, as the name suggests, just something that creates a data bunch.

00:08:02.540 | So a data bunch, to remind you, is this tiny, tiny, tiny thing, so it's just

00:08:16.100 | something which basically has a train_dl and a valid_dl.

00:08:22.500 | So to create one, we just need to pass some data loaders into init for data bunch, that's

00:08:30.820 | how we create it.

00:08:34.260 | So here you can see here is the data bunch constructor, and we have to pass in a bunch

00:08:40.700 | of data loaders.

00:08:46.980 | And this is something I'm still going to edit today, which is it won't necessarily

00:08:50.260 | be of type data loader, it could be some subclass of data loader.

00:08:54.280 | So the DL class default is TfmdDL, so this is going to create a TfmdDL with

00:09:03.820 | subset i, because we're looping through all of the i's of all of the subsets.

00:09:10.660 | And then for each one, there's going to be some batch size and some shuffle and some

00:09:14.300 | drop last, and they all just depend on whether or not it's the training or validation set

00:09:20.980 | as to what's the appropriate value there.

00:09:25.340 | Okay, so that's that, that is data source.

00:09:37.420 | So now let's put that all together again, look back at data source in 08.

00:09:52.660 | So okay, so the delegates is something that we're kind of working on at the moment, it's

00:09:58.320 | not quite working the way we want, but basically, normally, we just put delegates at the very

00:10:04.580 | top of a class like this.

00:10:09.540 | And that means that any **kwargs in the init of that class will be documented

00:10:18.020 | in the signature as being those of the superclass's init method, but we don't have to delegate

00:10:26.100 | to the superclass init, we can specifically say what we're delegating to.

00:10:31.340 | So in this case, the kwargs that are being passed through here end up being passed

00:10:36.500 | to the data loader constructor.

00:10:41.880 | So this says this is delegating to the data loader constructor.

00:10:45.240 | So if we look at the documentation, so let's run some of these to see this in action.

00:10:54.980 | So if we go DataSource.databunch, you can see here's kwargs, and if I hit shift-tab,

00:11:08.220 | then I don't see kwargs, I see all of the keyword arguments for data loader.

00:11:14.540 | And that's because that's what that delegates did, it said use the kwargs from this method

00:11:21.420 | for the signature.
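
A minimal sketch of the delegation idea, using only the standard library. It is not fastai's implementation: `delegates_sketch`, `make_loader` and this `databunch` are hypothetical names invented for the illustration. The decorator rewrites the reported signature so that `**kwargs` is replaced by the defaulted parameters of the function being delegated to.

```python
import inspect

def delegates_sketch(to):
    """Hypothetical decorator: replace **kwargs in the decorated function's
    signature with the defaulted parameters of `to`."""
    def decorator(f):
        sig = inspect.signature(f)
        params = dict(sig.parameters)
        # Find the **kwargs entry; leave the function alone if there is none.
        var_kw = [n for n, p in params.items() if p.kind is inspect.Parameter.VAR_KEYWORD]
        if not var_kw:
            return f
        params.pop(var_kw[0])
        # Add each defaulted parameter of `to` that `f` does not already declare.
        for name, p in inspect.signature(to).parameters.items():
            if p.default is not inspect.Parameter.empty and name not in params:
                params[name] = p.replace(kind=inspect.Parameter.KEYWORD_ONLY)
        f.__signature__ = sig.replace(parameters=list(params.values()))
        return f
    return decorator

def make_loader(dataset, bs=16, shuffle=False, drop_last=False):
    "Stand-in for a data loader constructor."
    return dict(dataset=dataset, bs=bs, shuffle=shuffle, drop_last=drop_last)

@delegates_sketch(to=make_loader)
def databunch(source, **kwargs):
    "Forwards its keyword arguments to `make_loader`."
    return make_loader(source, **kwargs)

print(inspect.signature(databunch))
```

Tools that read `inspect.signature` (such as notebook tooltips) would then list `bs`, `shuffle` and `drop_last` instead of an opaque `**kwargs`, while the function body still forwards whatever it receives.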

00:11:26.100 | Okay, thanks for the question.

00:11:33.140 | So if we now look at the data source version here, just to remind you of what we have, we're

00:11:52.500 | looking at the pets data set, and so the items are lists of paths to pictures of pets, and

00:12:11.500 | tfms are these lists of PILImage.create, and there's a regex labeller in the second

00:12:23.420 | pipeline and Categorize in the second pipeline.

00:12:26.660 | So when we pass those to data source, we can also pass in our splits, then we can go pets.subset(1)

00:12:35.220 | to grab the validation set.

00:12:37.100 | And so this is the zeroth thing in that data set, which is, of course, an image, that's

00:12:42.940 | the shape, and some label, a categorized label.

00:12:46.980 | So we can also use valid, and we can use decode, and we can use show.

00:12:55.460 | And so you can see show is decoding the int label to create an actual proper label.

00:13:04.440 | So in order to create mini-batches, we need to create a data loader, that's here.

00:13:15.400 | And to do that, we also need to resize all the images to the same size and turn them

00:13:20.620 | into tensors.

00:13:23.260 | And we need to put everything onto CUDA and convert byte to float tensors for the images.

00:13:31.480 | So when we create the transform data loader, let's do the training set, some batch size,

00:13:39.300 | and then here the data loader transforms.

00:13:47.540 | And so then we can grab a batch, and we can make sure that the lengths and shapes are

00:13:54.580 | right and that it's actually on CUDA if we asked it to be.

00:13:59.260 | And that now gives us something we can do a .show_batch on.

00:14:05.340 | We could have also done this by using databunch, the DataSource.databunch, pets.train,

00:14:35.180 | pets.databunch, so that's going to want batch size, oh, it's two batch sizes, that's a mistake.

00:15:00.100 | And let's grab all that.

00:15:14.060 | Okay, so let's see what that's given us, databunch.

00:15:26.220 | So that should have a train_dl, train_dl, oopsie-daisy, so it is, and so we could then

00:15:55.540 | grab a batch of that, same idea.

00:16:01.860 | Okay, so I guess we could even say show_batch, there we are.

00:16:17.060 | Okay, so questions: maybe do ImageResizer as pre-processing?

00:16:24.660 | So generally, you don't, well, let's try and give you a complete answer to that, Harami.

00:16:32.820 | Generally speaking, in the fast.ai courses, we don't do it that way because normally part

00:16:38.300 | of the resizing is data augmentation.

00:16:43.700 | So the default data augmentation for ImageNet, for example, is we kind of pick some, oopsie-daisy,

00:16:48.780 | we pick some random cropped sub-area of the image and we kind of like zoom into that.

00:16:56.500 | So that's like what we, that's kind of what, that's normally how we resize.

00:17:01.420 | So for the training, the resizing is kind of cropping at the same time.

00:17:06.540 | So that's why we don't generally resize as pre-processing.

00:17:15.660 | Having said that, if your initial images are really, really big, and if you want to use

00:17:21.260 | the full size of them, then you may want to do some pre-processing resizing.

00:17:26.500 | Okay, so after batch and after item, I'm glad you asked, they're the thing I was going to

00:17:32.220 | look at in more detail now, which is to take a deeper dive into how Transform Data Loader

00:17:37.620 | works.

00:17:39.020 | We've already kind of seen it a little bit, but we're probably in a good position to look

00:17:42.940 | at it more closely.

00:17:49.540 | And yeah, you can think of them as callbacks.

00:17:57.780 | Looking at the 01c data loader notebook is actually a fun place to study because it's a super interesting

00:18:05.820 | notebook.

00:18:06.820 | You might remember it's got this really annoying fake loader thing, which you shouldn't worry

00:18:10.780 | about too much because it's just working around some problems with the design of PyTorch's

00:18:17.180 | Data Loader.

00:18:19.180 | But once you get past that, to get to the Data Loader itself, we're doing a lot of stuff

00:18:25.060 | in a very nice way.

00:18:26.620 | So basically what happens is, let's see what happens when we iterate.

00:18:35.380 | So what's going to happen when we iterate is it's going to create some kind of loader.

00:18:41.900 | And so _loaders specifically is a list of the multiprocessing Data Loader and the single

00:18:48.260 | process Data Loader from PyTorch.

00:18:50.700 | So we'll generally be using the multiprocessing Data Loader.

00:18:53.700 | So it's going to grab a multiprocessing Data Loader, and it's going to yield a batch from

00:19:02.900 | that.

00:19:06.100 | Actually, when it iterates, it actually calls sampler on our Data Loader class to grab a

00:19:13.420 | sample of IDs and then batches to create batches from those IDs.

00:19:20.300 | So the key thing to look at is create batches.

00:19:23.200 | This is actually what the Data Loader is using, is create batches.

00:19:26.760 | So create batches, most of the time there's going to be a data set that we're creating

00:19:31.780 | a Data Loader over.

00:19:33.600 | So we create an iterator over the data set, and we pop that into self.it.

00:19:40.900 | And then we grab each of the indexes that we are interested in.

00:19:46.180 | So this is the indexes of our sample, and we map do item over that.

00:19:52.660 | Do item calls create item, and then after item, create item, assuming that the sample

00:20:03.700 | is not none, simply grabs the appropriate indexed item from a data set.

00:20:10.420 | So that's all create item does.

00:20:12.980 | And then after item, by default after item equals noop, it does nothing at all.

00:20:23.220 | But we have this funcs_kwargs thing, which is a thing where it looks for methods, and you'll

00:20:34.580 | see that after item is one of the things listed here in funcs_kwargs.

00:20:48.500 | And what that means is that when we pass in kwargs, it's going to see if you've passed

00:20:53.940 | in something called after item, and it's going to replace our after item method with

00:20:58.340 | the thing you passed in.

00:21:00.220 | So in other words, we can pass in after item, and then the thing we passed

00:21:08.100 | in will be called at this point.
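
The replaceable-method idea can be sketched as follows. This is a simplified stand-in, not the actual `funcs_kwargs` decorator: the class name `LoaderSketch` and the `_methods` tuple are assumptions for the example. Any keyword argument whose name appears in `_methods` replaces the method of that name on the instance.

```python
class LoaderSketch:
    # Names of methods a caller is allowed to replace through keyword arguments.
    _methods = ("after_item", "before_batch", "after_batch")

    def __init__(self, items, **kwargs):
        self.items = items
        for name in self._methods:
            if name in kwargs:
                # Store the replacement on the instance, shadowing the default method.
                setattr(self, name, kwargs.pop(name))
        if kwargs:
            raise TypeError(f"unexpected keyword arguments: {sorted(kwargs)}")

    # Defaults do nothing: they return their input unchanged.
    def after_item(self, x): return x
    def before_batch(self, b): return b
    def after_batch(self, b): return b

    def __iter__(self):
        # after_item runs immediately after each item is fetched.
        return (self.after_item(o) for o in self.items)

plain = list(LoaderSketch([1, 2, 3]))
doubled = list(LoaderSketch([1, 2, 3], after_item=lambda x: x * 2))
print(plain, doubled)
```

Because the defaults are no-ops, passing a replacement cannot break existing behaviour, which is why the hooks with before or after in their names are the easy ones to override.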

00:21:11.300 | So yeah, it is basically a callback.

00:21:14.540 | But actually, notice that all of these things, all these things that are listed are all replaceable.

00:21:22.060 | So it's kind of like a bit more powerful than normal callbacks, because you can easily replace

00:21:26.300 | anything inside the data loader with whatever you like.

00:21:31.820 | But these things with after or before in their name, the things which actually default to

00:21:37.980 | noop, are very easy to replace, because there's no functionality currently there.

00:21:42.340 | So it doesn't matter.

00:21:43.340 | Like, you can do anything you like.

00:21:45.700 | So after item, then, is the thing that is going to get called immediately after we grab

00:21:52.220 | one thing from the data set.

00:21:55.780 | And so that's what happens here.

00:21:59.240 | So we map that over each sample index.

00:22:04.260 | And then, assuming that you have-- so that if you haven't got a batch size set, so batch

00:22:12.060 | size is none, then we're done.

00:22:16.300 | There's nothing to do.

00:22:17.300 | You haven't asked for batches.

00:22:18.300 | But if you have asked for batches, which you normally have, then we're going to map do

00:22:23.420 | batch over the result we just got.

00:22:28.300 | And first, we chunk it into batches.

00:22:30.260 | And one of the nice things about this code is the whole thing's done using generators

00:22:37.820 | and maps, lazily.

00:22:39.560 | So it's a nice example of code to learn how to do that in Python.

00:22:43.660 | Anyway, so do batch is going to call before batch, and then create batch, and then retain.

00:22:52.140 | This is just the thing that keeps the same type.

00:22:54.640 | And then finally, eventually, after batch.

00:22:59.820 | So again, before batch, by default does nothing at all.

00:23:03.940 | After batch, by default does nothing at all.

00:23:07.540 | And create batch, by default simply calls collate.

00:23:12.700 | So that's the thing that just concatenates everything into a single tensor.
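
The flow described above (map do item over the sample indexes, chunk into batches, then before batch, create batch, after batch) can be sketched with lazy generators. This is an outline under assumptions, not the notebook's code: `chunked` and this `create_batches` signature are invented for the example, and `tuple` stands in for the real collate function.

```python
from itertools import islice

def chunked(it, size):
    "Lazily yield lists of up to `size` consecutive items from `it`."
    it = iter(it)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk

def create_batches(dataset, sample_idxs, bs=None,
                   after_item=lambda x: x,
                   before_batch=lambda b: b,
                   create_batch=tuple,        # stand-in for collate
                   after_batch=lambda b: b):
    "Hypothetical stand-in for the flow described above; nothing runs until iterated."
    def do_item(i):
        return after_item(dataset[i])
    def do_batch(items):
        return after_batch(create_batch(before_batch(items)))
    items = map(do_item, sample_idxs)
    if bs is None:
        return items                          # no batching requested
    return map(do_batch, chunked(items, bs))

data = [10, 20, 30, 40, 50]
batches = create_batches(data, range(5), bs=2,
                         after_item=lambda x: x + 1, after_batch=sum)
print(list(batches))
```

Each hook defaults to doing nothing, so the whole pipeline reduces to indexing plus collation unless a caller supplies something else.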

00:23:18.100 | OK, so it's amazing how little code there is here, and how little it does.

00:23:30.580 | But we end up with something that's super extensible, because we can poke into any of

00:23:35.940 | these points to change or add behavior.

00:23:41.340 | One of the things that I'm talking about is that it's possible we might change the names

00:23:48.660 | of these things like after item and before batch and stuff.

00:23:54.580 | They're technically accurate.

00:23:59.800 | They're kind of like once you understand how the data loader works, they make perfect sense.

00:24:08.580 | But we may change them to be names which make sense even if you don't know how the

00:24:12.540 | data loader works.

00:24:17.820 | So anyway, so that's something we're thinking about.

00:24:22.660 | So then TfmdDL, which is in 05, now I think about it, is a pretty thin subclass of data

00:24:42.500 | loader.

00:24:44.800 | And as the name suggests-- so here it is.

00:24:50.420 | As the name suggests, it is a data loader.

00:24:53.380 | But the key thing we do is that for each of these three callbacks, we loop through those

00:25:01.020 | and we replace them with pipelines, transform pipelines.

00:25:06.940 | So that means that a TfmdDL data loader also has decode, decode batch, and show batch.

00:25:16.900 | So that's the key difference there.

00:25:28.460 | The other thing it needs to know how to do is that if you-- when you call decode, you're

00:25:36.740 | going to be passing in just a plain PyTorch tensor.

00:25:40.940 | Probably it's not going to have any type like a tensor image or a tensor bounding box or

00:25:45.660 | whatever.

00:25:46.660 | The loader has to know what data types to convert it into.

00:25:52.620 | And what it actually does is it has this method called retain_dl, which is the first thing

00:26:01.000 | that it does here.

00:26:04.500 | And that's the method that adds the types that it needs.

00:26:08.840 | So the-- what we do is we basically just run one mini batch, a small mini batch, to find

00:26:23.240 | out what types it creates.

00:26:25.580 | And we save those types.

00:26:32.080 | And this little bit of code is actually super cute.

00:26:34.820 | If you want to look at some cute code, check this out and see if you can figure out how

00:26:37.980 | it's working, because it's-- I think it's very nice.

00:26:46.460 | OK.

00:26:47.460 | So that's that.

00:27:01.780 | So now you can see why we have these data set image transforms and these data loader

00:27:10.060 | transforms and why they go here.

00:27:11.740 | So these data set image transforms, ImageResizer, that's something that operates on-- let's

00:27:18.260 | go back and have a look at it, because we created it just up here.

00:27:21.620 | It's something that operates on a pillow image.

00:27:27.260 | So that has to be run before it's being collated into a mini batch, but after it's being grabbed

00:27:37.340 | from the data set.

00:27:40.000 | So that's why it's-- that is in the after item transforms, because it's before it's

00:27:49.500 | being collated into a mini batch.

00:27:51.300 | On the other hand, CUDA and byte to float tensor are going to run much faster if it's

00:27:56.620 | run on a whole mini batch at a time.

00:27:58.900 | So after batch is the thing that happens after it's all being collated, and so that's why

00:28:03.820 | those ones are here.

00:28:07.780 | OK.

00:28:10.940 | So let's now look at data blocks.

00:28:26.980 | OK.

00:28:41.860 | So data blocks is down in 50, because we-- here we go, because it has to use stuff from

00:28:53.940 | vision and text and so forth in order to create all those different types of data blocks.

00:28:59.540 | And let's take a look at an example first.

00:29:04.500 | So here's an example of MNIST.

00:29:09.140 | So if you remember what data blocks basically have to do, there has to be some way to say

00:29:14.180 | which files, for example, are you working with, some way to say how to split it into

00:29:20.100 | a validation set and training set, and some way to label.

00:29:27.540 | So here are each of those things.

00:29:37.340 | We kind of need something else, though, as well, which is we need to know, for your Xs,

00:29:48.220 | what types are you going to be creating for your Xs?

00:29:53.540 | And for your Ys, what types are you going to be creating for your Ys?

00:29:59.540 | Why is that?

00:30:00.540 | Well, it wants to create those types, because these types, as we'll see, have information

00:30:09.780 | about what are all the transforms that you would generally expect to have to use to create

00:30:14.820 | and use that type.

00:30:17.860 | So you can see there isn't actually anything here that says open an image.

00:30:23.740 | This is just list the images on the disk, list the file names.

00:30:29.580 | But this tuple of types is the thing where it's going to call PILImageBW.create

00:30:34.740 | on the file name to create my Xs, and it's going to call Category to create

00:30:44.060 | my Ys.

00:30:45.140 | And in the Y case, because there's a get_y defined, first of all, it will label it with

00:30:52.060 | the parent labeller.

00:30:56.420 | So if we have a look, so here's our category class.

00:31:19.580 | And let me try to remember how this works.

00:31:46.460 | What about PILImageBW?

00:32:05.020 | I just want to remember how to find my way around this bit.

00:32:19.100 | So PILImageBW.

00:32:36.980 | So, these types are defined in 07 Vision Core.

00:33:02.940 | Yes, so you can see PILImageBW.

00:33:31.620 | PILImageBW is a PILImage.

00:33:36.460 | It's got no other methods.

00:33:38.440 | So it's going to be calling the create method for us, but when it calls the create method,

00:33:45.020 | it's going to use open args to figure out what arguments to pass to the create method, which

00:33:50.660 | in the case of PILImageBW is mode L. That's the black and white mode for Pillow.

00:34:01.620 | But there's another thing in here that's interesting, which is that PILBase, which this inherits

00:34:06.740 | from, has a thing called default DL transforms.

00:34:11.840 | And this says that in the data block API, when you use this type, it's going to automatically

00:34:18.700 | add this transform to our pipeline.

00:34:27.180 | So here's another example.

00:34:29.580 | If you're working with point data, it adds this to your DS transform pipeline.

00:34:37.980 | Bounding boxes, there are different transforms that are added to those.

00:34:50.860 | So the key thing here is that these types do two things, at least two things.

00:34:59.500 | The first is they say how to actually create the objects that

00:35:06.740 | we're trying to build, but they also say what default transforms to add to our pipeline.
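
As a rough sketch of types that carry their own default transforms: the class names, the transform functions and `collect_defaults` below are all hypothetical, and the attribute names `default_ds_tfms` and `default_dl_tfms` are written as an assumption about how the spoken "default DS transforms" and "default DL transforms" might be spelled.

```python
def to_float(batch):
    "Stand-in for a batch-level transform such as byte-to-float conversion."
    return [float(v) for v in batch]

def scale_points(item):
    "Stand-in for an item-level transform applied to point data."
    return item

class ImageTypeSketch:
    default_dl_tfms = (to_float,)       # would be added to the data loader pipeline

class PointTypeSketch:
    default_ds_tfms = (scale_points,)   # would be added to the data set pipeline

def collect_defaults(types, attr):
    "Gather the transforms named by `attr` from every type that defines it."
    found = []
    for t in types:
        found.extend(getattr(t, attr, ()))
    return found

types = (ImageTypeSketch, PointTypeSketch)
ds_tfms = collect_defaults(types, "default_ds_tfms")
dl_tfms = collect_defaults(types, "default_dl_tfms")
print([f.__name__ for f in ds_tfms], [f.__name__ for f in dl_tfms])
```

The point of the design is that naming the types is enough: the caller never has to remember which transforms an image or a set of points needs.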

00:35:18.900 | And so that's why when we then say, so we can take a data block object and we can create

00:35:24.420 | it, and then we can call data source, passing in the thing that will eventually make its

00:35:30.340 | way to get items as the source. So this is the path to MNIST.

00:35:36.940 | And this then allows us, as you can see, to use it in the usual way.

00:35:43.620 | And because the data block API, as we'll see, uses funcs_kwargs, that means that we can, instead

00:35:57.460 | of inheriting from data block, we can equally well just construct a data block.

00:36:03.500 | And if you construct a data block, then you have to pass in the types like this.

00:36:08.980 | And then here are the three things, again, that we were overriding before.

00:36:14.020 | So it's doing exactly the same thing as this one.

00:36:17.860 | So a lot of the time, this one is going to be a bit shorter and easier version if you

00:36:22.180 | don't kind of need state.

00:36:26.440 | So let's look at the data block class.

00:36:36.480 | As you can see, it's very short.

00:36:40.260 | So as we discussed, it has a funcs_kwargs decorator.

00:36:47.740 | And that means it needs to look to see what the _methods are.

00:36:51.100 | So here's the list of methods that you can pass in to have replaced by your code.

00:36:57.220 | Then the other thing that you're going to pass in are the types.

00:37:07.720 | So then what we're going to do is we're going to be creating three different sets of transforms.

00:37:14.600 | And in data block, we're giving them different names to what we've called them elsewhere.

00:37:17.980 | But again, I don't know if this is what the names are going to end up being, but basically

00:37:22.940 | what's going to happen is, as you can see, after item is DS transforms, which kind of

00:37:31.700 | makes sense, right?

00:37:32.700 | Because the things that happen after you pull something out of a data set is after item.

00:37:36.780 | So they're kind of the data set transforms, whereas the things that happen after you collate.

00:37:42.260 | So after batch, they're the DL transforms.

00:37:45.580 | So they're those two.

00:37:48.900 | And so we have for a particular data block subclass, there's going to be some default

00:37:55.260 | data set transforms and some default data loader transforms.

00:37:59.660 | For the data set transforms, you're pretty much always going to want ToTensor; for the data loader

00:38:05.660 | transforms, pretty much always going to want Cuda.

00:38:08.080 | And then we're going to grab from the types that we passed in the default DS transforms

00:38:15.280 | and default DL transforms attributes that we saw.

00:38:23.300 | OK, so there's something kind of interesting, though, which is then later on when you try

00:38:37.300 | to create your data bunch, you know, you might pass in your own data set transforms and data

00:38:47.060 | loader transforms.

00:38:54.220 | And so we have to merge them together with the defaults.

00:38:58.760 | And so that means if you pass in a transform that's already there, we don't want it to

00:39:02.900 | be there twice in particular.

00:39:06.040 | So there's a little function here called merge transforms, which, as you can see, basically

00:39:14.000 | removes duplicate transforms, so transforms of the same type, which is a little bit awkward,

00:39:23.360 | but it works OK.

00:39:26.000 | So this is why things like order are important.

00:39:31.440 | Remember, there's that order attribute.

00:39:32.800 | In pipeline, we use the order attribute to sort the transforms.

00:39:36.760 | So like you can have transforms defined in different places.

00:39:39.520 | They can be defined in the types, in the defaults and the types, or you could be passing them

00:39:44.760 | in directly when you call data source, sorry, a data bunch or data source.

00:39:53.300 | And so we need to bring them all together in a list and make sure that list is in an

00:39:56.680 | order that makes sense.
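
A small sketch of merging transform lists, removing duplicates by type and then sorting on an `order` attribute. The classes and `merge_tfms_sketch` are hypothetical, and keeping the first occurrence of each type is an assumption made for the example; the talk only says duplicates of the same type are removed.

```python
class Tfm:
    order = 0
    def __repr__(self): return type(self).__name__

class ToTensor(Tfm): order = 10
class Cuda(Tfm): order = 20
class Flip(Tfm): order = 5

def merge_tfms_sketch(*groups):
    "Keep one transform per type (first occurrence wins), then sort by `order`."
    seen = {}
    for group in groups:
        for t in group:
            seen.setdefault(type(t), t)
    return sorted(seen.values(), key=lambda t: t.order)

defaults = [ToTensor(), Cuda()]
user = [Cuda(), Flip()]        # Cuda is already among the defaults
merged = merge_tfms_sketch(defaults, user)
print(merged)
```

Sorting by `order` after merging means it does not matter whether a transform came from a type's defaults or from the caller; the final pipeline is arranged the same way either way.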

00:40:00.480 | So yeah, so data augmentation, we haven't looked at yet, but they are just other transforms.

00:40:06.920 | So generally, you would pass them into the data bunch method, and we don't have any--

00:40:15.120 | there's no augmentations that are done by default, so you have to pass them in.

00:40:21.320 | So if you ask for a data bunch, then first of all, it's going to create a data source.

00:40:26.620 | So self.source, in this case, will be like the path to MNIST, for example.

00:40:31.700 | So we would then call that get items function that you defined.

00:40:35.800 | And if you didn't define one, then just do nothing to turn the source into a list of

00:40:41.040 | items.

00:40:45.840 | And then if you have a splitter, then we create the splits from it.

00:40:51.600 | And if there's a get x and a get y, then call those-- well, actually, don't call those,

00:41:00.880 | just store those, I should say, as functions.

00:41:04.860 | So they're going to be labeling functions.

00:41:08.560 | OK, if you didn't pass in any type transforms, then use the defaults.

00:41:19.520 | And so then we can create a data source, passing in our items and our transforms.

00:41:31.640 | And so that gives us our data source.

00:41:33.840 | And then we'll create our data set transforms and data loader transforms and turn our data

00:41:38.280 | source into a data bunch using the method we just saw.
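
The sequence of steps just walked through (get items, split, store the labelling functions, build the subsets) might be outlined like this. It is a plain-Python sketch under assumptions, not the data block code: `build_subsets`, the file names and the fixed splitter are invented for the example.

```python
def build_subsets(source, get_items=None, splitter=None, get_x=None, get_y=None):
    "Hypothetical outline of the steps described above, using plain lists."
    # 1. Turn the source into items; with no get_items, use the source as-is.
    items = list(get_items(source) if get_items else source)
    # 2. Split into index lists; with no splitter, everything is one subset.
    splits = splitter(items) if splitter else [list(range(len(items)))]
    # 3. Store the labelling functions; identity when not supplied.
    get_x = get_x or (lambda o: o)
    get_y = get_y or (lambda o: o)
    # 4. Build (x, y) tuples for each subset.
    return [[(get_x(items[i]), get_y(items[i])) for i in idxs] for idxs in splits]

files = ["cat_1.jpg", "dog_2.jpg", "cat_3.jpg", "dog_4.jpg"]
train, valid = build_subsets(
    files,
    splitter=lambda items: [[0, 1, 2], [3]],
    get_y=lambda name: name.split("_")[0],
)
print(train[0], valid)
```

Every stage is optional and falls back to doing nothing, which mirrors the description: only the pieces a particular data set needs have to be supplied.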

00:41:44.720 | OK, so that's data blocks.

00:41:56.920 | So the best way to understand it is through the examples.

00:41:59.480 | So yeah, have a look at the subclassing example, have a look at the function version example.

00:42:07.760 | And so pets, so the types for pets are going to be an image and a category.

00:42:14.040 | Same get items, a random splitter, a regex labeller for get_y.

00:42:19.400 | And there we go.

00:42:21.880 | And in this case, you can see here we are actually doing some data augmentation.

00:42:27.200 | So aug_transforms is a function which gives us-- it's basically the same as

00:42:33.300 | get_transforms was in version one, but now transforms is a much more general concept

00:42:39.600 | in version two.

00:42:40.600 | So these are specifically augmentation transforms.

00:42:44.960 | OK, and multi-label classification.

00:42:58.920 | So multi-label classification is interesting because this time we-- this is Planet.

00:43:04.840 | It uses a CSV.

00:43:11.760 | So yeah, Kevin, hopefully that's answered your question on augmentations.

00:43:14.920 | Let me know if it didn't.

00:43:17.320 | So Planet, we create a data frame from the CSV.

00:43:23.200 | And so when we call planet.databunch, we're going to be passing in the NumPy array version

00:43:32.480 | of that data frame, so df.values in this case.

00:43:38.760 | And our augmentation here, as you can see, it has some standard Planet-appropriate augmentations,

00:43:55.760 | including flip_vert equals true.

00:44:00.520 | And so what happens here?

00:44:01.920 | So we pass in this.

00:44:04.280 | This is a NumPy array when we call dot values.

00:44:08.400 | And so get x is going to grab just x0.

00:44:24.000 | And this will just grab x1 and split it because this is a NumPy array.

00:44:32.160 | So each row of this will contain-- the first item will be the file name and the second

00:44:38.800 | item will be the value.

00:44:40.840 | So that's one way of doing Planet, which is mildly clunky.

00:44:45.080 | It works.

00:44:49.360 | Here's another way of doing-- and the other thing about this way is it's kind of-- I don't

00:44:53.200 | know.

00:44:54.200 | It's like doing this NumPy array thing is kind of weird.

00:44:56.520 | This is a more elegant way, I think, where we're actually passing in the data frame rather

00:45:03.300 | than converting it into a NumPy array first.

00:45:14.120 | So this time, we're doing something a little bit different, which is in get items.

00:45:24.260 | So before for get items, there was nothing at all, right?

00:45:28.200 | So we just used the NumPy array directly.

00:45:30.540 | This time for get items, we're using this function, which returns a tuple.

00:45:38.740 | And the first thing in the tuple is a pandas expression that works on an entire pandas

00:45:45.000 | column to turn that column into a list of file names.

00:45:50.000 | And then this is another pandas expression that works on a complete column, the tags

00:45:54.880 | column, to create the labels.

00:45:58.960 | So one of the things that we skipped over when we looked at data blocks is that when

00:46:07.480 | we call get items, if it returns a tuple, then what we do is we zip together all of

00:46:21.320 | the items in that tuple.

00:46:23.480 | So in this case, we've returned a column of file names and a column of labels.

00:46:30.480 | And data sets are meant to return a single file name label pair.

00:46:37.780 | So that's why this zips them together.

00:46:42.600 | And so then the labelers will just grab the appropriate thing from each of those lists.
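
The tuple-and-zip trick can be illustrated without pandas. In this sketch plain lists stand in for data frame columns, and the column names, file-name pattern and tags are invented for the example.

```python
def get_items(table):
    "Return a tuple of whole columns: file names and split tags."
    fnames = [f"train/{name}.jpg" for name in table["image_name"]]
    labels = [tags.split(" ") for tags in table["tags"]]
    return fnames, labels

table = {"image_name": ["train_0", "train_1"],
         "tags": ["haze primary", "clear water"]}

items = get_items(table)
if isinstance(items, tuple):
    # Zip the columns together so each item is one (file name, labels) pair.
    items = list(zip(*items))

get_x = lambda o: o[0]   # the labellers just pick the right element
get_y = lambda o: o[1]
print(get_x(items[0]), get_y(items[0]))
```

With real data frame columns, the two expressions in `get_items` would each process a whole column in one vectorized call, which is where the speed comes from.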

00:46:53.640 | So this is kind of a nice-- as you can see, you end up with very nice, neat code.

00:46:58.040 | It's also super fast because it's all operating in the kind of the pandas fast C code version

00:47:05.640 | of things.

00:47:07.500 | So there's a handy trick.

00:47:15.240 | And then here's the same thing again, but using inheritance instead.

00:47:23.840 | Here's a neat trick.

00:47:24.840 | We've only ever seen staticmethod used as a decorator before, but you can actually just

00:47:28.760 | use it like any decorator.

00:47:31.160 | You can use it as a function to turn this into a static method.
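
A short illustration of calling `staticmethod` as an ordinary function inside a class body. The class `PlanetSketch` and the helper `first_column` are hypothetical; only `staticmethod` itself is standard Python.

```python
def first_column(row):
    "Return the first element of a row."
    return row[0]

class PlanetSketch:
    # Wrapping with staticmethod stops Python from passing the instance as `row`.
    get_x = staticmethod(first_column)
    get_y = staticmethod(lambda row: row[1].split(" "))

p = PlanetSketch()
row = ("img.jpg", "haze primary")
print(p.get_x(row), p.get_y(row))
```

Without the `staticmethod` wrapper, `p.get_x(row)` would bind `p` as the first argument and the call would fail with a `TypeError` for receiving too many arguments.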

00:47:39.840 | Lots and lots of versions of this, as you can see.

00:47:42.360 | Here's another one where we're passing in the data frame.

00:47:46.080 | And we can just have get x, grab this column, grab-- get y, this column.

00:47:54.720 | Maybe this is kind of actually the-- maybe this is the best version.

00:47:58.920 | It's kind of both fast and fairly obvious what's going on.

00:48:04.260 | So there's lots of ways of doing things.

00:48:09.080 | So Kevin, mapped is simply the version of map as a method inside L. And then map is just

00:48:18.680 | the standard map function in Python, which you should Google if you don't know about

00:48:25.360 | it.

00:48:26.360 | It's got nothing to do with parallelization.

00:48:28.320 | It's just a way of creating a lazy generator in Python.

00:48:34.840 | And it's very fundamental to how we do things in version 2.

00:48:40.580 | So I mean, end users don't really need to understand it.

00:48:43.680 | But if you want to understand the version 2 code, then you should definitely do a deep

00:48:47.760 | dive into map.

00:48:51.120 | And looking at how the version 2 data loader works would be a good place to get a very

00:48:56.000 | deep dive into that because it's all doing lazy mapping.

00:49:00.200 | It's basically a functional style.

00:49:02.920 | So map is something that comes up in functional programming a lot.

00:49:05.920 | So a lot of the code in version 2 is of a more functional style.

00:49:15.880 | I mean, here's a short version.

00:49:18.400 | If I create something like that, and then I say map some function like negative, so

00:49:34.640 | that's just the negative function over that, it returns-- oh, something weird-- it returns

00:49:41.800 | a map.

00:49:42.800 | And basically, that says this is a lazy generator, which won't be calculated until I print it

00:49:48.840 | or turn it into a list or something.

00:49:51.120 | So I can just say list.

00:49:55.080 | And there you go.

00:49:56.080 | So you can see it's mapped this function over my list.

00:50:05.120 | But it won't do it until you actually finally need it.

00:50:09.200 | So if we go t2 equals that, and then we say t3 equals map.

00:50:18.720 | Maybe lambda o: o plus 100, over t2.

00:50:26.320 | So now t3 is, again, some map that I could force it to get it calculated like so.

00:50:36.200 | And as you can see, it's doing each of those functions in turn.

00:50:41.120 | So this is a really nice way to work with data sets and data loaders and stuff like

00:50:45.840 | that.

00:50:46.840 | You can just add more and more processing to them lazily in this way.
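
The notebook demo can be reproduced roughly as follows. The starting list is an assumption, since the talk does not say which values were typed; the variable names `t2` and `t3` follow the spoken description.

```python
import operator

t = [1, 2, 3]                       # assumed starting values
t2 = map(operator.neg, t)           # nothing is computed yet
t3 = map(lambda o: o + 100, t2)     # still lazy: a map over a map

print(t3)                           # shows a map object, not the values
result = list(t3)                   # forces both functions to run, item by item
print(result)
```

A map object is a one-shot iterator, so calling `list(t3)` a second time returns an empty list; that is the price of not holding the intermediate results in memory.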

00:51:00.440 | So then segmentation.

00:51:01.440 | I don't think there's anything interesting there that's any different.

00:51:08.200 | I'm not going to go into the details of how points and bounding boxes work.

00:51:14.960 | But for those of you that are interested in object detection, for example, please do check

00:51:22.240 | it out in the code and ask us any questions you have.

00:51:26.720 | Because although there's no new concepts in it, the way that this works so neatly, I think,

00:51:31.400 | is super, super nice.

00:51:33.780 | And if you have questions or suggestions, I would love to hear about them.

00:51:40.560 | And then I think what we might do next time is we will look at tabular data.

00:51:48.920 | So let's do that tomorrow.

00:51:52.560 | Thanks, everybody.

00:51:53.560 | Hopefully that was useful.

00:51:55.560 | See you.
