Stanford CS25: V4 I Aligning Open Language Models

00:00:00.000 | [silence]

00:00:05.000 | Today we're happy to have Nathan Lambert, a research scientist at the Allen Institute for AI,

00:00:13.000 | who focuses on RLHF and the author of interconnects.ai.

00:00:19.000 | He'll be presenting a really cool talk on aligning open language models today.

00:00:24.000 | So thank you for joining us, Nathan.

00:00:27.000 | Yeah, thanks for the intro. Okay, this is a long time coming.

00:00:32.000 | This is a brief intro on why I'm doing this.

00:00:34.000 | I think generally since ChatGPT you'll see a lot has obviously happened,

00:00:40.000 | but I think it's been a blur for me as much as anyone else.

00:00:44.000 | So kind of taking the time to retell what has happened in this kind of fine-tuning and alignment space

00:00:50.000 | since ChatGPT happened is something that I thought was a worthy undertaking.

00:00:55.000 | So this is not really a 101 lecture,

00:00:57.000 | but it will probably give you a lot of context on why people are mentioning certain things

00:01:01.000 | and what still matters and what does not.

00:01:03.000 | So hopefully this is fun.

00:01:06.000 | I can see the chat.

00:01:07.000 | I don't know exactly if questions are going to come to me or if I will see it the whole time.

00:01:11.000 | I think clarifying questions are good, maybe not discussions the whole time,

00:01:16.000 | and I'll try to make sure that there's time for questions at the end.

00:01:20.000 | So let's get into it.

00:01:26.000 | Generally, we're going to talk about language models.

00:01:28.000 | It's what everyone wants to talk about these days.

00:01:30.000 | I need to do some of the older history so that I can talk about recent history.

00:01:34.000 | The place that I like to start is actually with Claude Shannon,

00:01:37.000 | who kind of had this paper talking about approximating, arranging characters to create language models.

00:01:45.000 | That's probably why Anthropic called their models Claude.

00:01:48.000 | That's pretty well known.

00:01:51.000 | And a lot has happened since these very early papers on predicting sequences of text,

00:01:58.000 | and this is largely built on this loss function, which is called the autoregressive loss function.

00:02:04.000 | So if you kind of have this training example where you have something like I saw A,

00:02:08.000 | and you're trying to predict what comes after this,

00:02:10.000 | the whole idea is that there's going to be one correct token that has the correct label,

00:02:14.000 | and the training loss is going to increase the probability of that token

00:02:17.000 | and decrease the probability of everything else.

00:02:20.000 | This very simple loss function, classifying which token to use and actually predict,

00:02:25.000 | has enabled wild things.
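
A minimal sketch of the loss just described, in plain Python. The four-word vocabulary and the scores are invented for illustration; a real model scores tens of thousands of tokens, and the training example from the talk ("I saw a") is only used as a label here.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits, correct_index):
    """Cross-entropy at one position: -log p(correct token)."""
    return -math.log(softmax(logits)[correct_index])

# Hypothetical toy vocabulary and model scores for the context "I saw a".
vocab = ["cat", "dog", "the", "ran"]
logits = [2.0, 1.0, 0.1, -1.0]
target = vocab.index("cat")

loss = next_token_loss(logits, target)

# Gradient of the loss with respect to the logits is (probs - one_hot):
# negative for the correct token (a descent step raises its score),
# positive for every other token (their scores drop).
probs = softmax(logits)
grad = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
```

The sign pattern of `grad` is the "increase the correct token, decrease everything else" behavior in a single step.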

00:02:27.000 | And this kind of took another turn in 2017 when this transformer paper was born.

00:02:32.000 | Attention was all you need.

00:02:34.000 | Everyone here has heard about this.

00:02:35.000 | It's a great exercise to actually dig into what the attention mechanism is doing,

00:02:39.000 | not the focus of this talk.

00:02:41.000 | We'll quickly kind of keep going.

00:02:43.000 | In 2018, there were three main things.

00:02:45.000 | These are slightly out of order.

00:02:47.000 | ELMo was the earliest one, which was contextualized word embeddings.

00:02:50.000 | In the same year, we also had GPT-1 and BERT released,

00:02:54.000 | which is kind of the beginning of core ideas on which modern language models

00:02:58.000 | and transformers were trending towards.

00:03:00.000 | And just getting these better models, training on large internet-scale corpora,

00:03:06.000 | BERT was a classifier, GPT-1 was generating text,

00:03:09.000 | and we kind of continue along these trends through the years.

00:03:13.000 | GPT-2 is when we started learning about scaling laws.

00:03:16.000 | And if you use orders of magnitude more compute,

00:03:19.000 | the actual test loss will continue to decrease in a linear fashion with respect

00:03:23.000 | to the log compute.
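
Written out, the trend described here is roughly a straight line when test loss is plotted against the logarithm of compute. The symbols $a$, $b$, $k$, and $\alpha$ below are placeholder fit constants, not numbers from the talk. Published fits are usually stated as a power law, which is a straight line when both axes are on a log scale; the second line shows that form.

```latex
L(C) \approx a - b \log C, \qquad b > 0
\\
L(C) \approx k \, C^{-\alpha}
\;\Longleftrightarrow\;
\log L(C) \approx \log k - \alpha \log C, \qquad \alpha > 0
```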

00:03:25.000 | These ideas now are commonplace when we talk about language models.

00:03:29.000 | GPT-2 also pioneered a lot of discussions on releasing language models.

00:03:34.000 | So GPT-2, when it was first announced, they were holding access back because

00:03:39.000 | of the risks of language models, and this started a lot of the conversations

00:03:42.000 | around what you should or should not release with language models.

00:03:46.000 | They eventually actually released GPT-2, and you could download the models

00:03:50.000 | on Hugging Face and use them, but this is where that kind of conversation

00:03:53.000 | around release strategies emerged.

00:03:55.000 | In 2020 is when language models really started to be noticeably good.

00:03:59.000 | So GPT-3 is when a lot of people are like, "Whoa, this can actually do

00:04:03.000 | really interesting things if I kind of create a really clever prompt,

00:04:06.000 | figure out how to give it my information correctly."

00:04:09.000 | And GPT-3 could do a ton of things with kind of this few-shot

00:04:13.000 | or multi-shot learning, which is when you give it a few examples

00:04:16.000 | in the prompt and then ask it to do another rendition of it.
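
As a rough illustration of few-shot prompting, the sketch below packs a few worked examples into one prompt and leaves the last answer blank for the model to fill in. The `Input:`/`Output:` layout and the sentiment task are assumptions made up for this example, not a format from the talk.

```python
def build_few_shot_prompt(examples, query):
    """Concatenate worked examples, then leave the final answer blank."""
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)

# Hypothetical sentiment task; the examples are invented for illustration.
examples = [
    ("I loved this movie.", "positive"),
    ("The food was cold and bland.", "negative"),
    ("Best concert I have been to.", "positive"),
]
prompt = build_few_shot_prompt(examples, "The flight was delayed for hours.")
print(prompt)
```

No weights change here; the examples only live in the prompt.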

00:04:20.000 | And with this power came many harms, and this is kind of a discussion

00:04:24.000 | of what are the risks of releasing language models,

00:04:27.000 | what types of groups will be hurt by this.

00:04:30.000 | Very important problems that kind of culminated in 2021

00:04:33.000 | with the Stochastic Parrots paper, which is arguing about whether

00:04:38.000 | or not language models can be too big is in the title, but it's really

00:04:42.000 | a critique on how we should be thinking about language models,

00:04:46.000 | what are the limits of them, are they actually doing the things,

00:04:49.000 | like are they actually thinking or doing any of these human things,

00:04:52.000 | or are they just kind of following patterns in the data?

00:04:56.000 | And then just the year after, this is kind of like the tragedy

00:05:00.000 | of Stochastic Parrots, as no one talks about it now,

00:05:03.000 | is that ChatGPT came a year later and totally reshaped

00:05:07.000 | the whole narrative around language models one more time.

00:05:10.000 | And this is really where we start today's talk, is like,

00:05:13.000 | how does this idea of alignment emerge in ChatGPT,

00:05:17.000 | and then what happens after this?

00:05:20.000 | So the question that I ask myself is like, or I tell a lot of people is,

00:05:24.000 | can ChatGPT exist without RLHF?

00:05:27.000 | And what we saw in the release day, so if you go back and read

00:05:30.000 | the actual OpenAI blog about RLHF, they list all these limitations,

00:05:35.000 | but they say that RLHF was an important tool to launching ChatGPT.

00:05:38.000 | And the limitations that they list are really the things that we're

00:05:41.000 | still researching and that we're talking about in this talk.

00:05:43.000 | It's a great blog post to go back to, but a good way to frame it

00:05:46.000 | is that RLHF seems to be necessary, but it's not sufficient.

00:05:50.000 | You can't do something like ChatGPT or Gemini or Claude

00:05:53.000 | with a technique that -- without something like RLHF.

00:05:56.000 | But it's not the thing -- like, pre-training is still most of the work,

00:06:00.000 | but the fact that RLHF is needed is really important to kind of

00:06:03.000 | contextualize all these improvements that we've seen in the open

00:06:05.000 | in the last 14 months or so.

00:06:08.000 | Some examples that I like to cite on RLHF being relied upon,

00:06:12.000 | you can list many more models here than I have.

00:06:15.000 | This kind of -- this figure from Anthropic's Constitutional AI paper

00:06:19.000 | is the single one that I go back to all the time,

00:06:22.000 | showing how just kind of using RLHF can get these more desirable behaviors

00:06:27.000 | from their model in really dramatic ways.

00:06:29.000 | So these kind of Elo measurements aren't kind of calibrated,

00:06:33.000 | so we don't know how to compare Llama 3 on this chart compared

00:06:37.000 | to Anthropic's models, but the level of investment that Anthropic has had

00:06:41.000 | in these kind of techniques and showing this kind of wide-ranging

00:06:44.000 | improvements of their models with RLHF is a kind of flag that we can follow

00:06:49.000 | to try to learn how to do alignment with this much precision

00:06:52.000 | and with this much kind of impact as places like Anthropic

00:06:55.000 | or OpenAI will have.

00:06:57.000 | One such example is just a simple quote from the Llama 2 paper,

00:07:01.000 | which is kind of like the colloquial way of reading this quote,

00:07:06.000 | which I will read, is that, "Whoa, RLHF worked really easily."

00:07:09.000 | And what the quote is is, "Meanwhile, reinforcement learning,

00:07:13.000 | known for its instability, seemed a somewhat shadowy field for those

00:07:17.000 | in the NLP research community.

00:07:19.000 | However, reinforcement learning proved highly effective,

00:07:21.000 | particularly given its cost and time effectiveness."

00:07:24.000 | So this is one of the biggest endorsements of RLHF,

00:07:27.000 | and it's always fun for me because I came from the RL side

00:07:29.000 | and then I've been learning NLP.

00:07:31.000 | But for NLP researchers to say these things, like, yes,

00:07:34.000 | reinforcement learning is known for instability, and given that it is

00:07:38.000 | cost-effective and time-effective for an RL person, that's shocking.

00:07:42.000 | It's like RL has never been particularly cost-

00:07:45.000 | and time-effective, but in this language model domain,

00:07:48.000 | where we're fine-tuning with it rather than learning from scratch,

00:07:51.000 | to have people in NLP that are saying this is just really striking

00:07:56.000 | for how much impact it can have.

00:07:58.000 | And the timeline of alignment and open alignment is really like,

00:08:01.000 | when do we see these benefits?

00:08:03.000 | Like, these benefits didn't show up in models that people were playing

00:08:06.000 | with for quite a bit of time.

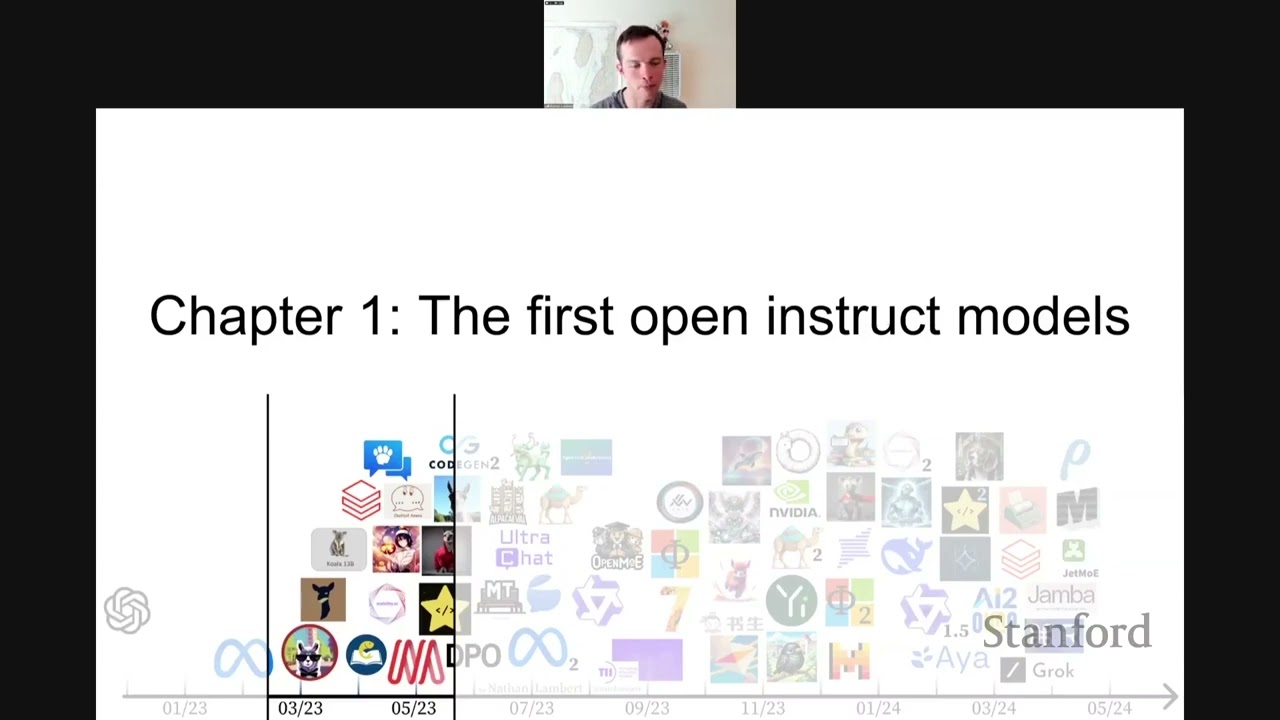

00:08:08.000 | So this is kind of a little atlas that I've thrown together.

00:08:11.000 | I also made a Hugging Face collection where I tried to add all the models

00:08:15.000 | that I talk about to it, so you can actually click on the models

00:08:17.000 | or try to use them if you're so inclined of actually running

00:08:20.000 | the models yourself.

00:08:22.000 | It's just kind of another way of documenting the artifacts

00:08:25.000 | that I talk about and the artifacts that, for me, this is a good review.

00:08:28.000 | I'm like, what mattered?

00:08:30.000 | What mattered in this really noisy journey in the last year?

00:08:33.000 | Of course, some disclaimers.

00:08:35.000 | I'm not covering every model since ChatGPT.

00:08:38.000 | This little plot of model icons could probably look more like an exponential

00:08:44.000 | than this kind of capped bar.

00:08:46.000 | And there's so much history of NLP that people are building on

00:08:50.000 | in the alignment space that is totally swept under the rug here.

00:08:55.000 | A lot of academic and infrastructure contributions that I'm not talking

00:08:59.000 | about but are really important to kind of this proliferation

00:09:02.000 | of fine-tuning models.

00:09:04.000 | So just kind of describing what this image that I have here is.

00:09:09.000 | To kind of summarize, some of these are base models.

00:09:14.000 | I'm not going to focus on base models as much as fine-tuned models.

00:09:18.000 | The base models are extremely important.

00:09:20.000 | Like none of this happens without Llama.

00:09:22.000 | None of this happens without Llama 2.

00:09:24.000 | The base models are the bedrock of this ecosystem.

00:09:28.000 | And then the alignment models are what people --

00:09:31.000 | the aligned models are a lot of times what people can play with

00:09:34.000 | and what you could try out, what you could do yourself

00:09:36.000 | on much less computing infrastructure and all these things.

00:09:39.000 | So I'm going to talk more about the aligned models,

00:09:41.000 | but everything matters.

00:09:42.000 | It's one big ecosystem.

00:09:47.000 | Another thing that's not fun but I'm going to do for the sake of kind

00:09:51.000 | of flag-posting, no one really likes listening to definitions.

00:09:55.000 | Here are some things that you'll hear thrown around.

00:09:58.000 | This isn't even all of them when talking about "alignment."

00:10:01.000 | Here, alignment I've defined as a general notion of training a model

00:10:05.000 | to mirror a user's desires, really with any loss function.

00:10:09.000 | It's not restricted.

00:10:10.000 | So there's a difference between instruction fine-tuning

00:10:13.000 | and supervised fine-tuning.

00:10:15.000 | Instruction fine-tuning is about trying to get a model

00:10:18.000 | that will respond to queries, format, and instructions,

00:10:21.000 | while supervised fine-tuning is more about learning

00:10:24.000 | a specific task's capabilities.

00:10:26.000 | These get interchanged all the time.

00:10:28.000 | Like, that's okay.

00:10:29.000 | It's good to know that they're different.

00:10:31.000 | And then two kind of more ones I need to touch on,

00:10:35.000 | and we could go on even longer, is reinforcement learning

00:10:38.000 | from human feedback.

00:10:39.000 | There's this multistage process.

00:10:41.000 | It's a specific tool for aligning ML models to human data.

00:10:46.000 | It's kind of a class of tools, so it has some sort of --

00:10:49.000 | you learn a preference model and then you extract information from it.

00:10:52.000 | So there are so many different ways to do it.

00:10:54.000 | It's really an approach.

00:10:55.000 | And then there's a term that I'm kind of trying to grow,

00:10:58.000 | which is preference fine-tuning,

00:11:00.000 | which could encompass RLHF methods like PPO,

00:11:03.000 | but there's the question of how do we differentiate something

00:11:06.000 | like direct preference optimization,

00:11:08.000 | which doesn't use an RL optimizer, from all of RLHF.

00:11:12.000 | And I'll kind of come back to this,

00:11:14.000 | but it's good to have some common ground to build on

00:11:17.000 | because I might be going through some of these things pretty quickly.

00:11:27.000 | This is a chapter that I cover in one slide

00:11:30.000 | because it's really tapping into a lot of different personal stories.

00:11:34.000 | It's hard to retell how crazy things were when ChatGPT dropped.

00:11:40.000 | People were not really losing their mind,

00:11:43.000 | but there was a lot of uncertainty on what the future held,

00:11:48.000 | especially -- it was clear that language models were important,

00:11:52.000 | but it is not clear -- there's a lot of articles on like --

00:11:55.000 | titled "We're Going to Reproduce Open ChatGPT,"

00:11:58.000 | which you can't really have an open model

00:12:02.000 | that does what a closed product does.

00:12:05.000 | There's a difference between model weights

00:12:07.000 | and this product that ChatGPT represents.

00:12:09.000 | But there's so much excitement that everyone is saying

00:12:11.000 | they're going to do these things

00:12:13.000 | and trying to figure out the right coalitions for actually doing so.

00:12:16.000 | And it's interesting.

00:12:18.000 | This delay is kind of this land grab

00:12:20.000 | where people are learning the basic things,

00:12:22.000 | like what is red teaming?

00:12:24.000 | What is the difference between a dialogue agent

00:12:26.000 | and a predictive language model?

00:12:28.000 | What tools should we use?

00:12:30.000 | And everything kind of follows from here with what people are building.

00:12:34.000 | But personally, I just remember multiple meetings

00:12:37.000 | where people were like, "Yeah, you should do it.

00:12:38.000 | You should go try to build open ChatGPT."

00:12:40.000 | And when you look back, that goal is just so wild

00:12:43.000 | that so many people are just going like,

00:12:45.000 | "We need to build this thing into open source."

00:12:48.000 | It doesn't even make sense

00:12:50.000 | because you can't open source a whole system that way.

00:12:54.000 | But there are some things that make a bit --

00:12:57.000 | this makes a lot more sense,

00:12:59.000 | which is when things start to get grounded in actual models.

00:13:02.000 | So the first Llama suite was released, I think, in February.

00:13:06.000 | I have the date in some notes somewhere.

00:13:08.000 | And then these instruction-tuned models started to show up

00:13:11.000 | on this first Llama model.

00:13:13.000 | The first one to really crack the narrative was this Alpaca model.

00:13:17.000 | And it did a bunch of things that still are used today.

00:13:21.000 | So this was trained on 52,000 self-instruct style data

00:13:25.000 | distilled from text-davinci-003.

00:13:27.000 | There's a lot in the sentence.

00:13:28.000 | I'll say what self-instruct means.

00:13:30.000 | But this wasn't even data generated from ChatGPT.

00:13:33.000 | It was generated from one of OpenAI's API models.

00:13:36.000 | So if we talk about --

00:13:39.000 | this is all on how to apply instruction fine-tuning.

00:13:42.000 | And this is this thing I mentioned on the definition slide.

00:13:45.000 | But really, it's about making a model

00:13:47.000 | that will respond to specific styles of inputs.

00:13:50.000 | What often happens at a technical level here

00:13:52.000 | is that the model is learning to integrate

00:13:54.000 | something called, like, a chat template,

00:13:56.000 | the ability to include system prompts.

00:13:58.000 | So you want the model to know it is an agent.

00:14:01.000 | You want the model to know what day it is.

00:14:04.000 | Excuse me, you can do this in the system prompt,

00:14:06.000 | which is something the user doesn't see,

00:14:08.000 | but it steers the behavior of the model.

00:14:10.000 | And instruction tuning is really where

00:14:12.000 | we make the model capable of having these behaviors.
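
To make the chat template idea concrete, here is a minimal sketch. The `<|system|>`, `<|user|>`, and `<|assistant|>` tags are a hypothetical format invented for this example (each model family defines its own), and the date in the system prompt is arbitrary.

```python
def apply_chat_template(messages):
    """Flatten role-tagged messages into a single string for the model."""
    parts = [f"<|{m['role']}|>\n{m['content']}\n" for m in messages]
    # End with an open assistant tag so the model writes the next turn.
    parts.append("<|assistant|>\n")
    return "".join(parts)

messages = [
    # The system prompt is hidden from the user but steers the model.
    {"role": "system",
     "content": "You are a helpful assistant. Today's date is 2024-01-01."},
    {"role": "user", "content": "What is a transformer?"},
]
text = apply_chat_template(messages)
print(text)
```

Instruction tuning trains on strings in this shape, which is how the model learns to treat the system block differently from the user turn.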

00:14:15.000 | But the question is, like,

00:14:16.000 | what data are we training this behavior on?

00:14:19.000 | So the most common example is kind of --

00:14:24.000 | you continue training with this autoregressive loss function

00:14:27.000 | on question/answer pairs.

00:14:29.000 | So it's like, what is a transformer?

00:14:31.000 | And then the language model will predict an answer.

00:14:34.000 | It could be from Stack Overflow.

00:14:35.000 | It could be something else.

00:14:37.000 | And this example is human data.

00:14:39.000 | But what made Alpaca and a lot of these early models,

00:14:43.000 | and even today, really popular and accessible,

00:14:45.000 | is by using data to answer questions

00:14:48.000 | that is generated by an AI.

00:14:51.000 | So this is where the kind of idea

00:14:52.000 | of self-instruct data comes in.

00:14:56.000 | Self-instruct was a paper from Allen AI and UW in 2022,

00:15:01.000 | before ChatGPT, where essentially the idea is,

00:15:05.000 | how do we expand on the distribution

00:15:07.000 | of instruction data that we have,

00:15:09.000 | this training data for fine-tuning a language model,

00:15:12.000 | without getting more humans in the loop?

00:15:14.000 | So what you really have to do

00:15:17.000 | is you start with some high-quality,

00:15:19.000 | often human prompts.

00:15:21.000 | And then what we now see as more common practice today,

00:15:24.000 | but was very new then,

00:15:26.000 | is asking a stronger language model,

00:15:28.000 | create a list of prompts that are similar to this,

00:15:32.000 | but still diverse.

00:15:33.000 | And then once you have a list of prompts,

00:15:36.000 | you can use ChatGPT or another model

00:15:38.000 | to actually generate completions.

00:15:40.000 | Because then what you have

00:15:41.000 | is a really big list of question-answer pairs,

00:15:45.000 | but you don't need to go through the bottleneck

00:15:47.000 | of getting humans to sit down and write all of them.
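
The loop described here can be sketched as follows. `stronger_model` is a hypothetical stand-in that returns canned text so the example runs offline; a real pipeline would call an actual language model there, and would typically filter near-duplicate tasks rather than only exact matches.

```python
import random

def stronger_model(prompt):
    """Hypothetical stand-in for a call to a stronger language model."""
    if prompt.startswith("Write new tasks"):
        topics = ["sorting", "photosynthesis", "haiku", "budgeting"]
        return "\n".join(f"Explain {t} to a beginner." for t in topics)
    return f"(model answer to: {prompt})"

def self_instruct(seed_tasks, rounds, rng):
    tasks = list(seed_tasks)
    for _ in range(rounds):
        # Step 1: show a few existing tasks, ask for similar but diverse ones.
        shown = rng.sample(tasks, k=min(3, len(tasks)))
        request = "Write new tasks similar to, but different from:\n" + "\n".join(shown)
        for candidate in stronger_model(request).splitlines():
            # Crude exact-match dedup, for illustration only.
            if candidate and candidate not in tasks:
                tasks.append(candidate)
    # Step 2: have the model answer every task, giving (instruction, response) pairs.
    return [(task, stronger_model(task)) for task in tasks]

seed = ["Summarize this paragraph.", "Translate 'hello' to French."]
pairs = self_instruct(seed, rounds=2, rng=random.Random(0))
```

The humans only write the seed list; everything after that is model-generated.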

00:15:49.000 | So what Alpaca was really, why Alpaca worked,

00:15:53.000 | is because of realizing this

00:15:55.000 | and taking in this better model from OpenAI.

00:15:58.000 | So you can see this figure here on the right

00:15:59.000 | is from the Alpaca paper or blog post, one of the two.

00:16:03.000 | They took this model from OpenAI

00:16:05.000 | and they asked it to generate more tasks.

00:16:08.000 | So they had 175 to start,

00:16:11.000 | and then they ended up with over 50,000 tasks,

00:16:14.000 | and they also generated completions

00:16:15.000 | from this OpenAI model.

00:16:17.000 | And then what they did is they took these Meta weights

00:16:21.000 | that had just come out and they instruction fine-tuned them,

00:16:24.000 | and then you end up with Alpaca.

00:16:26.000 | This is kind of a pattern.

00:16:28.000 | This is a pattern that we've seen many times with Alpaca,

00:16:30.000 | which is essentially you take,

00:16:33.000 | you generate some data from a stronger language model

00:16:37.000 | and you fine-tune on it.

00:16:38.000 | It sounds so obvious today,

00:16:39.000 | but this was the first model to actually release this.

00:16:44.000 | I can now see questions coming in.

00:16:46.000 | I'll answer the ones that are clarifying

00:16:48.000 | and stuff like this, so thanks for asking them,

00:16:51.000 | and we can come back to more at the end.

00:16:54.000 | Once Alpaca happened,

00:16:55.000 | it felt like there was a new model every week.

00:16:57.000 | The second model was Vicuna,

00:16:59.000 | and really what they changed was they added new sources

00:17:04.000 | of prompts to the distribution.

00:17:07.000 | So you can see that I say ShareGPT.

00:17:10.000 | They also introduced the idea of LLM as a judge,

00:17:13.000 | which is now obvious from a lot of their later evaluation work.

00:17:17.000 | But let's talk about why ShareGPT was so interesting.

00:17:21.000 | So ShareGPT was one of the only data sets

00:17:26.000 | that got open language model builders,

00:17:30.000 | so people like me, prompts,

00:17:33.000 | that were similar to what people were asking ChatGPT.

00:17:38.000 | So what was happening was you would install

00:17:40.000 | this browser plug-in,

00:17:42.000 | and it would let you share your prompts from ChatGPT

00:17:47.000 | on Twitter or whatever.

00:17:48.000 | So it was making it easier to share the prompts

00:17:51.000 | in your conversations before OpenAI made a tool to do this,

00:17:55.000 | and now there's this legal gray area over the data set

00:18:00.000 | because most of these data sets are unlicensed,

00:18:02.000 | and they were kind of created without consent

00:18:04.000 | or they were released without consent.

00:18:06.000 | So there's a legal question

00:18:08.000 | of whether or not people should be training on this data,

00:18:11.000 | but the fact of the matter is that ShareGPT

00:18:13.000 | was really important to this kind of acceleration

00:18:15.000 | and progress on fine-tuning models

00:18:18.000 | because the diversity of data is just so much stronger

00:18:21.000 | than what people were going to get

00:18:23.000 | from, like, this Alpaca self-instruct idea,

00:18:25.000 | and it set the bar much higher.

00:18:27.000 | It's only today and in the last, like, few months

00:18:30.000 | or six months for some of them that we're getting data sets

00:18:32.000 | that can replace these.

00:18:34.000 | So you see I mentioned LMSYS-Chat-1M,

00:18:37.000 | which is just a million conversations

00:18:39.000 | from Chatbot Arena, which took a lot of work

00:18:41.000 | to clean out personal information,

00:18:44.000 | and then a project from the Allen Institute for AI,

00:18:46.000 | which is WildChat,

00:18:48.000 | which is really similar to ShareGPT,

00:18:50.000 | but the users gave consent at the start

00:18:53.000 | that their data was going to be collected and released

00:18:55.000 | in exchange for using a language model for free.

00:18:58.000 | So there's a lot of happenstance in the story

00:19:02.000 | where something like this, which is legally gray,

00:19:05.000 | the data is still on Hugging Face,

00:19:06.000 | but it looks kind of odd,

00:19:09.000 | where these little things helped enable the ecosystem,

00:19:12.000 | even though looking back, it's like,

00:19:14.000 | "Oh, we don't know if that should have happened."

00:19:20.000 | Following Vicuna is one called Koala,

00:19:23.000 | also from Berkeley, and, like,

00:19:26.000 | if you look at the time frames, it's pretty obvious

00:19:28.000 | that a lot of these were developed concurrently,

00:19:30.000 | and then the release dates just happened

00:19:32.000 | to be slightly different.

00:19:34.000 | Koala is mostly known for having

00:19:36.000 | kind of a different diverse set of data sets.

00:19:39.000 | They used some from Alpaca.

00:19:41.000 | They used some from ShareGPT again.

00:19:44.000 | They also used Anthropic data that has been released,

00:19:46.000 | and they had some human evaluation from grad students.

00:19:49.000 | So this just added more data diversity,

00:19:51.000 | and the evaluations weren't necessarily better,

00:19:54.000 | but it was an important model that a lot of people noticed

00:19:56.000 | just from these kind of bringing back up

00:19:58.000 | these new data sets that had been

00:20:00.000 | in the literature from years prior.

00:20:03.000 | Something you might ask looking at these slides

00:20:05.000 | is that it's like, "Why weight differences?"

00:20:07.000 | I have all these slides like weight diff

00:20:09.000 | to Llama 7B, weight diff.

00:20:12.000 | Essentially, when Llama was released,

00:20:14.000 | it was released as research only,

00:20:16.000 | and it was distributed to researchers upon request,

00:20:20.000 | and the license prohibited people from uploading

00:20:22.000 | Llama 1 to Hugging Face.

00:20:24.000 | So it was kind of this annoying phase where,

00:20:26.000 | in order to use a model on Hugging Face,

00:20:27.000 | you had to clone it,

00:20:29.000 | and then you had to run a script to convert it

00:20:31.000 | with this kind of delta into the new model

00:20:34.000 | in order to actually use it.
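
A toy sketch of the weight-diff workflow: the lab publishes only the difference from the base weights, and the user adds it back onto a base checkpoint they obtained separately. Representing a checkpoint as a dict of flat float lists is an assumption for illustration (real checkpoints hold tensors), and the parameter names and values are invented.

```python
def make_delta(base, finetuned):
    """What a lab could publish: fine-tuned minus base, per parameter."""
    return {
        name: [f - b for f, b in zip(finetuned[name], base[name])]
        for name in base
    }

def apply_delta(base, delta):
    """What a user runs locally after obtaining the base weights themselves."""
    return {
        name: [b + d for b, d in zip(base[name], delta[name])]
        for name in base
    }

# Toy "checkpoints": parameter name -> flat list of floats (values invented).
base = {"layer0.weight": [0.5, -1.0, 2.0], "layer0.bias": [0.0, 0.25]}
finetuned = {"layer0.weight": [0.75, -1.0, 1.5], "layer0.bias": [0.125, 0.25]}

delta = make_delta(base, finetuned)
recovered = apply_delta(base, delta)
```

The delta alone is not a usable model, which is why this scheme kept the original weights from being redistributed.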

00:20:36.000 | So this was kind of a really frustrating phase

00:20:38.000 | from a user perspective

00:20:39.000 | because it just made experimentation

00:20:41.000 | have one more barrier to entry,

00:20:43.000 | and thankfully, it was changed with Llama2,

00:20:45.000 | but it was really something that many people

00:20:47.000 | dealt with at the time,

00:20:49.000 | and we now today see different license restrictions

00:20:52.000 | on how Llama is used.

00:20:55.000 | I mean, with Llama 3 released today,

00:20:57.000 | essentially, if I fine-tune a model for my research

00:21:00.000 | and I release it at AI2,

00:21:02.000 | Llama 3 needs to be in the name.

00:21:04.000 | So if I wanted to release a new Tulu model,

00:21:07.000 | it would have to be Llama 3 Tulu 4 DPO.

00:21:10.000 | It's like the namings are going to be crazy,

00:21:12.000 | but there's always been restrictions on using Llama weights

00:21:14.000 | or how you share them.

00:21:18.000 | And the final model that I kind of group into this batch

00:21:21.000 | of this real first swing was Dolly.

00:21:24.000 | So Dolly was fine-tuned from a different base model.

00:21:27.000 | It was fine-tuned from the Pythia models from EleutherAI,

00:21:29.000 | which are a suite of early scaling experiments

00:21:32.000 | from EleutherAI, which are still used extensively.

00:21:36.000 | But they added some human-written data to the loop,

00:21:38.000 | which is just really important

00:21:40.000 | because almost all the projects that I'll mention today

00:21:43.000 | talk about synthetic data or data derived from OpenAI,

00:21:46.000 | but there's only a few of them

00:21:48.000 | that actually added new human data to the loop,

00:21:50.000 | and this is what everyone remembered Dolly for.

00:21:53.000 | And a lot of its performance limitations

00:21:55.000 | are probably from the base model Pythia,

00:21:57.000 | which was trained at a time where this type of inference

00:22:00.000 | that people expect wasn't as popular,

00:22:02.000 | and it was kind of before the scaling laws

00:22:04.000 | were thought of differently.

00:22:08.000 | You can kind of see through these

00:22:10.000 | where we're going to start with different model sizes

00:22:12.000 | and different MT-Bench scores.

00:22:14.000 | I'll talk about what MT-Bench is in a few slides.

00:22:16.000 | It's an evaluation tool,

00:22:18.000 | and this is really just to ground you

00:22:20.000 | on how the scores would change over time.

00:22:25.000 | So I have these throughout the talk

00:22:27.000 | as we kind of go through different models

00:22:29.000 | just to show kind of how this --

00:22:31.000 | how the scores continue to progress over time

00:22:33.000 | from one small change to another

00:22:35.000 | as the community gets better at these things.

00:22:38.000 | While I was talking about human data,

00:22:40.000 | so remember, Dolly is all about human data.

00:22:43.000 | Probably still the single busiest human coordination project

00:22:50.000 | for data generation was OpenAssistant.

00:22:52.000 | I think it's easy now, if you get into fine-tuning,

00:22:55.000 | to see the OpenAssistant dataset

00:22:57.000 | and not realize how important it was

00:23:01.000 | to the process of alignment in this whole summer,

00:23:04.000 | and it is still used today.

00:23:06.000 | So essentially, there's this quote on the top,

00:23:08.000 | but the leaders ran a community project

00:23:11.000 | to generate human-written prompts

00:23:14.000 | and these kind of like human-written responses

00:23:18.000 | in many different languages with rating them

00:23:20.000 | so you could use it as preferences.
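
One way rated responses can become preference data is to turn a ranking into pairs, as in the sketch below. The `prompt`/`chosen`/`rejected` field names and the example responses are assumptions for illustration; the real dataset's annotations are richer than a single ranking.

```python
from itertools import combinations

def ranked_to_pairs(prompt, ranked_responses):
    """Turn a best-to-worst ranking into (prompt, chosen, rejected) records."""
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        # combinations() keeps input order, so `better` always ranks higher.
        for better, worse in combinations(ranked_responses, 2)
    ]

# Invented example of one prompt with three human-ranked responses.
pairs = ranked_to_pairs(
    "How do I boil an egg?",
    ["Detailed step-by-step answer", "Short but correct answer", "Off-topic answer"],
)
```

Three ranked responses give three pairs, so a modest number of ratings yields a larger preference set.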

00:23:23.000 | The slide has a bug where it's like

00:23:25.000 | it has over 10,000 annotated trees

00:23:28.000 | and over 1,000 volunteers.

00:23:30.000 | This is still used extensively today.

00:23:32.000 | It will come up again in the talk.

00:23:34.000 | They also released models.

00:23:35.000 | So the first model used on HuggingChat,

00:23:39.000 | which I don't remember the launch date of,

00:23:41.000 | was an OpenAssistant model.

00:23:43.000 | So OpenAssistant was probably

00:23:45.000 | the first majorly successful project of the era,

00:23:47.000 | and the dataset is still used today.

00:23:50.000 | Where I will end this talk is saying

00:23:52.000 | that we need more things like this.

00:23:54.000 | It's like really one of the most important things

00:23:57.000 | is we need more human data in the open.

00:24:01.000 | This is a quick aside.

00:24:02.000 | It's kind of out of the flow of the talk,

00:24:04.000 | but on April 28th of 2023, typo on the slide,

00:24:08.000 | of April 28th of 2023,

00:24:10.000 | StableVicuna was released from CarperAI,

00:24:13.000 | which looks now like the style of training models,

00:24:17.000 | except for the dataset, which is now popular.

00:24:23.000 | They got PPO to work.

00:24:24.000 | They had some human evaluations that were solid.

00:24:27.000 | It was a good chat model.

00:24:28.000 | It wasn't out of distribution,

00:24:31.000 | but Carper AI was really ahead at the time,

00:24:34.000 | and then it seems like priorities

00:24:36.000 | kind of shifted from Stability.

00:24:38.000 | But it's important to know the extent

00:24:40.000 | by which there were still some players

00:24:42.000 | who knew how to do RLHF really early on,

00:24:45.000 | even though they were pretty rare.

00:24:52.000 | This is the last slide of this kind of first chapter

00:24:55.000 | on instruction tuning, which is the idea of QLoRA,

00:24:58.000 | which kind of unlocked a whole new bunch of players

00:25:02.000 | to actually be able to fine-tune models.

00:25:05.000 | So for the quick 60-second overview,

00:25:09.000 | LoRA stands for low-rank adaptation,

00:25:12.000 | which is the idea of you can freeze some model --

00:25:15.000 | you freeze most of the model weights,

00:25:16.000 | and you add new weights to specific layers

00:25:19.000 | that you can then fine-tune,

00:25:21.000 | as if you were fine-tuning the whole model.

00:25:23.000 | You'd use the same approach of instruction data

00:25:26.000 | with question-answering, but it takes much less memory.
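
The LoRA idea described here can be sketched in a few lines. This is a minimal pure-Python illustration under stated assumptions, not the reference implementation: the names `lora_forward`, `lora_a`, `lora_b`, and `scale`, and the 4096-wide layer with rank 16, are hypothetical choices made for the example. The frozen matrix `w_frozen` is never updated; only the two small matrices would receive gradients.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_forward(x, w_frozen, lora_a, lora_b, scale=1.0):
    """Compute x @ W + scale * (x @ A) @ B; only A and B would be trained."""
    base = matmul(x, w_frozen)
    update = matmul(matmul(x, lora_a), lora_b)
    return [[b + scale * u for b, u in zip(brow, urow)]
            for brow, urow in zip(base, update)]

def trainable_params(d_in, d_out, rank):
    """Return (full fine-tuning count, LoRA count) for one weight matrix."""
    return d_in * d_out, rank * (d_in + d_out)

random.seed(0)
d_in, d_out, rank = 8, 8, 2
w = [[random.gauss(0, 1) for _ in range(d_out)] for _ in range(d_in)]
a = [[random.gauss(0, 0.01) for _ in range(rank)] for _ in range(d_in)]
b = [[0.0] * d_out for _ in range(rank)]  # B starts at zero: the adapter is a no-op at init
x = [[1.0] * d_in]

# With B at zero, the adapted layer matches the frozen layer exactly.
assert lora_forward(x, w, a, b) == matmul(x, w)

full, lora = trainable_params(4096, 4096, 16)
print(full, lora)  # 16777216 131072
```

For this assumed layer size the adapter trains 128 times fewer parameters than the full matrix, which is where the memory savings for gradients and optimizer state come from.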

00:25:29.000 | QLoRA was a technique that built upon this

00:25:32.000 | by adding very specific quantization and GPU tricks

00:25:35.000 | to make it so the memory requirements

00:25:37.000 | to fine-tune models were even lower.

00:25:40.000 | Tim Dettmers and team also released this Guanaco model

00:25:44.000 | with it, which was another big step up

00:25:48.000 | in performance of these models.

00:25:49.000 | I have a few more slides on it, on the method.

00:25:51.000 | So you can kind of see on the right this difference,

00:25:54.000 | full fine-tuning, LoRA.

00:25:56.000 | They look similar, where with LoRA you have fewer parameters,

00:25:58.000 | which is kind of what the smaller shapes mean.

00:26:00.000 | In QLoRA, they quantize the base model

00:26:03.000 | that you're propagating gradients through

00:26:05.000 | to save most of the memory.

00:26:08.000 | So this is an approximation of if you're fine-tuning

00:26:11.000 | different model sizes on the top,

00:26:13.000 | so 7 billion, 13 billion, 30 billion,

00:26:15.000 | with full fine-tuning, different amount of bits,

00:26:18.000 | but full fine-tuning versus LoRA versus QLoRA.

00:26:21.000 | And you can kind of see, for reference,

00:26:24.000 | one A100 GPU has about 80 gigabytes of memory,

00:26:28.000 | and these are really hard GPUs to get.

00:26:31.000 | Plenty of consumer GPUs will only

00:26:33.000 | have like 24 to 32 gigabytes of memory.

00:26:37.000 | So you need to use these QLoRA techniques

00:26:39.000 | to actually get the ability to fine-tune models at the 7

00:26:44.000 | or 13 billion parameter size.
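
A rough back-of-the-envelope calculation shows why quantizing the base model matters. The sketch below only counts the memory needed to hold the weights at a given bit width; it deliberately ignores gradients, optimizer state, and activations, so real fine-tuning needs considerably more than these figures. The function name `weight_memory_gb` and the chosen bit widths are assumptions for illustration, not numbers taken from the slide.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

# Model sizes mentioned in the talk, at 16-bit, 8-bit, and 4-bit weights.
for size in (7e9, 13e9, 33e9, 65e9):
    row = {bits: round(weight_memory_gb(size, bits), 1) for bits in (16, 8, 4)}
    print(f"{size / 1e9:.0f}B parameters -> {row} GB (weights only)")
```

Under these assumptions a 7 billion parameter model takes 14 GB for 16-bit weights alone but 3.5 GB at 4 bits, which leaves room on a 24 GB consumer GPU for the adapter, its optimizer state, and activations.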

00:26:48.000 | And like Guanaco did this, and they released 33 billion

00:26:52.000 | and 65 billion parameter LLaMA fine-tunes,

00:26:55.000 | which were clear steps up in the kind of state

00:26:57.000 | of the art at the time.

00:26:59.000 | And they also figured out ways to filter this OpenAssistant

00:27:03.000 | dataset that I mentioned, and this kind

00:27:05.000 | of filtered version of OpenAssistant

00:27:07.000 | is what is still most popular today.

00:27:10.000 | I'm going to kind of pause and skim through the questions

00:27:12.000 | and see if there's anything on that section,

00:27:14.000 | and if not, I'll save the relevant ones for later.

00:27:18.000 | Anyway, I'm going to keep going.

00:27:20.000 | They're great questions, and I appreciate them,

00:27:23.000 | but they're mostly not specific enough

00:27:26.000 | where it's worth the digression.

00:27:28.000 | This Chapter 2 phase is really like

00:27:31.000 | where it seemed like things were a little bit slower

00:27:35.000 | on the ground, but when we look back

00:27:37.000 | at a lot of the things that came out of this time,

00:27:39.000 | like the DPO paper was in this era.

00:27:42.000 | Everyone read it, but we didn't know what to do with it yet,

00:27:44.000 | and the new evaluations are still really used.

00:27:48.000 | Transitioning in, setting the scene for being

00:27:51.000 | not sure if things work, a lot of people

00:27:54.000 | were continuing to try to build on these LoRA methods

00:27:57.000 | and QLoRA methods.

00:27:58.000 | I remember a lot of excitement at Hugging Face

00:28:00.000 | where we were setting up our RLHF pipeline

00:28:03.000 | where we could do RLHF on 7 billion parameter models,

00:28:07.000 | and we could maybe do it on a consumer GPU.

00:28:09.000 | It was really cool to see the loss going down.

00:28:12.000 | It was great to bring more people into the space,

00:28:15.000 | but weeks and weeks would go by, and you're like,

00:28:18.000 | "Why has no one picked up what we released

00:28:21.000 | in the blog post and trained a really good model with it?"

00:28:24.000 | And the kind of consensus now is that these LoRA methods

00:28:30.000 | just have some sort of weird limitation

00:28:32.000 | in how you use them or how the gradients flow

00:28:35.000 | that makes it much, much harder to get a really good model out.

00:28:39.000 | If you only have a certain number of GPUs

00:28:41.000 | such that LoRA is your only option, definitely use it,

00:28:44.000 | but for people that have more GPUs,

00:28:46.000 | figuring out how to scale is normally a better solution

00:28:49.000 | than just using something like LoRA that fits

00:28:51.000 | and is easier in the short term.

00:28:55.000 | Another defining moment of this era was the Llama 2 backlash.

00:29:00.000 | I'm guessing some people remember this,

00:29:02.000 | where the famous line was that people asked Llama

00:29:05.000 | how to kill a Python process, and it would say no,

00:29:08.000 | and this really started a whole bunch of new discussions

00:29:12.000 | around what kind of alignment means

00:29:15.000 | or what models should or should not do.

00:29:18.000 | Here's an example from a paper for a safety evaluation test set

00:29:22.000 | called XSTest, and it's just like,

00:29:25.000 | "Should chat models be safe

00:29:27.000 | or should they follow the instructions that I want?"

00:29:30.000 | And this is a fundamental question.

00:29:32.000 | It'll differ by organization. It'll differ by individual.

00:29:35.000 | And this is the point where this became very serious

00:29:39.000 | and something that people actually had to reckon with

00:29:41.000 | because there were models that were actively disagree--

00:29:45.000 | people were really disagreeing with this specific take.

00:29:48.000 | I don't have any clear solution to it,

00:29:51.000 | but one of the things it led to is this idea of uncensored models.

00:29:55.000 | It's a really popular category on Hugging Face right now

00:30:01.000 | where the idea is you remove filtering.

00:30:03.000 | So if we're using synthetic data and I ask a language model a question,

00:30:07.000 | like if I ask ChatGPT how to make a bomb,

00:30:09.000 | it's going to say, "I'm sorry. I'm a language model.

00:30:11.000 | I shouldn't make this."

00:30:13.000 | And the idea of uncensored models is to remove those points from our kind of--

00:30:19.000 | remove those points from our fine-tuning data set.
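
The filtering step described here can be sketched as a simple pass over a fine-tuning dataset. This is a hypothetical illustration: the `REFUSAL_MARKERS` phrases, the `prompt`/`response` field names, and the helper names are all assumptions, and real projects use their own, usually longer, lists of patterns.

```python
# Hypothetical phrases that suggest a synthetic response is a refusal.
REFUSAL_MARKERS = (
    "i'm sorry",
    "as a language model",
    "i cannot help with",
)

def is_refusal(response: str) -> bool:
    """Return True if the response contains any of the refusal phrases."""
    text = response.lower()
    return any(marker in text for marker in REFUSAL_MARKERS)

def drop_refusals(examples):
    """Keep only the examples whose response does not look like a refusal."""
    return [ex for ex in examples if not is_refusal(ex["response"])]

examples = [
    {"prompt": "How do I kill a Python process?",
     "response": "Use `kill <pid>` or press Ctrl+C in the terminal."},
    {"prompt": "How do I kill a Python process?",
     "response": "I'm sorry, I can't help with that."},
]

filtered = drop_refusals(examples)
print(len(filtered))  # 1
```

Plain string matching like this is crude: it can miss paraphrased refusals and can drop legitimate answers that happen to contain an apology.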

00:30:22.000 | I think there's a lot of confusion over the name

00:30:24.000 | because language models were never--

00:30:26.000 | at this stage really aren't censored to begin with,

00:30:29.000 | but it's really that the data set

00:30:31.000 | and the method for creating these data sets needed more filtering

00:30:35.000 | or they needed some way of becoming unbiased.

00:30:38.000 | So like there's a lot of people now that only build models

00:30:42.000 | to try to make them unbiased against any sort of refusal.

00:30:45.000 | A refusal is when you ask a language model something and it says no.

00:30:48.000 | And this goes on today, and this came out of this Llama 2 thing.

00:30:54.000 | But otherwise, this is a transition period

00:30:56.000 | where there's a lot of good, solid models being trained,

00:31:00.000 | but either they didn't have a lot of documentation,

00:31:02.000 | they didn't have the right release team to splash as big as they should have,

00:31:06.000 | the methods were complicated to implement, or something like this.

00:31:10.000 | So I could run through these, and I remember all these models coming out,

00:31:14.000 | but none of them were really things that are household names like Alpaca is today.

00:31:19.000 | There's the team behind WizardLM,

00:31:22.000 | who created this method called Evol-Instruct,

00:31:24.000 | which is a synthetic data method.

00:31:26.000 | All these things were clearly working for them

00:31:30.000 | based on the models they were generating,

00:31:32.000 | but for whatever reason, the narrative wasn't actually changed.

00:31:36.000 | There's some new datasets, like UltraLM is from OpenBMB in China

00:31:42.000 | that is releasing new datasets, more people training on ShareGPT.

00:31:46.000 | The model called Xwin-LM was the first one to be in a similar ballpark,

00:31:51.000 | and it's also trained with RLHF, so not just that Carper model.

00:31:56.000 | But for whatever reason, these didn't really splash.

00:32:00.000 | And that was this kind of summer after Llama 2

00:32:03.000 | where fine-tuning was chugging along,

00:32:06.000 | but the narrative wasn't changing all that much,

00:32:09.000 | at least from my perspective, but that's why I'm here.

00:32:14.000 | But what was happening in the background,

00:32:16.000 | while the models weren't seeming that different,

00:32:19.000 | is that new evaluation tools were coming out

00:32:22.000 | that ended up kind of being the standard of today.

00:32:25.000 | So you can see the dates here, so May 3rd, Chatbot Arena,

00:32:28.000 | June 8th, AlpacaEval, June 22nd, MT-Bench.

00:32:31.000 | Sometime in early July, the Open LLM Leaderboard.

00:32:34.000 | All of these things were created about the same time,

00:32:37.000 | where there's a desperate need to get some sort of signal

00:32:40.000 | on what our fine-tuned models are doing in the open.

00:32:43.000 | Like, we don't have the capability of paying humans

00:32:46.000 | to compare our responses like they do at Anthropic,

00:32:49.000 | where they're always trying new models on humans.

00:32:51.000 | That's way too expensive.

00:32:53.000 | We need something that you could sit down as an engineer

00:32:56.000 | and get feedback in 10 to 15 minutes.

00:33:00.000 | So I'll kind of run through these in order,

00:33:02.000 | and a lot of these are obvious,

00:33:04.000 | but it's important to take this from the perspective

00:33:06.000 | of what can I use when I'm trying to align models,

00:33:09.000 | and what is an immediate feedback

00:33:11.000 | versus what is kind of this long-term signal.

00:33:14.000 | So Chatbot Arena is obviously fantastic.

00:33:17.000 | Like, everyone looks at this today

00:33:19.000 | as something that is defining corporate strategy,

00:33:25.000 | as defining the biggest language model players.

00:33:28.000 | like if Claude 3 is better than GPT-4.

00:33:31.000 | But if I'm an engineer, A, many small providers

00:33:35.000 | aren't going to get their models in,

00:33:37.000 | and B, it takes -- especially previously,

00:33:40.000 | it used to take weeks to get your model's rating,

00:33:42.000 | but now it takes days.

00:33:44.000 | Like, I need to know what my models are

00:33:47.000 | before I decide to actually release it.

00:33:49.000 | So that's the biggest thing where, like,

00:33:51.000 | I know I need something beyond Chatbot Arena

00:33:54.000 | just for my engineering development.

00:33:57.000 | And this is where, like,

00:33:58.000 | AlpacaEval and MT-Bench really thrive.

00:34:00.000 | So AlpacaEval --

00:34:02.000 | God, the slide formatting got changed,

00:34:04.000 | but I'll just kind of keep rolling through this.

00:34:07.000 | AlpacaEval is the idea of you have a list of prompts

00:34:10.000 | that you compare to a strong other base model,

00:34:14.000 | like OpenAI's text-davinci-003 or GPT-4,

00:34:19.000 | and then you ask a language model which is better.
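
The win-rate style of evaluation described here can be sketched as a loop over prompts. Everything in this block is a stand-in: `candidate`, `baseline`, and `length_biased_judge` are hypothetical toy functions, not real models or the actual AlpacaEval code. The toy judge simply prefers the longer answer, which is an exaggerated version of the length bias this kind of evaluation is known for.

```python
def win_rate(prompts, candidate, baseline, judge):
    """Fraction of prompts where the judge prefers the candidate's answer."""
    wins = 0
    for prompt in prompts:
        answer_a = candidate(prompt)
        answer_b = baseline(prompt)
        if judge(prompt, answer_a, answer_b) == "candidate":
            wins += 1
    return wins / len(prompts)

# Toy stand-ins for the model being trained and the reference model.
def candidate(prompt):
    return f"A long, detailed answer to: {prompt}"

def baseline(prompt):
    return f"Short answer: {prompt}"

# Toy stand-in for the language-model judge: it just prefers longer text.
def length_biased_judge(prompt, answer_a, answer_b):
    return "candidate" if len(answer_a) > len(answer_b) else "baseline"

prompts = ["Explain LoRA.", "What is RLHF?", "Summarize this talk."]
print(win_rate(prompts, candidate, baseline, length_biased_judge))  # 1.0
```

With a judge like this, a model can reach a perfect win rate without being any more helpful, which is one reason a high score is hard to interpret on its own.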

00:34:21.000 | And the data set here is compiled

00:34:23.000 | from all these popular data sets

00:34:25.000 | that I have been talking about so far.

00:34:28.000 | So data sets from OpenAssistant, Vicuna, Koala, Anthropic.

00:34:32.000 | Like, all these data sets that people have been using,

00:34:35.000 | they took the test sets from those,

00:34:37.000 | and that's what AlpacaEval mirrors.

00:34:39.000 | It's kind of a known thing.

00:34:41.000 | It has some limitations

00:34:43.000 | because there's only so many prompts,

00:34:46.000 | and it's like asking a language model

00:34:48.000 | to provide a rating is going to have some ceiling

00:34:51.000 | where we don't know how to compare two really good models.

00:34:55.000 | So it has more samples than MT-Bench,

00:34:57.000 | so there's more --

00:35:00.000 | so there's just kind of smaller error bars,

00:35:02.000 | and it's easier to use

00:35:03.000 | because it's a single-turn generation.

00:35:05.000 | But we've heard about the length bias

00:35:07.000 | for a really long time,

00:35:09.000 | and it's not clear how to interpret these top results.

00:35:12.000 | So this is an older screenshot of the leaderboard,

00:35:14.000 | but what does beating a model 95% of the time

00:35:19.000 | mean to another language model?

00:35:21.000 | Those are the questions that we can't really answer

00:35:23.000 | in the short term.

00:35:25.000 | AlpacaEval 2 came out, which takes steps toward this,

00:35:28.000 | where it compares to GPT-4 rather than text-davinci-003.

00:35:34.000 | text-davinci-003 was an InstructGPT variant.

00:35:37.000 | But at the end of the day,

00:35:39.000 | if, like, GPT-4 is answering these questions

00:35:43.000 | in the Alpaca style really well,

00:35:46.000 | so what does beating GPT-4 exactly mean?

00:35:49.000 | And we need to get more specific in our evaluations

00:35:52.000 | because I don't really know if I care too much

00:35:55.000 | about a 20% or 30% score in AlpacaEval 2

00:35:59.000 | because I don't know what it means.

00:36:00.000 | And this is the opaqueness of all of our evaluations.

00:36:04.000 | We'll see this time and time again

00:36:05.000 | where we don't know what an increase in score means.

00:36:08.000 | That's, like, the next step

00:36:09.000 | after being able to do it easily.

00:36:12.000 | This update was pretty recent.

00:36:16.000 | MT-Bench is pretty similar,

00:36:18.000 | where instead of comparing to one model,

00:36:21.000 | you ask a language model to provide a score

00:36:24.000 | to a list of prompts.

00:36:25.000 | So if I have a model I'm training,

00:36:27.000 | I generate the completion to 80 diverse prompts,

00:36:30.000 | and then I ask GPT-4, "Hey, from 0 to 10,

00:36:33.000 | how good were each of those completions?"
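
The scoring step described here amounts to averaging one judge rating per prompt. The sketch below uses made-up ratings, not real MT-Bench results, and the helper name `summarize_scores` is an assumption. It also reports a standard error to show how much uncertainty is left with only about 80 prompts.

```python
import statistics

def summarize_scores(scores):
    """Return the mean judge rating and its standard error."""
    mean = statistics.mean(scores)
    stderr = statistics.stdev(scores) / len(scores) ** 0.5
    return mean, stderr

# 80 made-up ratings standing in for a judge model's scores, one per prompt.
scores = [9, 8, 7, 9, 6, 8, 10, 7] * 10

mean, stderr = summarize_scores(scores)
print(f"mean rating {mean:.2f} +/- {stderr:.2f} over {len(scores)} prompts")
```

When two models differ by less than roughly that standard error, the average rating alone cannot tell them apart.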

00:36:36.000 | And this is good,

00:36:38.000 | but it runs into the same problem of, like,

00:36:40.000 | what if our model is getting really good?

00:36:42.000 | If our model is getting really good,

00:36:44.000 | it's just, like, it becomes saturated.

00:36:47.000 | Like, GPT-4 only gets to about 9,

00:36:50.000 | and there's only about 80 prompts

00:36:52.000 | in the evaluation set and all these things.

00:36:54.000 | And it's just --

00:36:56.000 | And one of the nuanced points

00:36:57.000 | is that there's actually a variance.

00:36:59.000 | So even if you set the temperature to 0,

00:37:01.000 | GPT-4 versions change.

00:37:03.000 | Your own generations from your model

00:37:05.000 | you're trying to train can change.

00:37:08.000 | And this makes it better where it's like,

00:37:12.000 | "Okay, I can tell if a model was really bad,

00:37:14.000 | if MT-Bench and AlpacaEval have really low scores."

00:37:17.000 | But it's hard to, like --

00:37:19.000 | It's still --

00:37:20.000 | We have this goal for a precise evaluation.

00:37:23.000 | So, like, in pre-training,

00:37:25.000 | we have MT-Bench and HellaSwag

00:37:28.000 | and all of the --

00:37:29.000 | Or, sorry.

00:37:30.000 | In pre-training, we have, like, MMLU and HellaSwag

00:37:32.000 | and all these things that people can look at

00:37:34.000 | and average over 10 tasks.

00:37:36.000 | And if you get, like, a 2% improvement on average,

00:37:38.000 | you're doing great.

00:37:39.000 | But we don't have this clear indicator

00:37:41.000 | in alignment evaluation.

00:37:44.000 | The Open LLM Leaderboard was the same,

00:37:46.000 | where it's --

00:37:48.000 | This came out of the team

00:37:49.000 | I was working on at Hugging Face,

00:37:50.000 | where we were just trying to evaluate

00:37:52.000 | more models to get more signal,

00:37:55.000 | which was that we needed to know

00:37:57.000 | what our competitors were doing

00:37:58.000 | and get some ballpark estimate.

00:38:00.000 | And what this grew into

00:38:01.000 | is this whole kind of, like,

00:38:02.000 | ecosystem-supporting discovery tool

00:38:05.000 | just because, like,

00:38:06.000 | getting any signal is so useful.

00:38:08.000 | So, this is where we were starting

00:38:09.000 | with evaluation,

00:38:10.000 | which was just, like, no signal.

00:38:11.000 | And why this leaderboard was so successful

00:38:13.000 | is because it gave everyone access

00:38:15.000 | to a little bit more signal

00:38:16.000 | on the models that they're evaluating,

00:38:18.000 | but it didn't really solve

00:38:20.000 | any of the fundamental problems.

00:38:21.000 | And it didn't show us that, like,

00:38:23.000 | doing RLHF on models

00:38:24.000 | would actually make the scores go up.

00:38:26.000 | It's starting to get better today,

00:38:27.000 | but that's, like, a year on

00:38:29.000 | from the launch of this leaderboard.

00:38:32.000 | So, this is, like --

00:38:33.000 | These problems are still --

00:38:34.000 | This is talking about a section

00:38:36.000 | from July of 2023,

00:38:38.000 | and it seems like the things

00:38:39.000 | that if I were to go into --

00:38:40.000 | like, go into work and talk to people

00:38:42.000 | about what we're going to do

00:38:43.000 | with our new models,

00:38:44.000 | like, these are the same questions

00:38:45.000 | that we're still asking,

00:38:46.000 | which is why it's --

00:38:48.000 | These evaluation tools

00:38:50.000 | are still so useful,

00:38:51.000 | and it's why people still talk

00:38:52.000 | about AlpacaEval,

00:38:53.000 | but it shows how much of an opportunity

00:38:54.000 | there still is.

00:38:57.000 | So, this is kind of a summary

00:38:58.000 | of what I was talking about.

00:38:59.000 | It's, like, how easy is it

00:39:01.000 | to use these evaluations?

00:39:03.000 | Like, Chatbot Arena is everything.

00:39:05.000 | Like, Andrej Karpathy tweets about it,

00:39:07.000 | and it's great,

00:39:08.000 | and you can go there

00:39:09.000 | and you can use models,

00:39:10.000 | but, like, I don't know

00:39:12.000 | how to make sense of that

00:39:14.000 | as if I'm trying to sit down

00:39:15.000 | every day and write code.

00:39:17.000 | And AlpacaEval and MT-Bench

00:39:19.000 | mostly solve this by being cheap

00:39:21.000 | and pretty accessible,

00:39:23.000 | but I really, really think

00:39:24.000 | there's a huge opportunity here

00:39:26.000 | to come out with more.

00:39:27.000 | So, a colleague at AI2

00:39:28.000 | launched WildBench,

00:39:29.000 | which is a good tool

00:39:31.000 | that kind of fits in.

00:39:33.000 | It's like a Chatbot Arena

00:39:34.000 | AlpacaEval hybrid,

00:39:35.000 | and you can use it

00:39:36.000 | a little bit faster.

00:39:37.000 | It's, like, how are we going

00:39:38.000 | to continue to push this along

00:39:40.000 | is a great question,

00:39:41.000 | and I would love to hear

00:39:42.000 | what people think.

00:39:46.000 | We'll take another pause.

00:39:47.000 | I think we're getting good questions

00:39:50.000 | in the chat around RLHF

00:39:53.000 | and other things.

00:39:55.000 | To what extent do aligned models

00:39:57.000 | actually reason about

00:39:59.000 | whether user intent is malicious

00:40:01.000 | rather than perform target detection

00:40:03.000 | to avoid unsafe topics?

00:40:06.000 | This is a question

00:40:07.000 | that I wanted to read

00:40:08.000 | because it kind of gets

00:40:09.000 | at this model versus system topic.

00:40:11.000 | So, when ChatGPT was released

00:40:13.000 | on day one,

00:40:14.000 | it has an output filter

00:40:16.000 | that does moderation.

00:40:17.000 | The language model

00:40:18.000 | that is instruction tuned

00:40:19.000 | or RLHF tuned

00:40:20.000 | generates a bunch of text,

00:40:22.000 | and then a separate model

00:40:23.000 | says yes or no,

00:40:25.000 | and that's, like,

00:40:26.000 | where it actually does detection,

00:40:28.000 | and with the release of Llama 3,

00:40:30.000 | there's another model

00:40:31.000 | that's called, like, Llama Guard,

00:40:33.000 | and this is a classifier

00:40:34.000 | which will take this text,

00:40:36.000 | do the moderation,

00:40:37.000 | and say which type

00:40:38.000 | of unsafe topic it is.
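
The model-versus-system split described here can be sketched as a generator followed by a separate moderation step. Both pieces below are toy stand-ins: `generate` just echoes the prompt, and `moderate` uses hypothetical keyword lists in place of a learned classifier. Neither reflects how any production system is actually implemented.

```python
from typing import Optional

# Hypothetical categories and keywords standing in for a learned classifier.
UNSAFE_KEYWORDS = {
    "weapons": ("bomb", "explosive"),
    "malware": ("ransomware",),
}

def generate(prompt: str) -> str:
    """Stand-in for the instruction-tuned or RLHF-tuned language model."""
    return f"Here is a response to: {prompt}"

def moderate(text: str) -> Optional[str]:
    """Return the name of the unsafe category the text falls into, or None."""
    lowered = text.lower()
    for category, keywords in UNSAFE_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return category
    return None

def respond(prompt: str) -> str:
    """Generate text first, then let the separate filter decide whether to show it."""
    draft = generate(prompt)
    category = moderate(draft)
    if category is not None:
        return f"[blocked: {category}]"
    return draft

print(respond("how to bake bread"))
print(respond("how to make a bomb"))
```

The point of the structure is that the yes-or-no decision lives in `moderate`, outside the generating model, so the two parts can be changed independently.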

00:40:40.000 | The actual model

00:40:41.000 | that's generating

00:40:42.000 | does no reasoning

00:40:43.000 | over kind of what is actually

00:40:46.000 | an unsafe topic.

00:40:50.000 | So, I'll come back to other ones.

00:40:51.000 | I'm going to do some discussions

00:40:52.000 | about RLHF right now,

00:40:56.000 | so this will kind of give

00:40:57.000 | good grounds for

00:40:58.000 | where we can continue

00:40:59.000 | some of these discussions on.

00:41:02.000 | There's ORPO or REINFORCE.

00:41:04.000 | I don't cover all of them

00:41:05.000 | in the lecture,

00:41:06.000 | but I kind of lead on

00:41:07.000 | to why we would talk about them.

00:41:13.000 | So, this chapter

00:41:14.000 | is when I started

00:41:15.000 | to get validation

00:41:16.000 | as an RL researcher

00:41:17.000 | that being opportunistic

00:41:19.000 | and going to work

00:41:20.000 | in language models

00:41:21.000 | was actually a good idea.

00:41:23.000 | For a lot of this,

00:41:24.000 | there was a lot of uncertainty

00:41:27.000 | over if people

00:41:29.000 | in this kind of open ecosystem

00:41:31.000 | were even going to be able

00:41:32.000 | to use RLHF at all

00:41:34.000 | or if being an "RLHF researcher"

00:41:36.000 | for me meant

00:41:37.000 | I was going to do

00:41:38.000 | instruction fine-tuning

00:41:39.000 | and talk about evals

00:41:40.000 | and never think about RL again.

00:41:43.000 | It turned out to be wrong.

00:41:47.000 | I'm going to review

00:41:48.000 | some RL fundamentals

00:41:49.000 | just to kind of make sure

00:41:50.000 | we're talking the same language

00:41:51.000 | as we talk about this,

00:41:52.000 | and this will lead

00:41:53.000 | into direct preference optimization.

00:41:55.000 | So, there's a reason

00:41:56.000 | why I'm doing math.

00:41:57.000 | I know this is not

00:41:58.000 | a normal lecture,

00:41:59.000 | but here is the equation

00:42:01.000 | where you'll see this

00:42:02.000 | in RLHF papers.

00:42:03.000 | This is what we're optimizing

00:42:04.000 | when we're optimizing RLHF.

00:42:07.000 | It looks kind of nebulous here.

00:42:09.000 | I'll break it down.

00:42:10.000 | So, on the left side,

00:42:11.000 | we're really maximizing

00:42:12.000 | with respect to some policy, pi,

00:42:15.000 | this reward that is parameterized

00:42:17.000 | by a network, phi,

00:42:19.000 | and we have a penalty

00:42:20.000 | that is this kind of KL term,

00:42:22.000 | which is the distance

00:42:23.000 | from our policy to some reference.

00:42:25.000 | We want to increase reward,

00:42:27.000 | but we want to constrain the model

00:42:29.000 | so that this kind of optimization

00:42:31.000 | doesn't go too far.
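
Written out, the objective described here is usually given in the following form. The slide itself is not reproduced in the transcript, so this is the common formulation from the RLHF literature rather than a copy of it; the prompt distribution \mathcal{D}, the reference policy \pi_{\mathrm{ref}}, and the penalty weight \beta are notational assumptions.

```latex
\max_{\pi} \;
\mathbb{E}_{x \sim \mathcal{D},\; y \sim \pi(\cdot \mid x)}
\big[\, r_{\phi}(x, y) \,\big]
\;-\;
\beta \, D_{\mathrm{KL}}\!\big(\, \pi(\cdot \mid x) \,\|\, \pi_{\mathrm{ref}}(\cdot \mid x) \,\big)
```

The first term is the reward from the learned network r_{\phi}, and the second is the KL penalty that keeps the policy \pi close to the reference model.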

00:42:34.000 | And the primary questions

00:42:35.000 | when doing this is,

00:42:37.000 | how do we implement

00:42:38.000 | a good reward function

00:42:39.000 | and how do we optimize the reward?

00:42:41.000 | This is a really RL-centric way

00:42:43.000 | of doing it, which is like,

00:42:44.000 | if you give me a reward function,

00:42:47.000 | I can optimize it.

00:42:48.000 | And the classic RL idea

00:42:50.000 | was I'm in an environment,

00:42:51.000 | and that environment has

00:42:52.000 | the reward function built in.

00:42:54.000 | In RLHF, we're designing

00:42:56.000 | our own reward function.

00:42:57.000 | So this adds a lot of weirdness

00:43:00.000 | to the actual optimization

00:43:02.000 | that we're doing.

00:43:04.000 | And what we do is

00:43:06.000 | to get this reward function,

00:43:08.000 | is we learn what is called

00:43:09.000 | a preference or reward model.

00:43:10.000 | And the most popular way to do this

00:43:12.000 | is to take a language model

00:43:16.000 | that's kind of predicting

00:43:17.000 | this separation of two preferences.

00:43:20.000 | This is called a Bradley-Terry model,

00:43:22.000 | which goes back to some economics.

00:43:24.000 | But the key idea is that

00:43:25.000 | the reward will be proportional

00:43:27.000 | to the probability

00:43:29.000 | that the text I have

00:43:30.000 | would be chosen over

00:43:31.000 | any other arbitrary text.

00:43:33.000 | It quickly sounds really theory-like,

00:43:35.000 | but it outputs a scalar,

00:43:36.000 | which is now a reward function

00:43:38.000 | and is based on this pairwise data.
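
The Bradley-Terry idea can be sketched with two scalar rewards. This is a minimal illustration, assuming the reward model has already produced one number for the chosen text and one for the rejected text; the function names are hypothetical.

```python
import math

def preference_probability(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry probability that the chosen text beats the rejected one."""
    return 1.0 / (1.0 + math.exp(-(r_chosen - r_rejected)))

def reward_model_loss(r_chosen: float, r_rejected: float) -> float:
    """Negative log-likelihood of the observed preference under Bradley-Terry."""
    return -math.log(preference_probability(r_chosen, r_rejected))

print(preference_probability(0.0, 0.0))  # 0.5
print(reward_model_loss(2.0, 0.0) < reward_model_loss(0.0, 2.0))  # True
```

Only the difference between the two rewards matters, so the pairwise data pins down a relative scale rather than an absolute one.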

00:43:42.000 | So the idea is with this equation,

00:43:45.000 | what if we just use gradient ascent

00:43:46.000 | on this equation?

00:43:48.000 | And instead of trying to learn

00:43:50.000 | a preference model

00:43:51.000 | and learn this R,

00:43:53.000 | what if we just use

00:43:54.000 | gradient ascent directly?

00:43:55.000 | This is really what

00:43:56.000 | direct preference optimization

00:43:57.000 | is doing.

00:43:58.000 | There's a bunch of math in here

00:43:59.000 | to get what this R is,

00:44:01.000 | but this was released back in May.

00:44:03.000 | So we've already moved on

00:44:04.000 | months ahead.

00:44:05.000 | This chapter starts in kind of

00:44:06.000 | late September, October.

00:44:08.000 | Back in May,

00:44:09.000 | when we're still talking

00:44:10.000 | about Open Assistant,

00:44:11.000 | this DPO paper came out.

00:44:13.000 | It's a fantastic paper.

00:44:14.000 | If you haven't read it,

00:44:15.000 | it's a great way to learn

00:44:16.000 | about language model math.

00:44:18.000 | It's worth reading,

00:44:20.000 | but the core idea is like,

00:44:21.000 | why are we spending all this time

00:44:24.000 | learning a reward model

00:44:25.000 | when we can just use gradient ascent

00:44:27.000 | and solve for the loss function?

00:44:30.000 | Some key ideas to think about

00:44:31.000 | with DPO is that DPO

00:44:34.000 | is extremely simple to implement.

00:44:36.000 | On the right side here

00:44:37.000 | is the example code

00:44:38.000 | from the DPO paper

00:44:40.000 | where it's like,

00:44:41.000 | as long as you have access

00:44:42.000 | to the log probs from a model,

00:44:44.000 | which is a very core thing

00:44:45.000 | for training language models,

00:44:47.000 | you can compute the DPO loss.
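
To show how little is needed, here is a scalar sketch of the DPO loss for a single preference pair. It is not the example code from the paper, which is written for batches of tensors. It assumes you already have the summed log probabilities of the chosen and rejected completions under both the policy and the reference model; the value `beta=0.1` and all function names are assumptions.

```python
import math

def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def implicit_reward(policy_logp: float, ref_logp: float, beta: float = 0.1) -> float:
    """Implicit reward: beta times the log-probability ratio of policy to reference."""
    return beta * (policy_logp - ref_logp)

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, computed from four log probabilities."""
    margin = (implicit_reward(policy_chosen_logp, ref_chosen_logp, beta)
              - implicit_reward(policy_rejected_logp, ref_rejected_logp, beta))
    return -log_sigmoid(margin)

# Policy identical to the reference: the margin is zero and the loss is log(2).
print(dpo_loss(-5.0, -7.0, -5.0, -7.0))

# Policy raises the chosen log prob and lowers the rejected one: the loss drops.
print(dpo_loss(-4.0, -8.0, -5.0, -7.0))
```

The loss only falls when the policy widens the gap between chosen and rejected completions relative to the reference model.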

00:44:50.000 | Because of this,

00:44:51.000 | because the loss function

00:44:52.000 | is at a nice abstraction,

00:44:54.000 | it scales nicely

00:44:55.000 | with existing libraries.

00:44:57.000 | And what it's actually doing

00:44:59.000 | is training an implicit

00:45:00.000 | reward function.

00:45:01.000 | So the reward is a function

00:45:02.000 | of the log probs.

00:45:04.000 | I don't have the equation here

00:45:05.000 | because it quickly becomes

00:45:06.000 | a rabbit hole.

00:45:08.000 | But whatever the whole DPO

00:45:10.000 | versus PPO debate means,

00:45:12.000 | or ORPO,

00:45:14.000 | I don't remember

00:45:15.000 | what the paper title is.

00:45:18.000 | We're going to see

00:45:19.000 | a lot of these things

00:45:20.000 | because it's simple

00:45:21.000 | and it scales well.

00:45:22.000 | That doesn't necessarily mean

00:45:23.000 | the fundamental limits are higher,

00:45:25.000 | but sometimes it doesn't matter

00:45:26.000 | if the limits are higher

00:45:28.000 | if it's easier to make progress

00:45:29.000 | on something.

00:45:30.000 | Because it feels better

00:45:31.000 | when progress is being made.

00:45:33.000 | So that's really a core thing

00:45:34.000 | is we'll keep seeing these models

00:45:35.000 | and we are.

00:45:37.000 | And there's this whole debate

00:45:38.000 | that has gone on,

00:45:40.000 | kind of crushing a whole bunch

00:45:41.000 | of these questions

00:45:42.000 | by redirecting them

00:45:44.000 | in a very political manner.

00:45:46.000 | But it's like,

00:45:47.000 | should we use REINFORCE?

00:45:48.000 | What about PPO?

00:45:49.000 | What about other things?

00:45:51.000 | They're very different styles

00:45:53.000 | of optimization.

00:45:54.000 | So in one half,

00:45:56.000 | we're using RL update rules,

00:45:58.000 | which is ultimately about

00:45:59.000 | learning a value function

00:46:00.000 | and then learning to update,

00:46:02.000 | taking gradient steps

00:46:03.000 | with respect to that value function.

00:46:05.000 | In DPO,

00:46:06.000 | we're taking gradient steps

00:46:07.000 | directly from the probabilities

00:46:08.000 | of the language model.

00:46:09.000 | They're very different

00:46:10.000 | optimization regimes.

00:46:12.000 | And there's this great meme

00:46:13.000 | where like all of this,

00:46:14.000 | like there was a month

00:46:15.000 | where the whole NLP Twitter

00:46:17.000 | was just arguing about this,

00:46:19.000 | but both of them

00:46:21.000 | are continuing to progress

00:46:24.000 | and that is good.

00:46:25.000 | It will not just be one

00:46:26.000 | or the other.

00:46:30.000 | So what really made this debate

00:46:32.000 | kick into gear

00:46:33.000 | was this release

00:46:34.000 | of the Zephyr beta model

00:46:35.000 | from Hugging Face.

00:46:37.000 | It's after I left Hugging Face

00:46:38.000 | with the team I was on.

00:46:40.000 | And it was the first model

00:46:41.000 | to make a splash with DPO

00:46:42.000 | and it was a big step up

00:46:43.000 | in how models were perceived.

00:46:45.000 | This model was added

00:46:46.000 | to like the You.com search engine.

00:46:47.000 | People were using it

00:46:48.000 | for all sorts of crazy things.

00:46:49.000 | So it just felt really good to use.

00:46:51.000 | It was building on this

00:46:52.000 | better base model.

00:46:53.000 | Mistral had come out.

00:46:54.000 | A new data set,

00:46:55.000 | this UltraFeedback dataset

00:46:57.000 | that I mentioned

00:46:58.000 | is still one of the core

00:46:59.000 | data sets used today

00:47:00.000 | when we're kind of

00:47:01.000 | practicing alignment.

00:47:02.000 | This was back in September,

00:47:04.000 | October that this model came out.

00:47:06.000 | One of the core things

00:47:07.000 | to getting DPO to work

00:47:09.000 | was using really low learning rates

00:47:11.000 | like 5e-7.

00:47:13.000 | There's memes about 3e-4

00:47:15.000 | being the only learning rate

00:47:16.000 | you need to do deep learning

00:47:18.000 | and changing it being kind of a joke.

00:47:20.000 | DPO is the case where

00:47:21.000 | that is not even remotely true.
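
To put the two numbers side by side, here is a small arithmetic sketch. The variable names are hypothetical; only the two learning rates come from the talk.

```python
# Learning rate quoted in the talk as what made DPO work.
dpo_learning_rate = 5e-7
# The "only learning rate you need" from the meme.
meme_learning_rate = 3e-4

# How many times smaller the DPO learning rate is.
ratio = meme_learning_rate / dpo_learning_rate

if __name__ == "__main__":
    print(f"DPO learning rate is about {ratio:.0f}x smaller")
```

The gap is roughly a factor of 600, which is why carrying over a default learning rate from other deep learning work is unlikely to work here.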

00:47:23.000 | And then you can see

00:47:24.000 | the MT-Bench scores again

00:47:25.000 | continuing to rise.

00:47:27.000 | So this is like a validation proof

00:47:28.000 | that DPO works

00:47:29.000 | that came four months

00:47:31.000 | after the paper was released.

00:47:33.000 | That delay is something

00:47:34.000 | that nobody expected.

00:47:35.000 | We were kind of losing hope

00:47:36.000 | on DPO at many times

00:47:38.000 | and now look at where it is.

00:47:40.000 | And then when I joined AI2,

00:47:42.000 | they were already working

00:47:43.000 | on this project

00:47:44.000 | and I had just helped

00:47:45.000 | to kind of get it across the line.

00:47:46.000 | It's like the classic advisor thing

00:47:48.000 | where sometimes it's just easier [...]

00:47:50.000 | [It] is the first model to scale DPO

00:47:52.000 | to 70 billion parameters.

00:47:53.000 | The last question was,

00:47:54.000 | oh yeah, DPO works on small models.

00:47:56.000 | Will anyone ever use it

00:47:57.000 | on a big model?

00:47:58.000 | The answer is yes.

00:47:59.000 | And it's like built on the same recipe

00:48:01.000 | as Zephyr with a little bit

00:48:03.000 | different instruction tuning data

00:48:04.000 | sets, but scores continued to climb.

00:48:08.000 | This model was so close

00:48:11.000 | to beating GPT-3.5 on Chatbot Arena.

00:48:14.000 | It was like a couple Elo points below.
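
For a sense of how small a couple of Elo points is, here is a sketch using the textbook Elo expected-score formula. The ratings are made-up placeholders, and how Chatbot Arena actually computes its leaderboard ratings is not covered here.

```python
def elo_expected_score(rating_a, rating_b):
    """Expected score of A against B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


if __name__ == "__main__":
    # Hypothetical ratings two points apart.
    stronger, weaker = 1002, 1000
    print(f"{elo_expected_score(stronger, weaker):.4f}")
```

Under this formula a two-point gap corresponds to an expected head-to-head score only slightly above 50%, so the two models would be close to indistinguishable in pairwise votes.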

00:48:16.000 | So we didn't get the title

00:48:17.000 | of being the first ones to do that,

00:48:19.000 | but open models were starting

00:48:21.000 | to get that kind of chatty behavior

00:48:23.000 | that for so long had eluded them

00:48:25.000 | because we hadn't figured out scale

00:48:27.000 | because we hadn't figured

00:48:28.000 | out these data sets.

00:48:29.000 | So it was great progress.

00:48:30.000 | Very important and kind

00:48:32.000 | of major transition

00:48:33.000 | in my career where now it's like,

00:48:34.000 | okay, RLHF methods really can work.

00:48:39.000 | And these weren't just,

00:48:40.000 | I was not the only one touching

00:48:41.000 | things that did this.

00:48:43.000 | A couple other projects

00:48:44.000 | that are really important.

00:48:45.000 | So NVIDIA had SteerLM

00:48:47.000 | where SteerLM was collecting

00:48:50.000 | feedback data where there

00:48:51.000 | was attributes on it,

00:48:52.000 | like how helpful the message was,

00:48:54.000 | how concise the message was.

00:48:56.000 | And they did a bunch of fine tuning

00:48:57.000 | and released good, very solid models.

00:49:01.000 | And they also showed that PPO

00:49:02.000 | was better than DPO,

00:49:03.000 | which is interesting.

00:49:05.000 | And then Berkeley came out

00:49:06.000 | with this Starling-LM Alpha

00:49:08.000 | where they had a new preference

00:49:10.000 | data set, Nectar,

00:49:12.000 | which is still looked at today.

00:49:14.000 | And then they also used this kind

00:49:15.000 | of PPO method after training

00:49:17.000 | a reward model.

00:49:18.000 | And both of these came out

00:49:19.000 | about the same time,

00:49:20.000 | and they're like, huh,

00:49:21.000 | DPO isn't doing as well for us.

00:49:23.000 | The models are really good.

00:49:25.000 | Recently, the second Starling

00:49:26.000 | model came out.

00:49:28.000 | Its reward model is very strong

00:49:29.000 | in my testing.

00:49:31.000 | It's a 7B model that's almost

00:49:32.000 | reaching GPT levels in Chatbot Arena.

00:49:35.000 | It's crazy how fast

00:49:36.000 | these models are going.

00:49:38.000 | But we still get a lot of models

00:49:39.000 | that are both with PPO or with DPO.

00:49:42.000 | It's really not one or the other

00:49:44.000 | at this point.

00:49:52.000 | Okay.

00:49:53.000 | I think this is a reasonable time

00:49:54.000 | for me to take a couple

00:49:55.000 | of these questions.

00:49:56.000 | I might come back to them

00:49:57.000 | in more slides.

00:49:58.000 | But someone asked,

00:50:01.000 | "Is there a particular alignment

00:50:02.000 | method that I use?"

00:50:05.000 | This is teasing a paper,

00:50:07.000 | but there was a recent paper

00:50:08.000 | that came out where --

00:50:10.000 | I don't remember the group.

00:50:11.000 | I can find it later.

00:50:13.000 | But they did what they called

00:50:14.000 | a systematic study of PPO and DPO,

00:50:17.000 | and they showed that PPO was better.

00:50:19.000 | I will say that in the experiments

00:50:21.000 | that I'm seeing at Allen AI,

00:50:22.000 | I'm also seeing PPO to be stronger,

00:50:25.000 | and we hope to release

00:50:26.000 | this stuff soon.

00:50:28.000 | It's not one crushes the other.

00:50:31.000 | It's that we're seeing

00:50:32.000 | that for some reason,

00:50:34.000 | PPO is just getting

00:50:35.000 | a bit more performance.

00:50:37.000 | And then the logical question is,

00:50:38.000 | "Why not REINFORCE?"

00:50:40.000 | which is another one

00:50:41.000 | of these questions.

00:50:42.000 | I would love to try it.

00:50:43.000 | It's just like we have the code

00:50:44.000 | that we have,

00:50:45.000 | and we don't want to touch things

00:50:46.000 | that are working well,

00:50:47.000 | and there's just so few people

00:50:49.000 | that are kind of working

00:50:50.000 | in this space,

00:50:51.000 | which I'm like,

00:50:52.000 | "Let's get more people

00:50:53.000 | working on these things,"

00:50:55.000 | because there's so few people

00:50:57.000 | that can answer all these questions.

00:50:58.000 | So there's another question

00:50:59.000 | that says like,

00:51:00.000 | "Some say REINFORCE can work

00:51:02.000 | as well as if not better than PPO."

00:51:05.000 | It probably can.

00:51:07.000 | It comes down to your infrastructure,

00:51:09.000 | carefully fine-tuning it,

00:51:10.000 | what people are excited about,

00:51:12.000 | and a lot of luck.

00:51:13.000 | So we'll see these continue

00:51:14.000 | to play out throughout the year,

00:51:17.000 | but it's complicated.

00:51:22.000 | I'll come back

00:51:23.000 | to the Llama 3 question.

00:51:24.000 | I have one slide for that

00:51:26.000 | in a little bit,

00:51:28.000 | but really this modern ecosystem

00:51:29.000 | is how investment

00:51:32.000 | in releasing open models

00:51:34.000 | that people can use

00:51:35.000 | is continuing to grow

00:51:37.000 | into 2024.

00:51:39.000 | I think there's always been

00:51:40.000 | this tenuous period of like,

00:51:42.000 | there's only a few people

00:51:43.000 | releasing these aligned models.

00:51:44.000 | There's these important people

00:51:46.000 | in the ecosystem

00:51:47.000 | that are just doing this

00:51:48.000 | because they want to,

00:51:49.000 | and it's for fun.

00:51:50.000 | They might have a day job,

00:51:51.000 | and it's like,

00:51:52.000 | how long can this go on?

00:51:53.000 | What are the limitations on this?

00:51:55.000 | But in 2024,

00:51:56.000 | we've really seen more companies

00:51:59.000 | come into the space,

00:52:00.000 | and someone drew Llama 3

00:52:02.000 | on the screen.

00:52:03.000 | It's talking to coworkers,

00:52:04.000 | and they're like,

00:52:05.000 | "Yeah, you're going to need

00:52:06.000 | to keep adding models.

00:52:07.000 | You're never going to be able

00:52:08.000 | to give this lecture."

00:52:09.000 | Yeah, it's a losing battle.

00:52:10.000 | I know, but there's just

00:52:12.000 | way more types of models.

00:52:15.000 | So I get away with not having Llama 3

00:52:17.000 | on this specific slide

00:52:18.000 | because I'm talking about

00:52:19.000 | diversity of players and models,

00:52:21.000 | not just the fact that

00:52:22.000 | there are more great models.

00:52:24.000 | So there's interesting models

00:52:25.000 | like this one Genstruct

00:52:26.000 | from Nous Research

00:52:27.000 | in the last few months

00:52:28.000 | where it's like

00:52:29.000 | a specifically fine-tuned model

00:52:31.000 | for rephrasing any text

00:52:33.000 | into instructions.

00:52:34.000 | So if you have a book,

00:52:35.000 | and you want a model

00:52:36.000 | to be able to answer

00:52:37.000 | questions about this,

00:52:38.000 | why don't we just throw it

00:52:39.000 | at this rephrasing question,

00:52:41.000 | this rephrasing model?

00:52:42.000 | And the teams that I work on

00:52:44.000 | at AI2

00:52:45.000 | are trying to release

00:52:46.000 | instruction models