Lesson 10: Deep Learning Foundations to Stable Diffusion, 2022

Chapters

0:0 Introduction0:35 Showing student’s work over the past week.

6:4 Recap Lesson 9

12:55 Explaining “Progressive Distillation for Fast Sampling of Diffusion Models” & “On Distillation of Guided Diffusion Models”

26:53 Explaining “Imagic: Text-Based Real Image Editing with Diffusion Models”

33:53 Stable diffusion pipeline code walkthrough

41:19 Scaling random noise to ensure variance

50:21 Recommended homework for the week

53:42 What are the foundations of stable diffusion? Notebook deep dive

66:30 Numpy arrays and PyTorch Tensors from scratch

88:28 History of tensor programming

97:0 Random numbers from scratch

102:41 Important tip on random numbers via process forking

00:00:00.000 | Hi, everybody, and welcome back. This is lesson 10 of practical deep learning for coders.

00:00:09.920 | It's the second lesson in part two, which is where we're going from deep learning foundations

00:00:14.880 | to stable diffusion. So before we dive back into our notebook, I think first of all, let's

00:00:20.700 | take a look at some of the interesting work that students in the course have done over

00:00:24.640 | the last week. I'm just going to show a small sample of what's on the forum. So check out

00:00:29.760 | the share your work here thread on the forum for many, many, many more examples. So Puro

00:00:38.400 | did some something interesting, which is to create a bunch of images of doing a linear

00:00:46.500 | interpolation. I mean, details actually spherical linear interpolation, but it doesn't matter.

00:00:51.160 | Doing a linear interpolation between two different latent, you know, noisy, you know, latent noise

00:00:56.280 | starting points for an auto picture and then showed all the intermediate results that came

00:01:03.720 | out pretty nice and they did something similar starting with an old car prompt and going

00:01:08.640 | to a modern Ferrari prompt. I can't remember exactly what the prompts were, but you can

00:01:12.720 | see how as it kind of goes through that latent space, it actually is changing the image that's

00:01:20.380 | coming out. I think that's really cool. And then I love the way Namrata took that and

00:01:26.000 | took it to another level in a way, which is starting with a dinosaur and turning into

00:01:30.240 | a bird. And this is a very cool intermediate picture of one of the steps along the way.

00:01:37.480 | The dino bird. I love it. Dino chick. Fantastic. So much creativity on the forums. I loved

00:01:48.480 | this. John Richmond took his daughter's dog and turned it gradually into a unicorn. And

00:01:57.640 | I thought this one along the way actually came out very, very nicely. I think this is

00:02:02.240 | adorable. And I suspect that John has won the dad of the year or dad of the week, maybe

00:02:08.080 | award this week for this fantastic project. And Maureen did something very interesting,

00:02:18.920 | which is she took John O's parrot image from his lesson and tried bringing it across to

00:02:28.840 | various different painter's styles. And so her question was, anyone want to guess the

00:02:33.040 | artists in the prompts? So I'm just going to let you pause it before I move on. If you

00:02:40.000 | want to try to guess. And there they are. Most of them pretty obvious, I guess. I think

00:02:51.320 | it's so funny that Frida Kahlo appears in all of her paintings. So the parents actually

00:02:56.840 | turned into Frida Kahlo. All right. Not all of her paintings, but all of her famous ones.

00:03:01.960 | So the very idea of Frida Kahlo painting without her in it is so unheard of that the parrots

00:03:05.960 | turned into Frida Kahlo. And I like this Jackson Pollock. It's still got the parrot going on

00:03:11.340 | there. So that's a really lovely one, Maureen. Thank you. And this is a good reminder to

00:03:19.760 | make sure that you check out the other two lesson videos. So she was working with John

00:03:27.280 | Ono's stable diffusion lesson. So be sure to check that out if you haven't yet. It is

00:03:35.200 | available on the course web page and on the forums and has lots of cool stuff that you

00:03:41.000 | can work with, including this parrot. And then the other one to remind you about is

00:03:49.120 | the video that Waseem and Tanish did on the math of diffusion. And I do want to read out

00:03:56.880 | what Alex said about this because I'm sure a number of you feel the same way. My first

00:04:02.080 | reaction on seeing something with the title math of diffusion was to assume that, oh,

00:04:05.960 | that's just something for all the smart people who have PhDs in maths on the course. And

00:04:09.640 | it'll probably be completely incomprehensible. But of course, it's not that at all. So be

00:04:16.400 | sure to check this out. Even if you don't think of yourself as a math person, I think

00:04:20.840 | it's some nice background that you may find useful. It's certainly not necessary. But you

00:04:28.480 | might. Yeah, I think it's kind of useful to start to dig in some of the math at this point.

00:04:39.280 | One particularly interesting project that's been happening during the week is from Jason

00:04:43.040 | Antich, who is a bit of a legend around here. Many of you will remember him as being the

00:04:49.480 | guy that created De-Oldify and actually worked closely with us on our research, which together

00:04:57.440 | turned into Nogan and Decrapify and other things, created lots of papers. And Jason has kindly

00:05:07.680 | joined our little research team working on the stuff for these lessons and for developing

00:05:13.680 | a kind of fast AI approach to stable diffusion. And he took the idea that I prompted last

00:05:21.080 | week, which is maybe we should be using classic optimizers rather than differential equation

00:05:28.000 | solvers. And he actually made it work incredibly well already within a week. These faces were

00:05:33.600 | generated on a single GPU in a few hours from scratch by using classic deep learning optimizers,

00:05:45.120 | which is like an unheard of speed to get this quality of image. And we think that this research

00:05:52.160 | direction is looking extremely promising. So really great news there. And thank you,

00:05:58.640 | Jason, for this fantastic progress. Yeah, so maybe we'll do a quick reminder of what we

00:06:09.640 | looked at last week. So last week, I used a bit of a mega one note hand-drawn thing.

00:06:17.000 | I thought this week I might just turn it into some slides that we can use. So the basic

00:06:24.760 | idea, if you remember, is that we started with, if we're doing handwritten digits, for example,

00:06:30.400 | we'd start with a number seven. This would be one of the ones with a stroke through it

00:06:35.040 | that some countries use. And then we add to it some noise. And the seven plus the noise

00:06:43.120 | together would equal this noisy seven. And so what we then do is we present this noisy

00:06:51.440 | seven as an input to a unit. And we have it try to predict which pixels are noise, basically,

00:07:02.320 | or predict the noise. And so the unit tries to predict the noise from the number. It then

00:07:09.800 | compares its prediction to the actual noise. And it's going to then get a loss, which it

00:07:18.680 | can use to update the weights in the unit. And that's basically how stable diffusion,

00:07:26.240 | the main bit, if you like, the unit is created. To make it easier for the unit, we can also

00:07:34.220 | pass in an embedding of the actual digit, the actual number seven. So for example, a

00:07:39.600 | one hot encoded vector, which goes through an embedding layer. And the nice thing about

00:07:45.720 | that to remind you is that if we do this, then we also have the benefit that then later

00:07:50.080 | on we can actually generate specific digits by saying I want a number seven or I want

00:07:54.960 | a number five and it knows what they look like. I've skipped over here the VAE Latents

00:08:01.080 | piece, which we talked about last week. And to remind you, that's just a computational

00:08:06.640 | shortcut. It makes it it makes it faster. And so we don't need to include that in this

00:08:14.600 | picture because it's just a just a computational shortcut that we can pre-process things into

00:08:19.920 | that latent space with the VAE first, if we wish. So that's what the unit does. Now then

00:08:28.120 | to remind you, you know, we want to handle things that are more interesting than just

00:08:31.680 | the number seven. We want to actually handle things where we can say, for example, a graceful

00:08:40.720 | swan or a scene from Hitchcock. And the way we do that is we turn these sentences into

00:08:48.120 | embeddings as well. And we turn them into embeddings by trying to create embeddings

00:08:53.120 | of these sentences, which are as similar as possible to embeddings of the photos or images

00:08:58.280 | that they are connected with. And remind you, the way we did that or the way that was done

00:09:02.760 | originally as part of this thing called clip was to basically download from the internet

00:09:10.120 | lots of examples of lots of images, find their alt tags. And then for each one, we then have

00:09:17.800 | their image and its alt tag. So here's the graceful swan and its alt tag. And then we

00:09:24.240 | build two models, an image encoder that turns each image into some feature vector. And then

00:09:33.320 | we have a text encoder that turns each piece of text into a bunch of features. And then

00:09:38.680 | we create a loss function that says that the features for a graceful swan, the text, should

00:09:44.960 | be as close as possible to the features for the picture of a graceful swan. And specifically,

00:09:51.000 | we take the dot product and then we add up all the green ones because these are the ones

00:09:55.320 | that we want to match and we subtract all the red ones because those are the ones we

00:09:58.880 | don't want to match. Those are where the text doesn't match the image. And so that's the

00:10:04.160 | contrastive lost, which gives us the CL in clip. So that's a review of some stuff we

00:10:11.600 | did last week. And so with this, then we can, we now have a text encoder, which we can now

00:10:17.760 | say a graceful swan, and it will spit out some embeddings. And those are the embeddings

00:10:23.160 | that we can feed into our unit during training. And so then we haven't been doing any of that

00:10:34.320 | training ourselves, except for some fine tuning, because it takes a very long time on a lot

00:10:38.120 | of computers. But instead, we take pre-trained models and do inference. And the way we do

00:10:44.120 | inference is we put in an example of the thing that we want, that we have an embedding for.

00:10:49.480 | So let's say we're doing handwritten digits, and we put in some random noise into the unit.

00:10:56.280 | And then it spits out a prediction of which bits of noise you could remove to leave behind

00:11:02.000 | a picture of the number three. Initially, it's going to do quite a bad job of that.

00:11:07.040 | So we subtract just a little bit of that noise from the image to make it a little bit less

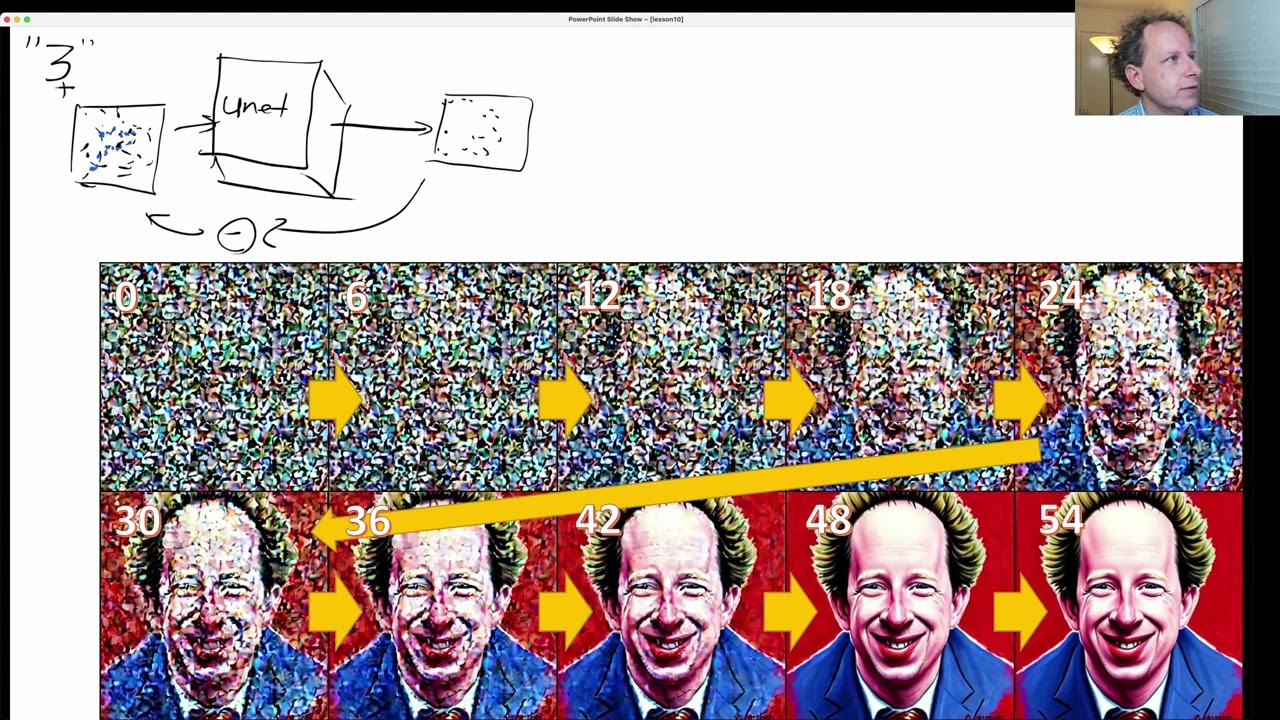

00:11:11.760 | noisy, and we do it again, and we do it a bunch of times. So here's what that looks like, creating

00:11:23.200 | a-- I think somebody here did a smiling picture of Jeremy Howard or something, if I remember

00:11:27.320 | correctly. And if we print out the noise at kind of step zero, and it's step six, and it's

00:11:36.240 | step 12, you can see the first signs of a face starting to appear. Definitely a face

00:11:41.640 | appearing here, 18, 24. By step 30, it's looking much more like a face. By 42, it's getting

00:11:50.800 | there. It's just got a few little blemishes to fix up. And here we are. I think I've slightly

00:11:55.940 | messed up my indexes here because it should finish at 60, not 54, but such is life. So

00:12:03.140 | rather rosy red lips, too, I would have to say. So remember, in the early days, this

00:12:12.200 | took 1,000 steps, and now there are some shortcuts to make it take 60 steps. And this is what

00:12:20.720 | the process looks like. And the reason this doesn't look like normal noise is because

00:12:24.200 | now we are actually doing the VAE Latents thing. And so noisy Latents don't look like

00:12:32.600 | a Gaussian noise. They look like, well, they look like this. This is what happens when

00:12:36.520 | you decode those noisy Latents. Now, you might remember last week I complained that things

00:12:44.200 | are moving too quickly. And there was a couple of papers that had come out the day before

00:12:49.480 | and made everything entirely out of date. So John and I and the team have actually had

00:12:58.120 | time to read those papers. And I thought now would be a good time to start going through

00:13:08.240 | some papers for the first time. So what we're actually going to do is show how these papers

00:13:16.680 | have taken the required number of steps to go through this process down from 60 steps

00:13:23.160 | to 4 steps, which is pretty amazing. So let's talk about that. And the paper is specifically

00:13:33.440 | is this one progressive distillation for fast sampling of diffusion models. So it's only

00:13:45.680 | been a week, so I haven't had much of a chance to try to explain this before. So apologies

00:13:49.040 | in advance if this is awkward, but hopefully it's going to make some sense. What we're

00:13:55.160 | going to start with is so we're going to start with this process, which is gradually denoising

00:14:07.680 | images and actually I wonder if we can copy it. Okay, so how are we going to get this down

00:14:17.760 | from 60 steps to 4 steps? The basic idea is that we're going to do a process. We're going

00:14:32.520 | to do a process called distillation, which I have no idea how to spell, but hopefully

00:14:38.560 | that's close enough that you get the idea. Distillation is a process which is pretty

00:14:43.640 | common in deep learning. And the basic idea of distillation is that you take something

00:14:48.760 | called a teacher network, which is some neural network that already knows how to do something,

00:14:55.720 | but it might be slow and big. And the teacher network is then used by a student network,

00:15:03.400 | which tries to learn how to do the same thing, but faster or with less memory. And in this

00:15:10.640 | case, we want ours to be faster. We want to do less steps. And the way we can do this

00:15:17.000 | conceptually, it's actually, in my opinion, reasonably straightforward. We have. Like when

00:15:29.840 | I look at this and I think like, wow, you know, neural nets are really amazing. So given

00:15:34.920 | your own, it's really amazing. Why is it taking like 18 steps to go from there to there? Like

00:15:47.640 | that seems like something that you should be able to do in one step. The fact that it's

00:15:53.440 | taking 18 steps and originally, of course, that was hundreds and hundreds of steps is

00:16:00.480 | because it's kind of that's just a kind of a side effect of the math of how this thing

00:16:08.000 | was originally developed, you know, this idea of this diffusion process. But the idea in

00:16:15.600 | this paper is something that actually we've, I think I might have even mentioned in the

00:16:20.840 | last lesson, it's something we were thinking of doing ourselves before this paper beat

00:16:24.240 | us to it, which is to say, well, what if we train a new model where the model takes as

00:16:32.760 | input this image, right, and puts it through some other unit, unit B. Okay. And then that

00:16:52.360 | spits out some result. And what we do is we take that result and we compare it to this

00:17:03.200 | image, the thing we actually want. Because the nice thing is now, which we've never really

00:17:08.980 | had before is we have for each intermediate output, like the desired goal where we're

00:17:13.720 | trying to get to. And so we could compare those two just using, you know, whatever means

00:17:20.080 | squared error. Keep on forgetting to change my pen means squared error. And so then if

00:17:29.280 | we keep doing this for lots and lots of images and lots of lots of pairs and exactly this

00:17:33.240 | way, this unit is going to hopefully learn to take these incomplete images and turn them

00:17:41.580 | into complete images. And that is exactly what this paper does. It just says, okay,

00:17:50.960 | now that we've got all these examples of showing what step 36 should turn into at step 54,

00:17:58.720 | let's just feed those examples into a model. And that works. And you'd kind of expect it

00:18:05.540 | to work because you can see that like a human would be able to look at this. And if they

00:18:09.520 | were a competent artist, they could turn that into a, you know, a well finished product.

00:18:15.220 | So you would expect that a computer could as well. There are some little tweaks around

00:18:21.240 | how it makes this work, which I will briefly describe because we need to be able to go

00:18:26.140 | from kind of step one through to step 10 through to step 20 and so forth. And so the way that

00:18:39.540 | it does this, it's actually quite clever. What they do is they initially, so they take their

00:18:45.020 | teacher model. So remember the teacher model is one that has already been trained. Okay.

00:18:50.100 | So the teacher model already is a complete stable diffusion model. That's finished. We

00:18:54.580 | take that as a given and we put in our image. Well, actually it's noise. We put in our noise,

00:19:03.420 | right? And we put it through two time steps. Okay. And then we train our unit B or whatever

00:19:13.780 | you want to call it to try to go directly from the noise to time step number two. And

00:19:20.940 | it's pretty easy for it to do. And so then what they do is they take this. Okay. And

00:19:26.660 | so this thing here, remember is called the student model. They then say, okay, let's

00:19:33.100 | now take that student model and treat that as the new teacher. So they now take their

00:19:40.940 | noise and they run it through the student model twice, once and twice, and they get

00:19:49.900 | out something at the end. And so then they try to create a new student, which is a copy

00:19:57.700 | of the previous student, and it learns to go directly from the noise to two goes of

00:20:02.940 | the student model. And you won't be surprised to hear they now take that new student model

00:20:08.060 | and use that to go two goes. And then they learn, they use that. Then they copy that

00:20:15.940 | to become the next student model. And so they're doing it again and again and again. And each

00:20:21.100 | time they're basically doubling the amount of work. So it goes one to two, effectively

00:20:25.380 | it's then going two to four and then four to eight. And that's basically what they're

00:20:31.260 | what they're doing. And they're doing it for multiple different time steps. So the single

00:20:35.700 | student model is learning to both do these initial steps, trying to jump multiple steps

00:20:47.220 | at a time. And it's also learning to do these later steps, multiple steps at a time. And

00:20:56.620 | that's it, believe it or not. So this is this neat paper that came out last week. And that's

00:21:04.140 | how it works. Now I mentioned that there was actually two papers. The second one is called

00:21:13.720 | On Distillation of Guided Diffusion Models. And the trick now is this second paper, these

00:21:25.440 | came out at basically the same time, if I remember correctly, even though they build

00:21:28.940 | on each other from the same teams, is that they say, okay, this is all very well, but

00:21:37.100 | we don't just want to create random pictures. We want to be able to do guidance, right?

00:21:46.100 | And you might remember, I hope you remember from last week that we used something called

00:21:49.540 | Classifier Free Guided Diffusion Models, which, because I'm lazy, we will just use an acronym,

00:21:58.340 | Classifier Free Guided Diffusion Models. And this one, you may recall, we take, let's say

00:22:07.540 | we want a cute puppy. We put in the prompt cute puppy into our clip text encoder, and

00:22:16.620 | that spits out an embedding. And we put that, let's ignore the VAE Latents business. We

00:22:29.020 | put that into our unit, but we also put the empty prompt into our clip text encoder. We

00:22:43.100 | concatenate these things too together so that then out the other side, we get back two things.

00:22:49.820 | We get back the image of the cute puppy, and we get back the image of some arbitrary thing.

00:22:58.420 | Could be anything. And then we effectively do something very much like taking the weighted

00:23:03.020 | average of these two things together, combine them. And then we use that for the next stage

00:23:11.340 | of our diffusion process. Now, what this paper does is it says, this is all pretty awkward.

00:23:18.260 | We end up having to train two images instead of one. And for different types of levels

00:23:23.740 | of guided diffusion, we have to do it multiple different times. It's all pretty annoying.

00:23:28.620 | How do we skip it? And based on the description of how we did it before, you may be able to

00:23:34.260 | guess. What we do is we do exactly the same student-teacher distillation we did before,

00:23:44.020 | but this time we pass in, in addition, the guidance. And so again, we've got the entire

00:23:56.300 | stable diffusion model, the teacher model available for us. And we are doing actual CFGD,

00:24:07.980 | Classifier Free Guided Diffusion, to create our guided diffusion cute puppy pictures.

00:24:13.780 | And we're doing it for a range of different guidance scales. So you might be doing two

00:24:17.940 | in 7.5 and 12 and whatever, right? And those now are becoming inputs to our student model.

00:24:29.140 | So the student model now has additional inputs. It's getting the noise as always. It's getting

00:24:36.980 | the caption or the prompt, I guess I should say, as always, but it's now also getting

00:24:43.500 | the guidance scale. And so it's learning to find out how all of these things are handled

00:24:53.820 | by the teacher model. Like what does it do after a few steps each time? So it's exactly

00:25:00.420 | the same thing as before, but now it's learning to use the Classifier Free Guided Diffusion

00:25:06.860 | as well. Okay. So that's got quite a lot going on there. And if it's a bit confusing, that's

00:25:17.060 | okay. It is a bit confusing. And what I would recommend is you check out the extra information

00:25:27.700 | from Jono who has a whole video on this. And one of the cool things actually about this

00:25:31.620 | video is it's actually a paper walkthrough. And so part of this course is hopefully we're

00:25:36.620 | going to start reading papers together. Reading papers is extremely intimidating and overwhelming

00:25:43.820 | for all of us, all of the time, at least for me, it never gets any better. There's a lot

00:25:48.540 | of math. And by watching somebody like Jono, who's an expert at this stuff, read through

00:25:54.940 | a paper, you'll kind of get a sense of how he is skipping over lots of the math, right?

00:26:01.340 | To focus on, in this case, the really important thing, which is the actual algorithm. And

00:26:06.180 | when you actually look at the algorithm, you start to realize it's basically all stuff,

00:26:11.420 | nearly all stuff, maybe all stuff that you did in primary school or secondary school.

00:26:15.940 | So we've got division, okay, sampling from a normal distribution, so high school, subtraction,

00:26:23.420 | division, division, multiplication, right? Oh, okay, we've got a log there. But basically,

00:26:30.020 | you know, there's not too much going on. And then when you look at the code, you'll find

00:26:36.060 | that once you turn this into code, of course, it becomes even more understandable if you're

00:26:40.260 | somebody who's more familiar with code, like me. So yeah, definitely check out Jono's video

00:26:49.980 | on this. So another paper came out about three hours ago. And I just had to show you it to

00:27:02.900 | you because I think it's amazing. And so this is definitely the first video about this paper

00:27:12.100 | because yeah, only came out a few hours ago. But check this out. This is a paper called

00:27:16.180 | iMagic. And with this algorithm, you can pass in an input image. This is just a, you know,

00:27:23.100 | a photo you've taken or downloaded off the internet. And then you pass in some text saying

00:27:28.340 | a bird spreading wings. And what it's going to try to do is it's going to try to take

00:27:32.060 | this exact bird in this exact pose and leave everything as similar as possible, but adjust

00:27:36.880 | it just enough so that the prompt is now matched. So here we take this, this little guy here,

00:27:45.100 | and we say, oh, this is actually what we want this to be a person giving the thumbs up.

00:27:49.460 | And this is what it produces. And you can see everything else is very, very similar

00:27:52.460 | to the previous picture. So this dog is not sitting. But if we put in the prompt, a sitting

00:27:59.980 | dog, it turns it into a sitting dog, leaving everything else as similar as possible. So

00:28:08.180 | here's an example of a waterfall. And then you say it's a children's drawing of a waterfall

00:28:11.740 | and now it's become a children's drawing. So lots of people in the YouTube chat going,

00:28:16.340 | oh my God, this is amazing, which it absolutely is. And that's why we're going to show you

00:28:19.580 | how it works. And one of the really amazing things is you're going to realize that you

00:28:23.540 | understand how it works already. Just to show you some more examples, here's the dog image.

00:28:29.940 | Here's the sitting dog, the jumping dog, dog playing with the toy, jumping dog holding

00:28:36.880 | a frisbee. Okay. And here's this guy again, giving the thumbs up, crossed arms in a greeting

00:28:45.020 | pose to namaste hands, holding a cup. So that's pretty amazing. So I had to show you how this

00:28:56.380 | works. And I'm not going to go into too much detail, but I think we can get the idea actually

00:29:06.640 | pretty well. So what we do is again, we start with a fully pre-trained, ready to go generative

00:29:20.860 | model like a stable diffusion model. And this is what this is talking about here, pre-trained

00:29:27.380 | diffusion model. In the paper, they actually use a model called Imogen, but none of the

00:29:31.500 | details as far as I can see in any way depend on what the model is. It should work just

00:29:35.900 | fine for stable diffusion. And we take a photo of a bird spreading wings. Okay. So that's

00:29:41.420 | our, that's our target. And we create an embedding from that using, for example, our clip encoder

00:29:52.220 | as usual. And we then pass it through our pre-trained diffusion model. And we then see

00:30:05.220 | what it creates. And it doesn't create something that's actually like our bird. So then what

00:30:18.020 | they do is they fine-tune this embedding. So this is kind of like textual inversion.

00:30:23.820 | They fine-tune the embedding to try to make the diffusion model output something that's

00:30:30.420 | as similar as possible to the, to the input image. And so you can see here, they're saying,

00:30:37.260 | oh, we're moving our embedding a little bit. They don't do this for very long. They just

00:30:41.820 | want to move it a little bit in the right direction. And then now they lock that in

00:30:46.900 | place and they say, okay, now let's fine-tune the entire diffusion model end to end, including

00:30:53.140 | the VAE or actually with Imogen, they have a super resolution model, but same idea. So

00:30:58.900 | we fine-tune the entire model end to end. And now the embedding, this optimized embedding

00:31:04.020 | we created, we, we, we store in place. We don't change that at all. That's now frozen.

00:31:11.580 | And we try to make it so that the diffusion model now spits out our bird as close as possible.

00:31:22.140 | So you fine-tune that for a few epochs. And so you've now got something that takes this,

00:31:26.300 | embedding that we fine-tuned, goes through a fine-tuned model and spits out our bird.

00:31:30.460 | And then finally, the original target embedding we actually wanted is a photo of a bird spreading

00:31:37.060 | its wings. We ended up with this slightly different embedding and we take the weighted

00:31:42.620 | average of the two. That's called the interpolate step, the weighted average of the two. And we

00:31:47.820 | pass that through this fine-tune diffusion model and we're done. And so that's pretty

00:31:56.700 | amazing. This, this would not take, I don't think a particularly long time or require any

00:32:03.020 | particular special hardware. It's the kind of thing I expect people will be doing, yeah,

00:32:09.740 | in the coming days and weeks. But it's very interesting because, yeah, I mean, the ability

00:32:14.860 | to take any photo of a person or whatever and change it, like literally change what the person's

00:32:26.380 | doing is, you know, societally very important and really means that anybody I guess now can generate

00:32:40.380 | believable photos that never actually existed. I see John O in the chat saying it took

00:32:47.020 | about eight minutes to do it for Imogen on TPUs. Although Imogen is quite a slow

00:32:54.620 | big model, although the TPUs they used were very, the latest TPUs. So might be, you know,

00:33:03.260 | maybe it's an hour or something for stable diffusion on GPUs.

00:33:07.740 | All right. So that is a lot of fun.

00:33:16.220 | All right. So with that, let's go back to our notebook where we left it last time. We had kind

00:33:30.700 | of looked at some applications that we can play with in this diffusion NBS repo, in the stable

00:33:36.140 | diffusion notebook. And what we've got now, and to remind you, when I say we, it's mainly actually

00:33:45.020 | Pedro, Patrick and Suraj, just a little bit of help from me. So hugging face folks. What we/they

00:33:52.700 | have done is they now dig into the pipeline to pull it all apart step by step. So you can see

00:33:59.740 | exactly what happens. The first thing I was just going to mention is this is how you can create

00:34:05.740 | those gradual denoising pictures. And this is thanks to something called the callback.

00:34:13.980 | So you can say here, when you go through the pipeline, every 12 steps, call this function.

00:34:21.980 | And as you can see, it's going to call it with I and T and the latents.

00:34:29.180 | And so then we can just make an image and stick it on the end of an array. And that's all that's

00:34:37.260 | happening here. So this is how you can start to interact with a pipeline without rewriting it

00:34:45.420 | yourself from scratch. But now what we're going to do is we're actually going to write it, we're

00:34:49.580 | going to build it from scratch. So you don't actually have to use a callback because you'll

00:34:54.620 | be able to change it yourself. So let's take a look. So looking inside the pipeline, what exactly is

00:35:03.500 | going on? So what's going to be going on in the pipeline is seeing all of the steps that we saw

00:35:13.900 | in last week's OneNote notes that I drew. And it's going to be all the code. And we're not going to

00:35:20.860 | show the code of how each step is implemented. So for example, the clip text model we talked about,

00:35:27.340 | the thing that takes as input a prompt and creates an embedding, we just take that as a given. So we

00:35:34.860 | download it, open AI is trained one called clip, the IT large patch 14. So we just say from tree

00:35:41.980 | change. So hugging face will transformers will download and create that model for us.

00:35:49.100 | Ditto for the tokenizer. And so ditto for the auto encoder, and ditto for the unit.

00:35:56.460 | So there they all are, we can just grab them. So we just take that all as a given.

00:36:01.500 | These are the three models that we've talked about, the text encoder, the clip encoder,

00:36:07.020 | the VAE, and the unit. So there they are. So given that we now have those,

00:36:16.540 | the next thing we need is that thing that converts time steps into the amount of noise. Remember that

00:36:22.540 | graph we drew. And so we can basically, again, use something that hugging face,

00:36:31.340 | well, actually, in this case, Catherine Carlson has already provided, which is a scheduler,

00:36:37.580 | it's basically something that shows us that connection. So we've got that. So we use that

00:36:44.700 | scheduler. And we say how much noise when we're using. And so we have to make sure that that

00:36:51.580 | matches. And so we just use these numbers that were given. Okay, so now to create our photograph

00:36:59.260 | of astronaut riding a horse again, in 70 steps with a 7.5 guide and scale, batch size of one,

00:37:08.380 | step number one is to take our prompt and tokenize it. Okay, so we looked at that in part one of the

00:37:16.060 | course. So check that out if you can't remember what tokenizing does, but it's just splitting it

00:37:20.620 | in basically splitting it into words, or subword units if they're long and unusual words. So here

00:37:26.860 | are so this will be the start the start of sentence token, and this will be a photograph

00:37:32.620 | of an astronaut, etc. And then you can see the same token is repeated again again at the end.

00:37:38.380 | That's just the padding to say we're all done. And the reason for that is that GPUs and TPUs

00:37:45.340 | really like to do lots of things at once. So we kind of have everything be the same length by

00:37:51.820 | padding them. That may sound like a lot of wasted work, which it kind of is. But a GPU would rather

00:37:58.620 | do lots of things at the same time on exactly the same sized input. So this is why we have all this

00:38:03.660 | padding. So you can see here if we decode that number, it's the end of text marker, just just

00:38:11.340 | padding really in this case. As well as getting the input IDs, so these are just lookups into a

00:38:18.620 | vocabulary. There's also a mask, which is just telling it which ones are actual words as opposed

00:38:25.500 | to padding, which is not very interesting. So we can now take those input IDs, we can put them on

00:38:35.180 | the GPU, and we can run them through the clip encoder. And so for a batch size of one, so you've

00:38:43.180 | got one image that gives us back a 77 by 768, because we've got 77 here. And each one of those

00:38:54.620 | creates a 768 long vector. So we've got a 77 by 768 tensor. So these are the embeddings

00:39:01.820 | for a photograph of an astronaut riding a horse that come from clip. So remember,

00:39:08.540 | everything's pre-trained. So that's all done for us. We're just doing inference. And so remember,

00:39:15.340 | for the classifier free guidance, we also need the embeddings for the empty string.

00:39:23.500 | So we do exactly the same thing.

00:39:36.540 | So now we just concatenate those two together, because this is just a trick to get the GPU to

00:39:42.380 | do both at the same time, because we like the GPU to do as many things at once as possible.

00:39:46.220 | And so now we create our noise. And because we're doing it with a VAE, we can call it

00:39:56.380 | Latents, but it's just noise, really. I wonder if you'd still call it that without the VAE.

00:40:03.340 | Maybe you would have to think about that. So that's just random numbers normally

00:40:09.180 | generated, normally distributed random numbers of size one, that's our batch size.

00:40:14.140 | And the reason that we've got this divided by eight here is because that's what the VAE does.

00:40:20.220 | It allows us to create things that are eight times smaller by height and width.

00:40:24.700 | And then it's going to expand it up again for us later. That's why this is so much faster.

00:40:30.700 | You'll see a lot of this after we put it on the GPU, you'll see a lot of this dot half.

00:40:34.620 | This is converting things into what's called half precision or FB 16.

00:40:39.580 | Details don't matter too much. It's just making it half as big in memory by using less precision.

00:40:45.740 | Modern GPUs are much, much, much, much faster if we do that. So you'll see that a lot.

00:40:51.500 | If you use something like fast AI, you don't have to worry about it, but all this stuff is done for

00:40:56.780 | you. And we'll see that later as we rebuild this with much, much less code later in the course.

00:41:02.060 | So we'll be building our own kind of framework from scratch,

00:41:07.740 | which you'll then be able to maintain and work with yourself.

00:41:12.780 | Okay, so we have to say we want to do 70 steps.

00:41:16.060 | Something that's very important, we won't worry too much about the details right now,

00:41:23.740 | but what you see here is that we take our random noise and we scale it. And that's because

00:41:29.740 | depending on what stage you're up to, you need to make sure that you have the right amount of

00:41:36.860 | variance, basically. Otherwise you're going to get activations and gradients that go out of control.

00:41:42.940 | This is something we're going to be talking about a huge amount

00:41:45.580 | during this course, and we'll show you lots of tricks to handle that kind of thing automatically.

00:41:50.860 | Unfortunately at the moment in the stable diffusion world, this is all done in rather,

00:41:56.940 | in my opinion, kind of ways that are too tied to the details of the model. I think we will be able

00:42:04.620 | to improve it as the course goes on, but for now we'll stick with how everybody else is doing it.

00:42:10.220 | This is how they do it. So we're going to be jumping through. So normally it would take a

00:42:15.740 | thousand time steps, but because we're using a fancy scheduler, we get to skip from 999 to

00:42:21.660 | 984, 984 to 970, and so forth. So we're going down about 14 time steps. And remember, this is a very,

00:42:28.460 | very, very unfortunate word. They're not time steps at all. In fact, they're not even integers.

00:42:33.420 | It's just a measure of how much noise are we adding at each time, and you find out how much noise by

00:42:41.500 | looking it up on this graph. Okay. That's all time step means. It's not a step of time, and it's a

00:42:47.740 | real shame that that word is used because it's incredibly confusing. This is much more helpful.

00:42:53.660 | This is the actual amount of noise at each one of those iterations. And so here you can see the

00:43:02.140 | amount of noise for each of those time steps, and we're going to be going backwards. As you can see,

00:43:11.100 | we start at 999, so we'll start with lots of noise, and then we'll be using less and less and less and

00:43:15.900 | less noise. So we go through the 70 time steps in a for loop, concatenating our two noise bits

00:43:30.380 | together, because we've got the classifier free and the prompt versions, do our scaling,

00:43:39.500 | calculate our predictions from the unit, and notice here we're passing in the time step,

00:43:44.460 | as well as our prompt. That's going to return two things, the unconditional prediction,

00:43:51.980 | so that's the one for the empty string. Remember, we passed in one of the two things we passed in

00:43:57.180 | was the empty string. So we concatenated them together, and so after they come out of the unit,

00:44:04.220 | we can pull them apart again. So dot chunk just means pull them apart into two separate variables,

00:44:09.580 | and then we can do the guide and scale that we talked about last week.

00:44:14.140 | And so now we can do that update where we take a little bit of the noise

00:44:23.660 | and remove it to give us our new latents. So that's the loop. And so at the end of all that,

00:44:33.740 | we decode it in the VAE. The paper that created this VAE tells us that we have to divide it by

00:44:41.180 | this number to scale it correctly. And once we've done that, that gives us a number which is between

00:44:48.620 | negative one and one. Python imaging library expects something between zero and one,

00:44:56.860 | so that's what we do here to make it between zero and one and enforce that to be true.

00:45:02.460 | Put that back on the CPU, make sure it's that the order of the dimensions is the same as what Python

00:45:08.780 | imaging library expects, and then finally convert it up to between zero and 255 as an int,

00:45:16.220 | which is actually what PIO really wants. And there's our picture. So there's all the steps.

00:45:26.620 | So what I then did, this is kind of like, so the way I normally build code, I use notebooks for

00:45:34.860 | everything, is I kind of do things step by step by step, and then I tend to kind of copy them,

00:45:40.460 | and I use shift M. I don't know if you've seen that, but what shift M does, it takes two cells

00:45:46.460 | and combines them like that. And so I basically combined some of the cells together, and I removed

00:45:53.820 | a bunch of the the pros, so you can see the entire thing on one screen. And what I was trying to do

00:46:04.620 | here is I'd like to get to the point where I've got something which I can very quickly do experiments

00:46:09.420 | with. So maybe I want to try some different approach to guidance tree classification,

00:46:13.820 | maybe I want to add some callbacks, so on and so forth. So I kind of like to have everything,

00:46:22.540 | you know, I like to have all of my important code be able to fit into my screen at once.

00:46:28.300 | And so you can see now I do, I've got the whole thing on my screen, so I can keep it all in my

00:46:32.380 | head. One thing I was playing around with was I was trying to understand the actual guidance tree

00:46:41.340 | equation in terms of like how does it work. Computer scientists tend to write things

00:46:50.220 | and software engineers with kind of long words as variable names. Mathematicians tend to use

00:46:55.820 | short just letters normally. For me, when I want to play around with stuff like that, I turn stuff

00:47:01.580 | back into letters. And that's because I actually kind of pulled out one note and I started jutting

00:47:06.540 | down this equation and playing around with it to understand how it behaves. So this is just like,

00:47:13.900 | it's not better or worse, it's just depending on what you're doing. So actually here I said, okay,

00:47:18.860 | g is guidance scale. And then rather than having the unconditional and text embeddings,

00:47:24.940 | I just call them u and t. And now I've got this all down into an equation which I can

00:47:29.420 | write down in a notebook and play with and understand exactly how it works.

00:47:32.860 | So that's something I find really helpful for working with this kind of code is to, yeah,

00:47:40.700 | turn it into a form that I can manipulate algebraically more easily. I also try to make

00:47:46.140 | it look as much like the paper that I'm implementing as possible. Anyways, that's that code. So then I

00:47:52.860 | copied all this again and I basically, oh, I actually did it for two prompts this time.

00:47:59.420 | I thought this was fun. While you're painting an astronaut riding a horse in the style of

00:48:03.340 | Grant Wood, just to remind you, Grant Wood looks like this. Not obviously astronaut material,

00:48:15.500 | which I thought would make it actually kind of particularly interesting. Although he does have

00:48:19.500 | horses. I can't see one here. Some of his pictures have horses. So because I did two

00:48:27.820 | prompts, I got back two pictures I could do. So here's the Grant Wood one. I don't know what's

00:48:33.340 | going on in his back here, but I think it's quite nice. So yeah, I then copied that whole thing again

00:48:39.980 | and merged them all together and then just put it into a function. So I took the little bit which

00:48:48.540 | creates an image and put that into a function. I took the bit which does the tokenizing and

00:48:55.020 | text encoding and put that into a function. And so now all of the code necessary to do

00:49:01.340 | the whole thing from top to bottom fits in these two cells, which makes it for me much easier to

00:49:10.300 | see exactly what's going on. So you can see I've got the text embeddings. I've got the

00:49:16.860 | unconditional embeddings. I've got the embeddings which can catenate the two together,

00:49:20.220 | optional random seed, my latents, and then the loop itself. And you'll also see something I do

00:49:32.700 | which is a bit different to a lot of software engineering is I often create things which are

00:49:36.780 | kind of like longer lines because I try to have each line be kind of like mathematically one thing

00:49:44.620 | that I want to be able to think about as a whole. So yeah, these are some differences between kind of

00:49:50.300 | the way I find numerical programming works well compared to the way I would write a more

00:49:56.060 | traditional software engineering approach. And again, this is partly a personal preference,

00:50:01.100 | but it's something I find works well for me. So we're now at a point where we've got, yeah,

00:50:06.300 | two fun, three functions that easily fit on the screen and do everything. So I can now just say

00:50:11.500 | make samples and display each image. And so this is something for you to experiment with.

00:50:21.660 | And what I specifically suggest as homework is to try picking one of the

00:50:32.860 | extra tricks we learned about like image to image or negative prompts. Negative prompts

00:50:39.340 | would be a nice easy one like see if you can implement negative prompt in your version of this.

00:50:48.780 | Or yeah, try doing image to image. That wouldn't be too hard either. Another one you can add is

00:51:00.220 | try adding callbacks. And the nice thing is then, you know, you've got code which you fully understand

00:51:07.900 | because you know what all the lines do. And you then don't need to wait for the diffusers folks

00:51:16.460 | to update it. The library to do is, for example, the callbacks are only added like a week ago.

00:51:22.140 | So until then you couldn't do callbacks. Well, now you don't have to wait for the diffusers team

00:51:26.140 | to add something. The code's all here for you to play with. So that's my recommendation as a bit

00:51:32.380 | of homework for this week. Okay, so that brings us to the end of our rapid overview of stable

00:51:47.420 | diffusion and some very recent papers that very significantly developed stable diffusion. I hope

00:51:53.180 | that's given you a good sense of the kind of very high level slightly hand wavy version of all this

00:52:00.940 | and you can actually get started playing with some fun code. What we're going to be doing next

00:52:06.540 | is going right back to the start, learning how to multiply two matrices together effectively

00:52:14.860 | and then gradually building from there until we've got to the point that we've rebuilt all

00:52:19.180 | this from scratch and we understand why things work the way they do, understand how to debug

00:52:24.300 | problems, improve performance and implement new research papers as well. So that's going to be

00:52:32.380 | very exciting. And so we're going to have a break and I will see you back here in 10 minutes.

00:52:45.660 | Okay, welcome back everybody. I'm really excited about the next part of this. It's going to require

00:52:53.100 | some serious tenacity and a certain amount of patience. But I think you're going to learn a lot.

00:53:02.620 | A lot of folks I've spoken to have said that previous iterations of this part of the course

00:53:10.060 | is like the best course they've ever done. And this one's going to be dramatically better than

00:53:14.620 | any previous version we've done of this. So hopefully you'll find that the hard work and patience

00:53:22.620 | pays off. We're working now through the course 22 p2 repo. So 2022 course part two.

00:53:33.500 | And notebooks are ordered. So we'll start with notebook number one.

00:53:40.940 | And okay, so the goal is to get to stable diffusion from the foundations, which means we

00:53:49.020 | have to define what are the foundations. So I've decided to define them as follows. We're allowed

00:53:56.060 | to use Python. We're allowed to use the Python standard library. So that's all the stuff that

00:54:01.500 | comes with Python by default. We're allowed to use matplotlib because I couldn't be bothered

00:54:07.100 | creating my own plotting library. And we're allowed to use Jupyter notebooks and NBDev,

00:54:13.420 | which is something that creates modules from notebooks. So basically what we're going to

00:54:19.340 | try to do is to rebuild everything starting from this foundation. Now, to be clear,

00:54:27.980 | what we are allowed to use are the libraries once we have reimplemented them correctly.

00:54:36.060 | And so if we reimplement something from NumPy or PyTorch or whatever, we're then allowed to use

00:54:43.020 | the NumPy or PyTorch or whatever version. Sometimes we'll be creating things that haven't

00:54:50.700 | been created before. And that's then going to be becoming our own library. And we're going to be

00:54:56.860 | calling that library mini AI. So we're going to be building our own little framework as we go.

00:55:03.740 | So, for example, here are some imports. And these imports all come

00:55:08.540 | from the Python standard library, except for these two.

00:55:12.300 | Now, to be clear, one challenge we have is that the models we use in stable diffusion were trained

00:55:24.300 | on millions of dollars worth of equipment for months, which we don't have the time or money.

00:55:31.740 | So another trick we're going to do is we're going to create smaller identical but smaller versions

00:55:38.860 | of them. And so once we've got them working, we'll then be allowed to use the big pre-trained

00:55:44.540 | versions. So that's the basic idea. So we're going to have to end up with our own VAE,

00:55:50.380 | our own unit, our own clip encoder, and so forth.

00:55:59.020 | To some degree, I am assuming that you've completed part one of the course. To some degree,

00:56:05.500 | I will cover everything at least briefly. But if I cover something about deep learning too fast for

00:56:13.100 | you to know what's going on and you get lost, go back and watch part one, or go and Google for

00:56:21.740 | that term. For stuff that we haven't covered in part one, I will go over it very thoroughly and

00:56:27.340 | carefully. All right. So I'm going to assume that you know the basic idea, which is that we're going

00:56:37.420 | to need to be doing some matrix multiplication. So we're going to try to take a deep dive into

00:56:43.820 | matrix multiplication today. And we're going to need some input data. And I quite like working

00:56:49.900 | with MNIST data. MNIST is handwritten digits. It's a classic data set. They're 28 by 28 pixel

00:57:04.380 | grayscale images. And so we can download them from this URL.

00:57:15.980 | So we use the path libs path object a lot. It's part of Python. And it basically takes a string

00:57:23.100 | and turns it into something that you can treat as a path. For example, you can use slash to mean

00:57:28.460 | this file inside this subdirectory. So this is how we create a path object.

00:57:34.140 | Path objects have, for example, a make directory method.

00:57:43.340 | So I like to get everything set up. But I want to be able to rerun this cell lots of times

00:57:48.940 | and not give me errors if I run it more than once. If I run it a second time, it still works.

00:57:55.100 | And in that case, that's because I put this exist OK equals true. How did I know that I can say--

00:58:00.460 | because otherwise, it would try to make the directory. It would already exist in a given

00:58:03.820 | error. How do I know what parameters I can pass to make dir? I just press shift tab. And so when I

00:58:10.540 | hit shift tab, it tells me what options there are. If I press it a few times, it'll actually

00:58:18.460 | pop it down to the bottom of the screen to remind me. I can press escape to get rid of it.

00:58:25.020 | Or you can just-- or else you can just hit tab inside. And it'll list all the things you could

00:58:37.900 | type here, as you can see. All right. So we need to grab this URL. And so Python comes

00:58:52.620 | with something for doing that, which is the URL lib library that's part of Python

00:58:59.340 | that has something called URL retrieve. And something which I'm always a bit surprised

00:59:05.020 | is not widely used as people reading the Python documentation. So you should do that a lot.

00:59:11.580 | So if I click on that, here is the documentation

00:59:19.340 | for URL retrieve. And so I can find exactly what it can take. And I can learn about exactly what

00:59:33.980 | it does. And so I read the documentation from the Python docs for every single method I use.

00:59:48.460 | And I look at every single option that it takes. And then I practice with it.

00:59:53.420 | And to practice with it, I practice inside Jupyter. So if I want this import on its own,

01:00:07.660 | I can hit Control-Shift- and it's going to spit it into two cells. And then I'll hit Alt-Enter

01:00:16.300 | or Option-Enter so I can create something underneath. And I can type URL retrieve, Shift-Tab.

01:00:22.700 | And so there it all is. If I'm like way down somewhere in the notebook and I have no idea

01:00:32.540 | where URL retrieve comes from, I can just hit Shift-Enter. And it actually tells me exactly

01:00:38.140 | where it comes from. And if I want to know more about it, I can just hit Question Mark,

01:00:43.340 | Shift-Enter, and it's going to give me the documentation. And most cool of all,

01:00:49.900 | second question mark, and it gives me the full source code. And you can see it's not a lot.

01:00:58.460 | You know, reading the source code of Python standard library stuff is often quite revealing.

01:01:03.260 | And you can see exactly how they do it. And that's a great way to learn more about

01:01:12.940 | more about this. So in this case, I'm just going to use a very simple functionality,

01:01:24.300 | which is I'm going to say the URL to retrieve and the file name to save it as.

01:01:28.540 | And again, I made it so I can run this multiple times. So it's only going to do the URL retrieve

01:01:36.460 | if the path doesn't exist. If I've already downloaded it, I don't want to download it again.

01:01:41.580 | So I run that cell. And notice that I can put exclamation mark, followed by a line of bash.

01:01:49.980 | And it actually runs this using bash.

01:01:54.220 | If you're using Windows, this won't work. And I would very, very strongly suggest if you're using

01:02:06.780 | Windows, use WSL. And if you use WSL, all of these notebooks will work perfectly. So yeah, do that.

01:02:14.860 | Or write it on paper space or Lambda Labs or something like that, Colab, et cetera.

01:02:19.980 | Okay, so this is a gzip file. So thankfully, Python comes with a gzip module. Python comes

01:02:31.580 | with quite a lot, actually. And so we can open a gzip file using gzip.open. And we can pass in

01:02:37.980 | the path. And we'll say we're going to read it as binary as opposed to text.

01:02:43.580 | Okay, so this is called a context manager. It's a with clause. And what it's going to do is it's

01:02:51.340 | going to open up this gzip file. The gzip object will be called f. And then it runs everything

01:02:59.100 | inside the block. And when it's done, it will close the file. So with blocks can do all kinds

01:03:06.540 | of different things. But in general, with blocks that involve files are going to close the file

01:03:11.660 | automatically for you. So we can now do that. And so you can see it's opened up the gzip file.

01:03:18.860 | And the gzip file contains what's called pickle objects. Pickled objects is basically

01:03:26.060 | Python objects that have been saved to disk. It's the main way that people in pure Python

01:03:32.220 | save stuff. And it's part of the standard library. So this is how we load in from that file.

01:03:39.260 | Now, the file contains a tuple of tuples. So when you put a tuple on the left hand side of an equal

01:03:46.460 | sign, it's quite neat. It allows us to put the first tuple into two variables called x_train

01:03:51.660 | and y_train and the second into x_valid and y_valid. This trick here where you put stuff like this on

01:03:59.180 | the left is called destructuring. And it's a super handy way to make your code kind of

01:04:07.020 | clear and concise. And lots of languages support that, including Python.

01:04:15.340 | Okay. So we've now got some data. And so we can have a look at it. Now, it's a bit tricky

01:04:22.380 | because we're not allowed to use NumPy according to our rules. But unfortunately, this actually

01:04:26.540 | comes as NumPy. So I've turned it into a list. All right. So I've taken the first

01:04:34.140 | image and I've turned it into a list. And so we can look at a few examples of some values in that list.

01:04:44.380 | And here they are. So it looks like they're numbers between zero and one.

01:04:47.420 | And this is what I do when I learn about a new dataset. So when I started writing this notebook,

01:04:56.620 | what you see here, other than the pros here, is what I actually did when I was working

01:05:04.540 | with this data. I wanted to know what it was. So I just grab a little bit of it and look at it.

01:05:14.140 | So I kind of got a sense now of what it is. Now, interestingly,

01:05:20.060 | it's 784. This image is 784 long list. Oh, dear. People freaking out in the comments. No NumPy.

01:05:39.980 | Yeah. No NumPy. Do you see NumPy? No NumPy. Why 784? What is that? Well, that's because these are

01:05:47.500 | 28 by 28 images. So it's just a flat list here of 784 long. So how do I turn this 784 long thing

01:05:57.340 | into 28 by 28? So I want a list of 28 lists of 28, basically, because we don't have matrices.

01:06:05.820 | So how do we do that? And so we're going to be learning a lot of cool stuff in Python here.

01:06:11.100 | Sorry, I can't stop laughing at all the stuff in our chat.

01:06:15.580 | Oh, dear. People are quite reasonably freaking out. That's okay. We'll get there.

01:06:24.300 | I promise. I hope. Otherwise, I'll embarrass myself. All right. So how do I convert a 784

01:06:33.580 | long list into 28 lists, 28 long list of 28 long lists? I'm going to use something called

01:06:43.740 | chunks. And first of all, I'll show you what this thing does. And then I'll show you how it works.

01:06:47.340 | So vowels is currently a list of 10 things. Now, if I take vowels and I pass it to chunks

01:06:56.780 | with five, it creates two lists of five. Here's list number one of five elements. And here's list

01:07:03.740 | number two of five elements. Hopefully, you can see what it's doing. It's chunkifying this list.

01:07:12.860 | And this is the length of each chunk. Now, how did it do that? The way I did it is using a very,

01:07:20.620 | very useful thing in Python that far too many people don't know about, which is called yield.

01:07:26.060 | And what yield does is you can see here, if we're on loop, it's going to go through from zero

01:07:33.020 | up to the length of my list. And it's going to jump by five at a time. It's going to go,

01:07:40.060 | in this case, zero comma five. And then it's going to think of this as being like return for now,

01:07:47.420 | it's going to return the list from zero up to five. So it returns the first bit of the list.

01:07:55.180 | But yield doesn't just return. It kind of like returns a bit and then it continues.

01:08:02.780 | And it returns a bit more. And so specifically, what yield does is it creates an iterator.

01:08:13.420 | An iterator is an iterator is basically something you can actually use it that you can call next on

01:08:21.660 | a bunch of times. So let's try it. So we can say iterator equals.

01:08:29.260 | Okay. Oh, got to run it. So what is iterator? Well, iterator is something that I can basically,

01:08:38.940 | I can call next on. And next basically says yield the next thing. So this should yield

01:08:45.660 | vals zero colon five. There it is. It did, right? There's vals zero colon five.

01:08:56.060 | Now, if I run that again, it's going to give me a different answer

01:09:00.220 | because it's now up to the second part of this loop. Now it returns the last five. Okay. So

01:09:10.540 | this is what an iterator does. Now, if you pass an iterator to Python's list,

01:09:21.260 | it runs through the entire iterator until it's finished and creates a list of the results.

01:09:27.020 | And what does finished looks like? This is what finished looks like. If you call next

01:09:31.580 | and get stop iteration, that means you've run out. And that makes sense, because my loop,

01:09:38.380 | there's nothing left in it. So all of that is to say, we now have a way of taking a list and

01:09:46.540 | chunkifying it. So what if I now take my full image, image number one, chunkify it into chunks

01:09:56.620 | of 28 long and turn that into a list and plot it. We have successfully created an image.

01:10:06.780 | So that's good.

01:10:10.380 | Now, we are done. But there are other ways to create this iterator. And because iterators

01:10:29.660 | and generators, which are closely related, so important, I wanted to show you more about how

01:10:37.100 | to do them in Python. It's one of these things that if you understand this, you'll often find

01:10:44.700 | that you can throw away huge pieces of enterprise software and basically replace it with an iterator.

01:10:52.700 | It lets you stream things one bit at a time. It doesn't store it all in memory.

01:10:58.300 | It's this really powerful thing that once I show it to people, they suddenly go like, oh, wow,

01:11:05.980 | we've been using all this third party software and we could have just created a Python iterator.

01:11:12.300 | Python comes with a whole standard library module called edit tools just to make it easier to work

01:11:19.900 | with iterators. I'll show you one example of something from edit tools, which is iseless.

01:11:26.540 | So let's grab our values again, these 10 values.

01:11:32.540 | Okay. So let's take these 10 values and we can take any list and turn it into an iterator

01:11:45.100 | by passing it to itter, which I should call it. So I don't override this Python.

01:11:55.340 | That's not a keyword, but this thing I don't want to override.

01:11:57.500 | So this is now basically something that I can call. Actually, let's do this.

01:12:03.180 | I'll show you that I can call next on it. So if I now go next it,

01:12:08.620 | you can see it's giving me each item one at a time.

01:12:18.300 | Okay. So that's what converting it into an iterator does.

01:12:21.580 | I slice, converts it into a different kind of iterator. Let's call this maybe I slice iterator.

01:12:42.940 | And so you can see here, what it did was it jumped.

01:12:53.340 | Stop here. So that's what had been better. So I should query,

01:13:03.180 | create the iterator and then call next a few times. Sorry. This is what I went to do.

01:13:11.340 | It's now only returning the first five before it calls stop iteration before it raises stop

01:13:17.100 | iteration. So what I slice does is it grabs the first N things from an iterable,

01:13:24.620 | something that you can iterate. Why is that interesting?

01:13:33.340 | Because I can pass it to list for example. Right. And now if I pass it to list again,

01:13:42.540 | this iterator has now grabbed the first five things. So it's now up to thing number six.

01:13:48.620 | So if I call it again, it's the next five things. And if I call it again, then there's nothing left.

01:13:59.180 | And maybe you can see we've actually now got this defined, but we can do it with I slice.

01:14:06.940 | And here's how we can do it. It's actually pretty tricky.

01:14:10.460 | It in Python, you can pass it something like a list to create an iterator,

01:14:19.180 | or you can pass it. Now this is a really important word. A callable. What's a callable?

01:14:25.260 | A callable is generally speaking, it's a function. It's something that you can put parentheses after.

01:14:31.660 | Could even be a class. Anything you can put parentheses after,

01:14:38.780 | you can just think of it for now as a function. So we're going to pass it a function.

01:14:42.300 | And in the second form, it's going to be called until the function returns

01:14:50.620 | this value here, which in this case is empty list. And we just saw that I slice will return empty

01:14:55.500 | list when it's done. So this here is going to keep calling this function again and again and again.

01:15:07.260 | And we've seen exactly what happens because we've called it ourselves before.

01:15:12.300 | There it is. Until it gets an empty list. So if we do it with 28, then we're going to get

01:15:22.940 | our image again. So we've now got two different ways of creating exactly the same thing.

01:15:34.460 | And if you've never used iterators before, now's a good time to pause the video and play with them,

01:15:43.340 | right? So for example, you could take this here, right? And if you've not seen lambdas before,

01:15:49.500 | they're exactly the same as functions, but you can define them in line. So let's replace that

01:15:53.900 | with a function. Okay, so now I've turned it into a function and then you can experiment with it.

01:16:03.900 | So let's create our iterator

01:16:07.900 | and call f on it. Well, not on it, call f. And you can see there's the first 28.

01:16:19.900 | And each time I do it, I'm getting another 28. Now the first two rows are all empty, but finally,

01:16:27.180 | look, now I've got some values. Call it again. See how each time I'm getting something else.

01:16:32.380 | Just calling it again and again. And that is the values in our iterator. So that gives you a sense

01:16:39.420 | of like how you can use Jupyter to experiment. So what you should do is as soon as you hit

01:16:48.220 | something in my code that doesn't look familiar to you, I recommend pausing the video and experimenting

01:16:57.100 | with that in Jupyter. And for example, itter, most people probably have not used itter at all,

01:17:05.660 | and certainly very few people have used this to argument form. So hit shift tab a few times,

01:17:10.540 | and now you've got at the bottom, there's a description of what it is. Or find out more.

01:17:18.380 | Python itter. Here we are, go to the docs. Well, that's not the right bit of the docs.

01:17:31.020 | See API? Wow, crazy. That's terrible. Let's try searching here. There we go. That's more like it.

01:17:52.060 | So now you've got links. So if it's like, okay, it returns an iterator object, what's that?

01:17:56.620 | Well, click on it. Find out. Now this is really important to know. And here's that stop exception

01:18:01.020 | that we saw. So stop iteration exception. We saw next already. We can find out what iterable is.

01:18:07.900 | And here's an example. And as you can see, it's using exactly the same approach that we did,

01:18:15.740 | but here it's being used to read from a file. This is really cool. Here's how to read from a file.

01:18:22.060 | 64 bytes at a time until you get nothing processing it, right? So the docs of Python are

01:18:29.420 | quite fantastic. As long as you use them, if you don't use them, they're not very useful at all.

01:18:37.500 | And I see Seifer in the comments, our local Haskell programmer,

01:18:47.900 | appreciating this Haskellness in Python. That's good. It's not quite Haskell, I'm afraid,

01:18:53.660 | but it's the closest we're going to come. All right. How are we going for time? Pretty good.

01:19:01.980 | Okay. So now that we've got image, which is a list of lists and each list is 25 long,

01:19:15.500 | we can index into it. So we can say image 20. Well, let's do it. Image 20.

01:19:22.380 | Okay. Is a list of 28 numbers. And then we could index into that.

01:19:32.860 | Okay. So we can index into it. Now, normally, we don't like to do that for matrices. We would

01:19:43.340 | normally rather write it like this. Okay. So that means we're going to have to create our own class

01:19:51.100 | to make that work. So to create a class in Python, you write class, and then you write the name of it.

01:19:59.900 | And then you write some really weird things. The weird things you write have two underscores,

01:20:08.380 | a special word, and then two underscores. These things with two underscores on each side are

01:20:13.980 | called dunder methods, and they're all the special magically named methods which have particular

01:20:20.700 | meanings to Python. And you're just going to learn them, but they're all documented in the Python

01:20:27.340 | object model. Edit object model. Yay, finally. Okay. So it's called data model, not object model.

01:20:42.140 | And so this is basically where all the documentation is about absolutely everything,

01:20:48.620 | and I can click under edit, and it tells you basically this is the thing that constructs

01:20:53.580 | objects. So any time you want to create a class that you want to construct, it's going to store

01:21:02.300 | some stuff. So in this case, it's going to store our image. You have to define dunder in it.

01:21:07.900 | Python's slightly weird in that every method, you have to put self here. For reasons we probably

01:21:17.660 | don't really need to get into right now. And then any parameters. So we're going to be creating an

01:21:22.860 | image passing in the thing to store, the Xs. They're going to be passing in the Xs. And so here we're

01:21:29.580 | just going to store it inside the self. So once I've got this line of code, I've now got something

01:21:35.340 | that knows how to store stuff, the Xs inside itself. So now I want to be able to call square bracket

01:21:43.420 | 20 comma 15. So how do we do that? Well, basically part of the data model is that there's a special

01:21:53.660 | thing called dunder get item. And when you call square brackets on your object, that's what Python

01:21:59.820 | uses. And it's going to pass across the 20 comma 15 here as indices. So we're now basically just

01:22:11.340 | going to return this. So the self.Xs with the first index and the second index. So let's create that

01:22:20.620 | matrix class and run that. And you can now see M 20 comma 15 is the same. Oh, quick note on, you know,

01:22:30.060 | ways in which my code is different to everybody else's, which it is. It's somewhat unusual to put

01:22:37.100 | definitions of methods on the same line as as the the signature like this. I do it quite a lot for

01:22:47.580 | one-liners. As I kind of mentioned before, I find it really helps me to be able to see all the code

01:22:53.580 | I'm working with on the screen at once. A lot of the world's best programmers actually have

01:23:00.060 | had that approach as well. It seems to work quite well for some people that are extremely productive.

01:23:05.180 | It's not common in Python. Some people are quite against it. So if you're at work, and your

01:23:13.260 | colleagues don't write Python this way, you probably shouldn't either. But if you can get away with it,

01:23:18.940 | I think it works quite well. Anyway, okay, so now that we've created something that lets us index

01:23:23.420 | into things like this, we're allowed to use PyTorch because we're allowed to use this one feature in

01:23:29.660 | PyTorch. Okay, so we can now do that. And so now to create a tensor, which is basically a lot like

01:23:42.700 | our matrix, we can now pass a list into tensor to get back a tensor version of that list, or perhaps

01:23:53.180 | more interestingly, we could pass in a list of lists. Maybe let's give this a name.

01:24:04.540 | Whoopsie dozy. That needs to be a list of lists, just like we had before for our image.

01:24:18.380 | In fact, let's do it for our image. Let's just pass in our image. There we go. And so now we

01:24:27.980 | should be able to say tens 20 comma 15. And there we go. Okay, so we've successfully reinvented that.

01:24:43.020 | All right. So now we can convert all of our lists into tenses. There's a convenient

01:24:55.980 | way to do this, which is to use the map function in the Python standard library.

01:25:02.220 | So it takes a function and then some iterables. In this case, one iterable. And it's going to

01:25:14.060 | apply this function to each of these four things and return those four things. And so then I can

01:25:19.900 | put four things on the left to receive those four things. So this is going to call tensor x_train

01:25:26.140 | and put it in x_train. Tensor y_train, put it in y_train, and so forth. So this is converting

01:25:30.780 | all of these lists to tenses and storing them back in the same name. So you can see that

01:25:37.580 | x_train now is a tensor. So that means it has a shape property. It has 50,000 images in it,

01:25:46.860 | which are each 784 long. And you can find out what kind of stuff it contains by calling its .type.

01:25:57.180 | So it contains floats. So this is the tensor class. We'll be using a lot of it.

01:26:02.620 | So of course, you should read its documentation.

01:26:05.420 | I don't love the PyTorch documentation. Some of it's good. Some of it's not good.

01:26:12.860 | It's a bit all over the place. So here's tensor. But it's well worth scrolling through to get a

01:26:18.060 | sense of like, this is actually not bad, right? It tells you how you can construct it. This is how I

01:26:21.820 | constructed one before, passing it lists of lists. You can also pass it NumPy arrays.

01:26:27.660 | You can change types. So on and so forth. So, you know, it's well worth reading through. And like,

01:26:39.980 | you're not going to look at every single method it takes, but you're kind of, if you browse through

01:26:44.060 | it, you'll get a general sense, right? That tensors do just about everything you couldn't think of

01:26:51.340 | for a numeric programming. At some point, you will want to know every single one of these,

01:26:57.820 | or at least be aware roughly what exists. So you know what to search for in the docs.

01:27:03.980 | Otherwise you will end up recreating stuff from scratch, which is much, much slower

01:27:09.180 | than simply reading the documentation to find out it's there.

01:27:14.300 | All right. So instead of, instead of calling chunks or I slice, the thing that is roughly

01:27:21.340 | equivalent in a tensor is the reshape method. So reshape, so to reshape our 50,000 by 784 thing,

01:27:30.380 | we can simply, we want to turn it into 50,000 28 by 28 tensors. So I could write here,