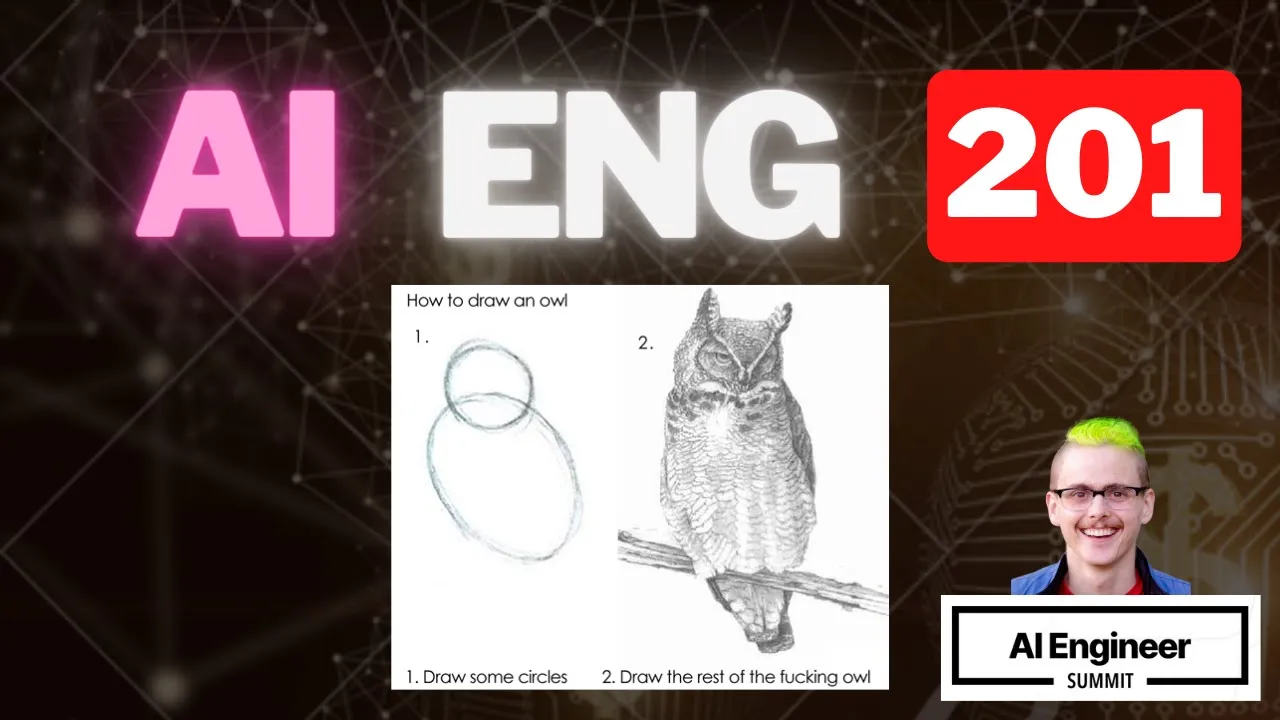

AI Engineering 201: The Rest of the Owl

Chapters

0:00 Intro

1:09 Patterns for Language User Interfaces

6:19 RAG: Information Retrieval for Generation

21:52 Function Calling: Structured Outputs and Tool Use

35:03 Agents and Cognitive Architectures

40:52 Shipping to Learn in ML + AI

45:42 LLM Monitoring and Observability Tools

49:42 Evaluating LLMs

55:48 Inspirational Outro

00:00:00.000 | A lot of effort has gone into the engineering of inference, and not so much

00:00:16.960 | effort, and not so much success, has gone into engineering the rest of the whole product

00:00:23.440 | around inference that actually delivers value. Much like the two beautiful mathematical

00:00:32.400 | solids here, inference provides the bones, the interior, but not the whole thing.

00:00:37.120 | So let's talk about a couple of architectures and patterns for uses of language models, and then

00:00:44.480 | talk about the first attempts at making these things better over time

00:00:50.400 | with monitoring, observability, and evaluation. So, architectures and patterns. The

00:00:57.840 | ferment and excitement around this stuff has been going for about a year, and so patterns are starting,

00:01:04.160 | very slowly, to emerge: typical ways you might apply these things. So let's talk about

00:01:09.840 | them and what problems have arisen. My favorite way of thinking about this in general is that the

00:01:17.760 | things we're building right now are language user interfaces, or LUIs, by analogy to

00:01:23.920 | GUIs, or graphical user interfaces. First they're hitting existing features; soon they'll be for

00:01:31.360 | completely new products. In ancient times, in the 1970s, the interface for computers was primarily

00:01:40.480 | textual, in a terminal. This is still the way we interact with machines when we really want to

00:01:45.360 | control them, like when we're running a server or when we're frustrated with VS Code.

00:01:51.120 | This was the user interface for computers for a while, and computers were not very popular until the

00:01:59.680 | invention of the graphical user interface. Instead of telling users,

00:02:04.800 | "You have to learn this special language to speak to me," it offers a sensory experience where you

00:02:11.280 | can bring your spatial intuition and your visual system to understand how to use the machine.

00:02:18.320 | This was what took computers out of the hobbyist, business, and military realm and into

00:02:25.680 | people's homes. With the rise of language models, it's clear that we have an opportunity to once again change the interface between humans and machines.

00:02:32.400 | We tell them what we want in natural language, and then they do it for us. And no less august a personage than Sam Altman likes the idea of a language interface.

00:02:53.520 | This has a similar character to graphical user interfaces: it makes the machine more approachable.

00:03:01.520 | People have wanted this for a long time, going as far back as the ELIZA chatbot

00:03:07.200 | from the 1960s. There was also SHRDLU. It was only graphical, not an actual robot, but you could give a simulated robot language instructions like "pick up a big red block."

00:03:27.360 | Ask Jeeves was originally presented as a language interface to the internet where you just type what you want instead of a URL.

00:03:34.400 | Alexa and other assistants have attempted to do a similar thing.

00:03:38.960 | And the big win here is that, with language models, we might believe we can actually do a really,

00:03:45.040 | really good job at providing this kind of language interface in a very generic way with foundation

00:03:49.920 | models, and not just in a tiny environment like ELIZA's psychotherapy setting or SHRDLU's blocks world.

00:03:59.760 | So right now we're getting language user interfaces for existing systems that admit them easily.

00:04:04.960 | Sequoia put out a piece fairly recently arguing that this "Act Two" of generative AI is about using foundation models as a piece of a more comprehensive solution rather than as the entire solution,

00:04:21.600 | one that offers a language interface where one wasn't possible before.

00:04:25.360 | For example, this query assistant from Honeycomb takes what would normally be a less approachable query-language constructor and lets you just ask, "Can you show me slow requests?"

00:04:37.120 | "What are my errors?" "What's the latency distribution by status code?" That's a much friendlier interface.

00:04:43.440 | Even SQL, when it was originally presented, was pitched as a language so natural that even a businessman could write queries.

00:04:52.960 | That was a dream, but "can you show me slow requests" is pretty close.

00:04:58.080 | So it's understandable that this is the first direction things have gone. Longer term, though,

00:05:07.440 | things change more: machines that have graphical interfaces look very different from ones that have terminal interfaces.

00:05:14.560 | Mainframes became less popular, and mobile is quite different from desktop computing.

00:05:21.920 | So we should expect the same here. If you're thinking about what you want to build in five or ten years, this is the direction to think in.

00:05:32.960 | For example, Google has worked on integrating language models with robots, like this example from the SayCan project and paper. When I need something, I want to just ask for it. I don't want to pull out an app, go through three drop-down menus, and select "I want a water bottle."

00:05:55.280 | I just want to say "I want a water bottle," and then there's a water bottle.

00:05:58.640 | That's what a language interface to something like a robotics platform can provide.

00:06:04.560 | It's not there yet, as the 4x speed in the top left might suggest, but it's getting there.

00:06:13.520 | Okay, so that's the highest-level pattern, I think.

00:06:18.240 | Let's talk about a couple of lower-level patterns.

00:06:21.760 | RAG chatbots, retrieval-augmented generation chatbots,

00:06:25.440 | have emerged as something like the to-do list app:

00:06:28.160 | the starter project of language user interfaces.

00:06:31.600 | This pattern is probably here to stay, because it's just about information retrieval for language models.

00:06:39.360 | And language models need information retrieval really badly because they lack context.

00:06:44.400 | They've slurped up everything on the internet, but they don't know anything about you.

00:06:48.480 | They are trying to simulate a generically helpful individual who is generically knowledgeable

00:06:54.160 | about the world,

00:06:54.880 | and that's not particularly helpful until they have context.

00:06:59.600 | So the solution that's emerged is to collect that context for them: store it,

00:07:04.240 | then index it.

00:07:06.640 | By default, people reached for the thing most similar to what the language model was already doing:

00:07:11.680 | turn the context into vectors

00:07:14.400 | and use a fast index over those vectors.

00:07:16.080 | Then, once you've retrieved a particular piece of information, you just stuff it into the prompt.
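The basic loop described above (store the context, index it, retrieve by similarity, stuff the hits into the prompt) fits in a few lines of Python. This is a minimal sketch with loud assumptions. `toy_embed` is a hypothetical stand-in for a real embedding model: it builds a hashed bag-of-words vector. `ToyVectorIndex` scans every stored vector, so it is not how a real vector database indexes data. The documents are made up.

```python
import math
import re
import zlib
from collections import Counter
from typing import List, Tuple

DIM = 1024  # size of the toy embedding space (an illustrative assumption)


def toy_embed(text: str) -> List[float]:
    """Hypothetical stand-in for an embedding model: a unit-length bag-of-words vector.

    Each word is hashed (deterministically, via CRC32) into one of DIM buckets.
    """
    vec = [0.0] * DIM
    for word, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class ToyVectorIndex:
    """Brute-force 'index': store (vector, text) pairs and scan them all per query."""

    def __init__(self) -> None:
        self.items: List[Tuple[List[float], str]] = []

    def add(self, text: str) -> None:
        self.items.append((toy_embed(text), text))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = toy_embed(query)
        # Vectors are unit length, so the dot product is the cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), text) for v, text in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


def build_prompt(question: str, context: List[str]) -> str:
    """'Stuff' the retrieved snippets into the prompt ahead of the question."""
    snippets = "\n".join(f"- {c}" for c in context)
    return f"Answer using this context:\n{snippets}\n\nQuestion: {question}"


index = ToyVectorIndex()
for doc in [
    "Lecture 3 covers prompt engineering and few-shot examples.",
    "Lecture 5 covers deploying models behind an API.",
    "Our Discord is where students ask questions.",
]:
    index.add(doc)

hits = index.search("which lecture covers prompt engineering?", k=1)
print(build_prompt("Which lecture covers prompt engineering?", hits))
```

To move beyond the sketch, swap `toy_embed` for a real embedding call and the linear scan for a real index. The prompt-stuffing step stays the same.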

00:07:23.840 | Now, I am not innocent.

00:07:25.840 | I have made my own RAG chatbot and inflicted it on the world.

00:07:30.400 | It was based on the Full Stack Deep Learning content.

00:07:33.360 | In our Discord, people can ask questions and get answers that are not just generic, Google

00:07:39.760 | search-style answers about language models, but things drawn from past lectures,

00:07:44.080 | things drawn from papers that I like,

00:07:45.760 | things drawn from our website.

00:07:49.520 | So they can get our opinions on these things.

00:07:53.600 | This has led to a lot of excitement about vector storage,

00:07:58.880 | because this step here, where you get fast retrieval of vectors by similarity,

00:08:06.720 | is the new, sexy piece.

00:08:09.280 | But really, it was just the thing people reached for first,

00:08:13.120 | because OpenAI also offers embeddings.

00:08:15.600 | You've already imported the library,

00:08:17.520 | so it's only a call away.

00:08:18.720 | Also, transformers are kind of like these weird vector retrieval things

00:08:23.680 | on the inside.

00:08:25.600 | So if you are the type of person who's been into language models for a while

00:08:28.640 | and you ask, "How would I retrieve information?",

00:08:30.800 | the answer is probably: with a dot product,

00:08:32.400 | then a softmax,

00:08:33.680 | and then I pick the largest number.

00:08:34.960 | So, yeah.
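The "dot product, then softmax, then pick the largest number" recipe is attention-style retrieval. The small sketch below uses made-up two-dimensional vectors. It also shows that the softmax never changes which item wins. Softmax is monotonic, so the argmax of the weights equals the argmax of the raw scores. Inside a transformer, the softmax weights are used to average the values instead, which is a "soft" version of the same lookup.

```python
import math
from typing import List


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def retrieve(query: List[float], keys: List[List[float]]) -> int:
    """Score every key with a dot product, softmax the scores, return the top index."""
    weights = softmax([dot(query, k) for k in keys])
    return max(range(len(keys)), key=lambda i: weights[i])


keys = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]  # made-up key vectors
query = [0.5, 0.9]
print(retrieve(query, keys))  # 1  (scores are 0.5, 1.02, 0.9)
```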

00:08:38.320 | The ease of setting this up

00:08:40.720 | and the naturalness of setting this up

00:08:42.480 | have led to an explosion of these chat-with-your-documents examples.

00:08:46.720 | The part that has more staying power is that to make these things useful,

00:08:54.240 | you need context.

00:08:55.120 | And so you need information retrieval and search for the context that might be helpful to

00:09:02.240 | the model before it gets going.

00:09:03.600 | There are many options to use here.

00:09:07.840 | Some of them are specialized vector databases like Pinecone or Chroma.

00:09:12.480 | Some of them are general text search databases that do keyword search, Elasticsearch-style

00:09:21.920 | things.

00:09:24.800 | Being able to combine those two things is very powerful.

00:09:32.160 | For example, Vespa has offered that combination for a very long time.
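Reciprocal rank fusion (RRF) is one common, generic way to combine keyword and vector results. The sketch below does not show how Vespa or any particular database implements hybrid search. The constant `k = 60` follows common practice, and the document IDs are made up. Each retriever returns a ranked list. A document earns `1 / (k + rank)` from every list it appears in, so items that either retriever ranks highly move up.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one fused ranking."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):  # ranks start at 1
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


keyword_hits = ["doc_b", "doc_a", "doc_d"]  # e.g. from an Elasticsearch-style keyword query
vector_hits = ["doc_a", "doc_c", "doc_b"]   # e.g. from an embedding similarity search
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# ['doc_a', 'doc_b', 'doc_c', 'doc_d']
```

RRF only uses rank positions, so you never have to calibrate keyword scores (such as BM25) against cosine similarities.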

00:09:36.240 | In the end, what you're doing is creating a fast way to look up information from a

00:09:44.320 | very large store.

00:09:45.200 | That's bread and butter for databases in general.

00:09:48.160 | Redis and Postgres, for example, provide the same kind of information retrieval

00:09:55.760 | you could use to enrich your prompts without thinking about vectors at all.

00:10:04.000 | They also have built-in vector search:

00:10:05.920 | Postgres only fairly recently, Redis for about a year.

00:10:11.840 | It's not particularly fun to use Redis vector search, but it runs and has decent performance.

00:10:22.880 | In the end, it's about a holistic strategy. Because the queries

00:10:30.000 | are fairly heterogeneous (the things coming in are just people typing text),

00:10:33.600 | you're probably going to need some more ML-ish machinery, like keyword search and vector search

00:10:40.240 | combined into a hybrid. But extracting metadata with a language model, so that you can then use it

00:10:47.440 | for direct filtering, is also a very powerful pattern. There are some great posts on this.

00:10:54.800 | The Data Quarry has a great series of posts about vector databases by somebody who's

00:11:01.520 | clearly really into databases and not so much the ML side, and I found it very useful.

00:11:06.560 | So the final takeaway is that the problems end up being, in the main, the problems of

00:11:18.320 | information retrieval, with some light additions from recommendation systems, maybe,

00:11:25.680 | or from more ML-ish kinds of search. Any questions on vector databases or

00:11:34.880 | information retrieval for language model applications?

00:11:38.080 | Yeah. So you get information from the outside world, you come up with a strategy

00:11:59.680 | for searching the information you've saved, and the results go into the language model's prompt. Yes,

00:12:04.240 | that pattern is very stable and very general. It's general enough that the ways that

00:12:09.600 | pattern actually gets implemented are very broad. They include a lot of existing,

00:12:15.360 | bread-and-butter database stuff, not just the fancy new vector database stuff.

00:12:20.800 | So the question was: how does retrieval-augmented generation differ from the history within a chat?

00:12:48.320 | When you call the GPT-4 API, you can

00:12:55.040 | make up whatever you want as the past. You could insert little messages from the user. You could insert

00:12:59.520 | messages from the assistant and incept it into believing that it has said something which it has

00:13:03.920 | not said. It's a great way to jailbreak. Obviously, don't do it, because it violates the terms of service,

00:13:07.840 | but it's a great way to jailbreak it. So you aren't actually beholden to the

00:13:16.000 | system-assistant-user fiction that plays out inside a discrete

00:13:25.040 | chat. When people do retrieval, I think a lot of them put the retrieved information in the system

00:13:33.760 | prompt, especially if they're only going to retrieve once. I've also seen people

00:13:39.120 | do a retrieval step every time the user interacts, so the system message changes every time.

00:13:44.240 | That's an example of incepting, of not actually following the implied temporal order.
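Here is one way to re-run retrieval on every turn and rebuild the system message from scratch, as just described. The `role`/`content` dictionaries follow the message shape that OpenAI's Chat Completions API uses, but this minimal sketch leaves out the API call itself. `fake_retrieve` is a hypothetical placeholder for whatever search you actually run. `history` holds only the user and assistant turns, so the previous system message is simply discarded.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def build_messages(
    history: List[Message],
    user_input: str,
    retrieve: Callable[[str], List[str]],
) -> List[Message]:
    """Rebuild the full message list for one turn.

    The system message is regenerated from a fresh retrieval on every turn,
    so it need not match what the model "saw" on earlier turns.
    """
    context = "\n".join(retrieve(user_input))
    system: Message = {
        "role": "system",
        "content": f"You are a helpful assistant.\nRelevant context:\n{context}",
    }
    return [system, *history, {"role": "user", "content": user_input}]


def fake_retrieve(query: str) -> List[str]:
    # Hypothetical retriever; a real one might run a vector or keyword search.
    return [f"(snippet retrieved for: {query})"]


history: List[Message] = [
    {"role": "user", "content": "What's RAG?"},
    {"role": "assistant", "content": "Retrieval-augmented generation."},
]
msgs = build_messages(history, "Who coined the term?", fake_retrieve)
for m in msgs:
    print(m["role"], "|", m["content"].splitlines()[0])
```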

00:13:48.720 | So yes, you definitely can do that. The system message is nice because the model really

00:13:56.880 | pays close attention to it; it has been fine-tuned to pay close attention to it. I think it'd be

00:14:03.520 | weird to pretend the retrieved information is something the person said and put it in an earlier user message

00:14:08.480 | above the user's message in the conversation. I don't think I've ever seen that, but you could.

00:14:12.560 | But I would say that most of the time, this information retrieval step

00:14:20.160 | is where the creator of the application, the programmer, inserts themselves and says,

00:14:26.240 | "I know some additional information that the language model should have." And so

00:14:33.200 | it's very different from a user just providing information about themselves or

00:14:40.640 | whatever. Yeah? The question: we heard a couple of times today that when it comes to knowledge

00:14:49.840 | retrieval, RAG is a great pattern, and fine-tuning is really more about the format, the style of the output.

00:15:02.560 | Yeah. So the statement was that the common wisdom is that fine-tuning is for style and retrieval is for

00:15:32.400 | information. I think that is a solid piece of common wisdom, because of how most

00:15:38.240 | fine-tuning is done. If you're fine-tuning OpenAI's model, you're going through their fine-tuning API,

00:15:42.480 | and you have a limit on the number of rows you can send. Yeah, 10,000, I was going to say.

00:15:47.600 | So you have a limited number of rows you can send, and there's a limited amount of information

00:15:51.600 | in there to create gradients to update the weights. So there's a limited amount of change that

00:15:55.520 | you can achieve. And if you look at the LoRA paper,

00:16:02.240 | you're making a very low-rank change to each layer of the language model.

00:16:07.360 | That suggests there's only so much you can change about the model.
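Some arithmetic makes "a very low-rank change" concrete. LoRA freezes a weight matrix W and learns an update B·A, where B is d_out × r and A is r × d_in. The sketch below counts trainable parameters for an illustrative 4096 × 4096 layer with r = 8. These dimensions were chosen for the arithmetic and are not taken from any specific model. It then applies a rank-1 update by hand. The LoRA paper also scales the update by α/r. Here that factor is a plain `scale` argument.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product; the inner dimension is len(y)."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def full_update_params(d_out: int, d_in: int) -> int:
    """Trainable parameters if you update a d_out x d_in weight matrix directly."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters for B (d_out x r) plus A (r x d_in)."""
    return d_out * r + r * d_in


def lora_effective_weight(w: Matrix, b: Matrix, a: Matrix, scale: float = 1.0) -> Matrix:
    """W' = W + scale * (B @ A); the added term has rank at most r."""
    delta = matmul(b, a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


print(full_update_params(4096, 4096))     # 16777216
print(lora_update_params(4096, 4096, 8))  # 65536, i.e. 256x fewer

# Rank-1 example: every row of the update is a multiple of the same row vector.
print(lora_effective_weight([[0.0, 0.0], [0.0, 0.0]], [[1.0], [2.0]], [[3.0, 4.0]]))
# [[3.0, 4.0], [6.0, 8.0]]
```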

00:16:12.800 | Most of what you see when you do LoRA fine-tunes is that what used to be a low-priority

00:16:19.760 | computation for the model becomes a higher-priority one. Every capable

00:16:24.480 | language model has within it a little Homer Simpson simulator, a little Rick Sanchez simulator, whatever.

00:16:30.080 | That's usually not that important for the final log probs. It helps

00:16:36.320 | with the fifth bit of the log probs, but the models are at the point where they're

00:16:41.520 | minimizing cross-entropy by really hitting those very rare things.

00:16:46.880 | What the fine-tune has done is reorder those computations: actually,

00:16:55.440 | the Homer Simpson circuit is the most critical circuit right now, because you are a Homer Simpson

00:17:00.080 | chatbot. It's reordering them and re-emphasizing them. So that intuition applies

00:17:06.000 | specifically to low-rank fine-tuning, and to fine-tuning based on small amounts of data.

00:17:11.920 | If you grabbed a hundred gigabytes of textbooks, you would no longer really be doing fine-tuning in that sense,

00:17:17.280 | so you would no longer expect it to only change style. I would expect

00:17:23.840 | people to be doing that with Llama fine-tunes. There are Llama fine-tunes for coding,

00:17:27.920 | and that's way more than just style. Those have definitely learned more about programming

00:17:33.120 | languages and about libraries released after 2021, all that kind of stuff.

00:17:38.480 | So I think that generic wisdom is conditionally true: for low-rank fine-tunes, it is

00:17:46.160 | pretty rock solid.

00:17:58.160 | So the question was, what about knowledge graphs and graph databases? I will say that

00:18:04.400 | I personally don't really specialize in databases, but when

00:18:11.440 | I've talked to people who are super into them, they say, "I would never use a graph database, because you

00:18:17.040 | can represent a graph in Postgres." And I've seen some reasonably sized deployments on that

00:18:23.520 | pattern. You can also see graph databases kind of peaking and

00:18:30.240 | not spreading further. One reason is that sharding a graph database is a very hard problem,

00:18:34.880 | because there's no obvious way to cut an arbitrary graph. And when new links get added to the graph,

00:18:41.040 | the optimal sharding changes. That's

00:18:45.440 | equivalent to a database migration, but it has to happen behind the

00:18:48.720 | scenes as part of sharding. That's the closest thing I have to an objective statement

00:18:53.920 | about why graph databases haven't worked well. However, for many language

00:19:00.720 | model applications, the purpose of the database is not to serve a billion users, but rather to

00:19:06.160 | serve as an external memory for a language model. Maybe you don't care whether it scales, or

00:19:11.120 | rather, maybe the maximum scale we're talking about is tens of thousands or hundreds of

00:19:16.400 | thousands of requests per second on megabytes or gigabytes of data. At

00:19:22.000 | that point, the question "can it be sharded across 1,024 machines?" doesn't matter.

00:19:28.880 | So there is some cool work on knowledge graphs and on incorporating them with LLMs. I see the natural

00:19:35.440 | fit there, in the same way that there's a natural fit with vector indices and vector databases. But

00:19:44.000 | from my perspective, no killer app has appeared.
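The "just represent the graph in Postgres" approach usually means an edge table plus a recursive query. This sketch uses Python's built-in `sqlite3` as a stand-in so it runs without a server. PostgreSQL also supports `WITH RECURSIVE`, though drivers such as psycopg use `%s` placeholders instead of `?`. The table layout, relation names, and node names are illustrative assumptions. The query finds everything reachable from one node within a hop limit.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE nodes (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE edges (
        src INTEGER NOT NULL REFERENCES nodes(id),
        dst INTEGER NOT NULL REFERENCES nodes(id),
        relation TEXT NOT NULL
    );
    CREATE INDEX edges_src ON edges(src);  -- speeds up following outgoing edges
    """
)
conn.executemany(
    "INSERT INTO nodes VALUES (?, ?)",
    [(1, "LoRA"), (2, "fine-tuning"), (3, "transformers"), (4, "attention")],
)
conn.executemany(
    "INSERT INTO edges VALUES (?, ?, ?)",
    [(1, 2, "is_a"), (2, 3, "applies_to"), (3, 4, "uses")],
)

# Walk outgoing edges from a start node, tracking hop count, up to a depth limit.
rows = conn.execute(
    """
    WITH RECURSIVE reachable(id, depth) AS (
        SELECT id, 0 FROM nodes WHERE name = ?
        UNION
        SELECT e.dst, r.depth + 1
        FROM edges e JOIN reachable r ON e.src = r.id
        WHERE r.depth < ?
    )
    SELECT n.name, r.depth FROM reachable r JOIN nodes n ON n.id = r.id
    ORDER BY r.depth
    """,
    ("LoRA", 3),
).fetchall()
print(rows)
# [('LoRA', 0), ('fine-tuning', 1), ('transformers', 2), ('attention', 3)]
```

The depth limit also guarantees that the recursion terminates if the graph has cycles.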

00:19:47.600 | So I asked this question in the first talk, but basically: if you have

00:19:54.080 | extra context plus hard metadata, like boolean values or other things,

00:20:00.080 | how would you incorporate it? I think the previous answer was to apply hard filters

00:20:06.000 | on the hard metadata and then do the similarity-based search on the technical

00:20:13.760 | description. Yeah. So the question was about how to combine hard metadata, like

00:20:21.680 | booleans or subcategories, with vector-based search. Depending on the

00:20:28.480 | vector database, and depending on the index, you will have either pre-filtering

00:20:37.200 | or post-filtering. Post-filtering is pretty easy: you just apply a metadata filter after

00:20:41.600 | you've done your vector search. Anybody can do that. The problem is that

00:20:46.720 | what you really want to say is "find all the restaurants in San Francisco that are similar to crabs,"

00:20:52.400 | not "find all the restaurants that have anything to do with crabs

00:20:56.560 | and then see if any are in San Francisco." The pre-filtering step is hard because it affects the

00:21:01.280 | construction of the index, which is what makes it actually fast

00:21:07.440 | to search over all of the data. You need to construct specific indices for the different

00:21:13.200 | flags you might put on, like in San Francisco versus not in San Francisco, or geographic

00:21:19.360 | location. Different vector databases, or different databases generally, have pushed

00:21:26.560 | further in different directions on what kinds of filters they support for pre-filtering.

00:21:33.760 | Vespa and Weaviate have a reputation for doing a really good job at those things, but

00:21:38.960 | I don't know what the full landscape looks like.
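A small brute-force sketch of the crabs-in-San-Francisco problem shows why post-filtering can come back empty while pre-filtering finds the right answer. The two-dimensional vectors and restaurant names are made up. Real vector databases use approximate indexes, and that is where pre-filtering gets hard. Here both strategies are a plain scan, so only the difference in results is on display.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Restaurant:
    name: str
    city: str
    vec: List[float]  # made-up 2-D embedding: [crab-ness, taco-ness]


Pred = Callable[[Restaurant], bool]


def score(q: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(q, v))


def post_filter_search(docs: List[Restaurant], q: List[float], pred: Pred, k: int) -> List[str]:
    """Take the top-k by similarity over everything, then drop items failing the filter."""
    top = sorted(docs, key=lambda d: score(q, d.vec), reverse=True)[:k]
    return [d.name for d in top if pred(d)]


def pre_filter_search(docs: List[Restaurant], q: List[float], pred: Pred, k: int) -> List[str]:
    """Apply the metadata filter first, then take the top-k by similarity among survivors."""
    survivors = [d for d in docs if pred(d)]
    return [d.name for d in sorted(survivors, key=lambda d: score(q, d.vec), reverse=True)[:k]]


restaurants = [
    Restaurant("Crab Shack", "Seattle", [0.95, 0.1]),
    Restaurant("Crab Palace", "Boston", [0.9, 0.2]),
    Restaurant("Dungeness Diner", "San Francisco", [0.7, 0.3]),
    Restaurant("Taqueria", "San Francisco", [0.1, 0.9]),
]
crabs = [1.0, 0.0]


def in_sf(d: Restaurant) -> bool:
    return d.city == "San Francisco"


print(post_filter_search(restaurants, crabs, in_sf, k=2))  # []
print(pre_filter_search(restaurants, crabs, in_sf, k=2))   # ['Dungeness Diner', 'Taqueria']
```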

00:21:42.160 | Great. Okay, I want to make sure to get through everything, so I'll stick around and we can

00:21:50.880 | talk throughout the conference. Okay. Structured outputs are one of the patterns

00:21:58.320 | that I think people are sleeping on, relative to information retrieval. Structured outputs are

00:22:03.520 | great for improving the robustness of models, and they came out of tool use. The problem is that

00:22:08.000 | language models just generate text. If anything, we have too much text already.

00:22:13.920 | I don't know if you've ever been on a social media website, but the problem is not the quantity of text.

00:22:19.040 | And that's kind of boring. Who wants to just make strings? There are other things that

00:22:23.600 | we want to do. The solution is to connect their text outputs to other systems' inputs. And now

00:22:29.280 | it's not just a language model; it's a cognitive engine for providing a language interface

00:22:33.840 | to something else. That's pretty rad, but there's a problem: language models generate unstructured

00:22:39.360 | text, because they have been trained on the utterances of humans on the internet, who are notorious for their

00:22:44.480 | unstructuredness. So the solution is to add structure to their outputs, and there are many ways to

00:22:49.600 | do this. You can do it by prompting and begging. You can write

00:22:55.280 | some loops around the model; ReAct, for instance, is a prompt plus

00:23:00.880 | some looping. You can write a prompt in such a way that it has examples that

00:23:06.160 | encourage the model to call out to external APIs, and then you filter. When it

00:23:16.160 | generates the tokens that would call an external API, instead of letting it hallucinate

00:23:20.400 | the rest of what would come out of that API, which is what GPT-3 would have done,

00:23:25.120 | you intercept that call, go to the external API, and

00:23:33.840 | pull the information from there. I guess the thing I really want to point

00:23:40.080 | out is that in those prompts, you can beg for structure. Riley Goodside had a great

00:23:44.640 | example along the lines of "if you do not output structured JSON, an orphan will die," and

00:23:49.520 | that actually is extremely effective. So there are prompting

00:23:57.920 | tricks to get outputs that are closer to structured and to make use of those

00:24:03.280 | structured outputs. There's also fine-tuning. Gorilla is an LLM fine-tuned on this

00:24:10.160 | problem, and that goes back to Toolformer, which was built on GPT-J, one of the first

00:24:17.120 | open generative pre-trained transformers. You just train the

00:24:24.480 | model to output structured stuff. You can't really do that with OpenAI's model, and I doubt that fine-tuning

00:24:30.320 | it would make it that much better at outputting the structure you want. But you can do it

00:24:35.040 | with open models, and people are releasing their own Llama forks

00:24:42.800 | with this fine-tuning on them. You can also retry. When the model outputs

00:24:48.240 | something that doesn't fit the schema, you can do what you do when your direct reports give you

00:24:53.440 | something that doesn't fit what you wanted: discipline them and ask them

00:24:57.760 | to try again. Guardrails is a great library for this. It's XML-based, so it

00:25:05.600 | would probably work pretty well with Claude, given what we heard about Claude from Karina.
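You can also hand-roll the retry pattern as a loop: parse the output, validate it, and if it fails, re-ask with the error message attached. This is not the Guardrails API. It is a minimal sketch with a hypothetical schema, where `label` must be `"spam"` or `"not_spam"` and `confidence` must be a number in [0, 1]. A scripted `fake_llm` stands in for a real model call.

```python
import json
from typing import Any, Callable, Dict, List, Optional


def validate(obj: Any) -> Optional[str]:
    """Return an error message if obj doesn't fit the hypothetical schema, else None."""
    if not isinstance(obj, dict):
        return "expected a JSON object"
    if obj.get("label") not in ("spam", "not_spam"):
        return "label must be 'spam' or 'not_spam'"
    conf = obj.get("confidence")
    if isinstance(conf, bool) or not isinstance(conf, (int, float)) or not 0 <= conf <= 1:
        return "confidence must be a number between 0 and 1"
    return None


def generate_with_retries(llm: Callable[[str], str], prompt: str, max_attempts: int = 3) -> Dict[str, Any]:
    """Call the model; on malformed output, re-ask with the error appended."""
    current_prompt = prompt
    for _ in range(max_attempts):
        raw = llm(current_prompt)
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError as exc:
            error = f"invalid JSON ({exc.msg})"
        else:
            error = validate(obj)
            if error is None:
                return obj
        # Like asking a direct report to try again: say what was wrong.
        current_prompt = f"{prompt}\n\nYour previous answer was rejected: {error}. Reply with JSON only."
    raise ValueError(f"no valid output after {max_attempts} attempts")


# Usage with a scripted stand-in for a model (hypothetical; no real API is called).
scripted = [
    "Sure! Here is the JSON you asked for.",
    '{"label": "maybe", "confidence": 0.5}',
    '{"label": "spam", "confidence": 0.97}',
]
prompts_seen: List[str] = []


def fake_llm(prompt: str) -> str:
    prompts_seen.append(prompt)
    return scripted.pop(0)


result = generate_with_retries(fake_llm, 'Classify as JSON: "WIN A FREE CRUISE!!!"')
print(result)  # {'label': 'spam', 'confidence': 0.97}
```

Because `llm` is just a callable, a retry can be routed to a different, cheaper model than the first attempt.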

00:25:12.480 | And then a fun one that requires control over the log probs is

00:25:20.480 | grammar-based sampling, which was merged into llama.cpp. When you're about to

00:25:28.640 | generate a token, if that token would violate some grammar, template, or format,

00:25:35.040 | you just set its probability to zero, that is, you add minus infinity to

00:25:41.440 | the log probs of those tokens. You can do this fast if you have

00:25:50.960 | nice Chomsky-hierarchy things like context-free grammars. This works well for

00:25:58.640 | generating JSON, generating code, generating all the kinds of structured

00:26:02.800 | outputs that our systems actually expect. We've written systems that expect inputs to follow

00:26:07.760 | grammars so that traditional computing systems can parse them, so adding that structure to the outputs of

00:26:13.120 | these models is a very powerful thing to do. This is a really nice

00:26:19.920 | example of how tight control over the log probs can increase the utility of a model to the

00:26:25.520 | point where a capabilities gap matters less.
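Here is grammar-based sampling in miniature. At each step, work out which tokens keep the output valid, add minus infinity to the logits of every other token, and pick from what survives. This is a toy sketch, not llama.cpp's implementation. The "grammar" is just the two strings `true` and `false`, tokens are single characters, and decoding is greedy. `toy_model` is a hypothetical model that would otherwise spell out `maybe`.

```python
import math
from typing import Callable, Dict, Set

EOS = "<eos>"
VOCAB = list("abcdefghijklmnopqrstuvwxyz") + [EOS]
# Toy "grammar": the output must be exactly one of these strings. Real grammar-based
# samplers handle context-free grammars; a finite set is the simplest case.
ALLOWED_OUTPUTS = ["true", "false"]


def allowed_next_tokens(prefix: str) -> Set[str]:
    """Tokens that keep `prefix` on track to become one of the allowed outputs."""
    allowed: Set[str] = set()
    for word in ALLOWED_OUTPUTS:
        if word.startswith(prefix):
            allowed.add(word[len(prefix)] if len(word) > len(prefix) else EOS)
    return allowed


def mask_logits(logits: Dict[str, float], allowed: Set[str]) -> Dict[str, float]:
    """Add -inf to every disallowed token, i.e. give it probability zero."""
    return {tok: (lp if tok in allowed else -math.inf) for tok, lp in logits.items()}


def constrained_greedy_decode(model: Callable[[str], Dict[str, float]], max_tokens: int = 16) -> str:
    out = ""
    for _ in range(max_tokens):
        masked = mask_logits(model(out), allowed_next_tokens(out))
        token = max(masked, key=lambda t: masked[t])  # greedy: best surviving token
        if token == EOS:
            break
        out += token
    return out


def toy_model(prefix: str) -> Dict[str, float]:
    """Hypothetical model that really wants to say 'maybe' and mildly likes 'f'."""
    logits = {tok: 0.0 for tok in VOCAB}
    wanted = "maybe"
    if len(prefix) < len(wanted):
        logits[wanted[len(prefix)]] += 5.0
    logits["f"] += 1.0
    return logits


print(constrained_greedy_decode(toy_model))  # false
```

Without the mask, greedy decoding would spell `maybe`. With the mask, the model's mild preference for `f` decides between the two valid outputs.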

00:26:29.280 | Have you seen TypeChat? I don't think I have.

00:26:35.600 | Yeah, there's one question. So if you take the output from

00:26:43.520 | GPT and then pass it to Gorilla to get the structure, stacking models like that,

00:26:48.560 | does that work? Yeah. So the question was whether you could

00:26:56.160 | solve this problem by chaining models. I think going from the output

00:26:59.840 | of a language model to a structured output is an easier problem than the initial one, which is why

00:27:04.800 | people think retrying might work. In the Guardrails example,

00:27:10.640 | retrying is often handed off to a smaller language model: your mainline model is GPT-4 and your

00:27:16.800 | error handling is GPT-3.5. And I do believe there's a temptation here:

00:27:23.120 | if you know that a model is always and only going to be doing structured output, then you have a

00:27:30.480 | reason to have a specialized model for it. But chaining is definitely a good solution,

00:27:37.040 | and that's one reason why LangChain was popular. Yeah.

00:27:40.080 | You mentioned that OpenAI retired the log probs from the .

00:27:45.040 | Yeah.

00:27:49.360 | Yeah. So those are technically distinct things.

00:27:53.360 | I do believe they still give you the ability to bias tokens via the API.

00:27:56.880 | So it's not a perfect example of the utility of log probs, because

00:28:07.120 | I think you can still do this through the OpenAI API.

00:28:11.520 | Yeah? Do you need anything other than biasing for grammar-based sampling?

00:28:14.480 | No. Okay, I remember now. The real issue here

00:28:18.640 | is that grammar-based sampling works token by token, right? If you're doing it through the

00:28:23.440 | OpenAI API, you have to make a request and get the result back, and then you have a single

00:28:29.040 | token, and you have to apply a new bias every single time.

00:28:33.920 | So now every token costs a network call, rather than one call for a hundred tokens.

00:28:39.920 | That's one reason why this doesn't work well with the OpenAI API. Reason number two, longer term,

00:28:45.360 | is that you don't want to think only at the single-token level, where at each token

00:28:50.400 | you're marginally adjusting the probabilities. You'd really want to do

00:28:54.960 | something more like Monte Carlo tree search, where you generate many things that follow

00:28:59.600 | the grammar and then accept the best one at the end. That's something that's

00:29:07.520 | probably going to come first to open models and not to proprietary model services. So that's

00:29:14.800 | the better reason to connect grammar-based sampling and open models.

00:29:19.040 | Okay. So the problem with fine-tuning, and an annoying thing about prompting, is what happens if there is

00:29:32.960 | no shared format. For example, the Gorilla model is fine-tuned on a bunch of APIs from Torch Hub,

00:29:40.160 | TensorFlow Hub, and Hugging Face. So Gorilla is really good at using other machine learning models,

00:29:44.960 | but not arbitrary tools. At least that's true of this example; maybe they've tuned more than one.

00:29:50.800 | But this is a general problem: if you train a model to use a specific tool, then

00:29:58.320 | it's not going to be able to use just any tool. But if you train a model to use a very broad class

00:30:04.800 | of tools, using something closer to this grammar idea where there's a standard format

00:30:10.960 | for tools, then people can write an interface between that standard and

00:30:22.560 | the thing that they actually want to use. This has shown up in OpenAI's

00:30:27.840 | API as the use of JSON Schema for describing function calls. This allows them to train a model

00:30:37.040 | on fairly generic data that all fits the JSON Schema spec. And so the

00:30:45.280 | model has learned a lot about the JSON Schema spec and how to generate output that conforms to it.

00:30:49.920 | You can imagine using grammar-based sampling to enforce that. And this allows it to

00:30:55.200 | connect to many, many tools, because now all you need to do is write a tiny connector between the JSON

00:31:01.360 | format and the actual thing you want to use. And that's pretty easy. A big part

00:31:08.160 | of web development, from my understanding, is just passing JSON blobs back and forth until

00:31:13.200 | somebody gives you money. So this is a very good kind of schema.
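Here is a minimal sketch of the "tiny connector" idea: a tool described with JSON Schema, plus a dispatcher that turns the model's JSON into an ordinary Python call. The tool dict loosely follows the shape of OpenAI's function-calling format, with a name, a description, JSON Schema parameters, and arguments returned as a JSON string. Treat that layout as an assumption and check the current API reference. `get_current_weather` is a hypothetical stub, and the validation is a hand-rolled subset, not a full JSON Schema validator.

```python
import json

# Tool description in JSON Schema style. The function itself is a hypothetical stub.
WEATHER_TOOL = {
    "name": "get_current_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}

def get_current_weather(location: str, unit: str = "celsius") -> dict:
    # Stand-in for a real downstream service call.
    return {"location": location, "temperature": 21, "unit": unit}

REGISTRY = {"get_current_weather": (WEATHER_TOOL, get_current_weather)}

def dispatch(call: dict):
    """The connector: JSON emitted by the model becomes a normal function call."""
    spec, fn = REGISTRY[call["name"]]
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    params = spec["parameters"]
    missing = [k for k in params["required"] if k not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    unknown = [k for k in args if k not in params["properties"]]
    if unknown:
        raise ValueError(f"unexpected arguments: {unknown}")
    return fn(**args)

# Hand-written stand-in for a model's function call.
model_call = {"name": "get_current_weather", "arguments": '{"location": "Boston"}'}
print(dispatch(model_call))  # {'location': 'Boston', 'temperature': 21, 'unit': 'celsius'}
```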

00:31:22.960 | But one thing that people miss is that the tool doesn't actually have to be real. The key

00:31:28.080 | thing that happens here is the language model goes from outputting unstructured text to outputting JSON

00:31:32.800 | that fits a schema. And it just so happens that the primary use case OpenAI envisaged for that

00:31:38.480 | was passing it to a function call, into some downstream computer system. But

00:31:45.680 | really, it doesn't have to be a real function.

00:31:51.760 | You can tell it about a fake function, like "please pass a string describing whether the

00:31:58.080 | input was spam or not spam so that I can render an HTML element." And so the model is now

00:32:03.760 | trying to call that function, and in order to provide the arguments to it,

00:32:08.720 | it has to decide whether the input is spam or not spam. And that's maybe the thing you really care about.

00:32:13.280 | So you invent a little fictional function for it to call, you never actually call it, and then you just

00:32:17.520 | use its arguments for something else. This is a pattern; there's a library for it called Instructor

00:32:23.200 | from Jason Liu, who's going to be speaking later at the conference. Yeah.
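Here is a sketch of the fake-function trick applied to the spam example. It assumes an OpenAI-style function-call response with a `name` and a JSON-string `arguments` field. `render_spam_badge` is a hypothetical name that is never executed; the code only reads the arguments the model filled in. Instructor builds this pattern on Pydantic models. This stdlib version does not use Instructor's API and only illustrates the idea.

```python
import json
from dataclasses import dataclass

# A "fictional" function: described to the model but never actually called.
# The name and fields are hypothetical.
SPAM_TOOL = {
    "name": "render_spam_badge",
    "description": "Render an HTML badge saying whether the input message is spam.",
    "parameters": {
        "type": "object",
        "properties": {
            "label": {"type": "string", "enum": ["spam", "not_spam"]},
            "reason": {"type": "string"},
        },
        "required": ["label"],
    },
}

@dataclass
class SpamVerdict:
    label: str
    reason: str = ""

def parse_verdict(function_call: dict) -> SpamVerdict:
    """Turn the model's function-call arguments into a typed result."""
    if function_call["name"] != SPAM_TOOL["name"]:
        raise ValueError("model called an unexpected function")
    args = json.loads(function_call["arguments"])
    allowed = SPAM_TOOL["parameters"]["properties"]["label"]["enum"]
    if args.get("label") not in allowed:
        raise ValueError(f"label must be one of {allowed}")
    return SpamVerdict(label=args["label"], reason=args.get("reason", ""))

# Hand-written stand-in for the function call a model would return.
call = {"name": "render_spam_badge",
        "arguments": '{"label": "spam", "reason": "asks for gift card codes"}'}
verdict = parse_verdict(call)
print(verdict.label)  # spam
```

The "function" is only a vehicle for getting schema-shaped output. The classification in `verdict` is the result you actually wanted.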

00:32:27.920 | You have to fit the JSON schema, and there's a kind of meta-schema

00:32:42.160 | involved there. It has to be a function call, and the model has been trained on

00:32:46.720 | things like get_name or get_current_weather. So, yeah, I mean, you can hack around that,

00:32:54.560 | because functional programming has taught us that everything is just a function:

00:32:59.040 | a constant is just a function that always returns the same thing. So you

00:33:03.440 | can hack it in there. And Instructor has some fun functional-programming-style stuff

00:33:09.200 | built into it, like Maybes. And somebody also did DAG construction,

00:33:15.760 | where you give it a schema for a DAG constructor, and then it writes a DAG of function

00:33:21.920 | calls instead of just a single function call. So you can really go wild, which is very fun.
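Here is a sketch of the DAG-construction idea. The model emits a list of nodes. Each node names a tool, gives its literal arguments, and says which arguments come from other nodes' outputs. A small executor then runs the nodes in dependency order. The node schema (`id`, `tool`, `args`, `refs`) and the arithmetic tools are hypothetical, chosen only to keep the example self-contained. `graphlib` is in the Python standard library from 3.9 onward.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical tools the model-written plan may call.
TOOLS = {
    "fetch_number": lambda value: value,
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}

# Stand-in for model output that fits a "DAG constructor" schema.
plan_json = """
[
  {"id": "x", "tool": "fetch_number", "args": {"value": 3}, "refs": {}},
  {"id": "y", "tool": "fetch_number", "args": {"value": 4}, "refs": {}},
  {"id": "s", "tool": "add", "args": {}, "refs": {"a": "x", "b": "y"}},
  {"id": "p", "tool": "multiply", "args": {"b": 10}, "refs": {"a": "s"}}
]
"""

def run_plan(nodes: list) -> dict:
    by_id = {n["id"]: n for n in nodes}
    # Each node's predecessors are the nodes its refs point at.
    graph = {n["id"]: set(n["refs"].values()) for n in nodes}
    results = {}
    for node_id in TopologicalSorter(graph).static_order():
        node = by_id[node_id]
        kwargs = dict(node["args"])
        # Fill in arguments that come from earlier nodes' results.
        kwargs.update({name: results[src] for name, src in node["refs"].items()})
        results[node_id] = TOOLS[node["tool"]](**kwargs)
    return results

results = run_plan(json.loads(plan_json))
print(results["p"])  # (3 + 4) * 10 = 70
```

`TopologicalSorter` raises `graphlib.CycleError` if the model emits a cycle, which is a cheap sanity check on model-written plans.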

00:33:26.560 | And yeah.

00:33:31.760 | Most of the time when you generate something

00:33:35.920 | and want to extract something from the output to display to the end user,

00:33:41.040 | the question of latency comes in. If we use this, how do we handle streaming?

00:33:48.400 | Yeah, so that is a great question. The answer is that this basically breaks your ability to stream.

00:33:54.240 | This is maybe a bit more oriented to back-of-house stuff,

00:34:02.000 | where you're using language models to handle data rather than to directly

00:34:08.400 | interact with the user. I think if you set up a pipeline correctly, you can stream the outputs

00:34:16.800 | from one call into the inputs of the next one. And if you have the relevant information you need from

00:34:24.000 | the first function call, you can immediately kick off the next thing. You can

00:34:28.640 | write things more in a Unix-pipe style, and then you start to get back to

00:34:33.280 | streaming. But Unix pipes work because newlines act as a separator that lets

00:34:40.560 | you break work up, and there's not an obvious way to do that here. So the short answer,

00:34:47.040 | I guess, is that it's really hard to get back that kind of streaming when using these.

00:34:50.560 | Yeah. Let's see, how much more do I have? I'm going to push forward because I want to

00:34:58.160 | make sure to get to the last section, but I will be around to answer people's questions.

00:35:03.680 | Okay. So this conference is not called the NLP Engineer Summit. And we've been talking about

00:35:11.440 | extracting structured outputs from language, and about information retrieval,

00:35:16.240 | which is also natural language processing, and language user interfaces. That's not artificial

00:35:21.280 | intelligence. Where's the AI? The thing that really feels like artificial intelligence with

00:35:27.280 | language models is something like agents that have memory they keep over time.

00:35:34.880 | For example, Generative Agents; let's see, that was mostly Stanford people, if I

00:35:43.280 | remember correctly. The Generative Agents paper combined a stream of memories

00:35:50.720 | generated as these agents interacted in a video-game-like environment with some reasoning

00:35:56.800 | flows to create these tiny characters with personalities that developed over time

00:36:03.360 | through interaction with each other. And that is much closer to what people imagine

00:36:13.040 | when they hear "AI" than even a chatbot. And there's been a lot of advancement in using these things

00:36:23.280 | in simulated environments. That was a fully language-model-driven simulated environment with

00:36:28.000 | generative agents. There's also a ton of really cool stuff going on in the Minecraft world.

00:36:33.280 | For example, the Voyager agent writes JavaScript code to

00:36:43.440 | call a Minecraft API that lets it drive a

00:36:50.000 | little character in the Minecraft world, and it starts with basically nothing.

00:36:53.840 | Then it writes itself a bunch of little subroutines, like "mine wood log" or "stab zombie"

00:36:59.280 | or whatever. And it accumulates them over time and learns how to do new stuff. It comes up with

00:37:05.520 | its own curriculum for how to get better, and it was able to do extremely well at

00:37:12.160 | a notoriously hard RL task, obtaining diamonds, which was a grand challenge for the RL world

00:37:21.440 | only a couple of years ago. So these agents can accumulate information over time. They can accumulate skills over time. They can use tools. This is all very cool.
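Here is a rough sketch of a Voyager-style skill library. The agent stores each program it writes under a natural-language description, then retrieves the most relevant skills for a new task. As far as I know, Voyager retrieves skills by embedding similarity over their descriptions. This sketch swaps in bag-of-words cosine similarity to stay dependency-free. The skill names and the placeholder JavaScript bodies are hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field

@dataclass
class Skill:
    name: str
    description: str
    code: str  # program text the agent wrote for itself; not executed here

def _bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z]+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

@dataclass
class SkillLibrary:
    skills: list = field(default_factory=list)

    def add(self, skill: Skill) -> None:
        self.skills.append(skill)

    def retrieve(self, task: str, k: int = 2) -> list:
        """Return the k skills whose descriptions best match the task description."""
        query = _bag_of_words(task)
        ranked = sorted(self.skills,
                        key=lambda s: _cosine(query, _bag_of_words(s.description)),
                        reverse=True)
        return ranked[:k]

library = SkillLibrary()
library.add(Skill("mineWoodLog", "mine a wood log from a nearby tree",
                  "async function mineWoodLog(bot) { /* ... */ }"))
library.add(Skill("craftPlanks", "craft wooden planks from wood logs",
                  "async function craftPlanks(bot) { /* ... */ }"))
library.add(Skill("killZombie", "attack and kill a nearby zombie",
                  "async function killZombie(bot) { /* ... */ }"))

print([s.name for s in library.retrieve("get wood from a tree", k=1)])  # ['mineWoodLog']
```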

00:37:32.080 | They have a couple of problems, the biggest one being reliability. Structured outputs can help with that, but there's only limited work on agents, at least published research work, that has come out since function calling got really good in the OpenAI API.

00:37:55.360 | Also, there's a cacophony of different techniques out there: Voyager, ReAct (which is kind of an agent), Generative Agents. There's a really awesome paper from Tom Griffiths' group at Princeton, "Cognitive Architectures for Language Agents," that brings back a bunch of ideas from good old-fashioned AI in the eighties on production systems and cognitive architectures: really cool ideas that could never get past the demo

00:38:25.240 | stage. They were about how to implement, in software, what we know or believe about human and animal cognition, like procedural, semantic, and episodic memory. Those systems could do cool stuff, but the problem was always that they lacked general world knowledge and common sense. Language models are the reverse: they don't have memory, and they don't have this structured aspect to their cognition, but they do have

00:38:55.120 | that world knowledge and common sense. So this paper mushes those two things together, using a language model to observe the world, do cognition, and run decision procedures, which marries the best of both worlds. And it's actually a pretty effective way of breaking down the existing agent architectures

00:39:25.000 | in terms of their different choices about how to do long-term memory, how to do external grounding, and how they interact with the external world. The concept of internal actions comes from cognitive architectures: choosing to spend time reasoning, or choosing to update your long-term memory or your decision procedure. The paper also explicitly calls out a decision-making procedure. And it has an entire research

00:39:54.880 | agenda in it, on ways you could start filling out the cross product: a big array of "try this idea from language models with that idea from cognitive architectures." There are just a billion really cool ideas in there. So if you are interested in agents but have struggled to wrap your brain around all the different ways you could do things, and around

00:40:24.760 | how to make them a little bit more tame, I think the CoALA paper has some good pointers.
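To make the CoALA vocabulary concrete, here is a loose sketch. It is not the paper's code. It has a working memory plus three long-term memories (episodic, semantic, procedural). Its internal actions (retrieve, reason, learn) only touch memory, and its decision procedure ends in one external action. The `llm` callable is a hypothetical text-in, text-out stand-in, and the exact-match retrieval rule is a placeholder assumption.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Memory:
    working: dict = field(default_factory=dict)     # current observation and scratch notes
    episodic: list = field(default_factory=list)    # past (observation, action) experiences
    semantic: dict = field(default_factory=dict)    # facts about the world
    procedural: dict = field(default_factory=dict)  # skill name -> callable skill

@dataclass
class Agent:
    llm: Callable[[str], str]  # hypothetical text-in, text-out model
    memory: Memory = field(default_factory=Memory)

    # Internal actions: they read or write memory, not the outside world.
    def retrieve(self) -> None:
        obs = self.memory.working["obs"]
        self.memory.working["recalled"] = [e for e in self.memory.episodic if e["obs"] == obs]

    def reason(self) -> None:
        w = self.memory.working
        prompt = (f"Observation: {w['obs']}\nRecalled: {w['recalled']}\n"
                  f"Facts: {self.memory.semantic}\nNext:")
        w["thought"] = self.llm(prompt)

    def learn(self, action: str) -> None:
        self.memory.episodic.append({"obs": self.memory.working["obs"], "action": action})

    # Decision procedure: retrieve, reason, then commit to one external action.
    def step(self, observation: str) -> str:
        self.memory.working = {"obs": observation}
        self.retrieve()
        self.reason()
        thought = self.memory.working["thought"]
        skill = self.memory.procedural.get(thought)
        action = skill() if skill else thought  # the external action sent to the environment
        self.learn(action)
        return action

agent = Agent(llm=lambda prompt: "explore")
agent.memory.procedural["explore"] = lambda: "move north"
print(agent.step("wall to the east"))  # move north
```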

00:40:32.620 | Oh yeah. And lastly, on LLM patterns in general, the different ways people are building stuff with LLMs, Eugene Yan's blog has some of the best writing on this, both on patterns and anti-patterns.

00:40:48.100 | Okay. I want to leave some time for monitoring, evaluation, and observability. I know there are probably lots of interesting things people have to say about agents, but we have the rest of the conference to talk about that. So, the goal here is to talk about AI engineering.

00:41:07.960 | That last part was about the AI. What about the engineering? We want a process for creating these things and a process for improving them.

00:41:17.320 | Progress on this front has been pretty halting. The dominant ideology right now is that you should ship to learn rather than learn to ship. This is one of the big ideas in the Full Stack Deep Learning course that I've taught.

00:41:35.320 | It's something Andrej Karpathy has really hammered on: the idea of a data engine or data flywheel, where in order to do well, you need to go out and collect data from the world, find issues in your data, and use them to improve your model, in an unending cycle.

00:41:52.320 | Charity Majors from Honeycomb, who's big in the monitoring and observability world, has said that this is something she has come to like about ML in software.

00:42:02.320 | Normally, you start with tests and then you graduate to production when the tests pass, or at least that's what you tell people on the internet and your manager.

00:42:09.320 | But with ML, you can't even lie: you know you have to start with production and use it to find the issues that generate your tests.

00:42:20.320 | So it's the "Oops, All Regression Tests" version.

00:42:24.320 | What that means is that monitoring is critical from the very beginning.

00:42:32.320 | We monitor for user behavior.

00:42:34.320 | We monitor for performance and costs, and we monitor for bugs.

00:42:36.320 | Some of this is just regular old monitoring.

00:42:40.320 | It's bread-and-butter stuff. Similar to the way it works with existing software, monitoring users always reveals both misuse and product insights.

00:42:55.320 | One thing I found from running this Discord bot is that one of the most common kinds of input is meta questions.

00:43:01.320 | Like, "Are you getting feedback from these emojis?"

00:43:04.320 | "Who's a good bot?"

00:43:05.320 | That's maybe not a meta question.

00:43:06.320 | "Does your dataset include your own source code?"

00:43:08.320 | "What do you do?"

00:43:09.320 | These are very common inputs, and it hadn't occurred to me that they were important.

00:43:15.320 | So now there's special stuff in the prompt for handling that class of questions.

00:43:19.320 | So by monitoring how users use your system, you can get really great product insights.

00:43:26.320 | Yeah.

00:43:27.320 | Did you get these from, like, a manual check of your queries?

00:43:30.320 | Oh yeah.

00:43:31.320 | I logged them to Gantry, and then I looked at the ones that had thumbs up and thumbs down.

00:43:36.320 | And I also read all of them, because it was only a couple hundred rows.

00:43:40.320 | Oh man, my battery's gonna run out.

00:43:45.320 | All right, we've got to move fast.

00:43:47.320 | So monitoring performance can help us manage the constraints I talked about when we were thinking about all the different places

00:43:56.320 | our models might run.

00:43:58.320 | As always, you want to monitor things like latency quantiles: how long do requests take?

00:44:05.320 | Oh, wow.

00:44:06.320 | That's nice.

00:44:07.320 | Thank you.

00:44:08.320 | Huge.

00:44:09.320 | So with latency quantiles, you take all the requests

00:44:17.320 | and ask, for a given latency: what is the probability that a request took at least this long?

00:44:20.320 | People often think that if they get 90% of requests below some threshold, that's great.

00:44:25.320 | And the problem with thinking that way is that users don't just make one request.

00:44:30.320 | They make many requests in sequence.

00:44:31.320 | If each request has a 10% chance of being really slow, then by the time a user has made 30 requests,

00:44:35.320 | they have almost certainly hit a slow one.

00:44:38.320 | So you really need to care about those 99th-percentile latencies.

00:44:43.320 | The users making many requests are also often your most useful and engaged users.

00:44:46.320 | So watch those extreme quantiles.
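Here is the arithmetic behind watching the tail. Assume requests are independent. If a fraction q of requests are slow, then the chance that a session of n requests hits at least one slow request is 1 - (1 - q)^n. The sketch below uses hypothetical latency numbers and the nearest-rank definition of a percentile.

```python
import math

def percentile(samples: list, p: float):
    """Nearest-rank percentile: the smallest sample with at least p% of samples <= it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def chance_of_slow_request(tail_fraction: float, n_requests: int) -> float:
    """P(at least one of n independent requests lands in the slow tail)."""
    return 1 - (1 - tail_fraction) ** n_requests

# Hypothetical latencies in ms: 90 fast, 9 slow, and 1 very slow request.
latencies_ms = [100] * 90 + [2000] * 9 + [10000]
print(percentile(latencies_ms, 90), percentile(latencies_ms, 99))  # 100 2000
print(round(chance_of_slow_request(0.10, 30), 3))  # 0.958
print(round(chance_of_slow_request(0.01, 30), 3))  # 0.26
```

With a 10% tail, 30 requests hit a slow one about 96% of the time. Even with a 1% tail, the figure is about 26%. That is why p99 matters more than p90 for heavy users.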

00:44:49.320 | And obviously throughput is a distinct thing to also monitor for the quality of the system.

00:44:58.320 | You want to marry that with things like the profiles and traces I talked about before: spot-check randomly subsampled ones

00:45:04.320 | so you can actually debug the throughput issues.

00:45:09.320 | That's fairly general stuff.

00:45:10.320 | If you're using an inference-as-a-service provider, you're going to want to monitor API request rates and errors, and monitor costs.

00:45:16.320 | If you're serving inference yourself, you have a lot more to monitor,

00:45:19.320 | like compute utilization.

00:45:23.320 | Yeah, I guess I already talked about this.

00:45:26.320 | Yeah, yeah.

00:45:28.320 | Well, it's even harder: maintaining throughput when you're doing the inference yourself is much more your problem,

00:45:34.320 | and it's much more AI/ML-specific stuff.
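For the inference-as-a-service case, here is a sketch of the minimum worth recording per call: request count, errors, latency, and token usage turned into cost. The prices are hypothetical placeholders, so check your provider's pricing. `fake_provider` stands in for whatever SDK call you use and is assumed to return the text plus prompt and completion token counts.

```python
import time
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens.
PRICE_PER_1K = {"prompt": 0.0015, "completion": 0.002}

@dataclass
class CallStats:
    calls: int = 0
    errors: int = 0
    prompt_tokens: int = 0
    completion_tokens: int = 0
    latencies: list = field(default_factory=list)

    def error_rate(self) -> float:
        return self.errors / self.calls if self.calls else 0.0

    def cost_usd(self) -> float:
        return (self.prompt_tokens * PRICE_PER_1K["prompt"]
                + self.completion_tokens * PRICE_PER_1K["completion"]) / 1000

def monitored(call_fn, stats: CallStats):
    """Wrap a provider call that returns (text, prompt_tokens, completion_tokens)."""
    def wrapper(*args, **kwargs):
        stats.calls += 1
        start = time.perf_counter()
        try:
            text, p_tok, c_tok = call_fn(*args, **kwargs)
        except Exception:
            stats.errors += 1  # count rate-limit and other API errors, then re-raise
            raise
        finally:
            stats.latencies.append(time.perf_counter() - start)
        stats.prompt_tokens += p_tok
        stats.completion_tokens += c_tok
        return text
    return wrapper

def fake_provider(prompt: str):
    # Hypothetical stand-in for a provider SDK call.
    if prompt == "boom":
        raise RuntimeError("429: rate limited")
    return "ok", 1000, 500

stats = CallStats()
call = monitored(fake_provider, stats)
call("hello")
call("world")
try:
    call("boom")
except RuntimeError:
    pass
print(stats.calls, stats.errors, round(stats.cost_usd(), 4))  # 3 1 0.005
```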

00:45:38.320 | Yeah.

00:45:40.320 | Okay.

00:45:41.320 | Monitoring for bugs is another can of worms.

00:45:43.320 | We'll talk about that in a second.

00:45:45.320 | Generally, this is a very fast-growing space.

00:45:48.320 | There are generic monitoring and observability solutions for all kinds of complex apps and web apps: Datadog, Sentry, New Relic, Honeycomb.

00:46:00.320 | You can adapt those,

00:46:04.320 | and that might be the thing that wins.

00:46:06.320 | You can of course just roll your own with OpenTelemetry-compliant tooling.

00:46:15.320 | And you could use the existing MLOps tooling.

00:46:18.320 | There's a lot of stuff that has been built for monitoring and observability of general ML applications,

00:46:23.320 | including Weights & Biases,

00:46:24.320 | where I used to work.

00:46:25.320 | Fiddler, Arize, and Gantry are the three larger startups in that space,

00:46:31.320 | with more of a focus on monitoring systems in production and less on the MLOps side, like serving and managing training, the way Weights & Biases does.

00:46:44.320 | There's also, because generation times are now six months or less, a new generation of tooling for LLMOps, including LangSmith from LangChain and Langfuse, which was in Y Combinator's recent batch.

00:46:58.320 | It's very unclear which of these is going to be the best solution,

00:47:04.320 | so I think it's dealer's choice; try them all out.

00:47:06.320 | I like tools with as much ability as possible to make crazy queries of unstructured data.

00:47:14.320 | That's something I really like about the Weights & Biases production monitoring offering.

00:47:19.320 | Gantry has some similar stuff.

00:47:22.320 | I've tried less of that with the other tools.

00:47:25.320 | If you're doing it with Datadog, Sentry, et cetera, you're probably going to need to roll some of that stuff yourself.

00:47:32.320 | But maybe that's fine.

00:47:34.320 | Jupyter notebooks are fun.
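If you roll some of this yourself, the core can be as small as logging each interaction as one structured record and then querying the records ad hoc, for example from a notebook. This is a stdlib-only sketch with several assumptions. The record fields are hypothetical, and `io.StringIO` stands in for real storage. It is not OpenTelemetry, although an OpenTelemetry setup would record similar data as spans with attributes.

```python
import io
import json
import time
import uuid
from typing import Callable, Iterator, Optional

def log_interaction(sink, *, prompt: str, response: str, model: str,
                    latency_s: float, feedback: Optional[str] = None) -> str:
    """Append one LLM interaction to `sink` as a JSON line and return its trace id."""
    record = {
        "trace_id": uuid.uuid4().hex,
        "ts": time.time(),
        "model": model,
        "prompt": prompt,
        "response": response,
        "latency_s": latency_s,
        "feedback": feedback,  # e.g. "up", "down", or None
    }
    sink.write(json.dumps(record) + "\n")
    return record["trace_id"]

def query(log_text: str, predicate: Callable[[dict], bool]) -> Iterator[dict]:
    """Ad hoc query: yield every logged record for which `predicate` is true."""
    for line in log_text.splitlines():
        record = json.loads(line)
        if predicate(record):
            yield record

log = io.StringIO()  # in practice a file, a bucket, or a warehouse table
log_interaction(log, prompt="Who's a good bot?", response="Me!",
                model="demo-model", latency_s=0.8, feedback="up")
log_interaction(log, prompt="Does your dataset include your own source code?",
                response="I'm not sure.", model="demo-model", latency_s=1.9, feedback="down")
log_interaction(log, prompt="What is RAG?", response="Retrieval-augmented generation...",
                model="demo-model", latency_s=1.1)

# "Which thumbs-down answers were questions about the bot itself?"
hits = list(query(log.getvalue(), lambda r: r["feedback"] == "down" and "your" in r["prompt"]))
print(len(hits))  # 1
```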

00:47:35.320 | I was going to show the Langfuse monitoring interface, but in the interest of time, I'm going to go past that.

00:47:43.320 | They have an awesome demo where you can interact with their docs chatbot and it shows up in their monitoring interface.

00:47:49.320 | So they have a live demo of their monitoring tool where you can actually use it to monitor an app that you can also use.

00:47:56.320 | It was really fun to actually try out the tool that way.

00:48:02.320 | I recommend you try it out.

00:48:05.320 | But just monitoring, just getting hold of information, is not enough.

00:48:10.320 | This is something that's known from the distributed-systems monitoring world.

00:48:15.320 | What you really want is observability.

00:48:18.320 | What both Charity and Andrej were talking about is how you improve a system based on what you observe.

00:48:24.320 | It's not enough to just throw something out there and see the mistakes.

00:48:31.320 | You want to fix the mistakes.

00:48:33.320 | Honeycomb and Charity are big on observability as an idea from control theory,

00:48:44.320 | from old-school control theory and systems theory.

00:48:48.320 | Observability is whether you can figure out what is going on inside a system just by observing it from the outside.

00:48:57.320 | So: can you actually debug this software just by looking at your logs,

00:49:01.320 | without having to go into a live debugger inside the system?

00:49:06.320 | When live debugging does not work, and when systems have outpaced our ability to predict what's going to break, this is the only solution.

00:49:19.320 | And AI systems are exactly where we can't predict what's going to break.

00:49:26.320 | You can't drop into a debugger 13 layers deep in GPT-3 or GPT-4 and debug its inference.

00:49:34.320 | You have no choice but to monitor things well enough that you can fix the issues.

00:49:39.320 | The blocker here is that determining whether the model is right or wrong is itself hard, which makes figuring out how to fix it also hard,

00:49:51.320 | because you don't necessarily know whether it's messing up, and you don't know whether you've fixed it.

00:49:56.320 | So we're in a tough phase for this problem right now.

00:50:00.320 | There'll be lots of discussion of evaluation at this conference, which is very exciting.

00:50:04.320 | Lots of people are complaining about how difficult evaluation is: Anthropic, and Arvind Narayanan and Sayash Kapoor, who write the AI Snake Oil Substack, which is really high-quality stuff.

00:50:16.320 | And OpenAI open-sourced their eval framework, in part because they can't really evaluate their systems

00:50:26.320 | themselves.

00:50:27.320 | It's like, that's how hard this problem is.

00:50:29.320 | It's also what we saw with "The False Promise of Imitating Proprietary LLMs."

00:50:33.320 | A large community of people were convinced that models were doing better than they actually were.

00:50:38.320 | So one of the key solutions is to spend time looking at your data.

00:50:47.320 | Stella Biderman from EleutherAI has talked about this.

00:50:49.320 | Jason Wei has talked about how critical this is.

00:50:52.320 | Jason is at OpenAI now, and he's talked about spending a ton of time getting very good at evals:

00:50:59.320 | building internal tooling for evals and spending time understanding the evaluations.

00:51:05.320 | And somebody on Hacker News said it's a major differentiator.

00:51:08.320 | So that's definitely true; the orange website never lies.

00:51:13.320 | Evaluation is particularly hard, and all these complaints about evaluation apply, when you're dealing with open-ended generations from a language model:

00:51:23.320 | no structure to the outputs,

00:51:24.320 | no real structure to the user inputs,

00:51:27.320 | and limited data sources.

00:51:31.320 | But there's a nice flowchart from the Full Stack LLM Bootcamp, made by my fellow instructor Josh Tobin, that helps you avoid falling into that pit of evaluations.

00:51:43.320 | If you have a correct answer, you can stick with existing ML metrics and don't have to worry about the difficulty of evaluating open-ended generations.

00:51:54.320 | If you have a reference answer, you can check for reference matching, which is looser than a literal correct answer. A, B, C, or D in multiple choice is a correct answer;

00:52:04.320 | a reference answer is more like a short generation, like a short-answer question on a test.

00:52:10.320 | If you have a previous answer from your system, you can at least see whether your system is getting better by comparing the two.

00:52:16.320 | That "which is better" comparison can be done by a human or by a language model.

00:52:21.320 | And if you have human feedback, you can check whether, between the input and the output, the feedback was incorporated by the language model.

00:52:29.320 | Say a human said, "I didn't like that."

00:52:31.320 | Did the language model get better?

00:52:33.320 | It's only if you don't have any of those things that you're out in the unstructured world.
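Here is one way to read the flowchart as code: try the most structured evaluation that the available data supports, and fall back one step at a time. This is an interpretation of the flow as described here, not the bootcamp's own material. `judge` is a hypothetical yes/no callable, which could be a human or an LLM prompt. difflib's character-similarity ratio is a crude stand-in for a real reference-matching metric.

```python
import difflib
from typing import Callable, Optional, Tuple

Judge = Callable[[str], bool]  # hypothetical yes/no judge: a human or an LLM prompt

def evaluate(output: str, *, correct_answer: Optional[str] = None,
             reference_answer: Optional[str] = None,
             previous_answer: Optional[str] = None,
             feedback: Optional[str] = None,
             judge: Optional[Judge] = None) -> Tuple[str, Optional[float]]:
    """Pick the most structured evaluation the available data allows."""
    if correct_answer is not None:
        # Correct answer: plain ML metrics, e.g. exact-match accuracy.
        return "exact_match", float(output.strip().lower() == correct_answer.strip().lower())
    if reference_answer is not None:
        # Reference answer: a looser match. Character similarity is a crude
        # stand-in for an LLM- or embedding-based comparison.
        ratio = difflib.SequenceMatcher(None, output.lower(), reference_answer.lower()).ratio()
        return "reference_match", ratio
    if previous_answer is not None and judge is not None:
        # Previous answer: a "which is better?" comparison.
        better = judge(f"Is answer A better than answer B?\nA: {output}\nB: {previous_answer}")
        return "pairwise_vs_previous", float(better)
    if feedback is not None and judge is not None:
        # Human feedback: was it incorporated?
        used = judge(f"Does this response address the feedback '{feedback}'?\n{output}")
        return "feedback_incorporated", float(used)
    # None of the above: out in the unstructured world.
    return "open_ended", None

print(evaluate("B", correct_answer="b"))  # ('exact_match', 1.0)
```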

00:52:38.320 | The people at Elicit, who have worked on extracting information from scientific papers,

00:52:47.320 | have a very principled approach, iterated decomposition, where you start with a task that runs end to end.

00:52:53.320 | Then, when you notice failures, you look at them and see how you could have broken the task into multiple pieces

00:53:01.320 | in such a way that the failure would arise in a simpler subtask, and then you optimize that subtask.

00:53:08.320 | You do run into the latency problem mentioned before

00:53:11.320 | if you're chaining calls, and it's not always easy to decompose things; for example, decomposing the process of responding to a user in a chatbot

00:53:20.320 | is kind of challenging.

00:53:21.320 | But when you can do this, it's another great way to get yourself out of the hole of needing to evaluate open-ended generations.
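Here is a toy illustration of why decomposition helps evaluation. Once a task is split into steps, with gold labels for the intermediate output, each step can be scored on its own, and the failure shows up in the step that caused it. The task (pulling a sample size out of paper text), the two-step split, and the regex-based steps are all hypothetical stand-ins for LLM calls. This is not Elicit's code.

```python
import re
from typing import Optional

def find_sentence(paper: str) -> str:
    """Subtask 1: pick the sentence that mentions participants."""
    for sentence in re.split(r"(?<=[.!?])\s+", paper):
        if "participants" in sentence:
            return sentence
    return ""

def extract_number(sentence: str) -> Optional[int]:
    """Subtask 2: naively take the first number in the sentence."""
    match = re.search(r"\d+", sentence)
    return int(match.group()) if match else None

def pipeline(paper: str) -> Optional[int]:
    return extract_number(find_sentence(paper))

# Hypothetical gold labels for the final answer AND the intermediate step,
# so each subtask can be scored on its own.
CASES = [
    {"paper": "We recruited 120 participants. Results in 2021 were strong.",
     "sentence": "We recruited 120 participants.", "n": 120},
    {"paper": "In 2019, 45 participants enrolled.",
     "sentence": "In 2019, 45 participants enrolled.", "n": 45},
]

def stage_accuracy(cases: list) -> dict:
    def acc(hits):
        return sum(hits) / len(cases)
    return {
        "find_sentence": acc(find_sentence(c["paper"]) == c["sentence"] for c in cases),
        "extract_number": acc(extract_number(c["sentence"]) == c["n"] for c in cases),
        "end_to_end": acc(pipeline(c["paper"]) == c["n"] for c in cases),
    }

print(stage_accuracy(CASES))
# {'find_sentence': 1.0, 'extract_number': 0.5, 'end_to_end': 0.5}
```

End-to-end accuracy alone only says that something is wrong. The per-stage scores point at `extract_number`, which grabs the year instead of the count.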

00:53:30.320 | But if you're stuck evaluating natural text, there are a couple of basic approaches.

00:53:35.320 | You can just keep a few trusty test cases at hand.

00:53:39.320 | If it does well on those couple of test cases: looks good to me, let's ship it.

00:53:45.320 | It's unclear what to do when it fails; just hit the language model with a wrench.

00:53:49.320 | But this is what grows into that data engine.

00:53:54.320 | You start with something like this and then you start adding stuff from your production observations into it.

00:53:59.320 | And then you put it in a GitHub Action.

00:54:01.320 | And now that's basically testing, right?

00:54:04.320 | That's certified software.
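The "few trusty test cases, then grow them from production" loop can be sketched as a tiny regression suite that a CI job could run. The cases, the substring check, and `stub_model` are hypothetical. Real checks would likely be richer than substring matching.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Case:
    prompt: str
    must_contain: str  # deliberately loose check; swap in whatever fits your task
    source: str = "handwritten"

# A few trusty, hand-written cases to start with (hypothetical examples).
SUITE: List[Case] = [
    Case("What is Full Stack Deep Learning?", "course"),
    Case("Does your dataset include your own source code?", "source code"),
]

def add_production_case(suite: List[Case], prompt: str, must_contain: str) -> None:
    """Grow the suite with an issue observed in production (the data engine)."""
    suite.append(Case(prompt, must_contain, source="production"))

def run_suite(model: Callable[[str], str], suite: List[Case]) -> List[Case]:
    """Return the failing cases; an empty list means the suite passes."""
    return [c for c in suite if c.must_contain.lower() not in model(c.prompt).lower()]

def stub_model(prompt: str) -> str:
    # Hypothetical stand-in for the real LLM call.
    if "Full Stack" in prompt:
        return "It's a course about building ML-powered products."
    if "source code" in prompt:
        return "I don't have access to my own source code."
    return "Sorry, I'm not sure."

print(len(run_suite(stub_model, SUITE)))  # 0
```

In CI, for example a GitHub Actions job, you would fail the build whenever `run_suite` returns a non-empty list. Every production failure you triage becomes a new `Case`.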

00:54:07.320 | You can try to get user feedback, and you want to do it as naturally as possible.

00:54:13.320 | You really want revealed preferences from user behavior.

00:54:20.320 | The image generation world is way ahead of the language modeling world

00:54:24.320 | on this, I think; look at Midjourney, for example. That's also what Honeycomb did with their query builder.

00:54:30.320 | They attached it to downstream business objectives.

00:54:34.320 | Wow.

00:54:35.320 | What a way to build a software system.

00:54:36.320 | That's the right way to do it.

00:54:38.320 | So you connect a chain of metrics from the actual system that you're improving

00:54:42.320 | to the actual downstream organizational goals, through things like revealed preferences of users or

00:54:51.320 | general user behavior. That's much better than demanding that users fill out a form.
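Revealed preferences can be mined from ordinary logs. When a user is shown several outputs and acts on one (upscales it, copies it, keeps it), that choice implies the chosen output beat the others. This sketch assumes a hypothetical event format with `shown` and `chosen` fields. It is not how Midjourney or Honeycomb actually implement this.

```python
from collections import Counter

def preference_pairs(events: list) -> list:
    """Turn 'user picked one of several outputs' logs into (winner, loser) pairs."""
    pairs = []
    for event in events:
        for other in event["shown"]:
            if other != event["chosen"]:
                pairs.append((event["chosen"], other))
    return pairs

def win_rates(pairs: list) -> dict:
    """Fraction of head-to-head comparisons each variant won."""
    wins, games = Counter(), Counter()
    for winner, loser in pairs:
        wins[winner] += 1
        games[winner] += 1
        games[loser] += 1
    return {variant: wins[variant] / games[variant] for variant in games}

# Hypothetical logs: each event shows outputs from two prompt versions,
# and "chosen" is the one the user acted on.
events = [
    {"shown": ["prompt_v1", "prompt_v2"], "chosen": "prompt_v2"},
    {"shown": ["prompt_v1", "prompt_v2"], "chosen": "prompt_v2"},
    {"shown": ["prompt_v1", "prompt_v2"], "chosen": "prompt_v1"},
]
rates = win_rates(preference_pairs(events))
print({k: round(v, 2) for k, v in sorted(rates.items())})
# {'prompt_v1': 0.33, 'prompt_v2': 0.67}
```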

00:54:55.320 | You could also pay an annotation team to do that work of giving you feedback on your system.

00:55:00.320 | This is what OpenAI does to improve their models.

00:55:04.320 | But as Karina alluded to, it's actually much more effective to use language models in that role.

00:55:10.320 | Language models are maybe not as smart as all humans,

00:55:14.320 | but they tend to outperform crowd workers on a large number of very textual tasks.

00:55:20.320 | So for the task of annotating and improving your data,

00:55:26.320 | if you're at the point where you're starting to think about crowd workers, you'll find lower cost for equal performance

00:55:31.320 | with language models: GPT-3.5 Turbo is roughly a median crowd worker,

00:55:35.320 | and GPT-4 is roughly a 90th-percentile crowd worker.

00:55:37.320 | A hybrid approach has also been discussed:

00:55:40.320 | a smaller number of crowd workers managing some language models.
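Here is one possible shape for the hybrid approach. Several language-model labelers vote. Unanimous labels are accepted, and only disagreements are escalated to a human. The unanimity rule and the keyword-based labelers are hypothetical stand-ins, chosen to keep the sketch self-contained.

```python
from typing import Callable, Dict, List, Tuple

Labeler = Callable[[str], str]

def hybrid_annotate(items: List[str], llm_labelers: List[Labeler],
                    human_label: Labeler) -> Tuple[Dict[str, str], List[str]]:
    """LLM labelers vote; unanimous items are accepted, disagreements go to a human."""
    labels: Dict[str, str] = {}
    escalated: List[str] = []
    for item in items:
        votes = [label(item) for label in llm_labelers]
        if len(set(votes)) == 1:
            labels[item] = votes[0]
        else:
            labels[item] = human_label(item)
            escalated.append(item)
    return labels, escalated

# Hypothetical labelers standing in for, say, a cheaper and a stronger model.
def cheap_model(text: str) -> str:
    return "spam" if "free" in text else "ham"

def strong_model(text: str) -> str:
    return "spam" if ("free" in text or "winner" in text) else "ham"

def human(text: str) -> str:
    return "spam"  # the crowd worker who resolves disagreements

labels, escalated = hybrid_annotate(
    ["free money", "lunch at noon?", "you are a winner"],
    [cheap_model, strong_model], human)
print(escalated)  # ['you are a winner']
```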

00:55:46.320 | All right, so in the end there's not that much to say about that aspect of engineering these systems.

00:55:55.320 | We don't know what the user interfaces and the user experiences are going to look like.

00:55:58.320 | We don't know a lot about how to engineer these things to be correct.

00:56:03.320 | I guess the exciting thing about that is that the people here in this room, on the stream, and at the summit are here to fill in all of the steps that lead from the circle to the fully drawn owl,

00:56:17.320 | by figuring it out: by trying things and sharing what works, like people will do at this conference.

00:56:25.320 | So that's why I'm excited to be here, and I hope you are as well.

00:56:28.320 | All right.

00:56:29.320 | Thank you everyone.
