Eugene Yan on RecSys with Generative Retrieval (RQ-VAE)

Chapters

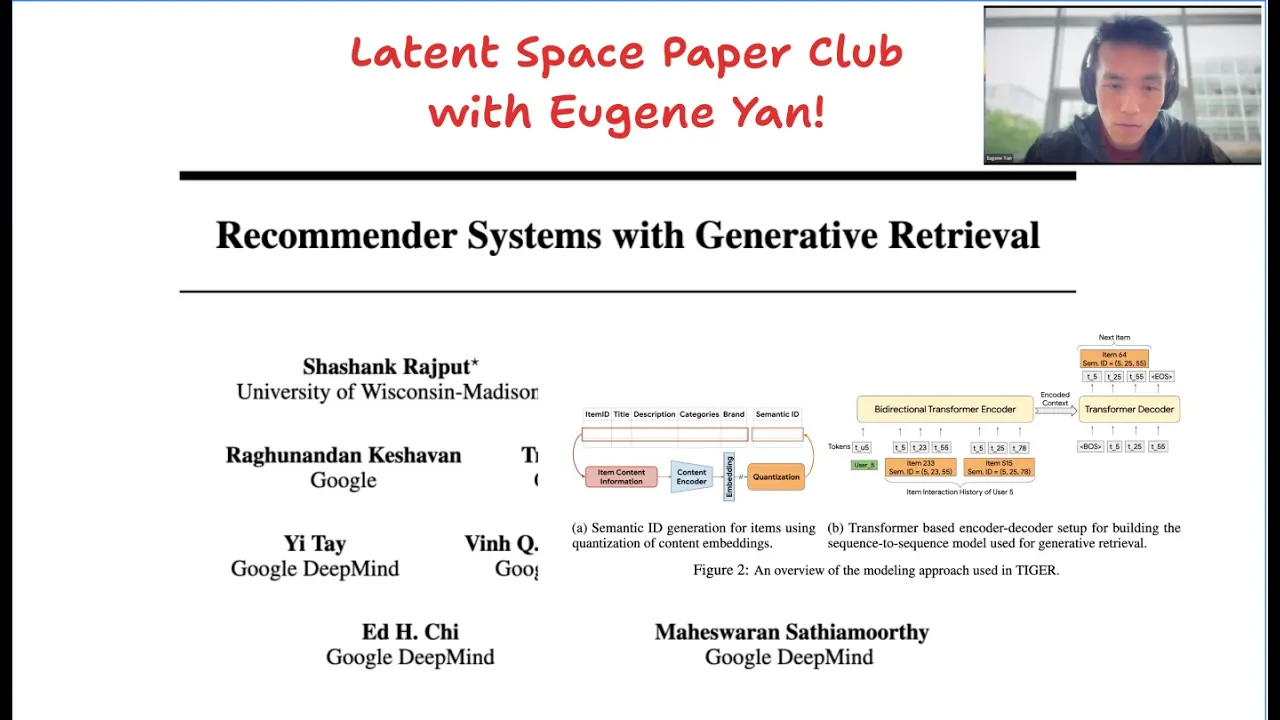

0:0 Introduction3:52 Recommendation Systems with Generative Retrieval

8:48 Traditional vs. Generative Retrieval

10:49 Semantic IDs and Model Uniqueness

14:15 Semantic ID Generation and RQ-VAE

17:37 Residual Quantization (RQ)

23:26 RQ-VAE Loss Function

34:55 Codebook Initialization and Challenges

36:32 RQ-VAE Training Metrics and Observations

48:19 Generative Recommendations with SASRec

55:25 Fine-tuning a Language Model for Generative Retrieval

84:8 Model Capabilities and Future Directions

00:00:00.000 | i guess not let me also share this on the discord how do we invite folks there's a lot of people

00:00:09.340 | registered for this one but i can share the room then let me let me do it got it okay yeah

00:00:16.180 | you can have a question you mentioned in the description of the luma please read the paper

00:00:38.240 | if you have time try to grok section 3.1 with your favorite ai teacher who's your favorite ai teacher

00:00:44.480 | oh clod opus opus interesting i never use clod for papers oh really i have it because i just have

00:00:54.700 | a max description uh subscription that's why i hate i don't know i never i think i should

00:01:01.900 | try to be described as well but i use and i just used to be found okay i think we will

00:01:17.720 | just wait one more minute and then a few minutes actually like i feel like we have a lot of people

00:01:23.680 | registered yeah unless you have a long session let me just kick off i i do have a lot okay okay i want to share

00:01:33.900 | um but we can wait one more minute one or two more minutes i'm going to start with a demo and we'll go

00:01:39.540 | into the paper i'm going quote and then at the end we have live demo where i'll take in requests from

00:01:46.240 | folks to um just you know just provide the input prompt and we'll see what happens this model is uh

00:01:55.680 | currently only trained on one epoch so we'll see wow uh michael is new to paper club so basically every

00:02:04.320 | wednesday we do a different paper um we love volunteers if you want to volunteer to share something

00:02:10.160 | um otherwise it's mostly me or eugene or someone um this paper club is kind of whatever's latest or new

00:02:17.600 | whatever gets shared uh someone will cover it and then some people are experts in domains like eugene

00:02:23.120 | is the god of rexus so he's sharing the latest state of the art of rexus stuff um this week yeah that's

00:02:30.480 | that's what he's going to talk about next week we have uh the paper how much do language models

00:02:36.000 | memorize um the week after someone has added vision towards gpt oss so i think we do that one it's

00:02:46.400 | like a rl vr uh there's also a news research technical report we can get one of them to present

00:02:53.680 | but honestly it wasn't great so i think we skip it but yeah if anyone also wants to volunteer papers

00:03:00.960 | or you know if you want someone else to share them we have active discord just

00:03:05.840 | share and then someone will do rj presents a lot too yep okay so i'm gonna just get started um i won't

00:03:16.000 | be able to look at the chat oh so let me know if the the font is too small uh and because i'm just

00:03:24.240 | doing this on my own laptop so let me know if the font is too small okay cool so what do i want to talk

00:03:31.200 | about today wait am i sharing the wrong screen wrong you're sharing your whole things okay uh i need to

00:03:38.960 | move zoom to this desktop paint perfect and now i want to share this test can you see this

00:03:49.520 | perfect okay so what is this recommended systems with generator retrieval let's start from the back what is it

00:03:59.200 | that we want to achieve here's an example of what we want to achieve whereby given this this is literally

00:04:07.360 | the input string recommend playstation products similar to this thing so what is this thing this

00:04:13.040 | this is the semantic id you see that it's in harmony format we have a semantic id start

00:04:18.000 | we have a semantic id end and then we have four levels of semantic ids one two three four how to read

00:04:26.720 | this it's hard but we have a mapping table so over here recommend playstation products similar to this

00:04:33.040 | semantic id i have no idea what this semantic id is but i have a mapping table so you can see this semantic id is

00:04:39.680 | actually logitech gaming keyboard so when i ask for playstation products it's able to say playstation

00:04:48.480 | uh gaming headset for playstation this is uh i don't think this is all this for pc so that's a little bit

00:04:55.440 | off there and this is for pc ps5 ps4 so not that's not too bad now let's try another something else a bit a

00:05:04.080 | little bit different recommend zelda products similar to this thing which is new super mario bros

00:05:11.520 | is able to respond zelda okay this is off teenage mutant ninja turtles and nintendo wii so i guess

00:05:18.240 | maybe we don't have a lot of zelda products in the catalog let's try something a little bit different

00:05:22.880 | super mario bros meets assassin's creed what happens uh we get bad man quick question what is uh what is

00:05:31.040 | this sid to what does semantic id mean what are these tokens like at a high level so semantic ids are a

00:05:38.800 | representation of the item um so here's how we try to represent the item instead of a random hash which

00:05:46.560 | is what the paper says uh we will just represent an item so imagine we try to merge something cute

00:05:52.240 | super mario bros with something about assassination well we get batman so this is an early example

00:06:02.160 | maybe let's just try you get lego batman the lego part makes it cute yeah it makes sense right cute

00:06:09.760 | let's try residence evil with donkey kong okay maybe this is a little bit off um but i don't know dog

00:06:16.720 | let's just say dog i have no idea whether this is in the training data how this would look dog and super

00:06:22.240 | mario well it's not so you can see it defaults to zelda because there's a very strong relationship

00:06:27.120 | between nintendo super mario and zelda actually sega i actually wonder if this is in the data set

00:06:33.200 | luigi dragon ball brutal legend so essentially we have recommendations we can learn the recommendations

00:06:40.480 | but now that we can use natural language to shape the recommendations by cross learning recommendation

00:06:46.480 | data with the natural language data so now that is the quick demo hopefully you are interested enough

00:06:53.760 | to pay attention to this to this um this is i'm gonna go fairly fast and i'm going to go through

00:07:01.440 | a lot we're going to go through paper we're going to go through my notes and we are going to go through

00:07:06.320 | um code so i hope you give me your attention oh vivo has a hand raised and then a quick question on

00:07:13.120 | that example so right now you're crossing stuff that should be in training data right so stuff like

00:07:18.560 | uh zelda batman assassin's creed resident evil uh this should also theoretically just work with natural

00:07:25.680 | language right so if i was to ask something like single player and something like a single player

00:07:31.280 | game or like a multiplayer game like zelda right because zelda is multiplayer or you know yeah multiplayer

00:07:38.160 | game like zelda and i would hope i get something that's like a nintendo game so like maybe like um

00:07:43.920 | mario kart is similar to zelda but multiplayer right let's try this this is the amazing spider-man

00:07:49.920 | we know this because there's a the output is the past of the this is this is the actual output which

00:07:55.440 | is in sid this this log over here is the past so this is recommend single player game similar to spider-man

00:08:06.400 | okay batman batman batman multiplayer i i don't know how this will work uh and this is a very janky

00:08:14.640 | young model well it's still batman well maybe doesn't doesn't work very well some some batman

00:08:20.160 | games are multiplayer but i mean i think the point is yours is like a toy example but yeah i just wanted

00:08:24.960 | to clarify theoretically that should work right yep theoretically it should work if we have enough training

00:08:30.240 | data that we augment well enough now i haven't done a lot of data annotation here uh but now let's jump

00:08:35.920 | into the paper so this is the paper um the blue highlights essentially mean implementation details

00:08:42.320 | the yellow highlights are interesting things green is good red is bad so most recommended systems they do

00:08:50.240 | retrieval by doing this thing which is uh we embed queries and items in the same vector space and then we

00:08:58.640 | do approximate nearest neighbors so essentially all of this is always standard approximate embed everything

00:09:03.920 | and do approximate nearest neighbors but what this purpose uh suggesting is that now we can actually

00:09:11.360 | just decode we can get a model to return the most similar products so now this is of course very expensive

00:09:21.120 | compared to um just approximate nearest neighbors which is extremely cheap but this gives us a few special

00:09:28.160 | properties whereby now we can tweak how we want the output to look like as you can see with just

00:09:35.280 | natural text and we essentially it allows us to kind of filter uh our recommendations and shape our

00:09:41.440 | recommendations so now imagine that uh if i were to chat imagine a chatbot capability right um like this

00:09:49.360 | okay let's say we have zelda and assuming it's better trained super mario bros and i want more zelda products

00:09:56.240 | similar to super mario bros uh we get one of these well only one but we'll see essentially just more

00:10:03.360 | training data needs just needs more training data and augmentation so over here now we get three zelda

00:10:09.200 | products of course the temperature here is fairly high uh essentially i'm just trying to test how this

00:10:13.440 | looks like and you can see by by suggesting the model understands the word zelda the model understands this

00:10:20.000 | product and the model understands products similar to this product but in the form of zelda

00:10:27.120 | uh yeah is this meant to be like used in real-time low latency small model situation or like

00:10:40.960 | honestly from an external perspective it just doesn't seem that like it doesn't seem super clear what's

00:10:47.920 | special about this right because if you just ask any model like yes gemini or chat gpt or anything you

00:10:53.680 | know uh recommend me multiple multiplayer games similar to zelda you should know this right yeah the

00:11:01.520 | what i think is unique about this model so imagine if you just ask a regular lm recommend me all to

00:11:08.480 | play a games unique to zelda it will recommend you games based on its memory and based on what is learned

00:11:12.800 | on the internet so what is unique here is i'm trying to join world knowledge learn on the internet and

00:11:22.400 | customer behavioral knowledge which is proprietary and seldom available on the internet so i'm trying to

00:11:30.000 | merge those together so usually when you use world knowledge there's a very strong popularity bias right

00:11:37.520 | but when you try to merge it with actual behavioral knowledge that's progressing in real time and you

00:11:42.800 | can imagine mapping it to things like this you can get that kind of data so that's the that's what i

00:11:49.680 | mean by this is a bilingual model that speaks english and customer behavior in terms of semantic ids

00:11:56.400 | very interesting and uh like more clear-cut example would be like uh uh lm would be out of date because

00:12:05.040 | there's knowledge cut off but if you have like a new song or something you know exactly yeah something

00:12:10.320 | like this is a soft course oh another henry's yeah uh so what is the world knowledge and the local

00:12:16.240 | knowledge conflict so what will happen then i don't know um yeah i don't know i i guess it really depends

00:12:23.120 | on so that's world knowledge right and then when you maybe fine-tune this on local knowledge it depends on

00:12:28.240 | how much strength you want to know local knowledge to uh update the weights okay okay so while it's oh go

00:12:37.280 | ahead from basic training principles the local knowledge should always come first right because

00:12:43.600 | let's say like you have a base model that thinks you know if someone likes zelda they like mario kart but

00:12:50.240 | if you've trained it such that people that like zelda prefer some other category of game your your model

00:12:57.840 | is trained to you know predict one after the other so the the later training often over you know has more

00:13:05.840 | impact yeah i mean so the reason why i asked is because there's a lot of abundance of evidence in

00:13:10.320 | the like base model so by just having one example does that completely override the the information it

00:13:17.520 | has learned or with so much evidence um i don't know it's a good test i guess

00:13:24.400 | yeah honestly i don't know so that that's where this is what i'm trying to explore while we're

00:13:29.120 | already 15 minutes in um barely touch anything i want to cover but i'm going to try to speed through

00:13:35.840 | this a little bit faster and i'll stop for questions maybe at a 45 minute mark so now most systems

00:13:41.600 | nowadays use this retrieve and rank strategy essentially um here's the approximate nearest index

00:13:47.760 | i was talking about over here given a request we embed it you get approximate nearest neighbors index

00:13:53.040 | and as you add features and then you try to do some ranking on top of it this could be as simple as a

00:13:57.280 | logistic regression or decision tree or two tower network or whatever a sas rack we actually look

00:14:02.240 | at a sas rack which is essentially a one or two layer auto decoder model that is trained on user

00:14:07.760 | sequences we'll look at that later um so how they are trying to do this is they have a semantic

00:14:16.960 | representation of items called a semantic id so semantic id so usually product items or any item

00:14:24.320 | you represent is usually a random hash like some random hash of numbers this semantic id and we have

00:14:30.080 | seen is a sequence of four levels of tokens so now these four levels of tokens they actually encode

00:14:36.240 | the each item's content information and and we'll see how we do that so what they do is they use a

00:14:42.160 | pre-trained text encoder to generate content embeddings and this is to encode text you can

00:14:47.360 | imagine using a clip to encode images using uh other encoders to encode audio etc then they do a

00:14:55.840 | quantization scheme so what is this quantization scheme i think this is uh very important and that's

00:15:00.640 | why i want to take extra time to go through section uh 3.1 of this so here are the benefits that they they

00:15:10.160 | expound right like training and transform on semantically meaningful data allows knowledge

00:15:13.680 | sharing across items so essentially now we don't need random ids now when we understand one item in

00:15:19.920 | one format we can now understand it uh a new item so now for a new item if we have no customer behavioral

00:15:26.720 | data so long as we have a way to get the semantic id for it and because the semantic id for it the

00:15:33.280 | assumption is that the levels of the semantic id the first level the second level the third level if it

00:15:38.560 | we'll find a similar item we can start to recommend that item as well all right so essentially

00:15:43.440 | generalized to newly added items in a corpus and the other thing is the scale of item corpus usually

00:15:48.880 | you know on e-commerce or whatever the scale of items is like millions and maybe even billions

00:15:53.680 | so when you train such a model uh your embedding table becomes very big right in order of millions

00:15:58.240 | of billions but if you use semantic ids and if a semantic id is just represented by a combination of tokens

00:16:05.840 | uh we can now have a use very few embeddings to represent a lot of tables uh and and i'll show

00:16:13.120 | you i'll show you what i mean by this um the first thing i want to talk about is how we generate

00:16:20.720 | semantic ids um so one of the previous ideas was using a vector quantization recommender so it generates

00:16:30.960 | codes they're like semantic ids uh what they use is an rqvae residual quantize uh variation and auto encoder

00:16:43.040 | i think i think this is quite important like how to train a good rqvae that leads to semantically

00:16:49.120 | meaningful semantic ids that your downstream model can then learn from uh i think it's quite challenging and

00:16:55.120 | i haven't found very good uh literature understanding of how to do this well so this is their proposed

00:17:02.720 | framework first we're given an item given an item's content be text audio image or video we encode it to

00:17:12.000 | first we get the vectors then we quantize it to semantic code words that's step number one second

00:17:20.480 | now once we have these tokens the semantic tokens for an item we can now use it to train a transformer

00:17:27.200 | model or language model which is the demo i just showed you so i'm going to take a little bit of time

00:17:35.920 | to try to help to try to help us all understand what residual quantization means

00:17:42.320 | so essentially given an embedding okay given an embedding that comes in we consider this the residue

00:17:48.560 | we think of everything here within this within this gray box as residuos and embedding comes in we

00:17:56.160 | consider the first residue we try to find which codebook token it is most similar to

00:18:04.800 | and we assign it to it right so now we've assigned it to token number seven and uh it's the most similar

00:18:11.760 | so then what we take is we take the initial residue that comes in the initial embedding that comes in

00:18:16.960 | this blue box we subtract away the embedding of the codebook token seven and now we get one right

00:18:24.240 | this this remaining thing here this is the remaining residue then we repeat it at codebook level two

00:18:32.000 | at codebook level two we try to find the most similar codebook token embedding the most similar

00:18:38.720 | vector we try to find the most similar vector we map it to it and over here we map it to

00:18:41.840 | uh dimension this token one right so now we take the previous residue we subtract the new

00:18:50.000 | codebook level two vector and now we get the remaining residue is at this level at the residue two

00:18:59.600 | right and then we repeat this right we repeat this along we keep repeating this until uh and along the

00:19:07.520 | way we keep trying to minimize the residue so essentially based on this we can now assign

00:19:12.080 | given an embedding space we can now assign it to integers or tokens right so now this outstanding the

00:19:22.080 | remaining the semantic code for this is seven one four so now if we take seven plus one plus four

00:19:28.880 | the output of this will be the quantized representation essentially what has come

00:19:33.440 | in which is blue we do this residual quantization and when we take the respective tokens they are assigned

00:19:38.880 | to it if we sum it all up it should be the same as whatever has come in and then based on this this

00:19:48.080 | quantized representation with a decoder we can get the original embedding

00:19:55.200 | any questions here did i lose everyone where does i mean i read the paper too and this part totally lost

00:20:01.760 | me where does that initial code book come from uh you initialize it randomly okay as you start oh

00:20:10.320 | yeah or there are smarter ways to do this which i'll talk about so you've got the random initialization

00:20:16.240 | and then in this process where you're learning the representation are you like iteratively updating the

00:20:21.120 | code book and updating okay that makes a lot more time yes so this rqvae needs to be learned um and

00:20:31.280 | when you learn this rqvae there are a lot of metrics to look at which we'll see and it's really hard to

00:20:36.080 | understand which metrics indicate a good rqvae um okay so that's the semantic id generation uh quick

00:20:43.600 | question uh and finally when we try to calculate the loss of the contested version and original version

00:20:50.800 | at 714 do we actually do addition or there's some other operation actually when i imagine that after

00:21:00.160 | training the code book it will be deviated from the original initialization so it won't be in the same

00:21:07.680 | scale right addition may not make sense i actually do addition so just addition yeah i just do addition

00:21:14.960 | okay and i think the the image suggests as addition as well i think they mentioned in the paper that they

00:21:24.160 | do uh three different code books for that reason so that the each code book has sort of like a different

00:21:31.440 | scale so that you can there they are additive exactly um because the norm the residues decreases with

00:21:38.000 | increasing levels right so so that was what i explained via the image i'm going to try to explain

00:21:46.320 | it again via the text in the paper because i i think this is really important so whatever comes in

00:21:53.440 | is the latent representation right and we consider this the initial residue which is r0 now this is this

00:22:01.440 | r0 is quantized essentially converted to an integer by mapping into the nearest embedding from that level

00:22:07.840 | of code book so r0 so now to get the next residual right we just take r0 minus the code book embedding

00:22:18.160 | right over here to to get the next residue we just take okay then similarly we recursively repeat this

00:22:25.120 | to find the m code words that represent the semantic id so this is done from cost to find now there's this

00:22:34.560 | section over here which i think is also quite important uh that i really want to try to help us all

00:22:39.680 | understand essentially now we know how we get the quantization the input and output everything

00:22:46.400 | now this z you can see over here which is really just a summation uh which is really just a summation

00:22:55.040 | of all the code book vectors this is now passed is to into the decoder right which tries to recreate

00:23:02.560 | the input embedding so now this imagine your code book could be like 32 dimensions and your input could be

00:23:11.280 | like 1124 dimensions this decoder now needs to map it from the input the the code book representation

00:23:19.760 | through the original input and has to try to learn that okay so then the next thing is how do we what is

00:23:30.960 | the loss for this so this is quite interesting to me this is the one of the first few times i've actually

00:23:35.600 | trained a rqvae and i spent quite a bit of time trying to understand this um i'll briefly talk

00:23:42.000 | through it very quickly and then we're going to the notes to try to see how to understand this so the loss

00:23:47.360 | function is the reconstruction loss plus the rqvae loss right and the reconstruction loss this is

00:23:54.560 | straightforward but rqvae loss uh takes a bit of time to understand so now let's go into this

00:24:02.640 | so the rqvae loss right is the reconstruction loss which is how well we can reconstruct the original

00:24:08.720 | output this is quite straightforward the original input is x over here the reconstructed output is x

00:24:15.120 | hat over here and this is really squared loss error so so how can we reconstruct it now that's question

00:24:22.080 | that's quite straightforward but the quantization loss this is this one is a little bit more complex

00:24:28.400 | you can see that there are two terms here which is really sg sg means stop graded stop gradient on

00:24:34.080 | residual minus the codebook and embedding and then there's a beta term which is how you want to balance

00:24:42.320 | it and then the residual minus the stop gradient on the embedding so you can see the left and the right

00:24:48.080 | is essentially the same except the stop gradient is on different terms so the first term this updates the

00:24:56.560 | codebook codebook vectors which is this uh which is e which is this embedding here to be closer to the

00:25:04.000 | residual right we stop the gradient so that we treat the residual as the fixed target

00:25:10.080 | and now what this does is it pulls the embedding closer to the target

00:25:19.840 | yeah and then so what this does is updates the codebook vectors to represent better

00:25:24.480 | residuos now the second one is that it updates the encoder to return residuos closer to the

00:25:34.160 | codebook embedding what so you can see where we stop the gradient on the codebook embedding and we treat

00:25:40.160 | the embedding as fixed so now the embedding is fixed so now your encoder has to try to

00:25:45.840 | do the encoding so that it maps better to the codebook embedding so essentially the first term

00:25:55.200 | teaches the codebook the second term teaches the encoder

00:26:06.880 | i think it's something like gans i'm not very familiar with gans uh but so yeah i i'm not sure i

00:26:14.640 | can if i can comment on that uh ted i think vector quantization is a multiple rounding us yes i i think

00:26:21.440 | the intuition is right this is very similar um and then there's a beta which is a weighting factor right

00:26:27.440 | so you can see in the paper they use a weighting factor of 0.25 essentially what this means is that

00:26:34.480 | we want to train the encoder less than we want to train the codebook and again how do you choose this

00:26:44.720 | beta it it's it's really hard i don't know in this case i think having high fidelity of the codebook is

00:26:50.080 | important because that's what we will use to train our downstream model we're not training rqv for the

00:26:55.680 | sake of training in rqva we're training rq over here we're trying to rqva for the sake of having

00:27:00.400 | good semantic ids that we can use in a downstream use case any questions here

00:27:07.840 | did i lose everyone i was like i'm i was just i've just been building a variational auto encoder so

00:27:18.640 | i'm a little curious like when in all my reading there's always like a elbow or elbow like loss

00:27:26.560 | the and i don't see one there did you did you understand why they didn't use that or is it just

00:27:34.080 | not mentioned or they didn't use that it's just not mentioned i guess it's because um when you look

00:27:39.920 | at our cube and the rqvas didn't really come from this it came from representing audio and also images

00:27:49.600 | decided these two papers right residual vector quantizer so uh ted has a has a hand raised

00:27:56.960 | yeah so i don't deeply understand it but i think i can answer rj's question at a superficial level

00:28:05.840 | which is that the elbow is used to approximate when you are training your regular continuous vae okay and so you

00:28:15.920 | have some assumptions around like whatever normal distribution and things like that that what you're

00:28:20.880 | trying to do is you're trying to do maximum likelihood but you can't do it directly so you use

00:28:25.360 | this elbow as a proxy in order to try to get to the maximum likelihood okay what the what the vqvae paper

00:28:34.240 | says is that we're using a whatever multinomial distribution instead of this continuous distribution

00:28:40.400 | and and that's the reason why uh because of that distribution assumption we no longer have this

00:28:46.240 | elbow thing i've never fully wrapped my head around it but it's fundamentally because this discrete

00:28:51.440 | distribution is very different from the continuous distribution that we use with elbow i i so somebody

00:28:57.440 | can go deeper than that yeah that's as much as i got okay no that's actually super helpful and thank you for

00:29:03.760 | the i'm gonna have to read those two citations as well thank thanks a lot guys that's helpful thank you

00:29:10.160 | welcome am i going too fast right now or is it fine a bit too fast

00:29:17.120 | maybe a high level overview again for those that aren't following the technical deep dives um what is

00:29:27.280 | okay the high level overview is given some embedding of our item we want to summarize that embedding the

00:29:37.280 | embedding could be a hundred and a thousand dimensions we want to summarize it into tokens

00:29:42.960 | which is integers and that's what an rqva does

00:29:47.280 | so again to i think again i think in this paper the rqva is quite essential i think the rest of it this

00:29:56.320 | this was the one that i had the hardest uh the largest challenge to try to implement the rest

00:30:01.760 | are quite straightforward so i'll spend i want to spend a bit more time here can i ask um question

00:30:06.960 | go ahead yeah uh that sum it's the the vector sum you're summing you're summing the embeddings

00:30:13.040 | yeah okay yeah you just sum it up and you'll get the output is it fair to say the novel contribution

00:30:20.560 | here is intermediate token summarization versus textual yeah it could be essentially it's a way to convert

00:30:25.520 | embeddings to tokens right firstly you quantize the embeddings and you think about it it's really tokens

00:30:33.120 | what's a good choice of a number of levels it's hard um i don't have the answer to that but in the paper

00:30:40.720 | they use three levels and each level has um 256 code words so that essentially can represent 16 million

00:30:52.720 | unique combinations essentially 16 million unique combinations

00:30:56.160 | so now let's go into the code of course i won't be going to all the code i just want to go through

00:31:05.840 | the part to help folks understand uh a bit more

00:31:09.760 | i have this oh see this okay perfect so you can see over here the code book loss essentially that's

00:31:22.080 | how we represent the losses right the code book loss is just the the input we detach it the stop gradient

00:31:32.000 | and the quantized which is uh the quantized which is just the uh the combine the combined embeddings

00:31:39.600 | right the combined cobalt embeddings this is the code book loss commitment and go ahead

00:31:44.400 | and the commitment loss is really we just take the quantization and we detach it

00:31:52.400 | and we do a mean squared error on the input right that's essentially all there is to this loss

00:31:58.560 | right so that's how we implement it quite straightforward then um this is how we do the quantization

00:32:08.480 | so you can see we firstly initialize uh some array to just collect all these for every vector quantization

00:32:20.560 | level we take the residual and the residual is really the first time at the very first input the residue

00:32:27.120 | is just the input embedding right we take the residual we pass it through the vector quantization

00:32:33.680 | layer and we get the output then we get the residual for the next level by just taking the residual and we

00:32:43.920 | subtract subtract subtract the the embeddings right that's the residual now our new output

00:32:52.080 | is we just keep summing up all this so our quantized output is just uh we just keep summing up

00:33:02.320 | so essentially at the end we can represent this initial residual by the sum of the vector quantization layers

00:33:12.720 | and that's that's that recursive thing that's all there is to it okay maybe i'm oversimplifying it but yeah

00:33:22.160 | then when we encode it to semantic ids essentially this x is the embedding right

00:33:29.280 | uh we convert this embedding so for every vector oh yes go for it

00:33:34.960 | is someone unmuted when the color is unmuted

00:33:43.280 | okay maybe thank you for muting them so the input is really the embedding right so for every vector

00:33:52.960 | quantization quantization quantization layer and the embedding is the initial residual

00:33:57.360 | again we just pass it in we get the in we get the output and this output we essentially what we want

00:34:05.520 | is the index so the index and then we get the residual and so we get the residual minus the codebook embedding

00:34:13.200 | and we just go through it again so at the end of this we get all the indexes for the semantic id

00:34:20.160 | and now that is our those are you consider them as our tokens right the sid tokens that we essentially

00:34:27.040 | saw here right um this over here this maps to the first index 201 the second index third index fourth index

00:34:38.080 | so essentially my rqvae has three levels and the fourth level is just to for preventing collisions

00:34:48.880 | um i guess the last thing uh initialize okay the last thing the question that people folks had and

00:34:54.640 | this is what the paper does as well which is how do you first initialize these code books

00:34:59.200 | um there's a very interesting idea which is that we just take the first batch

00:35:04.000 | and for each level we perform k-means

00:35:12.400 | uh and we try to fit it right and then after that we just return the clusters we we do k-means with the

00:35:21.360 | means in terms of your kv size so uh so not kv size uh in terms of your cookbook size so my cookbook size

00:35:29.600 | is two five six so i want k-means with two five six means so now i have all these two five six means

00:35:38.160 | i just initialize it uh with these two five six means and that's how i do a smart initialization

00:35:47.040 | what does the fourth level in the rqvae my rqvae only has three levels the fourth level is something i

00:35:53.920 | artificially add when there is a collision in rqvae how to determine code book size again that's a great

00:36:00.160 | question i actually don't know uh how to develop a cookbook size it's really hard because we're not

00:36:04.480 | again we're not training the rqvae just for the sake of training rqva we're training rqvae for the

00:36:09.520 | downstream usage

00:36:10.400 | oh

00:36:13.680 | i think that's a that's a great example uh that of the vbo of the image that the bush had so now let's

00:36:23.120 | look at some trained rqvaes to try to understand how this works so i've trained a couple of rqvaes not all

00:36:33.680 | of them work very well but i want to talk you through this um so essentially if you recall

00:36:40.160 | their commitment with their beta was 0.25 right so all of these the beta is 0.25 unless i say otherwise

00:36:46.560 | so let's first understand what the impact on learning rate is um initially i have two learning rates the

00:36:55.520 | green learning rate is lower than the brown learning rate so you can see of course the brown one with a

00:37:01.600 | higher learning rate uh we get oh first let me take let me take some time to explain what the different

00:37:07.760 | losses means um okay so this is the validation loss essentially the the loss on the validation set uh this

00:37:23.200 | loss total is really just the training loss now if you recall the loss of the rqvae is two has two things

00:37:31.440 | in it that's the code book loss and then there's the commitment loss so now this and this is the

00:37:38.400 | reconstruction loss you can think of it as if you try to reconstruct the embedding how well does it do now

00:37:44.400 | so now this is the um vector quantization loss i do i didn't actually calculate the i think this is i

00:37:52.800 | can't remember this is the i think this is the code book loss right and the reconstruction loss is the uh

00:38:00.560 | um the commitment loss which is the reconstruction of the output loss okay so you can see and this is the

00:38:07.440 | residual norm which is how much residue is there left at the end of all my code book levels uh ideally this

00:38:15.520 | this demonstrates if you have very low residual norm it means that um your code books are

00:38:21.280 | explaining away most of the rest most of the input residual so a lower one is bad so of course all the

00:38:31.920 | losses the lower is better residual norm the lower is better now this one this is a different metric

00:38:38.000 | this is the unique ids proportion so at uh at some period i give a big batch of data uh maybe 16k or 32k

00:38:48.800 | give a big batch of data i do the i put it through semantic ids uh the quantization and i calculate

00:38:54.640 | the number of unique ids so you can see that as we train the number of unique ids gets less and less

00:39:00.080 | essentially the model is better at reconstructing the output but it uses fewer and fewer unique ids

00:39:08.160 | so what is a good model i think um what is a good model is quite hard to try to understand

00:39:18.800 | but here's what one person responded uh and the response kinds of make kind of make sense

00:39:24.640 | so that's what i've been working on so look at validation reconstruction loss again how well is your

00:39:31.520 | is your rqvae good at reconstructing the input the quantization error again this is your codebook error

00:39:37.120 | and codebook usage i think of this as unique ids as well as codebook usage we also see some graphs of codebook usage

00:39:46.880 | um okay so now you can see that with a higher learning with a higher learning rate which is

00:39:53.440 | this one this learning rate is higher than the degree we have lower losses overall but

00:40:00.080 | our codebook usage is also lower so i think that's that's one thing to note again i don't know which

00:40:06.880 | of which of these are better is it lower loss better or codebook usage better and then i just

00:40:10.880 | took a step and just pick something um now over here this uh i think maybe this is just easier to

00:40:19.440 | compare these are the various weights right if you remember this is the commitment weight this is a

00:40:23.760 | commitment weight of 0.5 and this is a commitment one essentially the commitment weight is how much

00:40:29.520 | weight that you want to have on your um on your um on your on your codebook right on your uh on your

00:40:40.400 | commitment loss on the on the validation on the reconstruction error so you can and this this is

00:40:47.680 | commitment weight of 0.25 which is the default so you can see with a higher commitment weight uh our

00:40:52.960 | reconstruction error is actually very similar right but when we push the commitment weight up to 1.0

00:41:00.960 | you can see something something really off course here uh our loss is a lot um just x-ray funcily

00:41:09.040 | you can see our loss is just very high but the unique ids is also higher which is actually better

00:41:15.600 | and the residual norm is lower which is a good thing that means we explain most of the variance

00:41:22.480 | so in terms of how to figure out how to pick a good commitment weight i think it's very tricky

00:41:27.200 | uh i haven't figured it out essentially here when i double the commitment weight i get told i have lower

00:41:32.480 | validation loss and my id proportion is similar but my residue norm is higher but in the end i just stick

00:41:39.280 | to the default which is 0.25 and over here this is another run i did where i clean the data essentially

00:41:45.680 | i excluded uh the product data and all this is like open open source amazon reviews

00:41:52.320 | data i clean the data i exclude those that are unusually short remove html tags and you can see

00:41:57.600 | by cleaning the data you can get a much lower validation loss much lower reconstruction loss

00:42:03.040 | residue norm is similar but your unique id proportion is a lot higher

00:42:10.960 | um does that make sense am i going yes the reader with the reduced number of unique ids am i just going

00:42:16.560 | too deep into this but i also do want to share a lot um okay we just take okay maybe i'm going too deep

00:42:26.720 | into this but i'll just take one last thing to show you how a good codebook looks like um so this is the

00:42:36.960 | codebooks so these are the codebooks right and this is this is the distribution of codebook usage

00:42:41.200 | this is a this is a not a very good distribution of codebook usage you can see all the codebook usages in

00:42:50.400 | um token number one right and you can see there's very large spikes so this is when your rqva is not

00:42:57.040 | trained well you don't have a very good distribution and but and then you can see over here um

00:43:05.600 | where's my numbers for you can see over here the proportion of unique ids is only 26 that means all your

00:43:21.600 | data you've only collapsed it to a quarter of it essentially you've thrown away three quarters of

00:43:27.200 | your data but what happens when you have a good uh good rqvae here's how it looks like the proportion

00:43:35.280 | of unique ids is 89 um but this is the codebook usage you can see a codebook usage is very well sort of

00:43:44.400 | somewhat well distributed and all the quotes are well learned and all the quotes are fairly well

00:43:51.680 | utilized so this is what it means by having a good codebook usage

00:43:58.560 | so what have you done so that you can uh have a good uh code distribution codebook

00:44:27.120 | um the main thing that i have done is so a few things they use k-means clustering initialization

00:44:37.600 | so i do that k-means clustering initialization um the other thing i do is

00:44:48.960 | codebook reset so essentially any time at every epoch if there are any codes that are unused i reset it

00:44:57.200 | so essentially i'm just forcing i i force the i reset unused codes for each rqvae layer

00:45:02.960 | so i'm forcing the model to keep learning on those unused codes seems to work decently um yeah

00:45:15.520 | so the next thing now we will very quickly go through the recommendations part of it because i

00:45:20.560 | think it's fairly straightforward um so oh michael has a hand raise hello

00:45:28.560 | yes hi you good yes yeah i'm just yeah i'm a south indian and i am right now entering into this air

00:45:39.200 | field so right now what do you suggest and how to enter into this field as per the new concepts that

00:45:46.320 | are right now in your point of view yeah i think a great way to learn this is i'm gonna share an invite

00:45:56.240 | link uh a great way to learn this is really just to hang out in the discord channel

00:46:02.640 | um so i'm gonna copy the link i'm gonna paste it in the chat i think the really just us is just

00:46:09.680 | learning via osmosis right yeah what are the folks yeah yeah due to this yeah boom i have learned the no

00:46:17.600 | code development and i then and all the all these things for almost four months but right after i have

00:46:25.760 | sort of issue i found her big right because like coding so yeah thank you michael what i would do

00:46:32.080 | is to really just uh ask on the discord channel and the thing is the the reason why i'm saying this

00:46:38.240 | is there's only 15 minutes left and i still have quite a bit i want to share and go through yeah

00:46:42.640 | anyone has any questions related to the paper

00:46:48.320 | frankie okay can you hear me yes yeah a quick question maybe it's not relevant but i noticed

00:46:54.480 | that you did some stop gradient uh so it's stopping back propagation is that is that because you're trying

00:47:00.240 | to freeze something when you're doing training i don't quite understand it's it's just um firstly it's

00:47:07.600 | part of the rqvae formulation where they do stop gradient right and so i'm just following it and the reason

00:47:14.720 | is if you don't do stop gradient if you don't do stop gradient this is the loss function right it just

00:47:21.040 | simplifies to this right which is residual minus codebook embedding so now this leads to a degenerate

00:47:26.480 | solution right you can imagine that your encoder will just encode everything to 000 and your codebook

00:47:33.600 | will just encode everything to 000 and essentially you just use one single thing where they both cheat

00:47:38.640 | okay but then you have to allow training at some point right because you're training the codebook

00:47:44.400 | so how do you how does that how does that codebook get modulated then if you do stop gradient so we

00:47:50.240 | do stop reading on the residue for this for the first half of the rqvae loss equation and then we do stop

00:47:56.800 | gradient on the codebook for the second half of the and then it's a it's a weightage we waited by

00:48:01.600 | yeah i see it thank you so much yeah okay so i know we have some questions left but i'm going to take

00:48:06.320 | five to ten minutes to try to go through the recommendations part of it uh which is really where it all comes

00:48:12.640 | together and we also have results for that so i'm going to ignore the questions for a while so now

00:48:18.160 | generative uh recommendations right what do you do is we essentially reconstruct item sequences for every

00:48:23.520 | user we sort them in terms of all the items they've interacted with then given a sequence of items

00:48:30.320 | the recommender's task is to predict the next item very much the same as language modeling given a

00:48:37.040 | sentence predict the next word in the sentence right essentially that's what sasrack is what a lot of our

00:48:42.960 | recommendation model is right now so uh so for a regular recommendation given a sequence of items you

00:48:51.520 | just predict the next item for a semantic id based on recommendation given a sequence of semantic id you

00:48:58.720 | predict the next semantic id which is not which is now not a single item but a sequence of four semantic ids

00:49:05.040 | so now the task is more challenging you have to try to predict the four semantic ids

00:49:09.360 | all right so you can see that they they use some data sets here um and they have the rqva which we

00:49:15.840 | spoke about there's an encoder a residual quantizer and the decoder i think the encoder and decoder is

00:49:20.720 | essentially the same thing i mean not the same thing it's just mirror image of each other different

00:49:25.440 | ways of course as well and residual quantizer so you can see they have a sequence to sequence

00:49:31.280 | more than implementation um and this is their code book right uh one or two four tokens um

00:49:37.920 | it's only three levels and the fourth level is for breaking hashes they include user specific

00:49:44.720 | tokens for personalization in my implementation in my implementation i didn't do that now let's look

00:49:49.680 | at some sas rec code for the recommendations and we'll see how how similar it is to um

00:49:55.360 | how similar it is to language modeling

00:49:59.760 | so over here this is the sas red code and if you see it's actually very similar right you see

00:50:08.080 | modules like causal self-attention which is predicting the next token you can see the familiar things attention

00:50:15.040 | number of heads etc and then you know qkv and then you have our mlp uh which is really just uh reluce

00:50:22.160 | and then the transformer block this is a pre-layer norm layer norm self-attention layer norm and mlp

00:50:29.040 | that's it that is our recommendation system model so now the sas rec is item embedding for every single

00:50:37.280 | item we need to have a single item embedding position embedding and dropout

00:50:41.840 | and then the blocks are really just the number of hidden units you want and just transformer blocks

00:50:47.520 | and of course a final layer norm um for the forward pass what we do is we get all the item embeddings

00:50:57.440 | we get all the positions and we combine it so now we have the hidden states right it's actually the

00:51:05.760 | what has happened in the past this is what has happened in the past now for the prediction we take

00:51:11.040 | the hidden states which is the past five six now imagine this is language modeling we take the past

00:51:18.800 | five to six tokens we forward it we get the next language token candidate embeddings you can see now

00:51:25.440 | we get the candidate embeddings and what we do in recommendations oops what we do in recommendations

00:51:36.960 | is we score them and the score is really just dot product essentially given what has happened in the

00:51:43.680 | past uh we encode it we get a final hidden layer given all the candidate embeddings we do a dot product

00:51:50.400 | and that's the score so essentially given all the sentences given all the potential next tokens

00:51:57.680 | get the dot product to get the next best token and that's the same for recommendations uh over here

00:52:03.520 | um and the training step i don't think i'll go through this um i won't go through this

00:52:12.720 | now for sasright semantic id it's the same right now this is the forward pass uh no this is not the

00:52:18.880 | forward pass for a sasright semantic id this is the same predict next item so now you saw that in

00:52:26.560 | the previously we were just predicting only one single next item now in this case we have to predict

00:52:32.160 | four tokens to represent the next item so when we do training we apply teacher forcing whereby

00:52:41.920 | first we try to predict the first token if that is correct that's fantastic we use that that first

00:52:46.720 | token to predict the next token but if it's wrong we replace it with the actual correct token

00:52:51.120 | so that's what we do when we do training but when we do evaluation we don't do this

00:52:57.680 | uh when we do evaluation

00:53:01.040 | you can see uh

00:53:07.840 | okay i'm definitely losing people here so i i i won't i won't go too deep into this but when we do

00:53:12.080 | evaluation we actually don't try to correct the evaluation so now let's look at some results of

00:53:16.880 | how this runs essentially when i was doing this all that i really cared about was do my semantic ids

00:53:22.640 | make sense so let's first look at this uh this is a this is a sasright model you can see the the ndcg is

00:53:31.040 | uh unusually high right so firstly the purple line is the raw sasrack that just predicts item ids

00:53:39.920 | given the past item ids predicts the next item id uh let's just look at hit rate right you can see the

00:53:44.960 | hit rate is 99 uh i i made it artificially easy uh because i just wanted to get a sense of whether

00:53:50.480 | there was any signal or not you can see here is 99 uh we exclude the most infrequent items there's no

00:53:56.160 | cold start problem over here and uh the the false negatives are the false positives are very easy to

00:54:01.680 | get so you can see this is how the regular suspect does with ndcg of 0.76 now let's now look at our

00:54:08.320 | semantic ids now again to be to be very clear right semantic ids the combination of semantic ids that are

00:54:15.440 | possible is 16.8 million but in our data set we only have 67 000 potential data points so the fact

00:54:23.440 | that if from that 16.8 combinations is able to even predict correct data points that's a huge thing

00:54:31.040 | so over here we can see that after we train it the hit rate is 81 percent that is huge essentially it

00:54:37.840 | means that the model this new recommendation model instead of predicting the next item it has to predict

00:54:44.400 | four next items the the four tokens that make up the next item and only if that is an exact match we

00:54:51.680 | consider as a hit a hit rate right recall and it's able to do that and you can see that the ndcg is

00:54:57.520 | able to do that uh on ndcg is also decent so now it doesn't it doesn't quite outperform uh the regular

00:55:03.600 | sas right because this is uh it would outperform it if we had coastal items all the very dirty items i

00:55:10.000 | think it could beat or even match but essentially this was all just a test to try to see if we can actually

00:55:16.240 | train such a model now now that this test has passed we know that our semantic ids are actually working

00:55:23.440 | well now we can fine-tune a language model right um this is so this is fine-tuning a language model

00:55:33.200 | you can see that the this is the learning rate so initially this is my original data you can see

00:55:40.080 | uh maybe i'll just hide this first this is this is training on my original data and you can see my

00:55:44.160 | original data this is the validation my original data was very huge uh was there was a lot of data

00:55:49.360 | because i didn't really clean it i just used everything i was just not sure whether you could

00:55:52.720 | learn or and i found that it could learn so let's look at an example over here this so you can see over

00:56:02.720 | here this is the initial props right and it's able to say things like let's look at test number three

00:56:09.360 | if product a has this id if product b has this id what can you tell me about their relationship

00:56:13.920 | and all he sees is ids but he's able to say they're related through puzzles

00:56:19.120 | um and then list three products similar to this product right i don't know what this product is um but

00:56:28.240 | i i do know that when i look at it in the title form this is what i expected it was able to respond

00:56:33.200 | invalid semantic ids so what this means is that hey you know this thing can learn uh on my original data

00:56:40.240 | so then after i clean up my original data so now this is my new data set you can see a new data set

00:56:44.720 | it's half the size of original data when you can see when we have this sharp drop during training loss

00:56:50.400 | essentially that means i'm it's the end of an epoch it's a new epoch but evaluation loss just keeps going

00:56:56.080 | down as well and the final outcome we have is a model like this this is a checkpoint from the first epoch

00:57:04.160 | whereby it's able to um it's able to speak in both english and semantic ids

00:57:15.360 | um okay that was all i had uh any questions sorry it took so long

00:57:20.720 | you sure you have a question hi eugene uh so you said that um when you notice some code points are

00:57:33.520 | being unused you reset them um after you reset did you notice them getting used to have some metric to

00:57:39.840 | track the reset one actually was if the resetting was actually helpful in that case yes i i was able to

00:57:47.280 | try that uh sorry i'm just responding to numbers yes i do have that um uh in my let's again let's look at

00:57:59.040 | this when i was training the rqv i had a lot of metrics uh i only went through just a few of them

00:58:09.040 | um but this is it this is the metric um that is important um first oh wait are you oh wait i'm

00:58:17.360 | stopping you stop sorry okay here we go um first let me reset the axis

00:58:24.560 | okay so the metric that is important is this codebook usage so i do log codebook usage

00:58:38.240 | um at every epoch so you can see at step 100 or at epoch 100 codebook usage was only 24 20 24

00:58:44.400 | but as you keep training it codebook usage essentially goes up to 100

00:58:48.480 | so that's the metric i i love nice thanks you're welcome uh frankie oh yeah um can you go over like

00:58:57.840 | again uh for the cement that i semantic id part what is the training data because i'm having a hard time

00:59:04.400 | understanding like what what do you actually what is the llm learning that so can you can explain that

00:59:10.560 | again uh for training the rqvae no no no no for the semantic id part uh the last example because you

00:59:19.360 | basically you're basically inputting say a cement id and say what are the hardest two things related to

00:59:25.280 | each other and i'm having a hard time figuring out like what i what is the um was it actually learning

00:59:31.600 | here so this is the training data so essentially the training data is a sequence of items so you can

00:59:38.720 | see this is the raw item right uh and for each item we map it to this sequence of semantic ids

00:59:46.880 | this is now we're trying to order does the order in this uh list matter or not yes the order matters

00:59:53.840 | is essentially the sequence of what people transact okay and if there's the order in the

00:59:59.920 | semantic id matters as well for example if we were to swap the positions of sid105 and sid341 it wouldn't

01:00:06.960 | make sense that would be an invalid semantic id and and the reason is because the code the first code book

01:00:12.560 | vocab the first number should be between 0 to 255 the second number should be between 256 to 511 and

01:00:19.280 | so on and so forth yeah so that makes sense i mean but i'm talking about like in the group of four so

01:00:25.200 | if you permute those uh does that does it matter so it does matter does matter as well yeah it definitely

01:00:33.520 | it must be in this order if not it will be an invalid semantic id i understand because you have you have to

01:00:39.280 | go with your levels right of your coding right so you have four tokens so that understand and but i'm saying

01:00:44.480 | that you have multiple items here right yes so if you permute those does it matter uh i don't know

01:00:51.920 | i think it depends it may or may not so essentially if i were to buy a phone and then you recommend me a

01:01:00.000 | phone case and then pair of headphones and a screen protector that makes a lot of sense but if i were to

01:01:07.440 | buy a screen protector and you recommend me a phone that doesn't make sense

01:01:10.640 | right in terms of recommendations and user behavior okay okay so that's encodings

01:01:19.440 | that's the sequence encodes that behavior that's what you're saying yes that's correct okay

01:01:31.600 | any other questions yeah eugene uh i had done a lot of work on vector quantization and the there are some

01:01:43.680 | other uh techniques to handle like under utilization like splitting and one important question is the

01:01:53.520 | distance metric like i noticed that you're using euclidean distance to measure the difference between

01:02:01.600 | the embeddings and the code words but the euclidean distance is not good when there is a difference in

01:02:09.840 | value or rate for the features so have you tried to do it i'm sorry i'm in the noisy environment please go ahead

01:02:23.520 | okay so have you tried to do it okay so have you tried different metrics like mahalanova's distance

01:02:27.440 | um i have not

01:02:34.080 | essentially my implementation uh reflects what um what was written in the paper so and i i'm not sure if i'm

01:02:47.840 | really using euclidean distance uh i don't remember where is it

01:02:54.880 | yeah but it's it's worth a try but i i just use the implementation written in the paper and it works

01:03:04.160 | it's in the main uh like uh equation where you have the stop gradient because that uh that sign is the

01:03:12.000 | ingredient distance and it is by default what k means algorithm uses but yeah you can change that

01:03:18.080 | so then that's what i'm using yeah so i just replicated that

01:03:22.480 | any other question oh pastor you have a question yeah so i have a question related to to that so in

01:03:34.160 | the paper says that you that you use that you use k means k means to to initialize the codebook and then

01:03:44.320 | like you used it like you use it for the free first training batch and then you use the centroids

01:03:51.920 | uh as initialization is is that what you use and uh follow of that like it also says that there is an

01:04:01.040 | option to use like k means clustering hierarchically but it lost semantic meaning so i didn't really

01:04:08.400 | understand that part if you can briefly explain like and and if you use the centroids as initialization

01:04:14.880 | thanks uh so yes that's what i used um i'm sorry what was your second question yeah so so also the paper

01:04:24.960 | the paper said that the other option is used k means hierarchically but it lost semantic meaning

01:04:34.640 | between the clusters yes yes yes so yeah do you know why or how that plays or what's the difference

01:04:42.720 | between using the the you know the the what is suggesting the paper uh that's a great question

01:04:49.520 | right so are you referring to this uh they use k-means clustering hierarchically essentially these

01:04:55.760 | are different alternatives or quantization right um over here we quantize using an rqvae they they also

01:05:02.800 | talk about locality sensitive hashing then the other one is they use k-means clustering first k-means

01:05:08.080 | then second level k-means and third k-means um i don't know why it loses semantic meaning um honestly

01:05:16.320 | so i i i i won't be able to address that i haven't actually tried this yeah and i don't have a strong

01:05:21.680 | intuition on why it loses semantic meaning okay cool thanks thanks yeah i can uh try to answer that

01:05:30.560 | like when you are uh having the same id and you're using the proper uh k-mean clustering the entities

01:05:41.360 | are unique and they represent something but if you try to split them into different

01:05:46.320 | levels like the first order and you group them and then you do grouping of the second layer as if

01:05:54.400 | like you're trying to cluster numbers uh if you keep a three-digit number nine seven three uh and cluster it

01:06:04.160 | with eight seven uh eight two one then they are close together but if you cluster on the uh the

01:06:10.880 | hundred digits first then at the uh tens and then uh the ones you know you lose the meaning and the

01:06:20.240 | these numbers do not become close to each other

01:06:26.000 | awesome makes sense thank you thank you okay well very much over time thank you vibu for kindly

01:06:34.000 | hosting us for so long uh i can stay for any other questions feel free to take eugene's time is your

01:06:42.640 | experience uh for next week we will volunteer for a paper i'll post it in the chat right now

01:06:49.920 | um but yeah thank you thank you eugene for presenting and same thing if anyone wants to volunteer a paper

01:06:56.560 | to present the following week um you know now is your shot you'll you'll definitely learn a lot

01:07:03.600 | going through the paper being ready to teach it to someone otherwise the paper is how do language models

01:07:09.440 | memorize and and also to be clear i want to make it very clear right that i did not prepare for

01:07:14.640 | this over the course of a week so if you're preparing for this i've been working on this for

01:07:19.920 | several weeks ever since like 4th of july holiday right and essentially this is just i've been working

01:07:26.320 | on it i'm excited to share about with people and that's why i'm sharing most of the time we usually

01:07:30.080 | just go through a paper so if you want to volunteer it's okay to just have the paper that's it

01:07:35.200 | if you want to see the other side of uh you know the other extreme unlike eugene who prepares for

01:07:40.720 | months i read the paper in the day before the day or the day and i morning off hey don't call me out

01:07:47.600 | yes but people have slides that's the thing i will never have slides no no i i used to do slides i

01:07:52.640 | actually think it's uh slightly detrimental i don't think it's as beneficial to make slides other papers

01:07:58.000 | anymore papers are good ways to read uh to understand information so now it's just uh walk through a

01:08:04.160 | highlighted paper just read it understand it and yeah okay take eugene's time asking about semantic ids

01:08:15.440 | yeah and we can we can also go through more of the paper if you want i know i haven't

01:08:19.280 | gone through as much of it as i should but it's actually the the answer is there and uh the the

01:08:27.760 | results replication is very similar to show you have a hand raised hey eugene uh so while you're

01:08:32.400 | implementing this right uh were there anything was there anything from the paper that you could not

01:08:37.040 | replicate which you had tried uh i think it took me a lot of time to try to train a good rqvae um

01:08:52.480 | then that's why i i spent so much time on it so essentially to train an rqvae you see that i

01:08:59.360 | have so many experiments right gradient i i did crazy things stop gradient to decoder um gradient clipping

01:09:07.600 | and a lot of it failed um i even tried like there was this thing called um exponential moving average

01:09:14.720 | to learn the rqvae code books like it has great uh unique id unique ids right um but it's just

01:09:22.960 | not able to learn you can see that the loss is very bad um and and that was because i was new to this so

01:09:30.400 | you can see that the loss uh actually if we if we zoom in on only those after 2000 you can see that

01:09:38.080 | we see zotero oh shoot you only see zotero oh thank you i should share my desktop thank you for

01:09:44.640 | so you can see i i tried some crazy things like this exponential exponential moving average approach

01:09:50.240 | to update my code books so you can see um this the purple line is the exponential moving

01:09:55.360 | average you can see the loss is very high um and it just doesn't work as well uh like gradient

01:10:02.000 | clipping the rotation trick uh figure out the right batch size is what the what the right weight is what

01:10:06.960 | the clean data is so this took me several experiments and i that was after even deleting the experiments

01:10:13.360 | that just don't make sense to save whereas the recommendation part i just needed two experiments

01:10:19.040 | you can see yes even though that the recommendation loss is uh the hit rate and ndcg is not close to the

01:10:26.080 | sastrack one the intent was never to that the intent was can i can a sastrack actually learn on this

01:10:32.880 | sequence of four tokens and very quickly you find that yes it can learn and then that's when i okay now

01:10:40.000 | let's not train a sastrack from scratch let's train a um let's fine tune a quen model from scratch and

01:10:48.960 | this was not part of the paper this was just uh for my own learning you can see the paper uses all these

01:10:54.640 | uh recommendation models but yeah that's it and uh because i'm also planning to train an rqva is there

01:11:04.720 | some uh good places i can start with i remember this uh there should be some official report for

01:11:10.240 | that on github if i'm not wrong i remember seeing that a while back uh i well i wish there

01:11:17.840 | was but i definitely didn't come across that um but what i would recommend is essentially this is my

01:11:27.680 | recommendation uh is very great for learning especially now with things like clock code

01:11:33.520 | to try to code this out from scratch uh you really learn the nitty-gritty things right about how

01:11:39.360 | that code is actually represented uh s and and yeah you're i think colette was right i was using euclidean

01:11:48.000 | distance uh and this is the distance i was computing so but yeah i don't know maybe there's a better

01:11:53.600 | distance i i certainly haven't gone as far down the road i just took the the default implementation and

01:11:59.760 | just followed it makes sense thanks for answering the questions eugene you're welcome eugene quick

01:12:07.440 | question on your highlighting what does red and green mean um so over here so green means good right like

01:12:20.000 | these are the benefits training allows knowledge sharing atomic item ideas and then red is the

01:12:25.840 | downsides right essentially we are considering a new technique what a downside so with the regular sas

01:12:31.440 | right if i had to train a regular sas right and i had a billion products my embedding table would be a

01:12:36.160 | billion products but if i had two five six with the power of with just a four level code book two five six

01:12:45.120 | four four four levels i can represent four billion products and that's just one oh two four embeddings

01:12:52.800 | right so instead of a billion embeddings i uh yeah yeah a billion level embedding table i just need a

01:13:00.000 | thousand level embedding table and that saves you a lot of uh compute i see thank you thank you you're

01:13:08.320 | welcome kishore has a hand raised i assume kishore has asked his question frankie you have a hand raised

01:13:13.120 | uh yeah so i think it's similar to what you just spoke about i had a question about if you do the two

01:13:18.640 | tower one you have two encoders and they're trying to like create this embedding that approximates whatever

01:13:23.760 | query and item so it creates an embedding and i can't so i think you tried to explain a little bit just

01:13:30.800 | now but i try to understand why is that embedding worse than this one that's creating by you know

01:13:37.600 | whatever your quantization steps right then the betting is not worse i think the embedding is probably

01:13:43.680 | just as good or even better but there will be a lot of those embeddings so imagine if we had a billion

01:13:50.720 | products we will need a billion embeddings one for each product right but with semantic ids

01:13:57.680 | if i have um if i have a four level code book because each code book can represent two five and

01:14:04.640 | assume each code book can represent two five can you see my raycast yes so imagine each code book

01:14:10.480 | can represent two five six right and then the combination all the auto permutations of this

01:14:16.160 | codebook is actually four billion now i don't have a i don't need a billion products i just need

01:14:23.840 | two five six times four i don't need a billion embeddings i just need two five six times four

01:14:28.000 | embeddings so in some sense i kind of think of it as a better encoding of what it is right so you're

01:14:36.240 | saying that i can more economically compress more economically compress yeah i think economically

01:14:41.120 | compress is the right term so and and the reason is because at least in my so in the paper

01:14:49.920 | in the paper they showed that um using their generative retrieval they were able to achieve

01:14:56.000 | better results that's not something that i was able to replicate um and also because their data

01:15:01.840 | sets are actually kind of small the other size is actually very small all right you can see that

01:15:09.040 | their data sets is like very few items um i deliberately chose a data set that was much bigger

01:15:16.640 | and maybe with a bigger this i again i don't know how this would scale with a much bigger data set my

01:15:21.120 | data is at least 4x bigger than their largest data set and actually want to scale this to million level

01:15:26.480 | data sets right uh so i haven't that's one thing i haven't been able to replicate but again you can see

01:15:32.800 | here that um it's it's not stupid this idea is the model even a regular substrat is able to learn

01:15:42.480 | on this sequence of token ids

01:15:43.920 | yeah any other questions uh how are you sorry the hand raising function seems like very me i just asked

01:15:57.120 | directly okay so i'm curious that for the suspect models and the crane model that you fine tuned have

01:16:05.440 | you compared the performance of those in terms of uh next uh cement handy recommendation

01:16:13.760 | so obviously the sasrack model is way better um the the quen model is not as good um but the purpose of

01:16:24.080 | the quen model is not to be better than the sasrack model right the purpose of the quen model is to

01:16:32.080 | train to have this new capability whereby users can

01:16:40.160 | shape their recommendations essentially you can imagine this like chat right let's say okay

01:16:50.800 | it's the convergence of chat it's the convergence of recommendation systems search and chat

01:16:59.520 | that is the intent in the sense that it's have more general interface than than the suspect that

01:17:09.920 | that mostly like the commandless semantic id in this case i think if you will build a chat model

01:17:16.400 | then this cram model could be helpful uh yeah yeah so flow has a great question um what is this model

01:17:25.920 | param size so um let's look at it

01:17:31.680 | do i have logs please tell me i have logs yes i do have logs fantastic

01:17:39.280 | usually you're not sharing your skin again yeah i'm showing my screen now uh okay so you can see

01:17:43.440 | this is the regular sasrack model um you can see we have these users and this is a total parameter

01:17:49.840 | size it's only a one million parameter model right and then when we train it on and then for the larger

01:17:58.880 | sasrack model which is the one with the tokens um

01:18:08.880 | oh it's a seven million parameter model yeah so yeah it's whereas quen is like 8b

01:18:17.120 | so yeah it's a lot smaller but you know for recommendation systems models where you're just

01:18:22.880 | predicting an express token you don't need something so big there's there's no benefit from additional

01:18:27.600 | depth in your transformer model

01:18:36.960 | okay okay i guess the questions are drying up okay so that's it then thank you for staying

01:18:41.760 | oh this is me yes so the semantics id uh is it uh only four uh level four digits

01:18:54.800 | in my use case i only use four digits but you can imagine this to be any you can make it eight levels

01:19:02.640 | at every code book level you can have more code books more code words in my case every couple

01:19:08.400 | levels are in 256 so you can have it as wide and as deep as you want okay and again how to decide that

01:19:17.520 | is very very difficult uh because there is if you know of a way please share me i don't know of a way

01:19:25.360 | that i can assess if my sas track is good or not no i don't know of a way where i can assess if my

01:19:31.680 | model is good or not my rqvae the value of my model is actually the value of what it does downstream

01:19:43.600 | um so that is tricky so i have i have some rqvae run analysis so essentially with all the different

01:19:51.760 | ways like what the different unique ids are so essentially eventually what i did is i prioritize

01:19:57.200 | validation loss and the proportion of unique ids um and also not too many tokens because that makes

01:20:03.520 | real-time generation uh in the chat format if you have like if your if your semantic id is like 32 tokens

01:20:10.480 | long then in order to return the product you need 32 tokens which is not very efficient so i just chose

01:20:15.600 | to have four tokens long uh yeah i think there are other ways uh you know to measure that you mentioned

01:20:25.120 | two of them um but um you can also look at the perplexity or code book like token efficiency

01:20:37.360 | and uh what does that mean like uh how many tokens are needed to achieve uh good performance in your

01:20:45.440 | opinion but but what is the depth measurement of good performance then yes uh you there are some