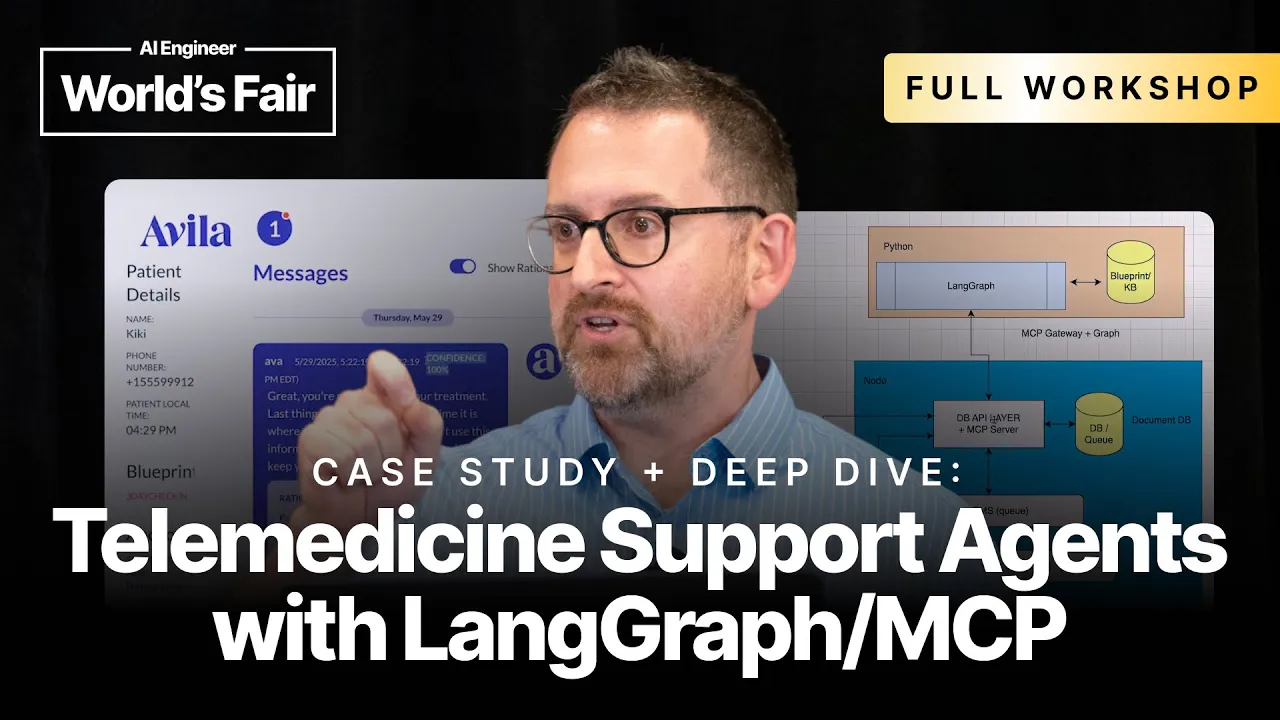

Case Study + Deep Dive: Telemedicine Support Agents with LangGraph/MCP - Dan Mason

00:00:00.040 | Okay. Hey everybody, thank you so much for coming. Really appreciate you being here. This is a great

00:00:20.340 | show. I love this show. I was here last year as an attendee. Spoke in New York at the New York

00:00:25.860 | Summit in February, and I'm really thrilled to be back. So this is very much a show and

00:00:31.420 | tell. I said this in the Slack channel, so if anybody's not in the Slack channel, feel

00:00:34.380 | free to join it. There's a couple of links in there that might be helpful to you. It

00:00:37.680 | is Workshop LangGraph MCP Agents, if anybody needs that. But fundamentally, I'm just here

00:00:45.200 | to walk through some really interesting work that my team's been doing around building agent

00:00:49.920 | workflows for a healthcare use case. And this is very much like the way we did it. I'll

00:00:57.060 | get into some details about that. It's not the only way to do it. And I'm hopeful that

00:01:01.420 | somebody in this audience might look at this and be like, that's dumb. You should do that

00:01:04.480 | better. And please raise your hand and tell me. But it's been really fun to build. I'm really,

00:01:09.540 | really happy with the results and really excited to show you guys what it's all about. Okay,

00:01:14.860 | so I will go into presentation mode. All right, here we go. Okay, first, just a very quick

00:01:25.180 | couple things about Stride. That's me if anybody needs my LinkedIn. But there's a couple other

00:01:29.080 | places you can find that. We are a custom software consultancy. So what that means in practice

00:01:35.780 | is whatever you need, we'll build it. We have been doing a whole lot of AI stuff. This kind

00:01:41.540 | of falls into a few specific buckets. We use a lot of AI for code generation. We have a couple

00:01:47.480 | of both products and services we've built to do things like unit test creation and maintenance.

00:01:52.580 | We've done a bunch of stuff around modernization of super old dumb code bases. You know, things like,

00:01:58.460 | you know, early 2000s era dot net is one of the things we specialize in. But what I'm going to show

00:02:04.280 | you today is really what we do around agent workflows. So the idea with, you know, this agent workflow

00:02:09.140 | stuff is really just that, you know, it's something that could be done with traditional software. And

00:02:13.820 | this thing I'm going to show you was done with traditional software in its first run. But we have

00:02:19.040 | rebuilt it with an LLM at the core to make it more flexible, more capable, and, you know, ultimately,

00:02:24.800 | just a lot cooler. So really excited to show you guys more about that. So I'm going to start with a

00:02:31.580 | little bit of grounding. I'm going to do a case study, which is very brief, but will give you a sense of

00:02:35.880 | kind of the problem we were trying to solve and how well I think we solved it. And then we'll go as

00:02:40.560 | deep as we all want to go in terms of how it works. Let me ask this up front. If you have questions,

00:02:46.380 | please raise your hand. I will try to notice and I'll try to get to you. There are some mics we could

00:02:50.280 | pass around, but it probably is better for you to just shout it out. And then I'll repeat it into

00:02:54.560 | my mic. And fundamentally, like, I have no idea if this is two hours worth of material.

00:02:59.240 | It probably is. But, you know, please keep me honest. I'll talk about anything that is relevant

00:03:03.920 | to this that you guys want to talk about. So the client here is Avila. So Avila Science is a women's

00:03:13.600 | health sort of institution, which is trying to help with the treatment of early pregnancy loss,

00:03:18.880 | otherwise usually known as miscarriage. What this is, though, is specifically a treatment where what

00:03:25.040 | happens is that, you know, you experience the event, you end up at the hospital or at a clinic. They send you

00:03:29.280 | home with medicine, right? The medicine is something that then you have to administer yourself at both a

00:03:34.880 | very traumatic time for you and your family, at a time when, you know, you need to keep track of when

00:03:38.720 | you are supposed to do things. It can be really challenging. There's other use cases beyond this in

00:03:43.040 | terms of chemotherapy, when people have trouble remembering what day it is, you know, let alone what

00:03:46.800 | they're supposed to be doing, right? You know, there's a variety of treatments that this is relevant for.

00:03:50.080 | But Avila, in particular, has a system that they use to help people essentially administer these

00:03:56.480 | telemedicine regimens at home, right? And that system is text message-based. So everything here I'm going

00:04:01.840 | to show you is essentially a text messaging-based engine with, you know, some core business logic

00:04:07.520 | that helps people stay on track. It answers their questions. It checks in on them, you know,

00:04:12.240 | to make sure that the treatment went well. They still have a doctor relationship. This isn't replacing

00:04:16.000 | the doctor. It is simply helping to get people through this treatment without a doctor's direct

00:04:20.480 | support, at least a lot of the time. So a few disclaimers up front. First of all,

00:04:25.360 | I'm going to show you a whole bunch of stuff here that is the client's actual code. Thank you so much

00:04:30.320 | to my client. Thanks to Avila for being so open with this. It's really awesome. I'm really happy to be

00:04:34.000 | able to show you as much as I'm going to show you. I have redacted a few things. I think what's left is

00:04:38.560 | still, you know, very much going to give you the character of the whole thing and an idea of how it works.

00:04:42.000 | Stride, we are custom software people, so we built custom software. I don't want to hide that part,

00:04:48.480 | right? It's possible to do a lot of this stuff with off-the-shelf tools, but there were some specific

00:04:53.120 | requirements this client had that made it better, frankly, to build a lot of it custom. So we did,

00:04:58.880 | but we did the best we could to use, you know, big swaths of off-the-shelf, right? So you'll see a lot of

00:05:03.280 | LangGraph, LangChain, LangSmith, you know, a bunch of other things like that, you know, very much in here,

00:05:07.520 | because we do believe that that adds value and that it fundamentally makes the system a lot more

00:05:12.160 | explainable. Yeah, and, you know, there are also some constraints in terms of how it's hosted. This

00:05:16.800 | is, you know, at least partially intersecting with patient data and various things like HIPAA and other

00:05:21.280 | privacy requirements. The other thing, as I started with earlier, there's no right way to do this,

00:05:27.040 | but this one does work for us, and I think you'll see as we walk through it some of the choices we made.

00:05:31.440 | You know, there are definitely other ways we could have plugged the tools together. I think there's

00:05:35.440 | definitely other ways we could have done this workflow. But we like how this came out. It

00:05:39.520 | preserved some of the things that we really knew were important to our client and that kind of reserve,

00:05:43.440 | you know, a lot of human judgment, you know, as opposed to sort of taking the LLMs entirely at

00:05:48.240 | their word. And this is very much a hybrid system with humans very much in the loop. And again, as I

00:05:54.560 | mentioned before, I would really love it if you guys looked at what we're doing here and said, "That's dumb."

00:05:59.840 | Or have you thought about this, right? Because, A, you know, this is a project which we've only been

00:06:05.200 | working on for a few months, but, you know, things have already evolved. That's the way it is in AI.

00:06:09.440 | So I'm certain, and I know of a handful of things where, you know, we could replace

00:06:13.360 | some of the choices we made with newer, more modern choices. And at the same time, you know,

00:06:17.440 | there may be cases where, you know, I'm genuinely not using LangGraph right. I would love if someone

00:06:22.320 | raises their hand and tells me that. So please do have that in the back of your brains.

00:06:25.520 | Okay, cool. Really briefly on the stack that we used and on the team that we built. So the first

00:06:32.160 | thing, again, there's a lot of LangChain in here. That is not because other frameworks can't do this.

00:06:37.440 | It's not because we couldn't build our own. The number one reason we went with this is because of

00:06:41.280 | how easy it is to explain the system to other people, right? You know, if you look at, and I'll show you

00:06:45.680 | the LangGraph stuff in particular, it was straightforward to go in to our client, you know, on a very early day

00:06:51.200 | in the project and say, "Hey, this is how this thing works." You can see it goes from here to here.

00:06:54.960 | There's loops here. Like this is where we're doing our, you know, our evaluation of the process.

00:06:59.360 | And here's where humans come in. It was very straightforward to do that. And I think it would

00:07:03.920 | have been a lot harder with something that was less visual and frankly, just less well orchestrated.

00:07:08.000 | So we're happy with this, right? There are some trade-offs to the LangChain tools, but they're

00:07:12.160 | mostly things we can live with. We are using Claude in the examples that I'm going to show you here.

00:07:18.880 | But the core code that we wrote works with Gemini, works with OpenAI. There are a few reasons that we

00:07:23.760 | think Claude is better for this. I'll get into that as we go. But, you know, there's, there's no model

00:07:28.320 | specific stuff really happening here. This is almost all just tool calling and MCP and, you know, other

00:07:32.720 | things that are pretty portable across most of the models. The stack overall is not just the LLM piece,

00:07:40.400 | right? So the LLM piece is Python and a LangGraph container. And then the other piece, right,

00:07:45.200 | the piece that is a text message gateway and a database and a dashboard, which I'm going to show you

00:07:49.440 | pretty extensively, is Node and React and MongoDB and Twilio. And the whole thing is hosted in AWS.

00:07:55.760 | None of that has to be that way. That's just what we picked. You know, the main reason we picked AWS

00:08:00.640 | was for, you know, this has to support multiple different regions. We had to be able to deploy

00:08:04.560 | stuff, you know, entirely in Europe in a couple of cases, right? And so we needed to make sure that we

00:08:08.720 | had, you know, a decent set of, you know, cloud connections that we could work with.

00:08:12.400 | Evals. So I will show you the eval system that we built. We were not able to use, or at least I

00:08:20.960 | shouldn't say not able. We chose not to use the stuff entirely off the shelf from LangSmith. This

00:08:25.200 | is partly because I didn't really want to be fully locked into them. I wanted the data to live there.

00:08:28.960 | I wanted to be able to see, you know, the current system in LangSmith. But I wanted to have something

00:08:32.960 | separate. And it turns out that some of what we had to do to make the evals, you know, fundamentally

00:08:38.240 | functional required a lot of pre-processing. So we built an external harness that essentially pulls

00:08:43.280 | data out of LangSmith, processes it, and then runs things through Promptfoo. And one of the reasons we

00:08:48.480 | picked Promptfoo, if anyone's ever worked with it, they have a very flexible, they call it an LLM rubric.

00:08:53.920 | And so this is an LLM as a judge. You basically describe how you want the eval to work. You feed the

00:08:59.200 | data in and, you know, then it gives you a separate sort of visualization for that. So we ended up very

00:09:03.280 | happy with it. It's not the only way to do it at all. It was definitely, you know, the thing that fit best for

00:09:08.080 | us. The team. So there were, and still are, two software engineers, one designer, and me. And I'm

00:09:17.600 | just, I would not call myself a software engineer. That's why I didn't include myself in that pool.

00:09:21.440 | You can imagine there being two software engineers kind of maintaining the core

00:09:25.040 | system that has the gateway and the dashboard and the text message stuff, right? And the database.

00:09:30.960 | I maintained and built basically everything on the LangGraph side, right? So imagine this as being

00:09:37.920 | two separate systems that talk to each other through a well-defined contract. And those two

00:09:42.720 | software engineers understand roughly how my code works, but they really weren't maintaining it. You

00:09:46.320 | know, it was almost entirely me with AI friends. And on that note, so everything I'm going to show

00:09:53.600 | you is the code that I wrote. And I want to be very clear. I haven't been a real software engineer in

00:09:58.080 | a long time. I do have an engineering background. I spent seven years out of college, you know, hacking on

00:10:01.440 | mobile apps. I took 15 years off and went to be a product person. And for about two years now, I've

00:10:08.080 | been back. But what that really means is just that, you know, essentially the stuff you're seeing, right,

00:10:12.800 | or the stuff I'm going to show you is mostly, you know, code that I wrote with Cline. That's my personal

00:10:17.120 | favorite. And so there's a bunch of options here. I like Cline best of all these options. You can use

00:10:23.200 | anything you want. The code isn't actually that complicated. Like, I would estimate, and I haven't

00:10:27.200 | actually counted, but there's probably a few thousand lines of Python, and there's a few

00:10:30.800 | thousand lines of prompt. It's about equal, right? So I vibe-coded the Python, and I mostly hand-coded

00:10:37.600 | the prompt. Not 100%, right? But that's the way to think about the division of labor here.

00:10:42.320 | And for that matter, I mean, any of these tools can be great. The main reason I picked Cline was just

00:10:47.040 | because, you know, we did not need, you know, sort of a hyper-optimized, you know, like $20 a month flow.

00:10:52.960 | Like, I've spent a lot more than $20 a month on tokens. That's just the way it is.

00:10:56.960 | You know, it was worth spending the money to just have sort of the best available

00:10:59.760 | context of the model at any given point. Cline is a very good way to do that.

00:11:02.960 | Okay, and there's a little bit of sample code. So I did mention, and this is in the Slack channel as well,

00:11:08.400 | if you wanted to follow along with any of this, you could sort of do it by standing up your own

00:11:12.560 | little LangGraph container with MCP. You're more than welcome to do that. Everything I'm going to show

00:11:17.040 | you, though, is proprietary client code, so I obviously can't send you those links. So if you'd like to,

00:11:22.000 | feel free to fire it up. We have two hours, which is a really long period of time. If you're

00:11:26.720 | interested in spending a little time at the end of this actually working with some of

00:11:29.440 | this real code, I'm thrilled to do that. So feel free to get yourself ready in the meantime.

00:11:33.600 | Okay, just a couple things up front, just to make sure we're level set in terms of sort of the terms

00:11:40.640 | and kind of the way that we're talking about this stuff. So I do like the LangChain definition

00:11:45.520 | here of agent, basically just because, you know, you'll see what we're doing here is using an LLM to

00:11:51.200 | control the flow, right, of this application. That is literally what this is. And I like this,

00:11:59.280 | and I don't know if Chris is here, he was at the last event in New York. I like this as a way of sort of

00:12:04.000 | justifying the way that we tried to architect this system and why, right? So the idea of the agents in

00:12:11.120 | production, right? You have to know what they're doing, you have to know, you know, that they can do it,

00:12:16.640 | and you have to be able to steer, right? If you only have a couple of these things, you end up with

00:12:21.040 | bad outcomes, right? And so I just like this framing of if you're capable, but you can't tell what it's

00:12:25.280 | doing, it's dangerous. If you know exactly what it's doing, but you can't control it, it does weird

00:12:29.280 | stuff and you can't help. Please go ahead. Oh, let me find that one more. It's right here, actually.

00:12:35.920 | Workshop LangGraph MCP Agents. Got it? Okay. No problem. Okay, and so transparency with no control is

00:12:44.960 | frustrating and control with no capability is useless. I just love this framing. I think this

00:12:49.200 | is exactly the thing that we were trying to solve for. We needed something that was able to do the job,

00:12:53.840 | clear about what it was doing, and that was steerable by humans in a really obvious way.

00:12:57.440 | So with that said, I'm going to start with a case study, right? And this is going to be a little weird

00:13:02.960 | out of context, but hopefully this will give you a sense of what we were trying to solve for. So the idea

00:13:08.560 | here was that there's an existing product, right? So there was a product out there that was essentially

00:13:13.360 | having humans manually push buttons on a console that would enable a text message to go out, right?

00:13:20.400 | So you would read what the patient had said. They could say, I took my medicine at 3 p.m. They could

00:13:25.040 | say, I'm bleeding and I don't know what's going on. Like, am I okay? They could ask other sorts of

00:13:29.920 | questions about the treatment. And a human would have to go into a piece of software and click, you know,

00:13:34.800 | a button that accurately reflected sort of where in the workflow somebody was, right? Because, you know,

00:13:39.680 | you can model a lot of this out. Imagine there being fantastically complicated flow charts of all the

00:13:44.560 | things that can happen during a medical treatment. So the Avila team had built this, right? They realized,

00:13:50.080 | though, that essentially to scale the human team to be able to serve a lot more patients was prohibitive,

00:13:54.400 | right? They needed too many people clicking too many buttons. They also realized they couldn't really

00:13:59.040 | scale the system to new treatments, right? Which was something they wanted to do, that this isn't the only

00:14:02.480 | regimen that you needed to support. They had other ones. And so the idea is that either they were

00:14:07.920 | going to rebuild the legacy software to be more flexible or they were going to essentially rebuild

00:14:12.400 | it to use a different kind of decisioning at the core. And when they were looking at doing this,

00:14:17.200 | you know, LLMs had started, I think, to become capable enough to handle this kind of work.

00:14:21.120 | So what we did is we built for them a workflow and essentially a piece of software that

00:14:27.520 | connects to it that enabled them to do new treatments flexibly, right? So this idea of

00:14:32.320 | essentially defining a blueprint and a knowledge base is the way that we thought about this.

00:14:35.760 | And essentially medically approved language, right? So one of the reasons that you had humans pressing

00:14:40.560 | buttons instead of typing text messages is because this is medical advice, right? You know, you are not,

00:14:46.080 | you should not at least be giving medical advice that differs substantially from this approved language,

00:14:51.120 | right? There's reasons that this stuff is said the way that it's said, you know, and doctors have,

00:14:55.280 | you know, similar limitations. We also built a self-evaluation function, which I'll go into

00:15:01.200 | tremendously in a second. We wanted to make sure that we caught essentially situations that were

00:15:06.320 | complicated and surfaced them for humans, right? Because we wanted to have a human in the loop.

00:15:10.960 | But we were trying to raise up the existing folks who were really just operating the system and clicking

00:15:15.440 | all those buttons to be supervisors of agents that were doing that instead, right? That really was the

00:15:20.800 | model that we were working with at its core. So I have a question over here. Yeah?

00:15:23.840 | You may have said it. Were these operators medically trained?

00:15:26.560 | So the question is, are these operators medically trained?

00:15:29.840 | There is a physician's assistant who essentially leads the operations team. So the way that you can

00:15:34.400 | think about it is that she would be escalated to whenever something came up that was outside of the

00:15:39.280 | blueprint, right? So if you had a situation where they're just like, "I'm really not sure what to do

00:15:42.400 | here." A Slack message goes out to that channel with the physician's assistant in it, who would then give

00:15:46.400 | medical advice. So again, this is one of the reasons it was hard to scale, right? Because you only had one

00:15:51.200 | of those people on this particular team. Sure. And so to sort of jump a little bit ahead, but hopefully

00:15:58.640 | you'll see why this is in a minute, this roughly, and again, we're still doing the measurement, right?

00:16:03.280 | We're still trying to figure out exactly what capacity has gone up to. We think it's something like

00:16:08.320 | 10x. We think that they can serve roughly 10x more people with this new approach.

00:16:12.720 | Now, it's not free, right? We have to build the software. We have to pay for the tokens. Tokens can

00:16:17.600 | get expensive. But if you think about just the scale issues involved in scaling up a team of people

00:16:22.320 | and, again, in building the software to be more flexible for more treatments, we think this capacity

00:16:26.560 | increase is very much warranted and very much the thing that solves the problem. And you can do new

00:16:33.440 | treatments and new workflows without writing more code, right? That was the single biggest thing about

00:16:37.200 | this. And you'll see what we're doing here is largely Google Docs, right? And, you know,

00:16:40.720 | we have some more advanced techniques to manage those things and version them over time. But we're

00:16:45.120 | talking about being able to support whole new treatments and whole new workflows without going

00:16:48.240 | back to the code, right? That's hugely valuable to these guys. Question?

00:16:52.880 | You mentioned velocity increasing like 10x. Is there any measuring about quality of care?

00:16:58.320 | So the question was velocity increases. Is there a quality of care measure? Short answer is it's early,

00:17:04.720 | right? I mean, this is still a system that's, you know, in progress. It is being used with real people,

00:17:08.240 | but it's still very, very much early on that. The way that I think we're looking at it is that

00:17:12.240 | there would be some combination of the operators being the ultimate arbiter, right? They're going to be

00:17:16.720 | able to see these conversations and determine as they approve them, you know, as they review them, like,

00:17:20.480 | hey, is this mostly getting it right? And then there are sort of existing kind of CSAT, you know, level of measures that you can

00:17:25.840 | apply to the people who are on the other end of the treatment.

00:17:28.800 | So 10x sounds a little low. Is that because the operators are still approving everything that comes

00:17:32.640 | out right now? So they're not approving everything that comes out. And I agree, the 10x is kind of,

00:17:36.560 | it's an order of magnitude, not a precise measure, right? But I think in this case,

00:17:40.640 | you'll see a couple of cases that require approval, right? And sort of why. But the approval also is

00:17:45.360 | very quick, right? So the argument is that you probably only see one of every 10 exchanges. And when you see it,

00:17:50.960 | it takes you roughly as long as it took the last time to just push the button, right? Which was the thing they were

00:17:55.120 | already doing. So that's kind of why we've benchmarked it there. All right. So let's get into it a little

00:18:02.240 | bit. So this is just a snapshot of what this looks like in LangGraph. I'll show you the real thing in

00:18:07.040 | just a minute. And it's actually evolved a tiny bit since I took this picture. But really, what we're

00:18:11.440 | talking about here is the people who operate the system today, we call them operations associates.

00:18:16.240 | So what this is really doing is introducing a virtual operations associate. That operations associate is going to

00:18:22.160 | assess the state of essentially a conversation, interaction with a patient, determine what the

00:18:28.240 | best response is, both in terms of the text message you might send, the questions you might ask, the

00:18:33.840 | actions you might take. Because some of this is about maintaining essentially a state for that patient,

00:18:38.240 | right? You know, you are at any given point trying to figure out, when is this person taking their

00:18:43.200 | medicine? When did they take their medicine? You know, what medicine do they have? What time is it for them,

00:18:48.720 | which is actually more important than you may think? All of this has to be maintained, right,

00:18:52.640 | by the system. And so the virtual OA is doing all that work. And then it's passing essentially its

00:18:57.760 | proposal, right? It basically comes up with, I think this is what we should do. And it passes it to an

00:19:02.800 | evaluator agent. So there's a live LLM as a judge process, separate from the evals, which we'll get to.

00:19:08.480 | But the live LLM as a judge is essentially saying, okay, given this thing that just happened,

00:19:13.280 | here is our assessment of A, you know, how right the LLM thinks it is. That's frankly very challenging.

00:19:19.440 | LLMs are very hard to convince that they're wrong about anything. But it also is looking at the

00:19:24.080 | complexity, right? So even if the LLM believes it's made all the right decisions, you can have it

00:19:27.600 | impartially say, well, I changed this and I changed that and I'm scheduling a bunch of messages. That's

00:19:31.840 | complicated. Maybe a human should look at this, right? So that's actually a lot easier to implement.
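A minimal sketch of the complexity check described above. In the talk this judgment is made by an LLM evaluator; the sketch substitutes a plain counting rule purely to illustrate the idea. The `Proposal` shape, the field names, and both thresholds are hypothetical and are not taken from the client's code.

```python
from dataclasses import dataclass, field


@dataclass
class Proposal:
    """What the virtual OA proposes for one exchange (hypothetical shape)."""
    reply_text: str
    state_changes: list[str] = field(default_factory=list)
    scheduled_messages: list[str] = field(default_factory=list)
    self_confidence: float = 1.0  # the model's own rating, 0.0 to 1.0


def complexity_score(p: Proposal) -> int:
    # Count the side effects: every state change and every scheduled message.
    return len(p.state_changes) + len(p.scheduled_messages)


def needs_human_review(p: Proposal, max_complexity: int = 2,
                       min_confidence: float = 0.8) -> bool:
    # Escalate when the proposal does a lot, even if the model is confident,
    # or when the model itself reports low confidence.
    return complexity_score(p) > max_complexity or p.self_confidence < min_confidence


simple = Proposal("Thanks, I've noted that you took your medicine at 3 p.m.")
busy = Proposal(
    "Got it. I've updated your schedule.",
    state_changes=["dose_time", "timezone"],
    scheduled_messages=["check-in tomorrow", "reminder in 6 hours"],
)
simple_flag = needs_human_review(simple)
busy_flag = needs_human_review(busy)
```

The point of the second condition is the one made in the talk: a count of changes does not depend on the model admitting it might be wrong.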

00:19:37.840 | And both of these things are calling tools. The tools are a mix of MCP. And so there's sort of

00:19:44.400 | two versions of MCP here. I'm going to show you one, which is basically just looking at local files,

00:19:48.560 | just so I can show you all the stuff in my environment. But there's also MCP going across

00:19:52.480 | the wire to the larger software system and keeping all this stuff in the database, right? So there's a

00:19:56.720 | mix of those two things. And the rest of the tools are about maintaining state. Because as a conversation

00:20:03.440 | is happening, the LLM needs to know, you know, essentially, well, I made this update and that

00:20:08.000 | update. And here's the current state that I'm working with. And it has to be able to sort of

00:20:11.440 | manipulate these things in real time. That is not MCP. That's not going to a database anywhere. Like,

00:20:15.520 | this is happening entirely in sort of the live thread. And then once it finishes, then it gets pushed out

00:20:20.080 | and essentially saved away. Okay? Again, we'll get into a lot more of that. I did want to spend a minute on

00:20:27.440 | this on the system architecture, right? And so I realized it was a little small. Go ahead.

00:20:30.720 | When you mentioned the system state, I heard before that LangGraph has a context,

00:20:36.720 | or some sort of state object built into LangGraph itself. You mentioned that you use tools. Are you

00:20:41.520 | talking about separate things or the same thing? The question was about how the state is managed

00:20:45.680 | in LangGraph. So short answer is, this may be one of the things where I'm not doing it optimally,

00:20:50.800 | by the way. But with LangGraph, there is a state object that we load, essentially, when the request comes in,

00:20:56.960 | from a JSON blob, right? We keep it alive inside the graph run. It is not directly accessible to the

00:21:03.840 | model, right? At least not the way that we're doing it, right? So you'll see, actually, as we get into

00:21:08.400 | this, that you can see all the state coming in in LangSmith, right? I can see, like, hey, this is the

00:21:12.160 | whole thing that was loaded. I still have to repeat that in my first message to Claude, right? It doesn't

00:21:17.360 | actually show up, you know, in the same place. And then I call the functions. That state will evolve in terms of

00:21:22.560 | what's inside the graph run. And then when it outputs, it's the Python code, not the model,

00:21:27.040 | which essentially takes all that state and then serializes it and sends it out.
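The lifecycle described in this answer can be sketched roughly as follows, using only the standard library rather than LangGraph itself. Every name here (`load_state`, `record_dose_time`, the `doses` field, the sample patient ID) is an assumption made for illustration; the talk does not show the actual state schema or tool signatures.

```python
import json


def load_state(blob: str) -> dict:
    # The request arrives with the patient state as a JSON blob.
    return json.loads(blob)


def render_state_for_model(state: dict) -> str:
    # The model cannot read the state object directly, so the state is
    # repeated as text in the first message sent to it.
    return "Current patient state:\n" + json.dumps(state, indent=2, sort_keys=True)


def record_dose_time(state: dict, taken_at: str) -> str:
    # A state tool: it mutates the in-run state and returns a short
    # confirmation string for the model to read.
    state["doses"].append(taken_at)
    return f"Recorded dose at {taken_at}"


def dump_state(state: dict) -> str:
    # At the end of the run, plain Python code (not the model) serializes the state.
    return json.dumps(state, sort_keys=True)


incoming = '{"patient_id": "p-123", "timezone": "America/New_York", "doses": []}'
state = load_state(incoming)
first_message = render_state_for_model(state)
tool_result = record_dose_time(state, "2025-01-01T15:00")
outgoing = dump_state(state)
```

Keeping serialization in code rather than asking the model to emit the final state means the model only has to make the right tool calls, not reproduce a full JSON document correctly.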

00:21:31.200 | So you'll see how it works. But like, that's generally one of the things that I'm not sure I'm

00:21:35.280 | doing right. Anything else? Yep? Yeah, somewhat related. You have one node for that virtual OA,

00:21:41.360 | and it's going back and forth to tools, right? And updating the state. Yeah. I'm assuming the reason

00:21:45.840 | you haven't in some form hard-coded that business logic out into separate nodes is precisely because

00:21:51.520 | you'd lose the workflow-agnostic agent for the next time. Is that sort of the notion there?

00:21:56.960 | Yeah. So the question is why, essentially, the virtual OA is one agent and not, you know,

00:22:01.520 | a sort of a pre-coded sort of version of here's how I administer the specific treatment.

00:22:05.600 | Yes, the reason I think we kept it simple is because we did not want to be super

00:22:09.920 | treatment specific in how the architecture worked. But you could imagine doing, you know,

00:22:14.000 | a set of slightly smaller, you know, better tuned agents that were, you know, kind of taking care

00:22:18.640 | of elements of the task that were still pretty generic. The main reason I think it's not optimal to do that

00:22:24.240 | is caching. And this is another question where, you know, I think I'm doing this right, but there

00:22:28.960 | are a lot of variations here. Caching the entire message stream is easier with either one agent or with

00:22:35.440 | sort of one agent doing most of the work. We're using Claude. Claude has very explicit

00:22:39.840 | caching mechanisms. And every time I switch the system prompt, I think the cache blows up.

00:22:45.040 | And so fundamentally changing the agent identity does that. So that was one, that's one reason we

00:22:50.000 | chose that. It's certainly not, you know, a hard and fast forever choice.
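A toy model of why switching the system prompt loses the cache, assuming the cache is keyed on the exact prefix of the request (system prompt first, then each message in order). This is a deliberately simplified illustration, not the provider's actual implementation; `PrefixCache` and `prefix_keys` are hypothetical names.

```python
import hashlib


def prefix_keys(system_prompt: str, messages: list[str]) -> list[str]:
    # One key per prefix: the system prompt alone, then the system prompt
    # plus each additional message in order.
    h = hashlib.sha256(system_prompt.encode())
    keys = [h.hexdigest()]
    for m in messages:
        h.update(b"\x00" + m.encode())
        keys.append(h.hexdigest())
    return keys


class PrefixCache:
    def __init__(self) -> None:
        self._seen: set[str] = set()

    def request(self, system_prompt: str, messages: list[str]) -> int:
        # Return how many leading blocks (system prompt + messages) were
        # already cached, then remember every prefix of this request.
        keys = prefix_keys(system_prompt, messages)
        hits = 0
        for k in keys:
            if k not in self._seen:
                break
            hits += 1
        self._seen.update(keys)
        return hits


cache = PrefixCache()
history = ["patient: I took my medicine at 3 p.m.", "agent: Thanks, noted."]
follow_up = history + ["patient: Is this amount of bleeding normal?"]

first = cache.request("You are the virtual OA.", history)
second = cache.request("You are the virtual OA.", follow_up)
switched = cache.request("You are the evaluator.", follow_up)
```

In this model the same agent continuing a conversation reuses everything it sent before, while a second agent with a different system prompt shares no prefix at all, which is the trade-off cited for keeping most of the work in one agent.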

00:22:53.360 | What's the duration of the, like, care? Are we talking like months? Or like how many messages?

00:23:04.240 | Yeah. So this use case, the early pregnancy loss, it tends to be a treatment which takes,

00:23:10.080 | I think, three days end-to-end to administer most of the time. And then there's a check-in after that,

00:23:14.160 | right? So imagine that probably within a week, the entire interaction with that patient is done,

00:23:18.400 | unless they come back and just have questions later on, right? You know, there are some variants of this,

00:23:22.240 | where you take a pregnancy test after six weeks, right? And so that's all fine.

00:23:26.000 | The message history is preserved, but the computation that happens to generate each message is not,

00:23:32.880 | or at least not in sort of the state that we save. So like, you know, the most complicated conversation

00:23:38.320 | I've seen was something like 150 texts. It's a lot in terms of, you know, a human keeping it in their

00:23:43.120 | brain. It's not that bad for an LLM, right? So, but it's that level.

00:23:48.960 | All right. So again, just to point out where the lines are here, right? So I kind of got off on a

00:23:54.560 | tangent. The top box is what we're going to be looking at here today, right? It's really a Python

00:23:58.640 | container with access locally to these blueprints, this knowledge base, right? We are also then

00:24:04.320 | maintaining some stuff over across the wire in this blue container. That's really where the dashboard I'm

00:24:08.400 | going to show you is. It's where the text message gateway is. And it is where we're going to be moving,

00:24:12.160 | I think, a lot of that context, right? Although the blueprints, like all that stuff really should live

00:24:15.920 | kind of in the more durable software container. Right now it lives, you know, close to the Python.

00:24:19.520 | Okay. So let's get into it. So the first thing I'll do here is just to show you kind of at a high

00:24:27.760 | level what the software looks like. So this again is the console, the dashboard, right? The thing that

00:24:34.960 | the operations associates, the humans are going to be looking at. And I'll show a couple things here just

00:24:39.360 | to give you the sort of baseline, right? So the first thing here is this needs attention. So the current

00:24:43.600 | system basically has this needs attention flashing all the time. Every time a text message comes in

00:24:48.800 | from any patient, this thing is going off, right? You know, so there's, you know, hundreds of patients,

00:24:52.960 | thousands of patients in the system at any time. So, you know, this needs attention used to be something

00:24:57.840 | that multiple people were having to stare at constantly, right? Just to make sure that they

00:25:01.200 | caught everything so that they could get out messages in a reasonable time. Now, needs attention is

00:25:06.080 | really, you know, just sort of one thing at a time, right? And if I look here at the conversations,

00:25:09.920 | there we go, you can see that the top one here actually needs a response. I'll get to that in a

00:25:13.520 | minute. But at any given point, right, this is my test environment, you know, I've got a handful of

00:25:17.120 | these conversations kind of already, already queued up. What I can see here, if I click into these things,

00:25:22.480 | is essentially, I'll just go back to the beginning here for the whole message history, and I'm going to

00:25:26.080 | toggle this rationale on. What you're seeing is the entire conversation, is that readable? Let me see if I

00:25:32.960 | blow it up a little bit. Is that a little better? Okay. So the idea here is the agent is named Ava, right?

00:25:40.320 | That's the personality that people are interacting with. This language is all coming out of these

00:25:46.080 | blueprints, right, that I'll show you. And so this first message is just an initial message sent by the

00:25:50.240 | system, essentially, just to kick things off. So imagine someone is, they have a package of medicine in

00:25:54.560 | their hand, they scan a QR code. They put in their phone number, they get this text message, right? And then they

00:25:59.760 | start talking. So you can see here, the kinds of things a patient is going to say are, you know,

00:26:04.400 | freeform text, right? You know, this, I mean, they could say yes in any number of ways. The old system

00:26:09.840 | used to have literally different buttons for yes, like, yes, I have the medicine. Yes, I heard you. I

00:26:15.840 | mean, it's like, there's all sorts of variants, right? And because you did have to respond differently

00:26:19.280 | depending on what those things were. What we're able to do here is really just take, you know, these

00:26:24.160 | freeform answers, interpret them, and then essentially provide a rationale for why you would

00:26:29.680 | say a given thing at a given time, right? So this is equivalent to if you were doing this with a

00:26:33.520 | human, and you asked the human, well, why did you say this? The LLM can provide this kind of context.

00:26:37.920 | So this is Claude looking at the history here, and I'll show you what this looks like in LangSmith,

00:26:41.760 | which will make it a lot more obvious, and then saying, okay, here's the next thing that I should

00:26:46.400 | say, and my confidence that I should say it is 100%, right? It's usually very confident, right? But the

00:26:52.000 | point is, this whole process is largely going to go along in an automated fashion, right? You don't usually need

00:26:57.520 | humans involved because this is a very straightforward thing. They have their medicine.

00:27:00.880 | The next thing I need to know, and this is a very interesting part of this treatment,

00:27:04.000 | I need to know what time it is. These are text messages. We don't know anything about these people.

00:27:08.320 | For a variety of reasons, it's kind of good that we don't know much about them, right? We don't want

00:27:11.280 | to have to deal with all of the stuff around provider confidentiality and patient data, right? So one of the

00:27:16.160 | things that we need if we're going to go through this longitudinal treatment is to figure out what time it is for

00:27:20.000 | them, and then essentially pull out that data and figure out what their local time is, right? So in this case,

00:27:24.960 | I was in Eastern time when I answered these questions. This is all me doing this, you know, from my laptop.

00:27:29.280 | I tell it what time it is. It calculates an offset from UTC and says, well, I guess you're in Eastern time, right?

00:27:34.800 | And then it sets this over here and it says, all right, from now on, I know that my patient is in Eastern time

00:27:38.800 | unless they tell me otherwise. And they could come back and tell you otherwise, right? That's something the old system

00:27:43.680 | really didn't have a good way to do. But if the patient comes back and says, I'm on a plane,

00:27:47.440 | it's actually seven for me, we just update the time zone and move on, right? This is a very flexible system that way.
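
The offset calculation described here can be sketched in a few lines. This is a minimal illustration, assuming the patient reports a wall-clock time and the server knows the current UTC time; `infer_utc_offset` is a hypothetical helper, and rounding to 15 minutes is an assumption made to absorb typing delay and imprecise answers.

```python
from datetime import datetime, time, timedelta, timezone


def infer_utc_offset(reported: time, now_utc: datetime) -> timedelta:
    """Estimate the patient's UTC offset from the local time they reported."""
    reported_min = reported.hour * 60 + reported.minute
    utc_min = now_utc.hour * 60 + now_utc.minute
    diff = reported_min - utc_min
    # Snap to the nearest quarter hour, since real offsets are multiples of 15 minutes.
    diff = round(diff / 15) * 15
    # Wrap into the range [-12h, +12h) so a date rollover does not skew the result.
    diff = (diff + 720) % 1440 - 720
    return timedelta(minutes=diff)


# The patient says "5:43pm" while the server clock reads 21:45 UTC.
eastern = infer_utc_offset(time(17, 43), datetime(2025, 6, 3, 21, 45, tzinfo=timezone.utc))

# Later they say "it's actually seven for me" at 02:00 UTC the next day.
on_a_plane = infer_utc_offset(time(19, 0), datetime(2025, 6, 4, 2, 0, tzinfo=timezone.utc))
```

The wrap step means offsets of +13 or +14 hours would be read as negative ones, so a real system would need another signal for those zones. Re-running the function on a new answer is all the "update the time zone and move on" step requires.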

00:27:52.880 | Then we get into this over here and we say, okay, now that I know what time it is,

00:27:57.200 | I'm going to ask them if they've started their treatment, right? And, you know, there is a blueprint,

00:28:01.920 | right, which we'll get to, you know, that essentially just has, you know, the medicine that they're going to

00:28:05.600 | take and a very specific way to take it, right? The protocol. The patient says, well, no, I want to take

00:28:11.040 | it soon. You know, Ava says, cool, I'll text you when we're ready. And then it gives, you know, a regimen,

00:28:15.600 | which in this case, this is an SVG that we are stapling times and dates on top of, right? So,

00:28:21.040 | you know, fairly straightforward. We're doing this in software. The LLM is not doing it. The LLM is

00:28:24.560 | actually just passing along the instructions. You know, it says, send the step one image and provide,

00:28:30.240 | you know, like this date and this time, and we substitute the rest of it in. And this goes out as

00:28:33.840 | an MMS, right? So this is a text message. And so we provide this. The patient, you know,

00:28:40.000 | says, you know, in this case, we're talking, you know, again, this is all the LLM reasoning through

00:28:43.680 | this, right? You know, I am sending this immediately because it's actually within sort of the 35 minute

00:28:49.120 | window that you've told me that I have to send these things. This is all business logic that the LLM is

00:28:53.760 | interpreting pretty much on the fly. And then I have these reminders, right? I didn't get back to it.

00:28:59.040 | So this is an important part. It sent me this thing and it thought that I was going to take it at 5:45.

00:29:03.440 | I didn't text it back, right? This is partly because I was maintaining the system myself and

00:29:07.040 | I forgot. So I had to come back in the next day and catch up. So it sent me an automated reminder

00:29:11.360 | because it scheduled one when it sent the first message. So part of this is the LLM only gets

00:29:15.680 | called when the patient says anything. So if they don't, you know, you have to make sure that you

00:29:20.080 | stay engaged, right? You don't do this overly. Like we don't try to bother people beyond one or two

00:29:23.920 | reminders. It's their treatment. But this bump sort of functionality was really important to the

00:29:28.560 | client, right? So we built it in. So you can see here, I came back the next day and I said,

00:29:33.040 | yep, sorry, I did take it. You know, Ava confirms that I completed step one. And what it does is

00:29:37.680 | it sets this thing called an anchor, right? And it says, okay, you know, the patient was going to take

00:29:42.320 | it at 5:45. They confirmed that they did. And so now, you know, I can refer back to this. I know that

00:29:46.640 | this happened, right? And if the patient had then said, oh, no, I screwed up. I actually haven't taken

00:29:50.640 | it. I'll take it today. We just change the anchor. We update everything, right? So this is something

00:29:54.640 | that humans used to have to do. If a patient came back and said, I didn't take my medicine,

00:29:59.280 | you know, a human had to go in and manually update all the times and all the scheduled messages. And

00:30:03.600 | it was, it was a big pain in the butt. Um, yeah, please.
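
One way to get the "change the anchor, everything updates" behavior is to store scheduled messages as offsets from a named anchor rather than as absolute times. The sketch below assumes that design; the class names, the `step_one_taken` anchor, and the one-hour offset are all hypothetical and not taken from the system shown in the talk.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class ScheduledMessage:
    text: str
    anchor: str          # name of the anchor this message is relative to
    offset: timedelta    # how long after the anchor it should go out


@dataclass
class Schedule:
    anchors: dict[str, datetime] = field(default_factory=dict)
    pending: list[ScheduledMessage] = field(default_factory=list)

    def set_anchor(self, name: str, when: datetime) -> None:
        """Record or move an anchor; no message needs editing by hand."""
        self.anchors[name] = when

    def send_times(self) -> list[tuple[datetime, str]]:
        """Resolve every pending message against the current anchors."""
        return sorted(
            (self.anchors[m.anchor] + m.offset, m.text)
            for m in self.pending
            if m.anchor in self.anchors
        )


schedule = Schedule()
schedule.pending.append(
    ScheduledMessage("Did you take step one?", "step_one_taken", timedelta(hours=1))
)
schedule.set_anchor("step_one_taken", datetime(2025, 6, 3, 17, 45))
before = schedule.send_times()

# The patient says they will take it a day later instead: move the anchor.
schedule.set_anchor("step_one_taken", datetime(2025, 6, 4, 17, 45))
after = schedule.send_times()
```

Because send times are derived on demand, moving one anchor shifts every dependent reminder, which is the manual work the operations associates used to do.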

00:30:16.240 | Not exactly. Um, the way that we do state and I'll, I'll spend a lot of time on this,

00:30:21.200 | but the way that we do state is really just that with any given message from the patient,

00:30:25.120 | right? This entire system only kicks off when the patient sends a message. Um, what we do is we say,

00:30:31.040 | all right, given this state, what is the best response? And that response could be,

00:30:35.440 | I change some of these anchors. I update their treatment phase. I schedule a bunch of messages.

00:30:40.080 | All that state is preserved so that the next time they write in, then, you know, we have that state

00:30:44.960 | to go on. Um, but again, we're not checking, right? There's no polling going on in the system

00:30:49.280 | where we're saying after three hours, did the patient text me back? We don't do that. We depend

00:30:53.440 | on the scheduled messages essentially just to nudge the patient. Um, if they choose to not say anything

00:30:58.560 | for three days and they come back after three days, we just pick up where we left off. Um, again,

00:31:02.640 | this is a choice. This is the way the client wants it. It's, it's intended to be low enough touch that

00:31:07.040 | it doesn't bother people, but high enough touch that it doesn't lose track. Sure. Um, I'll pause here,

00:31:12.800 | actually. Any other questions so far? Um, I, I realize I'm going through a lot. Yes.

00:31:15.920 | Do your anchors have to be sequential or can your user come in at any point in the treatment plan?

00:31:22.080 | They can. Great question. So the question was, do the anchors have to be sequential? Um, or like,

00:31:26.160 | do you have to go through these one step at a time? So one of the great things, one of the best things

00:31:29.520 | about this system is that I could have, and I'm happy to try this when we go a bit later, I could

00:31:34.640 | have basically said, oh yeah, I already took the first pill and I'm like in the middle of taking the

00:31:37.760 | second pill, you know, as like the first thing I say to, to Ava and she would be like, okay,

00:31:41.920 | cool. There's an anchor. Here's the next thing. It skips ahead and it doesn't force you to go through

00:31:45.840 | this prescriptive part of the blueprint. Whereas the old system, you know, at least nominally did,

00:31:49.520 | right? Like you could, you could kind of skip ahead, but this automatically does it. You know,

00:31:52.800 | part of the instructions are don't ask the patient a question they've already answered,

00:31:56.240 | like period. Right? But that's annoying. Don't do that. Um, so, so yes, that's, that's very much in there.

00:32:01.040 | Anything else? Yeah.

00:32:03.600 | Does the LLM itself have a concept of the internal state machine that is kind of

00:32:08.560 | determining all of this? Or is that kind of outsourced to the actual software stack of the LLM?

00:32:14.240 | Yeah. Yeah. So question is,

00:32:16.400 | does the LLM have an internal representation of the state? Um, kind of, sort of. So you'll,

00:32:20.320 | you'll see, um, when we get into the, the, the actual back and forth with Claude, um, in LangSmith,

00:32:26.000 | you as a human can see kind of where it starts, right? So every thread is going to show like,

00:32:30.080 | all right, here's the incoming state. We repeat it essentially to Claude. Again,

00:32:33.680 | that's just one dot I've never managed to connect with LangGraph, right? So we basically

00:32:36.880 | have to serialize the state and say, this is your, this is your starting point. But then the LLM has

00:32:40.960 | that in its window. And then, you know, it's going to cause changes to the state. It'll call functions

00:32:46.000 | that update the state. It can always ask again. It can say, well, what's the current state? You know,

00:32:49.600 | it can go back and retrieve it. Um, but in the context of that one, from when the patient responded,

00:32:54.960 | you know, to when I actually come up with my response to them, that whole thing is going to be in its memory at one moment.

00:32:59.680 | So the, the short answer is the question was, um, do we ever run into the state being too big?

00:33:10.640 | Uh, generally speaking, because of the way that we're kind of compressing and serializing at the

00:33:15.440 | end of the conversations, it doesn't ever get so big that it can't finish its job of responding to

00:33:20.400 | one situation, right? You know, like patient said this, now I'm going to do this. We have considered

00:33:26.320 | having longer running threads where you kind of pick up in the middle and you've already,

00:33:29.040 | you can reload sort of the entire previous conversation. That does get weird, right?

00:33:32.800 | Especially with older Claudes, you would get it forgetting to sort of call tools the right way and

00:33:37.200 | have all sorts of JSON errors, right? We have a bunch of retry logic in there to kind of compensate for

00:33:40.880 | that. Um, so that's one reason we kept it short. We make it so that we basically throw everything out

00:33:45.920 | and restart when the patient gets back to us in part because blueprints could change, right? You know,

00:33:50.560 | a bunch of things could change in the meantime that might end up with weird states.
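
The retry logic mentioned for malformed tool calls is not shown in the talk. A minimal sketch of one common shape for it follows, assuming the model is reachable through some `call_model(prompt) -> str` function (a hypothetical stand-in, as is the wording of the corrective message).

```python
import json
from typing import Any, Callable


def call_with_json_retry(
    call_model: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> Any:
    """Call the model until its reply parses as JSON, feeding the error back."""
    last_error = None
    for _ in range(max_attempts):
        raw = call_model(prompt)
        try:
            return json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = exc
            # Tell the model what went wrong so the next attempt can correct it.
            prompt = (
                f"{prompt}\n\nYour last reply was not valid JSON "
                f"({exc.msg}). Reply with JSON only."
            )
    raise ValueError(f"no valid JSON after {max_attempts} attempts") from last_error


# Fake model for demonstration: the first reply is truncated, the second is valid.
replies = iter(['{"tool": "set_anchor"', '{"tool": "set_anchor"}'])
result = call_with_json_retry(lambda _prompt: next(replies), "Pick a tool.")
```

Keeping each run short, as described above, also limits how much broken history a retry has to carry along.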

00:33:54.000 | So on the management of states and taking decisions what to do next and so on,

00:34:00.000 | this is 100% LLM driven or there's some software like logic around it as well?

00:34:07.040 | It's 100% LLM driven. Uh, sorry, the question was, uh, is the, is the steering done by software,

00:34:12.160 | right? Any, any of that steering? The answer is really no, it's not, um, except for when it surfaces to a

00:34:17.200 | human, right? And so when it goes to a human for approval, the human can use English and basically

00:34:21.840 | say, yeah, change that word to that and that message shouldn't go out and you know, whatever.

00:34:26.400 | So like we, we actually, as part of the flexibility part, we are not building any software that manages

00:34:32.240 | the state. We just want you to talk to the LLM to do it, right? We think that's a better practice,

00:34:36.400 | right? It means like, you know, you as a human just have to talk to it and you don't have to figure

00:34:40.000 | out how to flip all the bits on this new console.

00:34:41.840 | I'm sorry. What was the first question?

00:34:53.280 | Do you have any RAG system?

00:34:55.360 | RAGs? I'm sorry. I just don't understand.

00:34:58.320 | RAG, like retrieval?

00:34:59.920 | Oh, oh, got it. Sorry. So, um, question was, is there a RAG? Uh, no, there's not. And, and it's actually

00:35:06.240 | just because what we really did is we just came up with a structure for the documents that was

00:35:10.480 | self-referential. So you read a very small document which says, here's the treatment,

00:35:14.560 | right? If you need to read for this phase, go to this file, right? If you need to read for this phase,

00:35:17.760 | go to this file. If you have a question that doesn't fall underneath any of those things,

00:35:20.960 | here's a CSV with a bunch of questions and answers. We didn't do it as RAG in part because we didn't

00:35:27.040 | believe that we could do a really good job of getting all the right information into the window,

00:35:31.200 | like we didn't think we'd be reliable enough about that. We just want to give the entire document.

00:35:34.720 | They're not that big. Um, and because these, this is Claude, right? It's, it's got a big enough

00:35:39.040 | window that we could just put the entire thing in there, you know, for, for most treatments.

00:35:42.160 | Um, so we chose to do that. What was your second question though?

00:35:44.000 | Uh, if the patient is going off a typical journey, uh, how do you detect and intercept?

00:35:50.000 | Right. So the question is if the patient goes off track. So we, we have this idea of a blueprint,

00:35:54.640 | but then there are plenty of cases where the blueprint may, um, you know, not fully answer whatever the

00:35:59.760 | patient is, is, is bringing up. Um, like one example is the blueprint is very much about asking questions,

00:36:05.200 | right? So you will say, have you taken your medicine yet? When do you plan to take your

00:36:09.120 | medicine? The patient will say, my stomach hurts. Okay. So yes, your stomach hurts. You didn't answer

00:36:15.520 | the question. What we do is the patient typically will get an answer to their question. So one of the

00:36:19.920 | principles is always answer the patient's question, right? We don't ever want to leave them hanging,

00:36:24.000 | but then ask yours again. So the idea is that at any given point, we can answer anything that they need

00:36:29.280 | and as gently as we can, we'll try to pull them back onto the blueprint so that we understand where they are in

00:36:33.040 | the treatment. Um, it's not an exact science. But, uh, is there a way to detect if, uh, someone

00:36:40.000 | actually was off track? Well, the LLM does that effectively by knowing that it's supposed to keep

00:36:45.840 | people on the blueprint, but having an escape hatch for the knowledge base, essentially what we call

00:36:49.440 | it, right? Triage or knowledge base, you know, whatever you want to call it. Um, so, you know, we,

00:36:53.520 | we don't have an explicit bit sort of flipped in the system that will say this patient is off track.

00:36:59.120 | We just kind of know roughly where they are in the treatment. And if they want to answer,

00:37:02.480 | if they want to ask a bunch of questions, we'll, we'll just answer them until they, they are satisfied.

00:37:05.760 | Okay. Uh, seeing this. Yeah.

00:37:08.720 | So if you were building it again today, or actually the question is twofold.

00:37:14.320 | Yeah.

00:37:14.640 | What drove you to actually use LangChain? And second, if you were building it today,

00:37:19.760 | would you still use LangChain?

00:37:21.440 | So the question is why did we choose LangChain and would we still, um, I will be very candid

00:37:27.280 | that the main reason that I chose LangChain is that I had personally gotten pretty comfortable

00:37:30.960 | with LangGraph as, as a demonstration of these concepts, right? It's not that CrewAI, I mean,

00:37:35.840 | we did a lot of AutoGen work back in the earlier days, right? You know, I've, I've done a little bit

00:37:39.040 | with CrewAI. All of those frameworks can functionally do very similar things. LangGraph was the absolute

00:37:44.720 | best at explaining to people who were not neck deep in this stuff, how it worked. Um, and because there

00:37:50.000 | was a path to production from there, I didn't feel a need to, to re-platform and change all of it.

00:37:54.000 | We certainly thought about it, right? We considered, well, what if we didn't do this in LangGraph,

00:37:57.040 | what would we gain? And the, but the answer is you still have to implement observability in certain

00:38:01.360 | ways. You know, you don't necessarily get, you know, the support that you might get from LangChain

00:38:05.280 | if you end up in a place, remember that we're also doing this for clients. We're not going to be there forever.

00:38:08.800 | Um, leaving them with something that they can call, you know, somebody to, to support is also

00:38:13.040 | a helpful aspect. So I think, I, I don't think I'd do it differently. I think it's really just that,

00:38:18.960 | you know, ultimately, you know, we're getting pushed, all of us, in the direction of using the native

00:38:24.400 | model tools for this, right? You know, OpenAI has the responses API, which lets you define tools.

00:38:29.200 | Claude has its new stuff, right? Like, I don't really want to be locked in. Um, I, I am to some

00:38:34.560 | degree locked into LangChain now, but I, I prefer that honestly to being locked into the models.

00:38:38.560 | Um, these are, these are not performance intensive things we're doing in terms of the software,

00:38:42.320 | right? Like, you know, I don't care that LangChain is sometimes a little slow. Um, I would rather

00:38:46.640 | have the optionality. So, you said you are not using RAG in such documents. So, as the documents scale,

00:38:54.640 | how, how do you, like, fetch those in a deterministic way if it's not RAG?

00:38:59.520 | Uh, it, it, it is just that they have, uh, sorry, the question was about, um, if it's not RAG,

00:39:03.600 | how do we fetch documents? The documents refer to each other. So you, you'll see that we have an

00:39:08.000 | overview.md, right? This is all in markdown. Um, there's an overview.md that tells you what

00:39:13.600 | other documents are involved in the treatment, right? There's some of the prompting which says

00:39:17.520 | you can always request a triage overview, right? To, to try to handle problems, um, and it'll be there,

00:39:23.120 | right? Regardless of what the treatment is. So it, it is very much just a document management thing.
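
The "documents refer to each other" approach can be sketched as a loader that starts at `overview.md` and follows local references. The talk does not say how references are written, so this sketch assumes ordinary Markdown links; `load_treatment`, `step_one.md`, and `faq.csv` are hypothetical names.

```python
import re
import tempfile
from pathlib import Path

# Matches Markdown links to local .md or .csv files, e.g. [step one](step_one.md).
LINK = re.compile(r"\[[^\]]*\]\(([^)]+\.(?:md|csv))\)")


def load_treatment(root: Path, entry: str = "overview.md") -> dict[str, str]:
    """Read the entry document and every local file it links to, transitively."""
    loaded: dict[str, str] = {}
    queue = [entry]
    while queue:
        name = queue.pop(0)
        if name in loaded:
            continue
        text = (root / name).read_text(encoding="utf-8")
        loaded[name] = text
        if name.endswith(".md"):
            queue.extend(LINK.findall(text))
    return loaded


# Build a tiny throwaway treatment folder to show the traversal.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "overview.md").write_text(
        "See [step one](step_one.md) and the [FAQ](faq.csv).", encoding="utf-8"
    )
    (root / "step_one.md").write_text("Step one instructions.", encoding="utf-8")
    (root / "faq.csv").write_text("question,answer\n", encoding="utf-8")
    docs = load_treatment(root)
```

In the system described, the model requests documents as it needs them rather than loading everything up front, but the lookup is the same kind of deterministic file read.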

00:39:27.280 | Um, RAG, the main issue is just that I, I don't think, and, and, you know, this will probably be more

00:39:34.400 | obvious as we get into it, right? I don't think that you could really design a RAG which would pull

00:39:38.080 | back snippets of everything in sort of perfectly relevant ways. You really do kind of

00:39:42.560 | need to understand the shape of the whole treatment, right? To, to make a good decision, right? Otherwise,

00:39:46.560 | you're just going to parrot whatever particular snippet the RAG happened to bring back, and then the

00:39:50.080 | logic has to be in the RAG. It makes more sense, and it's more transparent, I think, to do it this way.

00:39:54.080 | If you'll get into this in the state management later on, are anchors predefined in the blueprint,

00:39:59.600 | or are they provided by the LLM? They, they are mostly predefined by the blueprint, in that we say,

00:40:04.480 | as part of the overview, you know, the concept of an anchor is that it is a thing that happened,

00:40:08.160 | or a thing that will happen, and here are the examples for this treatment, right? This is the

00:40:11.760 | thing that will happen, or did happen in this treatment. Sorry, that was the question about the anchors. Yeah?

00:40:16.080 | So when you, you mentioned that you, like, compressed the conversation, and you keep track of all that,

00:40:22.000 | is that anchors, or is that another part of that? No, so the, the state is essentially,

00:40:26.240 | you know, we, we call it, for reasons that only an engineer could love, we call it a schedule document,

00:40:31.200 | right? The idea is that for any given patient, there is a schedule that they're on, and the document

00:40:36.080 | snapshots their current state at any given point, right? And it's a version database, so we could go back

00:40:40.400 | in time, and we could see what their document was three days ago. But it has, at any given point,

00:40:44.880 | the messages that have been exchanged, any unsent messages that are scheduled,

00:40:48.880 | and enough state about their treatment to fill out this view. Yeah? Yeah.
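
A versioned schedule document like the one described can be approximated with an append-only list of snapshots. This is only a sketch: the field names (`phase`, `messages`, `scheduled`) and the `VersionedDocument` class are hypothetical, and snapshots are assumed to be saved in chronological order.

```python
import copy
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class VersionedDocument:
    _versions: list[tuple[datetime, dict]] = field(default_factory=list)

    def save(self, doc: dict, at: datetime) -> None:
        """Append a snapshot; earlier versions are never overwritten."""
        self._versions.append((at, copy.deepcopy(doc)))

    def as_of(self, when: datetime) -> Optional[dict]:
        """Return the latest snapshot saved at or before `when`, if any."""
        match = None
        for saved_at, doc in self._versions:
            if saved_at <= when:
                match = doc
        return match


store = VersionedDocument()
store.save(
    {"phase": "onboarding", "messages": ["Hi, this is Ava."], "scheduled": []},
    datetime(2025, 6, 1, 9, 0),
)
store.save(
    {"phase": "step_one", "messages": ["Hi, this is Ava.", "I have it."], "scheduled": ["reminder"]},
    datetime(2025, 6, 3, 17, 45),
)

earlier = store.as_of(datetime(2025, 6, 2, 0, 0))
latest = store.as_of(datetime(2025, 6, 4, 0, 0))
```

Each snapshot carries the exchanged messages, the unsent scheduled messages, and the treatment state, so any past view of the patient can be reconstructed from a single record.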

00:40:53.600 | So in this case, all of this stuff is locked away, right? So I mean, just to go back to this diagram

00:41:04.640 | for a second, this entire thing is all behind, you know, AWS's VPC, right? So like, there is no external

00:41:11.760 | access to the LLM period, the only things it can talk to are essentially its own documents,

00:41:15.760 | you know, in, in local files, and to the, the blue box. So, you know, there, there certainly are vectors,

00:41:21.120 | but the vectors would be through the text messages, right? Not really through anything else.

00:41:26.320 | Yeah. Yeah. Oh, I'm sorry, a bunch of people. You first.

00:41:28.960 | Yeah. Just a question regarding, I guess, it's twofold. One is like, how are you assessing the confidence rate from the model's response, and the second is how are you safeguarding against prompt injection for malicious behavior?

00:41:40.320 | Yeah. Well, so, the question was about prompt injection and, and generally sort of steering.

00:41:44.320 | I mean, the, the, the basic answer is just that you could definitely try to trick the model by sending weird texts, right? And we do that as part of our, you know, sort of internal red teaming. Like we have the entire team of operations associates who have been spending, you know, weeks and months trying to trick this thing.

00:42:00.320 | Um, and granted, they're not trying to trick it into revealing proprietary personal medical data, you know, I mean, there, there's things like that. We also obscure a lot of that medical data.

00:42:08.320 | So the things that get, get to the yellow box do not include phone numbers. They do not include anything other than the patient's identified first name. Um, so there's a lot of, there's a lot of that data that's kept only in the blue, which is a lot easier to, to defend against.

00:42:20.320 | Um, so yeah, we, we very much do obscure the, the patient. We don't obscure the treatment, right? The treatment is fully visible to the LLM.
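
Keeping identifiers out of the agent's view can be done with an allowlist applied before anything crosses from the durable side to the Python side. The sketch below assumes a flat patient record; the field names and `to_agent_view` are hypothetical.

```python
# Only these fields are ever forwarded to the agent; everything else stays behind.
ALLOWED_FIELDS = {"first_name", "messages", "treatment"}


def to_agent_view(record: dict) -> dict:
    """Keep only allowlisted fields so identifiers never reach the model."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


record = {
    "first_name": "Sam",
    "phone": "+15555550100",
    "treatment": "example-treatment",
    "messages": ["I have my medicine."],
}
agent_view = to_agent_view(record)
```

An allowlist fails closed when new fields are added to the record, but it does not scrub identifiers a patient types into a message, so free text would need separate handling.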

00:42:28.320 | Yeah, cool. Yep.

00:42:30.320 | You mentioned human in the loop. How did you do the evaluation of asking the correct answer in the loop?

00:42:37.320 | Yep. Uh, hold that thought. I will get to that very, very shortly. Um, we're back there.

00:42:41.320 | Uh, sorry. It's just a question about unclear instructions. Um, so, uh, when, when the situation is ambiguous,

00:42:57.320 | the LLM is told to look at the blueprint and pick the best possible answer. Now, if you don't believe the, the answer is perfect, um, you should say so.

00:43:07.320 | Right. In the rationale. So if I go back over here, this idea of the rationale, if there is uncertainty from the model's, you know, point of view, it can say, well, I picked this blueprint response, but I'm not sure that it's right.

00:43:16.320 | In practice it's not great at doing that. Right. But that is the idea. And then the evaluator is also going to look at this and say, well, did you actually pick either the exact blueprint response word for word?

00:43:26.320 | Did you adapt it? You know, does this seem right to you? Like we're trying to at least give a little bit of a layer before we get to humans.

00:43:32.320 | And then hopefully we can trap situations like that and say, well, this is a complicated situation. A human should, should take a look. Um, it is not an exact science though.

00:43:39.320 | Like that's generally just true with this stuff. Sorry. You in the back.

00:43:42.320 | I'm curious about the scale. Like, uh, like, what's the load of how many batches of the .

00:43:53.320 | Yeah. Um, so the question was just about load and scale. So, uh, look, the really short answer is that this, this system exists, right?

00:43:58.320 | There's an existing version of it that is humans pushing buttons. Um, that scale is, you know, again, let's say thousands, not millions of patients.

00:44:05.320 | Um, this opens up the possibility of doing more treatments, right? That's how we would get sort of additional patient scale.

00:44:11.320 | You can also sell this to new hospitals, new clinics, things like that. Um, so part of this is to get the scale to be larger.

00:44:17.320 | Um, we have not run into scale issues with, you know, just the, the conversations with Claude, you know, the software that we're building would scale much, much larger than thousands of users, right?

00:44:25.320 | You know, the, the text message gateway might actually be the, the biggest bottleneck. So it's, it's honestly, it's a problem we want to have. Um, go ahead.

00:44:32.320 | So, um, you keep saying Claude, did you guys select the LLMs because it was what the client had access to?

00:44:38.320 | Or was there like a specific reason why you're going with 3.5 or whatever you're using?

00:44:42.320 | Yeah. Uh, so the question was of model selection. Um, when we started this, right?

00:44:46.320 | And I think, you know, let, let's assume that we kicked this project off, you know, late last year, early this year, right?

00:44:51.320 | Um, we had to make a choice and our main criteria were it had to be a steerable model that we felt pretty good about, you know, transparency wise. Um, you know, one example, just, just to give you a specific one.

00:45:00.320 | o4-mini is pretty good at this workflow, but it won't show its reasoning. Um, like, I mean, that's just one example.

00:45:07.320 | And like, it's not a deal breaker. Like we can still see the rationales. Like there's some pieces of it, but I like being able to go into LangSmith and see the whole conversation, right?

00:45:13.320 | That, that really helps me out. Um, we needed, you know, again, flexible hosting, but I mean, all the clouds kind of do that.

00:45:18.320 | Frankly, we didn't want to deal with Microsoft and we kind of preferred AWS to Google. That was kind of how we got there, but you know, you can do this anywhere.

00:45:27.320 | It really was just, we had to pick a horse and we largely have not regretted it. And in part, because we built enough flexibility where if I want to switch, I still can.

00:45:34.320 | So my question is about, um,

00:45:41.320 | Yeah.

00:45:43.320 | Uh, so the question is about sensitivity, uh, of data through the text carriers and also about, uh, using the data to learn. Um, I'll do the learning first. Um, we don't.

00:46:05.320 | We, we do not take any of the responses and do anything to the models other than when we see situations that we as humans have evaluated and found wanting, um, we can tweak the prompting and the guidelines, right?

00:46:17.320 | But we are not putting this in any sort of durable form. Like ultimately, you know, we believe the right model here is the provider interaction. If there's a provider involved, that sticks around, right?

00:46:26.320 | The provider knows that you interact with the system. They can have, you know, whatever records they need. Um, otherwise, you know, we forget about you when your treatment is done.

00:46:32.320 | We think it's better that way. Um, on the, on the, the sensitivity question, yes, there is sensitivity involved. And at the same time, again, there's prior art with these products, right?

00:46:42.320 | There are existing systems which essentially take, you know, text messages in and provide medical advice. Um, we're just trying to stay within the guidelines of that.

00:46:50.320 | And again, that's one reason why we don't want the LLM actually to have any data that is not explicitly required just to do decisioning, right?

00:46:57.320 | It doesn't need anything beyond that to, to make a good decision. Okay.

00:47:03.320 | Yeah.

00:47:04.320 | So every response is a hundred percent.

00:47:06.320 | Uh-huh.

00:47:07.320 | Is it determining that that's a hundred percent?

00:47:09.320 | And then what are the situations where it's not?

00:47:12.320 | Yep. Um, sorry. Hold that thought too. Cause I will get to that in just a second. Um, let me move on.

00:47:16.320 | Uh, please like bring these questions back up. I just want to get a little bit further so we can see some other, some other cool things about this. Um, I'm going to move on from this flow just because you can imagine that this is going over a period of days, right?

00:47:25.320 | There's another step here, step two, where there's, you know, more medicine being dispensed. Um, and then, you know, ultimately we're going to get to the end, right?

00:47:32.320 | And, you know, essentially, did you complete this? And then, okay, great. You know, this is what's going to happen to you. You know, you're going to see some bleeding. Um, and then we have this check in, right?

00:47:40.320 | So imagine that this now is, you know, a full, let's say three or four days later, right? After the, the treatment has begun. Um, you know, we check in, you know, the patient gets back to them or not, right?

00:47:47.320 | Remember, some of these patients will just be like, I'm done. I don't really need to talk to this thing anymore. But if they do, right, we continue with the treatment. We don't bother them. We just let them sort of resume where they left off.

00:47:58.320 | Again, we have these rationales, you know, we have these questions. And then what I want to do here is just to show you briefly, um, sorry, I got to zoom back out so I get the full phone number, um, what it would look like to interact.

00:48:08.320 | So if I go here into my sandbox, um, imagine that normally this would be a text message. Um, so, you know, I would be doing this on my phone. Um, but here, you know, I can answer this question.

00:48:18.320 | If I had any pregnancy symptoms before, have they decreased? It's like, yes, uh, they have decreased.

00:48:27.320 | Okay, so I post this message. Now what's going to happen from here is thinking. So none of this is instant. And so now what I want to show you is what this looks like in LangSmith.

00:48:36.320 | So, um, you can see here a couple of things. Um, one is that this, this is now spinning. Um, so this thing that I just asked it is now in active processing.

00:48:44.320 | I'll show you what it looks like when we're done. Um, but I will give you just a brief look at, um, I think this is probably a useful one here.

00:48:51.320 | Um, what this actually looks like in terms of processing the state. Um, so I'll blow this up a little bit and make it a bit bigger.

00:48:59.320 | So, um, what you can imagine, this is using Sonnet 4, um, is that every time a message comes in from

00:49:06.320 | a patient, this is what I get. Okay? I get this description of, you know, everything that's going on here.

00:49:12.320 | I can see this is an Avela patient. I can see the thread that we're currently executing, right?

00:49:17.320 | Because you may need to resume these threads if you need to give feedback. Um, I have this idea of I'm in the three-day check-in phase.

00:49:22.320 | So that's the blueprint that I'm going to read. Um, and then I have a couple of things. I have these anchors, right?

00:49:27.320 | Which, you know, you could see, I think this is exactly what you saw before, um, you know, in that same patient.

00:49:31.320 | Um, these are all defined as, you know, actually a mix of UTC and Eastern timestamps. Um, that's one of the problems that's hard to eradicate.

00:49:39.320 | Um, getting LLMs to deal well with time is really tough. Um, but then I have this entire message queue, right?
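
One way to reduce the mixed-timezone problem described here is to normalize every anchor to UTC before it goes into the model's context. The following is a minimal sketch, assuming the anchors arrive as ISO 8601 strings with explicit offsets. The anchor names and the `normalize_anchors` helper are hypothetical and are not taken from the project.

```python
from datetime import datetime, timezone


def normalize_anchors(anchors: dict[str, str]) -> dict[str, str]:
    """Convert offset-aware ISO 8601 timestamps to UTC ISO strings."""
    normalized = {}
    for name, raw in anchors.items():
        parsed = datetime.fromisoformat(raw)
        if parsed.tzinfo is None:
            # Refuse to guess: a naive timestamp is exactly the ambiguity
            # this step is meant to remove.
            raise ValueError(f"anchor {name!r} has no UTC offset: {raw!r}")
        normalized[name] = parsed.astimezone(timezone.utc).isoformat()
    return normalized


# Hypothetical anchors: one written with an Eastern summer offset (UTC-4),
# one already in UTC.
example_anchors = {
    "treatment_started": "2025-06-03T09:00:00-04:00",
    "check_in_due": "2025-06-06T13:00:00+00:00",
}
print(normalize_anchors(example_anchors))
```

Rejecting naive timestamps outright, rather than assuming a zone, keeps the ambiguity from reaching the prompt at all.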

00:49:43.320 | And this is the compressed state of the conversation to date, right? This does not include every message that Claude sent itself while it was thinking, right?

00:49:52.320 | That part is contained in these individual LangSmith threads. I could go back and I could look at this if I needed it.

00:49:56.320 | Um, but what I'm doing is I'm compressing and basically saying all I really care about is the actual messages that went back and forth.

00:50:01.320 | I want these rationales because I want to be able to review them, right? That helps me understand the decision that's going on here.

00:50:06.320 | Um, you know, I want these confidence scores so I can go back and look, you know, what did it think at any given point?

00:50:11.320 | And again, I'll show you one where the confidence was low, but these things can go on a little ways, right?

00:50:15.320 | This is probably, I don't know, 20, 25 messages, right?

00:50:18.320 | All of this goes in as initial context in the window, right?

00:50:21.320 | So if you had 150 messages, all 150 of them are going to potentially go in.

00:50:25.320 | Now we do have a function where you can optionally set it to compress and say, well, just show me the last 50, right?

00:50:31.320 | If I need to request more, I can do that. There's a way to do it.

00:50:33.320 | Um, but I don't need to have the entire thing in the window.
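
The optional windowing described here can be sketched roughly as follows. This is an illustration under assumptions, not the project's code: the `Message` fields and the `initial_context` function are hypothetical names, chosen to mirror the messages, rationales, and confidence scores mentioned in the talk.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    sender: str                          # "patient" or "agent"
    text: str
    rationale: Optional[str] = None      # kept so a human can review the decision
    confidence: Optional[int] = None     # 0-100, as scored by the evaluator


def initial_context(queue: list[Message],
                    max_messages: Optional[int] = None) -> list[Message]:
    """Return the messages to place in the model's window.

    With max_messages=None the whole queue goes in; otherwise only the
    most recent max_messages entries are kept.
    """
    if max_messages is None:
        return list(queue)
    if max_messages <= 0:
        return []
    return queue[-max_messages:]


# A queue of 150 messages, trimmed to the most recent 50.
queue = [Message("patient", f"message {i}") for i in range(150)]
window = initial_context(queue, max_messages=50)
print(len(window), window[0].text)
```

Older messages stay in the stored queue, so a separate tool call can still fetch them when the model asks for more history.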

00:50:36.320 | Um, so I get down here. This is the last message from the patient, right?

00:50:39.320 | So the question was, did you notice blood clots? I said, yes, a few, right?

00:50:43.320 | You know, that was what I, as a patient, said. Claude is now going to start processing this thing, right?

00:50:48.320 | So imagine, you know, this all being basically pasted into, you know, a Claude window and then having it go through this process and call tools.

00:50:55.320 | So it starts by looking at directories that it's allowed to view. Again, this is a version where it's got the blueprints kind of all local and it's, it's talking to them this way.

00:51:03.320 | We have another version where it talks via MCP over to the blue box, right? The larger system.

00:51:07.320 | Um, so it figures out what directory it has. It reads these basic ones because these need to be read in all cases.

00:51:13.320 | So these guidelines, right? The idea of how do you do your job, right? The idea of what the confidence framework looks like, the overview of the treatment, right?

00:51:20.320 | You know, those sorts of things. We read those up front. None of these is very large, right?

00:51:23.320 | And so you read all this stuff, you know, it, it comes into the, the window. Um, and then, you know, essentially it reads those descriptions and it says, well, I was told as part of this that I have to read the current blueprint for this current phase, right?

00:51:34.320 | So I read that file individually. So a bunch of these early calls are just about setting up the context. This is not the only way to do it, right?

00:51:40.320 | I mean, this is the way that we've chosen to do it. Again, we chose not to do RAG for a couple of, you know, reasons:

00:51:45.320 | we just did not think we could get good enough results, and this is honestly easier to interpret, right? You can sort of tell what it's doing.
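
In the talk the model performs these reads itself through tool calls. The selection logic it follows (always read the shared documents, then read the blueprint for the current phase only) can be sketched as plain code. The file names, the `phases` subdirectory, and the `load_context` function below are assumptions for illustration only.

```python
from pathlib import Path

# Hypothetical file names; the talk only says that guidelines, the confidence
# framework, and a treatment overview are read on every turn.
ALWAYS_READ = ["guidelines.md", "confidence_framework.md", "treatment_overview.md"]


def load_context(blueprint_dir: Path, phase: str) -> str:
    """Concatenate the always-read documents plus the blueprint for one phase."""
    paths = [blueprint_dir / name for name in ALWAYS_READ]
    paths.append(blueprint_dir / "phases" / f"{phase}.md")
    sections = []
    for path in paths:
        # read_text raises FileNotFoundError on a missing file, which is
        # preferable to letting the model proceed without a required document.
        text = path.read_text(encoding="utf-8")
        sections.append(f"## {path.name}\n{text.strip()}")
    return "\n\n".join(sections)
```

Because each document is read whole and by name, a reviewer can see exactly which text the model had in its window, which is the interpretability advantage over retrieval described above.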

00:51:51.320 | Um, I get to the blueprint, and we'll see more of these examples in a second, but the blueprint is basically this kind of structured bulleted list, right?

00:51:59.320 | Here's all the stuff that you might need to say to somebody, right? And you know, here's what you do when, you know, the user says a certain thing.

00:52:06.320 | This isn't actually that prescriptive. It's just structured, right? This isn't an if-then statement, right? It's kind of like that, but it's not an actual if-then statement.

00:52:15.320 | So like this format, you know, is one that we iterated on and got to a point where we actually get really good results. Um, but you know, it wasn't a hundred percent obvious

00:52:23.320 | up front that this is the way to do it. Um, you know, we started with charts. Um, and so now you get to this point where now you can see, okay,

00:52:30.320 | now I got to look at these, you know, uh, conversations. I got to figure out what's been going on here.

00:52:34.320 | And so you can see here, even though I passed in the state, it has a function to list messages.

00:52:38.320 | And so it basically says, all right, well, now that I sort of know what's going on, let me see the last five messages, right?

00:52:42.320 | And you can see here, it's going to start sending, you know, a bunch of these in. Um, and so it does that.

00:52:47.320 | It looks to see if there's anything scheduled. There's not. Right. And so now it says, all right, this is,

00:52:52.320 | this is sort of the point where Claude does its little explaining thing. I understand what's going on.

00:52:57.320 | The patient's in the three-day check-in phase. I already asked about bleeding and cramping.

00:53:01.320 | I asked about blood clots and the patient, you know, basically just said, yes, they have blood clots.

00:53:05.320 | And so I'm just going to keep on going. Right. And it goes to the next question about pregnancy symptoms.

00:53:10.320 | This message comes directly from the blueprint. Okay. And I'll show you in a Google Doc form in a second what that looks like.

00:53:16.320 | Um, so it schedules it. It says, you should send this message, you know, as soon as you want to.

00:53:21.320 | And then we get over to this evaluator flow. Right. And the evaluator says, all right,

00:53:25.320 | I'm going to look at this situation. I'm going to look at everything that requires confidence scoring. Right.

00:53:29.320 | That new message is the only thing. It's, it's the, the only thing that just happened.

00:53:32.320 | Um, and I'm going to send it immediately. This is just a, a timestamp for immediately.

00:53:36.320 | Um, I then get this kind of report. Right. And the way that we set up our framework, um,

00:53:42.320 | and I'll show it in code a little bit clearer is, you know, do we know what the user is saying?

00:53:47.320 | Do we know what to say? And do we think that we did a good job?

00:53:50.320 | Again, this is a tough one. Right. Um, generally speaking, the LLM, you know, says at all times, yes, I know what I'm doing.

00:53:56.320 | And you know, like buzz off. Um, but what I can also do is I can say, all right, then there's a bunch of cases in which if I set an anchor,

00:54:04.320 | if I updated the patient's data, like maybe I changed their time zone offset, maybe I changed their name. Right.

00:54:09.320 | That's a weird thing that, you know, if it happened, you'd probably want a human to look at. Um, am I sending multiple messages?

00:54:14.320 | Am I sending duplicate messages accidentally? Do I have reminders for things that have already happened?

00:54:19.320 | All of those things would deduct from the score and cause a human to get involved. Right.

00:54:24.320 | That's part of how we do this: we combine, does the model think it's okay? Right. That's this top part.

00:54:29.320 | And then overall, is there a weird circumstance that I should try to catch? Right. And then I should try to show that to a human for review.
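
The combination described here, the model's own assessment plus rule-based deductions for unusual circumstances, could look something like the sketch below. The three self-assessment questions and the flagged circumstances come from the talk. The penalty sizes, the review threshold, and all names in the code are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SelfAssessment:
    understood_patient: int   # do we know what the user is saying? (0-100)
    knew_what_to_say: int     # do we know what to say? (0-100)
    quality_of_reply: int     # do we think we did a good job? (0-100)


# Assumed penalty sizes; the talk names the circumstances, not the weights.
DEDUCTIONS = {
    "set_anchor": 10,
    "updated_patient_data": 20,
    "multiple_messages": 10,
    "duplicate_message": 30,
    "stale_reminder": 30,
}
REVIEW_THRESHOLD = 90  # assumed cutoff below which a human reviews the turn


def score_turn(assessment: SelfAssessment, flags: set[str]) -> tuple[int, bool]:
    """Return (score, needs_human_review) for one agent turn."""
    # Start from the weakest of the model's three self-reported scores.
    base = min(assessment.understood_patient,
               assessment.knew_what_to_say,
               assessment.quality_of_reply)
    # Subtract a fixed penalty for each unusual circumstance detected in code.
    penalty = sum(DEDUCTIONS[flag] for flag in flags)
    score = max(0, base - penalty)
    return score, score < REVIEW_THRESHOLD


# The model reports full confidence, but it changed patient data this turn.
print(score_turn(SelfAssessment(100, 100, 100), {"updated_patient_data"}))
```

Since the model tends to report full confidence, the deductions are what routes a turn to a human in practice; computing them in code means they do not depend on the model's self-judgment.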