GraphRAG methods to create optimized LLM context windows for Retrieval — Jonathan Larson, Microsoft

00:00:00.000 | Hello everyone. Thanks for coming to the session here today. My name is Jonathan Larson and I run

00:00:21.120 | the GraphRag team at Microsoft Research. Some of you may have seen our paper that we actually

00:00:26.680 | released last year, the GraphRag paper, or you might have seen our GitHub repo that was

00:00:32.740 | out there and has a lot of stars on it. When it released this last year, to our surprise,

00:00:37.800 | it got a lot of attention and also it inspired many other offerings that we saw that were

00:00:42.280 | out there as well, too. My favorites, of course, were the ones that Neo4j did with the card

00:00:46.300 | game, because I'm a huge board game fanatic as well, too. But there's been lots of derivative

00:00:51.100 | work from it. And today, though, I'm not here to talk to you about some of the things that

00:00:55.360 | happened. I'm here to talk to you about some of the new horizons. And the two things I really

00:00:59.980 | want to leave you with here today are that LM memory with structure is just an absolutely key

00:01:05.440 | enabler for building effective AI applications. The second, as we'll talk about a little bit later in

00:01:11.500 | the talk as well, too, are that agents, of course, paired with these structures can provide something

00:01:16.420 | even more powerful. And let me go ahead and go back to the slide here. So today, I'm going to tell you

00:01:20.360 | about a couple things. The first is actually showing you Graphrag as applied to a specific

00:01:24.980 | domain. In this case, we're going to apply it to the coding domain to look at enterprise productivity

00:01:29.180 | in the coding space. The second is we're going to take a look at a new release that we are announcing

00:01:34.700 | today. We're actually having a blog post on this tomorrow. We have benchmark QED just went open source

00:01:39.600 | as well. And then I'll talk about some new results that we've had on some Graphrag evolutions on a new

00:01:45.720 | technology we've been working on called lazy Graphrag. I won't be going into the specifics of how many it

00:01:49.740 | works. I'll just be showing some benchmarks and talking about some of the ways you'll be able to access it

00:01:54.360 | soon. So with that, let me go ahead and jump into Graphrag for code and tell you about how we've been

00:01:59.660 | using Graphrag to actually help drive repository-level understanding. And I want to start first with a little

00:02:05.540 | demonstration. Everyone likes to watch little videos, so I'm going to go ahead and hit play. And yes,

00:02:09.800 | it's showing on the screen there. So this is a little terminal-based video game that one of my

00:02:14.100 | engineers have put together. It's one where you jump over obstacles, and then you get points when you jump

00:02:19.220 | over them, and you run into them, you lose points. And two important things, though. First, the LLM has never

00:02:23.660 | seen this code before, and it's small enough for the human to know the ground truth

00:02:27.720 | holistically because it's only about 200 lines of code across seven files. But it's complex enough that the LLM's had

00:02:33.660 | a lot of troubles understanding it if you just provide all the code directly into the context window.

00:02:37.920 | So with that as a background, let's take a look at what happens if you use typical regular rag over

00:02:42.660 | the top of this. So if you're using one of the tools that help analyze your code, this is the type of

00:02:47.640 | answer you might get back. And this is -- we ran this through with a regular rag system. And we asked

00:02:52.140 | it, describe what this application is and how it works. And just to read you what it says, because it's too

00:02:58.500 | small to read, "The application is designed as a game that is configured and initiated through a main

00:03:02.820 | function. The game leverages a configuration file and has its main components, such as the game screen,

00:03:08.040 | game logic, encapsulated in separate classes and functions." Totally useless. It just says,

00:03:13.080 | it's a game. There's nothing more to it than that. And it just put a bunch of other cruft in there.

00:03:18.300 | If you use GraphRack for code over the exact same code base with the exact same question,

00:03:24.000 | you get a much, much better description. This is just a very stark contrast you get between the two.

00:03:29.100 | So in the GraphRack for code, it says, "The application is a terminal-based interactive game

00:03:34.320 | designed to run in a curses-based terminal environment. So far, not much better. But this

00:03:39.000 | next line just kills it." It features a player character that can jump vertically, obstacles that

00:03:44.280 | move horizontally across the screen, and a static background layer. The game controls via keyboard

00:03:49.080 | inputs, specifically using the space bar to trigger the player's jump action. So what this is showing you

00:03:54.480 | is semantic understanding. So if you've read our paper or used any of the GraphRack code base that

00:04:00.960 | we've put out there, you'll know about the concept for what we call local and global queries. And so

00:04:05.760 | this is what we would call a global query over top of the repository. It requires understanding the whole

00:04:10.380 | repository to answer that question correctly. And we can see that it excels at that. So one of the next

00:04:16.620 | things we decided to do was, well, if I can answer questions pretty well, can it maybe do code

00:04:21.540 | translation? And so that was the next thing that we aimed it at. So taking the same code base here,

00:04:27.280 | we took on the challenge of taking this Python code and then asking to translate it directly into Rust.

00:04:32.340 | And let me show you just how that actually works. I'm going to play the video here. So we're going to do is

00:04:36.720 | We're going to start off with in VS Code here. We're going to look at the four source code files. Well, there's four main ones.

00:04:41.520 | There's seven files total for this game. These are the source Python code files that we're working with here.

00:04:46.920 | We're going to go ahead and run those just to show you again. This is the same game. So this is again the same

00:04:51.780 | side scroller game here. And then what we're going to do is we're going to actually take those source code files and just

00:04:56.580 | put them straight into an LL. We're first not going to use GraphRack for code. We're just going to take all that

00:05:00.780 | source code, because it's only 200 lines of code, and put in some nice prompts. We tried a wide variety of these prompts and

00:05:05.700 | then try and see if the LL could actually translate that code holistically in all of its pieces into

00:05:11.280 | Rust and actually just work out of the box. And so this is actually the translation happening there.

00:05:15.300 | We're going to go ahead and copy the Rust code that it generated. So it did generate Rust code. I have to

00:05:19.620 | give it credit for that. But after it generated the code and we reviewed it there, we went and we tried

00:05:25.020 | to compile it and there's problems everywhere. It doesn't work out of the box. So then we use GraphRack for code,

00:05:31.440 | again bringing structure and these graph structures to the code base there.

00:05:37.020 | We're going to just go ahead and run the translate function there on GraphRack for code. And it generates

00:05:40.740 | on its side all these new Rust files that you see are on the side there. I'm just going to skip past

00:05:46.200 | here because I don't want to bore you with clicking through a bunch of Rust files. But once we generate

00:05:50.160 | all these Rust files, we can go ahead and run those Rust files on GraphRack for code side of things and

00:05:55.740 | presto, we have a full video game completely translated from Python now working in Rust natively. But we

00:06:03.600 | didn't want to stop there. The next thing that we wanted to do was, well, that game is kind of a toy

00:06:09.060 | example. It's like 200 lines of code. Let's go to a code base with 100,000 lines of code. And that's what we did next.

00:06:14.280 | So we went to the Doom code base. It's about 30 years old. Now, we did run a bunch of tests over

00:06:19.680 | the top of this here first because I figured, well, all the LLMs are trained on the Doom code base. I mean,

00:06:24.840 | it's going to just know these things natively and automatically. So we did a bunch of tests with

00:06:29.460 | that. We actually figured out, well, it knew the Doom code base. It didn't know any of the specifics. So if

00:06:34.440 | you ask it to actually modify the Doom code base in any sort of meaningful way, the LLM models just fail

00:06:39.360 | again completely. So then what we did next was, well, if we can reason and understand over top of this

00:06:46.020 | code base, 100,000 lines of code, 231 files, maybe we can generate some new outputs that you otherwise

00:06:51.820 | couldn't generate. So with that, we then immediately had to start -- used it to start generating documentation.

00:06:56.880 | So this is actually showing a high level repository level documentation. Again, going back to the

00:07:02.100 | concepts of GraphRag, we have local and we have global queries. This is showing you the global query

00:07:06.760 | results. And so it's not looking at the understanding of a single file. This is looking at modules in their

00:07:11.820 | entirety, like across 20, 30 files and being able to actually give you sense for, like, what's the sound

00:07:16.740 | system inside of the video game and how does that work? But then you can still drill down into what we

00:07:22.020 | would call the local style queries and actually see the individual files as well, too.

00:07:25.860 | So then we thought, well, this is kind of neat. We can look at the documentation. But if we look

00:07:32.180 | at the documentation, we can do Q&A and we can do code translation. Can we maybe take this one step

00:07:37.080 | further? Do feature development. So that's the next thing I'm going to show you. So we had a lot of video

00:07:42.840 | game players in our office and we were kind of thinking about, like, what would be a cool thing we

00:07:46.380 | put over top of this Doom video game? And somebody said, well, you can't jump in the original video game.

00:07:50.940 | What if we added the ability for a player to jump? And that's a complex thing to add into Doom,

00:07:55.800 | because it requires multi-file modification. And if you tried to use AI systems to do multi-file

00:08:02.120 | modification, what it'll often do is it will do a great job editing one of the files and then completely

00:08:07.240 | break a bunch of the other files in the process of doing so. And then that just rinses and repeats

00:08:11.640 | and continually kind of -- you end up with something that doesn't work is what you oftentimes run into.

00:08:16.040 | And it's because of this lack of understanding how everything fits together. But again, this is where

00:08:21.480 | GraphRag can really help out. So with that, we went ahead and this is -- if you haven't seen the Doom video

00:08:26.440 | game, this is actually a little video of the original Doom video game being played just to give you some

00:08:31.480 | context for what it was. Then we used the new GitHub Copilot coding agent that was announced at build.

00:08:38.440 | And we wired it up directly to a GraphRag for code. So we're going to go ahead and create a new issue.

00:08:43.480 | This is pointing to the Doom code base. And we're going to tell it add jump capability to the player.

00:08:48.280 | We're just going to add a couple sentences of description here and then tell it to go. And

00:08:52.840 | then we're going to assign it to that GitHub Copilot coding agent. And then the next thing we're going

00:08:59.640 | to do is we're going to then go ahead and look at what's happening underneath the hood. So this is

00:09:03.960 | actually the GitHub Copilot coding agent reaching out to GraphRag for code on the back end, coming up

00:09:08.360 | with a plan that's holistic and it's approaching it from the top down. And then this is really the moment,

00:09:13.560 | the aha moment we had for us. It changed a whole lot of files and it worked out of the box where all

00:09:19.160 | the other agents that we had tried completely failed on this task. And because it worked because of those

00:09:23.960 | GraphRag structures that we were bringing to bear and actually using those with that. So then the end

00:09:29.080 | result was we were related with joy and jumping in Doom specifically. So that's kind of the story of how

00:09:37.000 | we got to with that. So with that, I do have another part of the talk I'm going to talk about today.

00:09:42.840 | And that's also five minutes to talk about that one. And that's benchmark QED. Just shifting topics a

00:09:48.200 | little bit. I just showed you GraphRag is applied to one vertical. Next, I want to specifically talk

00:09:53.080 | about how do we measure and evaluate systems like GraphRag? How do we build systems to evaluate those

00:09:58.680 | local and global quality metrics? And so for that, today I'm announcing benchmark QED. This is available

00:10:04.680 | now open source on GitHub. So you can go ahead and check it out at the link. We just, I think,

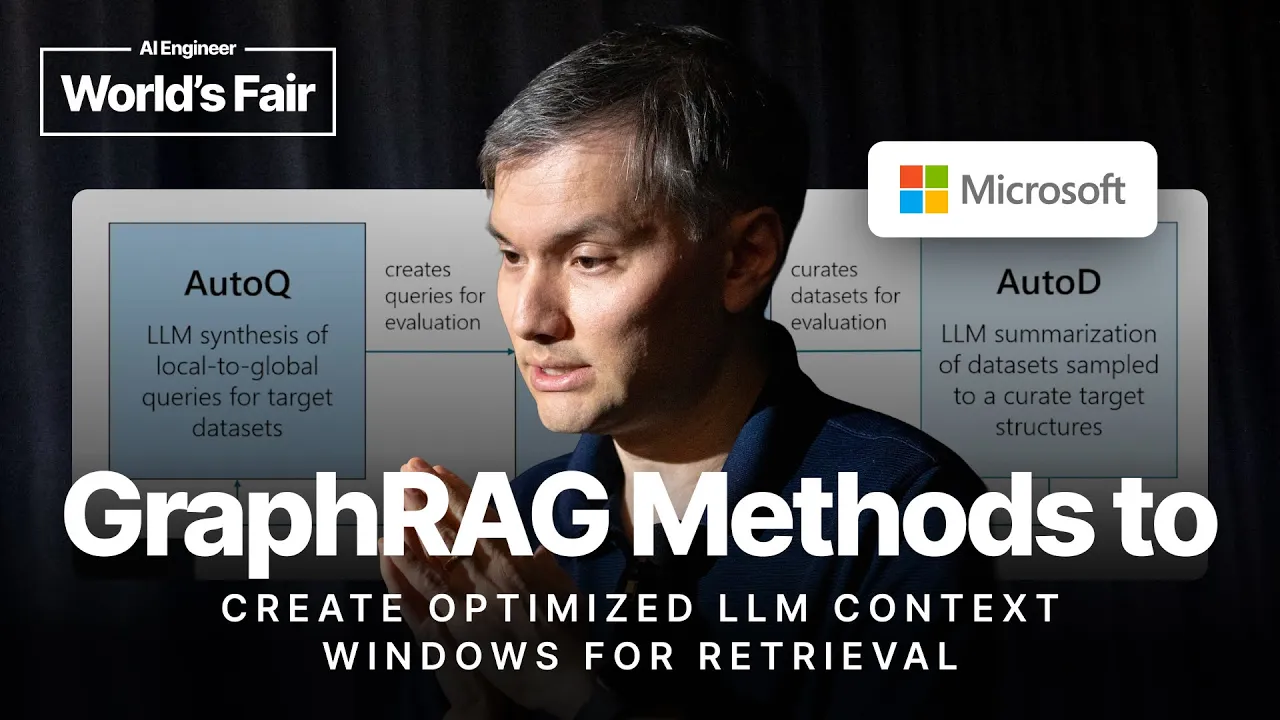

00:10:09.800 | got it live last night. And there's three components to this. The first is what we're calling

00:10:15.160 | Auto-Q. And Auto-Q is focused on doing query generation for target data sets. So it allows you to take a

00:10:22.040 | data set and then generate queries for it. Auto-E is the evaluation using LLM as a judge to actually then

00:10:28.280 | evaluate how those queries performed on that said data set. Auto-D is the third component. And that really focuses on

00:10:35.160 | data set summarization and data set sampling. Let's jump into just a couple of these in a little more

00:10:39.960 | detail. So if you take a look at Auto-Q, Auto-Q is taking a look at the local and global aspects. You

00:10:46.200 | can see that there on the x-axis. On the y-axis, it's then combining that against data-driven, basically

00:10:52.600 | questions that are generated based on the data itself, or persona or activity-driven, which is the second

00:10:58.280 | type that we have there. Those are more complex. So like, if you take on the role of a person in that domain

00:11:02.520 | field, and then using that to generate questions. So to show you a few of these sample questions,

00:11:07.400 | I'm just going to choose a couple here. This was built on an AP news data set that was focused on

00:11:12.120 | like medical type of events. And so a data local question might be, why are junior doctors in South

00:11:17.880 | Korea striking in February 2024? There's a lot of highly specific information in this, and I would

00:11:23.080 | expect regular AG to perform very well on this type of question. In contrast, take an activity global

00:11:29.160 | question here. It might be, what are the main public health initiatives mentioned that target underserved

00:11:34.920 | communities? There is nothing in that question that you can really pivot on in terms of like embedding

00:11:39.480 | or indexing that would really work on that. You really have to holistically know the entire data

00:11:44.280 | set. And so this Auto-Q will help generate questions across that whole spectrum, from local to global,

00:11:51.080 | from data-driven to activity-driven, and give you these categories of questions that you can use.

00:11:55.000 | Now, once you generate those questions, we can then start measuring them using Auto-E,

00:11:59.640 | which is the evaluation platform that we released along with this as well too. So just to show you

00:12:04.840 | how this in practice and how it works, it gives you a composite score for metrics. The first is

00:12:10.280 | comprehensiveness, diversity, and empowerment, which if you're familiar with our paper, those are the three

00:12:15.480 | original ones that we used back in that one, and a new one that we also call relevance. And so we actually

00:12:20.040 | did some comparisons just to show you how this works on lazy graph rag, which is one of the newer technologies

00:12:24.600 | that we've been working on. And we compared it to vector rag on 8K, 120K, and million token context

00:12:31.240 | windows. And we had a few takeaways from that. The first is if you take a look at these charts,

00:12:35.560 | any bar that is above the 50% mark means that lazy graph rag is winning in that benchmark against those

00:12:41.960 | specific vector rags. So vector rag blue here is 8K, 120K, and a million token context windows. And it's

00:12:47.880 | winning correspondingly at 92%, 90%, and 91% of the time against data local questions, which is kind

00:12:54.040 | of a surprise. Because one of the first things that we started seeing is that lazy graph rag was actually

00:12:58.040 | providing dominant performance across the entire span of questions, whether they were local or global.

00:13:04.120 | And we do expect rag to be performing better on local questions. And you can see that from the fact that

00:13:09.160 | data local and the data global, there's a little bit of a lift between these bars between the global and the

00:13:14.360 | local ones. Second thing we noticed is that the long context window didn't really make much of a

00:13:19.240 | difference. We were expecting that maybe the long context windows might give you a little bit of a

00:13:22.520 | because, again, a better understanding towards those global types of questions. But it actually turns out

00:13:27.560 | that lazy graph rag in this case was still able to dominate those metrics. And in fact, when we ran the

00:13:32.600 | test, it's not on the slide here, we actually found that lazy graph rag was a tenth of the cost of what we saw in

00:13:38.040 | the one million token context windows as well, too. So those are a couple things to show there.

00:13:43.000 | Now, with the last minute that I have, I did want to address another thing, too, with lazy graph rag.

00:13:48.120 | You may have read the blog posts about it in November last year when we first discussed it.

00:13:52.440 | It is now officially being lined up for launch in a couple products. The first is Azure Local. And so

00:13:58.440 | they just announced at Build that lazy graph rag will soon be incorporated into their platform for

00:14:04.520 | that. So you can actually try it out for yourselves there. And it's also being incorporated into the

00:14:08.600 | recently announced app build Microsoft discovery platform tool as well. So if you're not familiar

00:14:13.720 | with Microsoft discovery, it does graph-based scientific co-reasoning. And just to give you a

00:14:17.960 | quick summary of it, it goes from hypothesis to experiment, learning, knowledge. I'll just play a

00:14:23.400 | quick, like, five-second video here. And the questions -- the answers to the questions that you start

00:14:27.800 | coming back to with on this are powered underneath the hood by graph rag and lazy graph rag as well,

00:14:32.600 | too. So as you can see, the co-pilot here is generating the deep reasoning over graph-based

00:14:36.680 | scientific knowledge. Those graphs are being powered by graph rag and lazy graph rag.

00:14:42.520 | So with that, I just want to leave you with a couple takeaways. And the first is just that LLM memory

00:14:47.160 | structure -- LLM memory with structure is just a really, really powerful tool to keep in your tool

00:14:53.240 | belt. And the agents, of course, can massively amplify this power. And if you have any questions,

00:14:58.600 | I'll be outside if you want to talk about any of this further. Thank you so much for your time.