Programming Meme Review with George Hotz

00:00:00.000 | This is a review of programming memes with George Hotz.

00:00:04.360 | Quick mention of two sponsors,

00:00:06.200 | Four Sigmatic, the maker of delicious mushroom coffee

00:00:10.040 | and Public Goods, my go-to online store

00:00:12.940 | for minimalist household products and basic healthy food.

00:00:16.880 | Please check out these sponsors in the description

00:00:19.240 | to get a discount and to support this channel.

00:00:22.760 | And now, on to the memes.

00:00:26.040 | Let me ask you to do a meme review or inspect

00:00:30.640 | some printed black and white memes

00:00:33.800 | and maybe give a rating of pass or fail, like binary,

00:00:37.980 | if this is a win or a lose.

00:00:40.840 | These are mostly from the programming subreddit.

00:00:44.440 | - Man.

00:00:45.640 | - You don't like this one.

00:00:46.520 | This doesn't pass.

00:00:47.360 | - I mean, you were like, I could do it straight up

00:00:49.120 | like PewDiePie meme review.

00:00:50.640 | This meme gets a one out of 10.

00:00:54.800 | (laughing)

00:00:56.640 | - Wow, this is too much text.

00:00:58.960 | I half-assed looked at this.

00:01:00.440 | - Already too much text.

00:01:01.700 | (laughing)

00:01:03.080 | - Are you gonna skip on the text?

00:01:04.720 | - Well, what am I doing?

00:01:05.880 | Yeah, I don't know about this.

00:01:09.960 | I think this plays on bad stereotypes.

00:01:13.880 | (laughing)

00:01:15.520 | - Isn't that all of memes?

00:01:17.200 | I don't know.

00:01:18.040 | - No, I think, like, now this one, this meme's much better.

00:01:22.240 | - Okay.

00:01:23.080 | - You can spend six minutes doing something by hand

00:01:25.120 | when you can spend six hours failing to automate it.

00:01:27.280 | Now this one I like.

00:01:28.880 | - Cries in PowerShell.

00:01:30.200 | (laughing)

00:01:31.040 | What are you doing in PowerShell?

00:01:32.640 | Do you do that yourself?

00:01:34.880 | Like, do you find yourself over-automating?

00:01:37.520 | - Of course.

00:01:38.360 | I mean, but you learn so much trying to automate.

00:01:40.320 | You know, you could spend six minutes just driving there

00:01:42.280 | instead of spending, you know, 10 years of your life

00:01:44.800 | trying to solve self-driving cars.

00:01:45.960 | - That's true.

00:01:46.880 | That's the programming spirit.

00:01:48.560 | - Of course.

00:01:49.400 | - I don't know what that is.

00:01:50.240 | - Of course, you know, it's like you're trying

00:01:51.080 | to overcome laziness.

00:01:52.200 | - Kind of true.

00:01:53.040 | - You end up putting in way more effort

00:01:54.080 | to overcoming laziness than just overcoming it.

00:01:56.520 | - 78,000 upvotes on that one?

00:01:59.320 | Jesus, okay.

00:02:00.680 | - Mine doesn't have the, oh wow.

00:02:02.040 | Oh, that's up there, I see.

00:02:03.400 | This just, yeah, this is why I trust programmers

00:02:07.680 | a lot more than I trust doctors.

00:02:10.200 | - You like Googling.

00:02:11.480 | You like--

00:02:12.480 | - Doctors think they have some, like, divine wisdom

00:02:16.120 | because they went to med school for a few years.

00:02:17.960 | I'm like, yeah, dude, I understand.

00:02:19.680 | I didn't Google this on WebMD.

00:02:20.960 | Actually, like, I read these three papers.

00:02:22.600 | Like, you know?

00:02:24.440 | - Yeah.

00:02:25.280 | - I think I know.

00:02:26.100 | I remember once when I was, let's say, drug shopping

00:02:30.080 | and one of the doctors referred to Adderall

00:02:32.720 | as a Schedule B drug.

00:02:34.480 | And I can't correct this guy.

00:02:35.720 | That's drug-seeking behavior to an extreme.

00:02:38.320 | Schedule II, it's not Schedule B.

00:02:40.360 | But, you know, like, doctor, you don't know that?

00:02:43.080 | The DEA gave you a pad to prescribe

00:02:45.040 | and you don't know about the drug scheduling system?

00:02:46.600 | Like, like--

00:02:47.840 | - Yeah.

00:02:49.080 | Yeah, programmers don't have that.

00:02:50.600 | They just outsource.

00:02:51.840 | Do you think that becomes a problem?

00:02:54.080 | If you outsource the entirety of your knowledge

00:02:56.240 | to Stack Overflow?

00:02:57.480 | You write stuff from scratch pretty well.

00:03:00.040 | - Yeah, yeah, yeah.

00:03:00.880 | They asked me on Instagram, they were like,

00:03:03.520 | what's the integral of two to the X?

00:03:06.080 | And I'm like--

00:03:07.360 | - They'll look it up.

00:03:09.000 | - Well, the integral of E to the X is E to the X.

00:03:12.280 | So it's like something like that.

00:03:14.400 | - Yeah.

00:03:15.840 | Yeah, the same with Wolfram Alpha.

00:03:17.160 | You just start looking it up.
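
For reference, the integral asked about here follows from the fact George starts with. Rewriting the base as a power of e reduces it to the integral of an exponential; the only extra piece is the constant factor ln 2:

$$
2^x = e^{x \ln 2}
\quad\Longrightarrow\quad
\int 2^x \, dx = \int e^{x \ln 2} \, dx = \frac{e^{x \ln 2}}{\ln 2} + C = \frac{2^x}{\ln 2} + C
$$

So "something like that" is right up to the factor of 1 / ln 2.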

00:03:19.600 | - Uh.

00:03:20.440 | (both laughing)

00:03:24.880 | - Yeah.

00:03:25.720 | - That's pretty good.

00:03:26.920 | That's funny.

00:03:27.760 | That's the first--

00:03:29.200 | - That's the first funny one?

00:03:30.880 | - Yeah.

00:03:31.720 | - You do testing?

00:03:34.000 | You do like user, not user, unit testing?

00:03:38.360 | - Oh, we do unit testing, yeah.

00:03:39.480 | We have, our regression testing's gotten a ton better

00:03:42.600 | in the last year.

00:03:43.840 | Now we're pretty confident, if the tests pass,

00:03:46.640 | that at least it's gonna go into someone's car

00:03:50.520 | and do something, right?

00:03:52.120 | Versus in the past, like sometimes we'd merge stuff in

00:03:54.040 | and then one of the processes wouldn't start.

00:03:56.000 | - Right, so you think that kind of testing

00:03:57.280 | is still really useful for autonomous vehicles?

00:03:59.640 | - Not as much unit, but like some kind of,

00:04:01.840 | God, bad with words today, holistic,

00:04:05.040 | like integration testing.

00:04:06.480 | - Sure.

00:04:07.320 | The thing runs.

00:04:08.480 | - Yeah, that it runs, and that it runs in CI,

00:04:10.440 | and that it doesn't output crazy values.

00:04:12.480 | And then, actually, we wrote this thing

00:04:13.960 | called process replay, which just runs the processes

00:04:16.520 | and checks that they give the exact same outputs.

00:04:18.560 | And you're like, this is a useless test,

00:04:20.800 | because what if something changes?

00:04:23.040 | Well, yeah, then we update the process replay hash.

00:04:25.280 | This prevents you from, if you're doing something

00:04:27.520 | that you claim is a refactor, this will pretty much,

00:04:31.520 | it will check that it gives the exact same output

00:04:33.120 | every single time, which is good.

00:04:34.760 | And we run that over like 60 minutes of driving.
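
The idea described here can be sketched roughly as follows. This is a minimal illustration and not the actual openpilot code: `run_process`, `output_hash`, `replay_matches`, and the sample log are all hypothetical names and data, and the sketch assumes the process is deterministic and its outputs can be serialized to JSON.

```python
import hashlib
import json


def run_process(messages):
    """Hypothetical stand-in for a deterministic process: logged inputs -> outputs."""
    return [{"steer": round(m["angle"] * 0.5, 6)} for m in messages]


def output_hash(outputs):
    """Hash a canonical serialization of the outputs so any change is detected."""
    blob = json.dumps(outputs, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def replay_matches(process, messages, reference_hash):
    """Re-run the process on the same logged inputs and compare against the stored hash."""
    return output_hash(process(messages)) == reference_hash


# Hypothetical recorded inputs; a real log would cover many minutes of driving.
LOGGED_INPUTS = [{"angle": 1.0}, {"angle": -2.5}, {"angle": 0.25}]

# The reference hash is stored once and updated on purpose when behavior is meant to change.
REFERENCE_HASH = output_hash(run_process(LOGGED_INPUTS))

if __name__ == "__main__":
    print(replay_matches(run_process, LOGGED_INPUTS, REFERENCE_HASH))
```

A change that claims to be a pure refactor should leave the hash untouched; if the hash differs, either the refactor changed behavior or the reference has to be updated deliberately.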

00:04:37.600 | - But that one's a win for you, you get past the 10,

00:04:40.960 | the scale of 10. - Yep, yep, yep, yep, yep.

00:04:42.880 | That's an eight out of 10.

00:04:43.920 | - Eight out of 10. - Can't give anything

00:04:44.840 | at 10 out of 10, that's a good meme.

00:04:47.160 | - Breaks the rule.

00:04:48.080 | - Yeah, yeah, I mean, you know,

00:04:54.640 | this is why I don't use IDEs, that's just true.

00:04:57.120 | - It's just true, it's not even funny.

00:04:57.960 | - That's just true, it's not even funny.

00:04:58.800 | - But I gotta say, I mean, to me, it's kind of funny,

00:05:01.280 | 'cause it's so annoying, it is so annoying with IDEs.

00:05:04.440 | That's why I'm still using Emacs,

00:05:06.000 | is 'cause IDE, it's like Clippy on steroids.

00:05:08.480 | - It is, it is, of course.

00:05:10.440 | They're gonna make good ones, though.

00:05:11.400 | I always still wanna, this is a company idea I wanna do,

00:05:14.160 | and I've trained language models on Python.

00:05:18.200 | You can train these big language models on Python,

00:05:20.960 | and they'll do a pretty good job

00:05:22.280 | reading and checking for bugs and stuff.

00:05:23.920 | - So you hope for that, like machine learning approaches

00:05:26.440 | for improved IDEs? - Yeah.

00:05:28.640 | - That's very interesting, yeah.

00:05:30.040 | - Yeah, I think machine learning approaches for improved,

00:05:32.800 | look at Google's auto-suggest in Gmail.

00:05:35.880 | - Yeah.

00:05:36.720 | Oh yeah, even the dumb thing that it does

00:05:39.880 | is already awesome.

00:05:40.920 | So there's a lot of basic applications

00:05:43.400 | of GPT-3 language model that might be kind of cool.

00:05:46.520 | Yeah.

00:05:47.360 | - I think that the idea that you're gonna somehow

00:05:48.720 | get GPT-3s to replace customer service agents,

00:05:51.840 | I mean, really, this reflects so badly

00:05:53.440 | on customer service agents, and why don't you just

00:05:55.520 | give me access to whatever API you have?

00:05:57.480 | - I don't know, this one is...

00:06:01.080 | - Yeah.

00:06:01.920 | - I just saw a picture.

00:06:05.280 | I actually wondered if it works at all.

00:06:07.240 | (both laughing)

00:06:10.680 | - That's good.

00:06:11.520 | That's good.

00:06:13.200 | The last line is good.

00:06:16.080 | - Okay, thanks, bye.

00:06:17.600 | - No, I'd rather you mine cryptocurrency in my browser

00:06:20.240 | than use my speakers.

00:06:22.280 | - Yeah.

00:06:23.800 | I feel that way about pop-ups too.

00:06:26.240 | Just anything.

00:06:27.320 | - I mean, those you can at least block.

00:06:28.800 | It's like, you know what?

00:06:29.640 | The only thing I do want to autoplay is like YouTube.

00:06:32.160 | - Why do news sites start autoplaying their news?

00:06:37.000 | I don't get...

00:06:38.960 | I haven't been to a news site in years.

00:06:41.680 | I do not read the news.

00:06:43.160 | I still hear about the news 'cause people talk about it.

00:06:47.880 | I've never been to a news site.

00:06:49.000 | - Yeah, who goes there?

00:06:50.200 | Who goes there?

00:06:51.040 | - Who goes to these sites?

00:06:51.880 | And in fact, even on Hacker News, I'll always avoid it

00:06:54.280 | if the link is like a New York Times or CNN.

00:06:57.720 | I've read a few things, but yeah,

00:07:00.800 | those like the pop-uppy news sites.

00:07:02.600 | - Yeah, those websites are broken.

00:07:04.000 | Somebody needs to fix it.

00:07:04.840 | That's at the core why journalism is broken, actually,

00:07:07.120 | just 'cause like the revenue model is broken,

00:07:10.160 | but actually the interface.

00:07:11.520 | Like I have to, like I literally,

00:07:14.400 | I want to pay the New York Times and the Wall Street Journal.

00:07:16.880 | I want to pay them money, but like they make it

00:07:20.560 | like to where I have to click like so many times

00:07:25.200 | and they want to do--

00:07:26.040 | - And they have to have a login

00:07:26.960 | and have a New York Times password?

00:07:28.480 | - Yes, and also they're doing the gym membership thing

00:07:31.120 | where they try to make you forget

00:07:33.640 | that you signed up for them.

00:07:35.640 | So that they charge you indefinitely.

00:07:37.520 | Like just be up front and let it be five bucks.

00:07:41.160 | And then everybody would, it's your idea,

00:07:42.960 | which is like just make it cheap, accessible to everyone.

00:07:46.120 | Don't try to do any tricks.

00:07:47.480 | Just make it a great product.

00:07:49.600 | - Yeah, people like that.

00:07:50.440 | - And everybody will love it, yeah.

00:07:52.000 | - Actually, what I want to see also,

00:07:53.520 | I've seen them start to exist,

00:07:55.160 | is ad block that also blocks all the recommendeds.

00:07:58.600 | I don't want trending topics on Twitter.

00:08:01.440 | Please get rid of it.

00:08:02.400 | These trending topics have zero relevance in my life.

00:08:04.840 | They're designed to outrage me and bait me.

00:08:07.080 | Nope, don't want it.

00:08:08.880 | - You know, one interesting thing I've used on Twitter,

00:08:12.120 | I had to turn it back on 'cause it was tough,

00:08:14.600 | is there's Chrome extensions people could try,

00:08:17.360 | is it turns off the display of all the likes

00:08:20.200 | and retweets and all that.

00:08:21.720 | So all numbers are gone.

00:08:23.680 | It's such a different, people should definitely try this.

00:08:26.260 | It's such a different experience.

00:08:27.560 | 'Cause I've realized that I judge the quality

00:08:30.080 | of other people's tweets.

00:08:31.160 | - By, of course.

00:08:32.000 | - By the number of likes.

00:08:33.440 | Like it's not, you know, basically if it has no likes,

00:08:37.920 | then I'll judge it versus one

00:08:39.220 | that has like several thousand likes.

00:08:41.240 | I'll just see it differently.

00:08:42.720 | And when you turn those numbers off, I'm lost.

00:08:45.720 | At first I'm like, what am I supposed to think?

00:08:47.600 | It's a beautiful thing.

00:08:49.960 | - That's right, but then how much is it manipulated?

00:08:52.040 | - Yeah, it totally is.

00:08:54.000 | But it's an interesting experience, worth thinking about.

00:08:56.520 | - I found that, so actually I do, I block,

00:08:58.320 | you can, I do it with ad block.

00:08:59.640 | I have like, like trending topics.

00:09:01.060 | Anything that's global and applicable to everybody,

00:09:03.360 | I definitely do not want.

00:09:05.000 | I do not want to know what's trending

00:09:06.320 | in the United States right now.

00:09:07.480 | It's always some like political agitprop or like some,

00:09:10.880 | it's agitprop a lot of the times.

00:09:13.200 | - But at the same time, like to push back slightly,

00:09:15.680 | for me, trending, like if there's a nuclear war

00:09:19.480 | that breaks out.

00:09:20.880 | - That's how they get you, that's how they get you.

00:09:22.600 | And I just trust that if nuclear war breaks out,

00:09:24.800 | someone's gonna come tell me.

00:09:25.640 | - A friend will text you?

00:09:26.480 | - A friend will text me, like, you know, yo.

00:09:27.720 | - Hey, are you in a shelter or what?

00:09:29.440 | - Right?

00:09:30.520 | - Yeah, okay.

00:09:31.640 | So if it's bad enough, you'll know.

00:09:33.200 | - Yeah, I did also try turning off

00:09:34.960 | YouTube's recommendations,

00:09:36.720 | but I realized that they do provide value to me.

00:09:39.260 | Like the tailor--

00:09:40.100 | - Customized to you.

00:09:41.080 | - Yeah, yeah.

00:09:42.680 | - As opposed to a general one, like a trending one, yeah.

00:09:45.280 | Now YouTube does a pretty good job.

00:09:48.200 | So this one is about how Stack Overflow can be really rough.

00:09:51.000 | Asking a question on Stack Overflow, be like.

00:09:54.060 | - No.

00:09:55.120 | (laughing)

00:09:55.960 | No, I've never asked a question on Stack Overflow.

00:09:57.480 | - You've never, yeah, it's rough.

00:09:59.280 | - I don't comment, okay?

00:10:00.880 | I've never, if someone,

00:10:02.080 | if there's a comment on the internet,

00:10:03.480 | I just want you to know that they probably

00:10:05.160 | do not reflect my values.

00:10:06.840 | - Well, I mean, there is some truth to that too.

00:10:10.600 | One of the documents that I make everyone,

00:10:12.800 | everyone at Comma has to read it,

00:10:13.920 | it's linked from the thing,

00:10:14.800 | is it's Eric Raymond and it's how to ask smart questions.

00:10:17.400 | - Yeah.

00:10:18.240 | - And it's just like, well, you know,

00:10:21.200 | the quality of the answer you get depends a lot

00:10:24.080 | on how you will phrase the question.

00:10:25.160 | To be fair, I think, yeah, the auto keyword is not,

00:10:27.320 | is a, I think Stack Overflow likes it

00:10:31.240 | where it's like, there is an answer.

00:10:34.040 | There's not really an answer to that.

00:10:35.760 | - Right.

00:10:36.600 | - That's a great Reddit thread.

00:10:38.420 | - But some of the most interesting questions

00:10:41.000 | are a little bit--

00:10:42.400 | - Ambiguous, yeah.

00:10:43.240 | - Ambiguous, yeah.

00:10:45.160 | - I do this in interviews, I ask ambiguous questions.

00:10:47.640 | And I just see how, I found that that's so much more useful

00:10:50.320 | for gauging candidates than like--

00:10:52.880 | - To see how they reason through things?

00:10:54.720 | - To see just like what they think of

00:10:56.440 | and like what language they use

00:10:57.980 | and whether they show familiarity or not.

00:11:00.880 | - What do you, by the way, just as a quick aside,

00:11:03.840 | is there any magic formula to a good candidate?

00:11:06.480 | - Intelligence and motivation.

00:11:08.760 | - Intelligence and motivation, yeah.

00:11:10.680 | Motivation, passion, yeah.

00:11:12.060 | And you can see it through just conversation?

00:11:16.500 | I always wish there was a test that you could just online,

00:11:20.320 | like a form that you can fill out.

00:11:21.600 | - Well, okay, so there's the old problem of,

00:11:23.360 | testing for intelligence is easy because, okay,

00:11:25.300 | smart people can lie and say they're dumb,

00:11:27.080 | but dumb people can't really lie and say they're smart.

00:11:29.400 | But testing for motivation is much harder.

00:11:31.920 | So we do this, we have a micro-internship.

00:11:35.240 | We bring people in for two days paid

00:11:37.880 | to come work on something real in our code base.

00:11:41.640 | And we see like, you know,

00:11:43.240 | are you just doing this as like a job interview?

00:11:45.560 | If you're doing this as like,

00:11:46.400 | okay, I gotta like check this box,

00:11:47.920 | then yeah, you're not a good fit.

00:11:49.360 | - That's awesome, that's a good idea.

00:11:50.640 | And then you see, and then you can be picky

00:11:53.300 | and see like if there's a, is there a fire there like that?

00:11:56.960 | And we see, and like now we hire a lot of people,

00:11:59.840 | you know, who at least, like,

00:12:02.360 | if you're coming to work at Kama,

00:12:03.960 | you're kind of expected to have read the open pilot code.

00:12:06.880 | All right, like the best candidates are the ones

00:12:08.320 | who like read the code and ask questions about it.

00:12:10.640 | Like it's open source, right?

00:12:12.200 | The beauty of Comma is you don't need to be hired by us

00:12:15.640 | to start contributing.

00:12:16.740 | True.

00:12:24.260 | - Yeah, are you obsessed about resource constraints?

00:12:27.480 | - Some, not size, I don't care about bytes, but CPU.

00:12:32.540 | - CPU.

00:12:33.380 | - Well, CPU is watts, and watts is bad.

00:12:35.620 | - Watts is bad.

00:12:36.460 | - Watts is, we gotta pump them somewhere

00:12:37.860 | where we're lowering the CPU temp.

00:12:39.860 | People complain about the Comma Two's overheating.

00:12:43.060 | We have a solution.

00:12:44.620 | We're lowering the CPU frequencies.

00:12:47.100 | We're gonna ship that in the next one.

00:12:48.340 | But don't worry, we've made the code

00:12:49.760 | more than efficient enough to cover for it.

00:12:51.900 | - Awesome, that's, yeah, that's one way to go.

00:12:55.220 | - Beautiful.

00:12:56.060 | - This is good.

00:12:57.900 | I mean, okay, five out of 10.

00:13:00.140 | - Which one?

00:13:01.100 | - My code doesn't work, let's change nothing.

00:13:02.700 | I do that all the time.

00:13:03.860 | - That's the weird thing, and it often works.

00:13:07.220 | It's running stuff again.

00:13:09.140 | I don't get it.

00:13:09.980 | I don't get how this world, this universe is programmed,

00:13:12.280 | but it seems to work.

00:13:14.060 | Just like restarting a computer, restarting the system.

00:13:16.820 | Like this audio setup, sometimes it's just like not working,

00:13:20.700 | and then I'll just shut it off and turn it back on,

00:13:22.980 | and it just works.

00:13:23.980 | I interpreted this meme totally differently.

00:13:27.020 | You interpreted it as in like, this is a good idea.

00:13:30.700 | I interpreted it as in, I find myself doing this all the time.

00:13:33.620 | - And this is dumb, yeah.

00:13:34.900 | - I just run it again.

00:13:35.740 | I know it's gonna give me the same answer,

00:13:37.260 | and I'm not gonna be happy with it, but like, you know.

00:13:39.500 | I found this, I did Advent of Code last December.

00:13:43.100 | It's, Advent of Code's great.

00:13:44.340 | It's a programming challenge every night

00:13:47.380 | at midnight for December.

00:13:49.380 | Yeah, I'm proud of myself,

00:13:50.220 | proud of how I did on leaderboard.

00:13:53.540 | Yeah, I found myself doing that all the time.

00:13:54.980 | I'm like, this has to be right.

00:13:56.340 | I don't understand.

00:13:57.600 | Yup.

00:14:01.500 | - This is like, this is kind of,

00:14:02.500 | you embody this in some sense.

00:14:04.140 | You're able to, I mean, at least I've seen you

00:14:08.420 | able to accomplish quite a lot

00:14:09.700 | in a very short amount of time.

00:14:11.220 | It's interesting.

00:14:12.300 | - Yeah, sometimes.

00:14:14.100 | I don't know, some of them, like people don't realize

00:14:15.780 | how much like I cheated at twitchslam.

00:14:18.300 | You know, like, okay.

00:14:19.980 | To be fair, I did write that SLAM from scratch on there.

00:14:22.300 | I did not have like a copy of it written,

00:14:24.220 | but I also spent like the previous six months studying SLAM.

00:14:28.740 | So like, you know, I had so much--

00:14:30.340 | - There's a base there, sure.

00:14:31.860 | - Going into it, whereas like with these other things,

00:14:34.580 | like I don't have anything going into it.

00:14:36.700 | And people are like, why isn't it like twitchslam?

00:14:38.180 | Well, you know.

00:14:39.960 | - So this one is just, you find this to be true?

00:14:45.460 | Is this funny at all?

00:14:46.660 | - I find it to be--

00:14:48.420 | - Is this a three out of 10?

00:14:51.100 | - Yeah, three out of 10.

00:14:52.300 | There's a lot of threes in there.

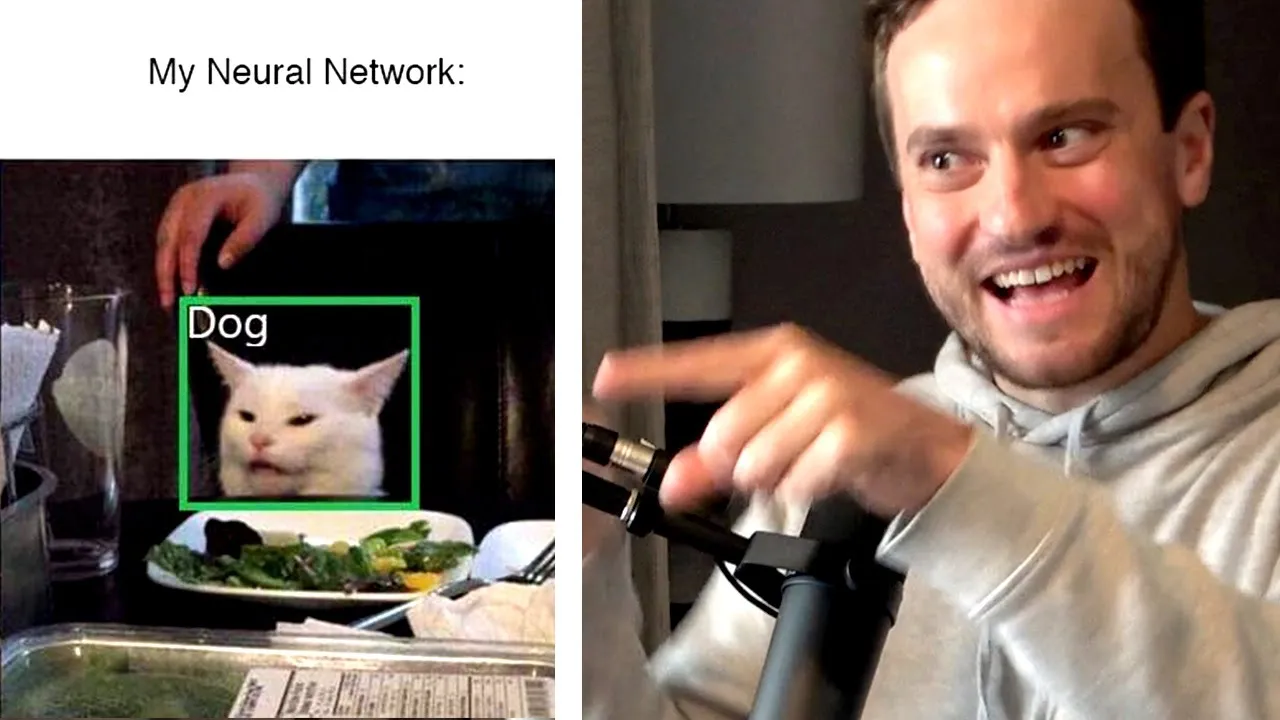

00:14:53.940 | - This is a machine learning question.

00:14:59.900 | - Yeah, true.

00:15:01.060 | - Yeah.

00:15:03.340 | I mean, this is the tension.

00:15:05.340 | I get this, I mean, sometimes this meme pisses me off

00:15:08.260 | a little bit, because people that like learn

00:15:11.420 | machine learning for the first time,

00:15:13.500 | and they're struggling with like object detection,

00:15:17.420 | and they think how is this supposed to achieve

00:15:18.980 | general intelligence.

00:15:20.920 | - That's 'cause you're just doing a first intro

00:15:23.120 | to what software 2.0, as opposed to like,

00:15:26.680 | when you scale things, I mean, you can achieve

00:15:29.240 | incredible things that would be very surprising.

00:15:32.680 | - I mean, it's the same thing with software 1.0.

00:15:34.520 | You know, they sit there and they write "Hello World,"

00:15:37.040 | and they're like, how is this thing supposed to fly rockets?

00:15:39.560 | - Right, and it does.

00:15:40.760 | The thing is, it does.

00:15:42.360 | - Just takes a whole lot more effort than you think.

00:15:46.480 | I don't know, but I'm very--

00:15:49.300 | - You're bullish on neural networks.

00:15:51.380 | - I'm bullish on neural networks.

00:15:52.260 | I think neural networks are definitely going

00:15:53.620 | to play a component.

00:15:55.240 | I'm bullish on what neural networks are,

00:15:56.980 | which is a beautiful, generic way.

00:15:59.300 | I was,

00:16:00.140 | one of our screening questions is,

00:16:05.060 | what's the complexity of matrix multiplication?

00:16:07.420 | And I was explaining this, like why fundamentally

00:16:12.660 | this is true, and then I went a little further with it.

00:16:14.220 | I'm like, you know, neural networks is just like

00:16:16.180 | matrix multiplications, interspersed with nonlinearities.

00:16:19.700 | And there is something, so,

00:16:21.600 | the fact that that's such an expressive thing,

00:16:25.300 | and it's also differentiable, it's also quasi-convex,

00:16:27.580 | like it's convex-y, which means convex optimizers

00:16:30.140 | work on it, like, yeah, well this isn't going anywhere.

00:16:33.660 | Of course not.
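
The "matrix multiplications interspersed with nonlinearities" description can be made concrete with a small sketch. It is plain Python with no libraries; the helper names, the choice of ReLU as the nonlinearity, and the tiny weight matrices are assumptions made only for illustration. The naive `matmul` below also bears on the screening question: it performs rows × inner × cols multiplications, which is O(n³) for square n × n matrices.

```python
def matmul(a, b):
    """Naive matrix product of two lists-of-rows: rows * inner * cols multiplications."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def relu(m):
    """Elementwise nonlinearity: negative entries become zero."""
    return [[max(0.0, v) for v in row] for row in m]


def forward(x, weights):
    """Multiply by each weight matrix, with a ReLU between layers (none after the last)."""
    for i, w in enumerate(weights):
        x = matmul(x, w)
        if i < len(weights) - 1:
            x = relu(x)
    return x


# Illustrative values only: one input row, a 2x2 hidden layer, a 2x1 output layer.
X = [[2.0, 1.0]]
W1 = [[1.0, -1.0], [0.5, 1.0]]
W2 = [[2.0], [3.0]]

if __name__ == "__main__":
    print(forward(X, [W1, W2]))  # → [[5.0]]
```

Without the ReLU, the two layers would collapse into a single matrix product and the same input would give 2.0 instead of 5.0, which is why the nonlinearities matter for expressiveness.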

00:16:34.500 | - Yeah, the question is, what are the limits

00:16:36.500 | of its surprising power?

00:16:39.620 | - I think that we haven't seen the loss function yet.

00:16:42.060 | We haven't seen the right loss function.

00:16:44.820 | You know, it's gonna be something fancy

00:16:46.020 | that looks, I think, like compression.

00:16:47.820 | And I haven't seen these things really applied.

00:16:51.140 | Maybe there was that one paper where they tried,

00:16:52.620 | like, surprise to solve Montezuma's Revenge.

00:16:55.520 | - Yeah, there's a lot of fun stuff in RL that

00:16:59.740 | reveals the power of these things

00:17:02.620 | that's yet to really be understood, I think.

00:17:05.060 | So, that's kind of an exciting one.

00:17:07.100 | Thank you for putting up with this nonsense

00:17:09.780 | of a paper-based meme review, George.

00:17:12.100 | (upbeat music)
