Using Semantic Search to Find GIFs

Chapters

0:00 Intro

0:17 GIF Search Demo

1:56 Pipeline Overview

5:33 Data Preparation

8:17 Vector Database and Retriever

12:37 Querying

15:42 Streamlit App Code

Transcript

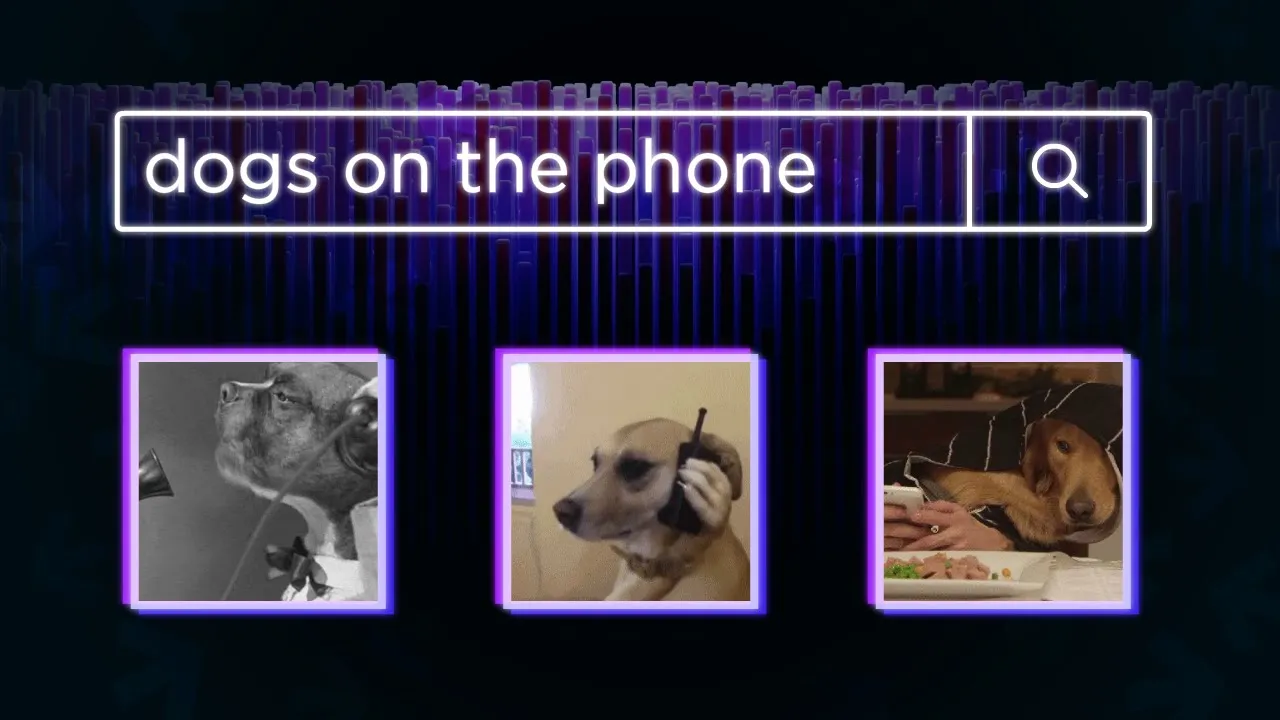

Today we are going to have a look at how we can use semantic search to intelligently search GIFs, and I think the best way for me to explain what I mean by that is to simply show you. So here we have a very simple Streamlit front end for our search pipeline, and I'm going to search for "dogs talking on the phone". Now, this is a natural language query.

There is some dependence on keywords here, or at least there will be some keywords that are shared, you know, we have "dogs" and "phone". But because we're using semantic search, we can try to avoid using these keywords. So if we go for something that has the same meaning but just doesn't include the word "dog", so "four-legged mammal", we'll see that we actually still return this one here. We've lost this one, which is a little further back now, but "four-legged mammal talking on the phone" still works pretty well. Now, that's pretty cool.

But what I want to do here is show you how you can do this. Okay, this is GIF search. Maybe it is actually pretty useful, particularly when you are searching for GIFs to use on social media and so on, but I think the real power of this is its potential. We can use the same pipeline to do a lot more than just GIF search.

We can adapt it super easily to financial documents, image search, video search, and a lot more. So let's just have a quick high-level view of what this pipeline actually looks like. We start with our query at the top, so "dogs on the phone" or "dogs talking on the phone". Okay, that is our query. It's going to come down here and go into what we call a retriever model. A retriever model is basically a transformer model. Let me label it "retriever".

Yeah, it's a transformer model that's specially trained to output meaningful vector representations of text. So from here, we're going to get this big long vector with plenty of numbers in it. I think the retriever we use is going to output a dimensionality of 374, if I'm not wrong, or something along those lines, but that's actually a very small vector; usually they are much larger. So we get that vector embedding, and it's going to go into a vector database. The vector database for us is going to be Pinecone. In Pinecone we have all of the GIFs you just saw, and each one of those has a little description. So the one you saw before might say something like "vintage dogs talking on the phone" or something along those lines. We take that description, pass it through the same retriever model we use here, and store it as a vector embedding inside Pinecone. Now, this has been done for more than a hundred thousand GIF descriptions. So then what we do is we look inside Pinecone.

So we have all of these different GIF descriptions, and, let me use a different color here, we introduce our query. So this vector here is our query, and we ask: which of these other items are the closest to our query? And maybe we say, okay, it's these two here. So what we would do is return those over here. We don't really care about the vectors themselves, but we care about the metadata that's been attached to those vectors. In that metadata we are going to have the URL where we can find that GIF file. So that comes along with the results, and we use it to display the GIF on our screen.
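To make that lookup concrete, here is a minimal sketch of the idea in plain Python. It is not how Pinecone works internally: the three-dimensional vectors, the descriptions, the example.com URLs, and the `nearest` helper are all made up for illustration, and cosine similarity is assumed as the measure of closeness.

```python
import math

# Toy "index": each item has a vector plus the metadata we actually care about.
# Real embeddings come from the retriever and are much longer than 3 numbers.
INDEX = [
    {"id": "0", "values": [0.9, 0.1, 0.0],
     "metadata": {"description": "vintage dogs talking on the phone",
                  "url": "https://example.com/dogs-phone.gif"}},
    {"id": "1", "values": [0.1, 0.9, 0.1],
     "metadata": {"description": "a cat sleeping on a sofa",
                  "url": "https://example.com/cat-sofa.gif"}},
    {"id": "2", "values": [0.7, 0.3, 0.2],
     "metadata": {"description": "a puppy holding a telephone",
                  "url": "https://example.com/puppy-telephone.gif"}},
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_vector, index, top_k=2):
    """Return the metadata of the top_k items closest to the query vector."""
    ranked = sorted(index,
                    key=lambda item: cosine(query_vector, item["values"]),
                    reverse=True)
    return [item["metadata"] for item in ranked[:top_k]]


# Stand-in for the embedded query "dogs talking on the phone".
query_vector = [0.9, 0.1, 0.05]
results = nearest(query_vector, INDEX)
for meta in results:
    print(meta["url"])
```

The point of the sketch is the last step: the vectors are only used for ranking, and what comes back to the app is the metadata, in particular the URL.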

So that's what you saw before. We just have some HTML, just an image tag, and we put the URL in there when we display the GIFs. That's what we are going to build, so let's take a look at how we can do that. You will be able to find all of this code in a link in the video description or, if you're reading this in the article, at the bottom of the article. So with that in mind, I'm not going to go really in depth on the code. I'm just going to go through it quite quickly and give you an idea of what we're actually doing. The first thing we need to do is install any of these libraries if we don't have them installed already. So we have our connection to Pinecone here.

We have sentence-transformers, which is our retriever model, tqdm, which is just a progress bar, and pandas, which is just for pandas DataFrames. Now, this one here is not really that important if you're building an app, but if you're doing this in a notebook you will want it, because it allows us to display HTML within our Jupyter notebook, which is obviously important if we want to see what GIFs we are returning. And then we obviously need a dataset.

So we have this dataset here, and if we go down we see it's a Tumblr GIF dataset: 100,000 animated GIFs and 120,000 sentences describing those GIFs. So there's a bit of an imbalance there. That means that in some cases, not all, there are multiple descriptions for a single GIF. We can go down here.

We can have a look at that in a moment. But this is the dataset structure: we have the URL and then we have a description, and we'll print these out in a moment. Let me show you this first. So we come down here, and this is an example, right?

So we have this image, which is from the URL, so I've pulled that in from the URL. You can see here we have the image tag, just plugging that in, and then we have the description. It pretty accurately describes what is happening in the GIF. Okay, now, we have those duplicate descriptions.

Let's have a quick look at those as well. So these are a few of those duplicates, and we can see that they are all the same GIF, as expected. They just have different descriptions, but the descriptions are all pretty accurate. It's not that they fail to describe the GIF. So in this case, keeping these duplicates makes sense, because we simply have multiple descriptions that are going to point to the same GIF. All of those descriptions are accurate.

So it's not really an issue. So we have our dataset now. Let's just have a quick look at our graph here. The first thing we need to do is initialize our retriever model and our vector database. As you can see on the left here, we are going to be indexing our data using our retriever and vector database before we begin querying anything. Basically, we're just going to take all that data and put it into our vector database. So the first thing I'm going to do is initialize the retriever model, which is this sentence transformer model. If you don't know anything about sentence transformers, there are a lot of videos on my YouTube channel and a lot of articles on Pinecone that cover these in a lot of detail, so I'd recommend having a look at those, because they are really interesting. Now, one important thing to know here is that our model is going to be outputting vectors with dimensionality 384. I think earlier I said 374; it's 384. That's important.
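As a rough sketch of that initialization step, something like the following would load a sentence-transformers model and confirm its output dimensionality. The model name `all-MiniLM-L6-v2` is an assumption: the transcript only says the model is a MiniLM model with 384 dimensions, and this is one public model that fits. The helper names `load_retriever` and `check_dimension` are hypothetical.

```python
EXPECTED_DIM = 384  # dimensionality stated in the transcript


def load_retriever(model_name="sentence-transformers/all-MiniLM-L6-v2"):
    """Load a sentence-transformers model (assumed model name, see above).

    The import is inside the function so this file can be read and imported
    without the library installed (pip install sentence-transformers).
    """
    from sentence_transformers import SentenceTransformer
    return SentenceTransformer(model_name)


def check_dimension(model, expected=EXPECTED_DIM):
    """Return the model's embedding size, failing loudly on a mismatch.

    The vector index must be created with exactly this dimensionality.
    """
    dim = model.get_sentence_embedding_dimension()
    if dim != expected:
        raise ValueError(f"model outputs {dim} dimensions, expected {expected}")
    return dim
```

Calling `check_dimension(load_retriever())` downloads the model on first use and should return 384 for the assumed model. Checking this once up front is cheaper than discovering a mismatch when the first batch of vectors is rejected by the index.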

We need to know that when we're initializing our vector database, which we can do like this. So we import pinecone. For the API key here, you do need to go to app.pinecone.io to get a free API key, and you just put it in here. With that, we can initialize our connection to Pinecone. Then all we need to do is pass an index name.

The index name can be anything you want; just make sure it makes sense. For me, this is a GIF search index. So I am going to create that index with the dimensionality 384, because that's what we got from the retriever model earlier. The metric is also important: it should align with what the sentence transformer retriever model has been fine-tuned to work with. And then we connect to the index we've just created.

Okay, so once we've done that, we move on to actually indexing. We've initialized the vector database and initialized the retriever. Now we use the retriever model to create our embeddings, the vectors that represent all of our GIF descriptions, and then we insert all of those into Pinecone. We do that in this loop.

It's pretty simple. We do it in batches of 64. Here I'm extracting a batch from the DataFrame that we have, I generate embeddings for that batch, and we retrieve the metadata for that batch. Do I have an example of the metadata here? Maybe I don't.

So that metadata will be in a format like this: a description field, with a description of what is happening in the GIF, and then also a URL field that holds the URL, right? Pretty simple. That's our metadata, and we have one of those for each record or item. Then we create our IDs.

The IDs need to be unique and they need to be strings, and that's what we're doing here. We're just going through, so the IDs are simply 0, 1, 2, 3, but obviously each one is a string. Then we pull all of that together and insert it into Pinecone. So we do that in batches of 64, and then at the end here I'm just checking that we have all the vectors in the index, which we do: we have 125,000 or just over. Okay, cool. We can also see the index fullness. We can go up to about a million vectors on the free tier of Pinecone.
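The batching logic described above can be sketched without any external services. This is an illustration under assumptions, not the notebook's code: `build_batches` and `fake_embed` are hypothetical names, the rows are plain dictionaries rather than a DataFrame, and the embedding function is a stand-in for the retriever. In the real pipeline each yielded batch would be handed to the vector database's upsert call.

```python
from typing import Callable, Dict, Iterator, List, Tuple

Record = Tuple[str, List[float], Dict[str, str]]


def build_batches(rows: List[Dict[str, str]],
                  embed: Callable[[List[str]], List[List[float]]],
                  batch_size: int = 64) -> Iterator[List[Record]]:
    """Yield batches of (id, vector, metadata) records ready for insertion."""
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        # One embedding per description in the batch.
        embeddings = embed([row["description"] for row in batch])
        # Metadata carries what we want back at query time.
        metadata = [{"description": row["description"], "url": row["url"]}
                    for row in batch]
        # IDs are the running row numbers, converted to unique strings.
        ids = [str(i) for i in range(start, start + len(batch))]
        yield list(zip(ids, embeddings, metadata))


def fake_embed(texts: List[str]) -> List[List[float]]:
    """Stand-in for the retriever: returns a tiny made-up vector per text."""
    return [[float(len(text)), 0.0] for text in texts]


# 150 made-up rows, so the last batch is smaller than 64.
rows = [{"url": f"https://example.com/{i}.gif",
         "description": f"gif number {i}"} for i in range(150)]
batches = list(build_batches(rows, fake_embed))
print([len(batch) for batch in batches])
```

Using the row position as the ID keeps IDs unique even when several rows describe the same GIF, which matters here because duplicate URLs are kept on purpose.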

So, with that many vectors in the index, we still have plenty of space left, which is obviously pretty useful. With that, we can move on to the right of our chart here, which is the querying step. Querying is what we're going to do all the time, whereas indexing we do once, unless we're adding more data, and then we might do it again. Otherwise, querying is the main task of our pipeline. Every time a user searches for something, we're going to be going through this pipeline.

To do that, again, it's pretty much the same as before. The query goes through to our retriever model, which creates what we call a query vector. We pass that to Pinecone and search for the most similar already-indexed GIF description vectors, or context vectors. From there, we return the most similar ones.

Within those records from Pinecone we also include the metadata, and that includes the URL to the original GIF. So then we can just use some simple HTML to display those GIFs to the user. Let's have a look at how we actually do that in code. I split it into two steps. There's a search part, which is encoding our query and also searching for the most relevant context vectors, and that's what we're doing here.

So we encode with our retriever, we query, and we return the top 10 most similar contexts. And yeah, we just append those to this list. You can see here that we're getting the metadata as well, which is also important. So here include_metadata needs to be true. Otherwise, we're not going to be returning any metadata and we can't extract those URLs. I'm just putting that in a search_gif function there.

Then we have this display_gif function. All this is is the really simple HTML that we are displaying. So we just have these div elements, and inside we have a figure and our image with the URL as its source.

Then, to perform a search, we just call search_gif with "dog being confused" in this case, and we display the results. We can see that we're getting all of these GIFs where a dog is confused, which I think is pretty cool. And we have loads of examples here; I just went through a load of them to see what we get. Oh, this one is quite specific. You can get really specific with this as well. So "fluffy dog being cute and dancing like a person", and then we get this one up at the top here. So yeah, I think that is pretty cool.

Now, if we want to replicate the Streamlit app that you saw before, you can; if you just want to test it, you can do that too. But to create this app is super simple. Obviously we're using Streamlit, and this is all we do. I'm going to zoom out a bit, so it might be kind of hard to read on your screen, but I just wanted to show the whole code, and again, you can just download this anyway, so it shouldn't really be an issue. This code is slightly outdated, actually.

So the model here is not the MPNet model anymore. It's actually using the MiniLM model, which is just a smaller model. And yeah, all we do is initialize Pinecone and initialize our retriever like we did in the notebooks. We have our HTML here, exactly the same as in the notebooks again, and then we just write our app. So we have the title, "AI-Powered GIF Search", and then "What are you looking for?"

That's just a text input that passes into query. Whenever query is not empty, we begin to search. So we encode the query as we did before to create our query vector, we retrieve the most similar contexts, then we extract the URL metadata from those, and then we display them. And that's literally it. It's super simple, and we get this really cool GIF search app from that.
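The app flow described above is short enough to sketch in full. This is an assumed layout, not the app's actual source: `render_results` and `run_app` are hypothetical names, the search and display functions are passed in so the empty-query logic can be tried without Streamlit, and using `st.title` for the heading is a guess at how the title is written.

```python
def render_results(query, search, display):
    """Return the HTML for a query, or None when the query is empty."""
    if not query or not query.strip():
        return None
    urls = search(query)   # encode the query, hit the index, extract URLs
    return display(urls)   # build the HTML with one image tag per URL


def run_app(search, display):
    """Streamlit front end: a title, a text input, and the rendered GIFs."""
    import streamlit as st  # imported here so the logic above has no dependency

    st.title("AI-Powered GIF Search")
    query = st.text_input("What are you looking for?", "")
    page = render_results(query, search, display)
    if page is not None:
        # Streamlit escapes HTML by default, so raw HTML must be allowed
        # explicitly for the image tags to render.
        st.markdown(page, unsafe_allow_html=True)
```

To use it, a script would build the real search and display functions, call `run_app(search, display)` at the top level, and be launched with `streamlit run` followed by the script's filename. Streamlit reruns the script on every input change, which is why a simple "query is not empty" check is enough to trigger the search.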

So that's it for this video. I hope this has been interesting. Thank you very much for watching, and I will see you again in the next one. Bye.