Stanford CS25: V1 I Decision Transformer: Reinforcement Learning via Sequence Modeling

Chapters

0:03:23 Reinforcement Learning

3:29 What Is Reinforcement Learning

4:34 Offline Reinforcement Learning

8:2 Cause for Why RL Typically Has Several Orders of Magnitude Fewer Parameters

9:59 Reason Why You Chose Offline RL versus Online RL

11:44 Causal Transformer

14:38 Output

15:28 Differences with How a Decision Transformer Operates as Opposed to a Normal Transformer

20:18 Partial Observability

32:47 Offline RL

44:36 How Much Time Does It Take To Train Decision Transformer

45:24 Percentile Behavior Cloning

58:58 The Keycard Environment

Transcript

So I'm excited to talk today about our recent work on using transformers for reinforcement learning. And this is joint work with a bunch of really exciting collaborators, most of them at UC Berkeley, and some of them at Facebook and Google. I should mention this work was led by two talented undergrads, Lili Chen and Kevin Lu.

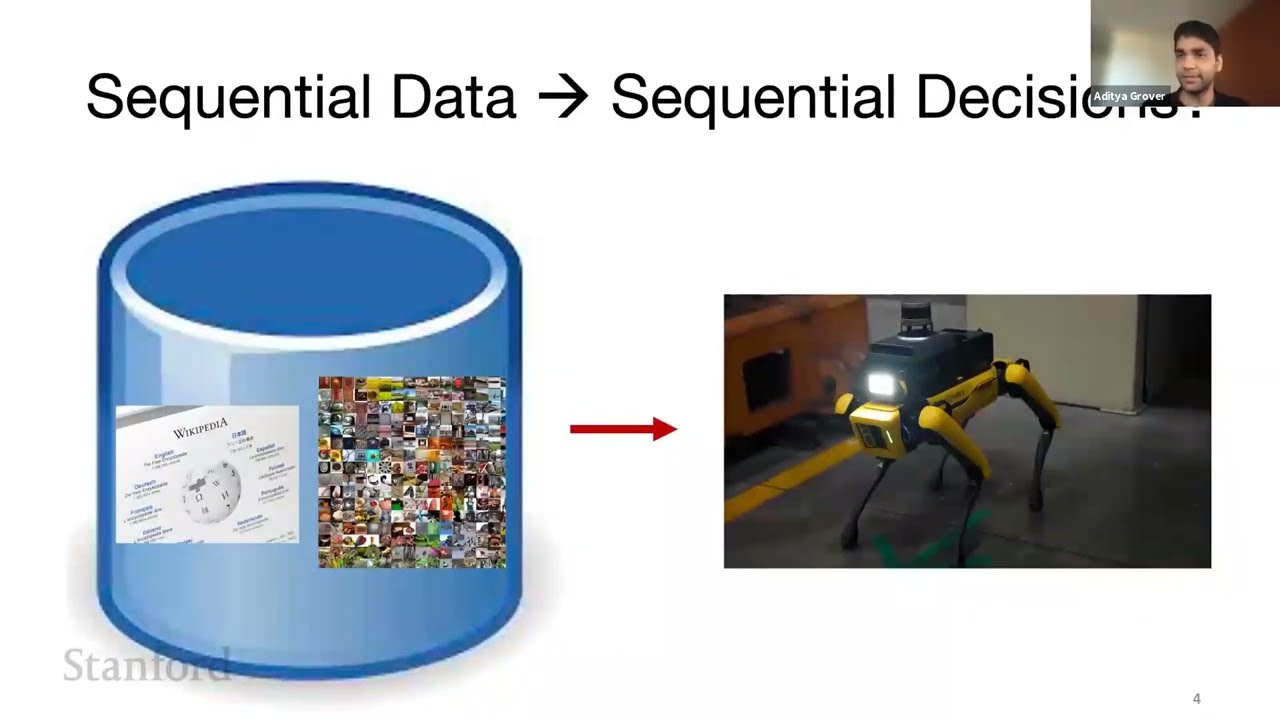

And I'm excited to present the results we had. So let's try to motivate why we even care about this problem. So we have seen in the last three or four years that transformers, since the introduction in 2017, have taken over lots and lots of different fields of artificial intelligence.

So we saw them having a big impact for language processing. We saw them being used for vision, using the vision transformer very recently. They were in Nature, solving protein folding. And very soon they might just replace us as computer scientists by automatically generating code. So with all of these advances, it seems like we are getting closer to having a unified model for decision-making for artificial intelligence.

But artificial intelligence is about more than just perception; it is also about using that perceptual knowledge to make decisions. And this is what this talk is going to be about. But before I go into actually thinking about how we will use these models for decision-making, here is a motivation for why I think it is important to ask this question.

So unlike models for RL, when we look at transformers for perception modalities, like I showed in the previous slide, we find that these models are very scalable and have very stable training dynamics. So you can keep-- as long as you have enough computation and you have more and more data that can be sourced, you can train bigger and bigger models and you'll see very smooth reductions in the loss.

And the overall training dynamics are very stable and this makes it very easy for practitioners and researchers to build these models and learn richer and richer distributions. So like I said, all of these advances have so far occurred in perception. What we'll be interested in this talk is to think about how we can go from perception, looking at images, looking at text, and all these kinds of sensory signals, to then going into the field of actually taking actions and making our agents do interesting things in the world.

And here, throughout the talk, we should be thinking about why this perspective is going to enable us to do scalable learning, like I showed in the previous slide, as well as bring stability into the whole procedure. So sequential decision making is a very broad area. And what I'm specifically going to be focusing on today is the one route to sequential decision making, that's reinforcement learning.

So just as a brief background, what is reinforcement learning? So we are given an agent who is in a current state, and the agent is going to interact with the environment by taking actions. And by taking these actions, the environment is going to return to it a reward for how good that action was, as well as the next state into which the agent will transition, and this whole feedback loop will continue on.

The goal here for an intelligent agent is to then, using trial and error-- so try out different actions, see what rewards they lead to-- learn a policy which maps your states to actions, such that the policy maximizes the agent's cumulative rewards over a time horizon. So you take a sequence of actions, and then based on the reward you accumulate for that sequence of actions, we'll judge how good your policy is.
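The interaction loop described above can be sketched in a few lines. This is only an illustration: the one-dimensional environment `step` and the two fixed policies are hypothetical stand-ins, not anything shown in the talk.

```python
def step(state, action):
    """Hypothetical 1-D environment: reward 1.0 for moving right, else 0.0."""
    next_state = state + action
    reward = 1.0 if action == 1 else 0.0
    return next_state, reward


def run_episode(policy, initial_state=0, horizon=5):
    """Roll a policy forward for `horizon` steps and return the cumulative reward."""
    state, total = initial_state, 0.0
    for _ in range(horizon):
        action = policy(state)                # policy maps state -> action
        state, reward = step(state, action)   # environment returns next state and reward
        total += reward
    return total


def always_right(state):
    return 1


def always_left(state):
    return -1


# A policy is judged by the reward it accumulates over the whole sequence.
print(run_episode(always_right), run_episode(always_left))
```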

This talk is also going to be specifically focused on a form of reinforcement learning that goes by the name of offline reinforcement learning. So the idea here is that what changes from the previous picture, where I was talking about online reinforcement learning, is that now, instead of actively interacting with the environment, you have a collection of logged data of interactions.

So think about some robot that's going out in the fields, and it collects a bunch of sensory data, and you've logged it all. And using that logged data, you now want to train another agent-- it could be another robot-- to then learn something interesting about that environment just by looking at the logged data.

So there's no trial-and-error component. Adding one is currently one of the extensions of this framework that would be very exciting. So I'll talk about this towards the end of the talk, why it's exciting to think about how we can extend this framework to include an exploration component and have trial and error.

OK, so now to go more concretely into what the motivating challenge of this talk was, now that we have introduced RL. So let's look at some statistics. So large language models have billions of parameters. And today, they have roughly 100 transformer layers. They're very stable to train using supervised learning style losses, which are the building blocks of autoregressive generation, for instance, or of masked language modeling, as in BERT.

And this is like a field that's growing every day. And there's a course at Stanford that we're all taking just because it has had such a monumental impact on AI. RL policies, on the other hand-- and I'm talking about deep RL-- the maximum they would extend to is maybe millions of parameters or 20 layers.

And what's really unnerving is that they're very unstable to train. So the current algorithms for reinforcement learning, they're built mostly on dynamic programming, which involves solving an inner loop optimization problem that's very unstable. And it's very common to see practitioners in RL looking at reward curves that look like this.

So what I really want you to see here is the variance in the returns that we tend to get in RL. It's really huge, even after doing multiple rounds of experimentation. And that, at its core, has to do with the fact that our algorithms, our learning objectives, need improvement so that the performance can be stably achieved by agents in complex environments.

So what this work is hoping to do is it's going to introduce transformers. And I'll first show in one slide what exactly that model looks like. And then we're going to go into deeper details of each of the components. I have a-- Quick question. Yeah, can I ask a question real quick?

Yes. And-- Thank you. What I'm curious to know is, what is the cause for why RL typically has several orders of magnitude fewer parameters? That's a great question. So typically, when you think about reinforcement learning algorithms, in deep RL in particular, so the most common algorithms, for example, have different networks playing different roles in the task.

So you have a network, for instance, playing the role of an actor, so it's trying to figure out a policy. And then there'll be a different network that's playing the role of a critic. And these networks are trained on data that's adaptively gathered. So unlike perception, where you will have a huge data set of interactions on which you can train your models, in this case, the architectures and even the environments, to some extent, are very simplistic because of the fact that we are trying to train very small components, the functions that we are training, and then bringing them all together.

And these functions are often trained in not super complex environments. So it's a mix of different issues. I wouldn't say it's purely just about the fact that the learning objectives are at fault, but it's a combination of the environments we use, the combination of the targets that each of the neural networks are predicting, which leads to networks which are much bigger than what we currently see, tending to overfit.

And that's why it's very common to see neural networks with much fewer layers being used in RL as opposed to perception. Thank you. Do you want to ask a question? Yeah. Yeah, I was going to. Is there a reason why you chose offline RL versus online RL? That's another great question.

So the question is, why offline RL as opposed to online RL? And the plain reason is because this is the first work trying to look at reinforcement learning. So offline RL avoids this problem of exploration. You are given a log data set of interactions. You're not allowed to further interact with the environment.

So just from this data set, you're trying to unearth a policy of what the optimal agent would look like. So it would. Right. If you do online RL, wouldn't that just give you this opportunity of exploration, basically? It would. It would. And what it would also do, which is technically challenging here, is that the exploration would be harder to encode.

So offline RL is the first step. There's no reason why we should not study how online RL can be done. It's just that offline RL provides a more contained setup where ideas from transformers will directly extend. Okay. Sounds good. So let's look at the model and it's really simple on purpose.

So what we're going to do is we're going to look at our offline data, which is essentially in the form of trajectories. So offline data would look like a sequence of states, actions, returns over multiple time steps. It's a sequence. So it's natural to think of directly feeding it as input to a transformer.

In this case, we use a causal transformer, as is common in GPT. So we go from left to right. And because this dataset comes with the notion of time step, causality here is much better founded than the general meaning that's used for perception. This is really causality, how it should be from the perspective of time.

What we predict out of this transformer are the actions conditioned on everything that comes before that token in the sequence. So if you want to predict the action at this T minus one step, we'll use everything that came at time step T minus two, as well as the returns and states at time step T minus one.

So we will go into the details of how exactly each of these are encoded. But essentially, this is in a one liner. It's taking the trajectory data from the offline data, treating it as a sequence of tokens, passing it through a causal transformer, and getting a sequence of actions as the output.

OK, so how exactly do we do the forward pass through the network? So one important aspect of this work is that we use states, actions, and this quantity called returns to go. So these are not direct rewards. These are returns to go, and let's see what they really mean.

So this is our trajectory that goes as input. And the returns to go are the sum of rewards starting from the current time step until the end of the episode. So really what we want is for the transformer to get better at using a target return-- this is how you should think of returns to go-- as the input in deciding what action to take.

This perspective is going to have multiple advantages. It will allow us to actually do much more than offline RL and generalize to different tasks by just changing the returns to go. And here it's very important. So at time step one, we will just have the overall sum of rewards for the entire trajectory.

At time step two, we subtract the reward we get by taking the first action, and then have the sum of rewards for the remainder of the trajectory. OK, so that's why we call it returns to go, like how many more rewards in accumulation you need to acquire to fulfill the return goal that you set in the beginning.
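As a small worked example of the definition above, here is a sketch that computes returns to go from a list of per-step rewards. The function name and the reward values are made up for illustration; no discounting is applied.

```python
def returns_to_go(rewards):
    """Return, for each time step, the sum of rewards from that step to the end."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each entry is the reward at t plus everything after it.
    for t in reversed(range(len(rewards))):
        running += rewards[t]
        rtg[t] = running
    return rtg


rewards = [1.0, 0.0, 2.0, 1.0]   # hypothetical episode
rtg = returns_to_go(rewards)
print(rtg)  # [4.0, 3.0, 3.0, 1.0]
```

The first entry is the total return of the trajectory, and each later entry equals the previous one minus the reward just received.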

What is the output? The output is the sequence of predicted actions. So as I showed in the previous slide, we use a causal transformer. So we'll predict in sequence the desired actions. The attention, which is going to be computed inside the transformer, will take in an important hyperparameter k, which is the context length.

We see that in perception as well here. And for the rest of the talk, I'm going to use the notation k to denote how many tokens in the past we would be attending over to predict the action at the current time step. OK, so again, digging a little bit deeper into code, there are some subtle differences with how a decision transformer operates as opposed to a normal transformer.
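To make the two ideas concrete, here is a minimal sketch of a causal mask and of truncating a history to the last k entries. Both helper names are hypothetical, and treating k as a count of list entries is an assumption made for this sketch.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def last_k(sequence, k):
    """Keep only the most recent k entries of the history (k must be >= 1)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return sequence[-k:]


mask = causal_mask(3)
history = [10, 11, 12, 13, 14]
print(mask)
print(last_k(history, 3))  # [12, 13, 14]
```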

The first is that here, the time step notion is going to have a much bigger semantics that extends across three tokens. So in perception, you just think about the time step per word, for instance, like in NLP, or per patch for vision. And in this case, we will have a time step encapsulating three tokens, one for the states, one for the actions, and one for the rewards.

And then we'll embed each of these tokens and then add the position embedding as is common in a transformer. And we feed those inputs to the transformer. At the output, we only care about one of these three tokens in this default setup. I will show experiments where even the other tokens might be of interest as target predictions.
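The three-tokens-per-time-step layout can be sketched as follows. The ordering (return to go, state, action) within a time step is an assumption consistent with predicting the action from the return and state that precede it; the function and the placeholder values are hypothetical.

```python
def interleave(returns_to_go, states, actions):
    """Flatten a trajectory into tokens, three per time step, sharing one index t."""
    tokens = []
    for t, (r, s, a) in enumerate(zip(returns_to_go, states, actions)):
        tokens.append(("return_to_go", t, r))
        tokens.append(("state", t, s))
        tokens.append(("action", t, a))
    return tokens


tokens = interleave([4.0, 3.0], ["s0", "s1"], ["a0", "a1"])
for kind, t, value in tokens:
    # All three tokens of a time step would receive the same position embedding.
    print(t, kind, value)
```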

But for now, let's keep it simple. We want to learn a policy, a policy that's trying to predict actions. So when we try to decode, we'll only be looking at the actions from the hidden representation in the pre-final layer. OK, so this is the forward pass. Now, what do we do with this network?

We train it. How do we train it? Sorry, just a quick question on semantics there. If you go back one slide, the plus in this case, the syntax means that you are actually adding the values element-wise and not concatenating them. Is that right? That is correct. OK, cool. So let me check.

Thanks. OK, so what's the loss function? Follow up on that. I thought it was concatenated. Why are we just adding it? Sorry. Can you go back? Yeah, I think it's a design choice. You can concatenate. You can add it. It leads to different functions being encoded. In our case, it was addition.

OK, why did you-- did you try the other one and it just didn't work, or why is that? Because I think intuitively, concatenating would make more sense. So I think both of them have different use cases for the functional encoding. One is really mixing in the embeddings for the state and basically shifting it.

So when you add something, if you think of the embedding of the states as a vector, and you add something, you are actually shifting it, whereas in the concatenation case, you are actually increasing the dimensionality of the space. So those are different choices, which are doing very different things.
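The difference between the two choices is easy to see on plain vectors. This sketch uses made-up three-dimensional embeddings: addition shifts the state embedding and keeps its size, while concatenation leaves it unshifted but doubles the dimensionality.

```python
def add_embeddings(token_emb, time_emb):
    """Element-wise addition: shifts the token embedding, same dimensionality."""
    return [x + y for x, y in zip(token_emb, time_emb)]


def concat_embeddings(token_emb, time_emb):
    """Concatenation: keeps both vectors intact, larger dimensionality."""
    return token_emb + time_emb


state_emb = [1.0, 2.0, 3.0]   # hypothetical state embedding
time_emb = [0.5, 0.5, 0.5]    # hypothetical time-step embedding

added = add_embeddings(state_emb, time_emb)
joined = concat_embeddings(state_emb, time_emb)
print(added, len(added))
print(joined, len(joined))
```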

We found this one to work better. I'm not sure I remember if the results were very significantly different if you would concatenate them, but this is the one which we operate with. But wouldn't there-- because if you're shifting it, if you have an embedding for a state, let's say you perform certain actions and you end up at the same state again, you would want these embeddings to be the same, however, now you're at a different time step.

So you shifted it. So wouldn't that be harder to learn? So there's a bigger and interesting question in that what you said is basically, are we losing the Markov property? Because as you said, if you come back to the same state at a different time step, shouldn't we be doing similar operations?

And the answer here is yes, we are actually being non-Markov. And this might seem very non-intuitive at first: why is non-Markovness important here? And I want to refer to another paper which came very much in conjunction with this, the Trajectory Transformer, that actually shows this in more detail. And it basically says that if you were trying to predict the transition dynamics, then you could have actually had a Markovian system built in here, which would do just as well.

However, from the perspective of trying to actually predict actions, it does have to look at the previous time steps, even more so when you have missing observations. So for instance, if you have the observations being a subset of the true state. So looking at the previous states and actions helps you better fill in the missing pieces in some sense.

So this is commonly known as partial observability, where by looking at the previous tokens, you can do a better job at predicting the actions that you should take at the current time step. So non-Markovness is on purpose. And it's not intuitive, but I think it's one of the things that separates this framework from existing ones.

So it will basically help you-- because RL usually works better on infinite horizon problems, right? So technically, the way you formulate it, it would work better on finite horizon problems, I'm assuming. Because you want to take different actions based on the history, based on given a fact that now you're at a different time step.

Yeah. Yeah. So if you wanted to work on infinite horizon, maybe something like discounting would work just as well to get that effect. In this case, we were using a discount factor of 1, or basically no discounting at all. But you're right. I think if we really want to extend it to infinite horizon, we would need to change the discount factor.

All right. Thanks. OK. So-- Quick question. Oh. I think it was just answered in chat, but I'll ask it anyways. I think I might have missed this, or maybe you're about to talk about it. The offline data that was collected, what policy was used to collect it? So this is a very important question, and it will be something I mentioned in the experiment.

So we were using the benchmarks that exist for offline RL, where essentially the way these benchmarks are constructed is you train an agent using online RL, and then you look at its replay buffer at some time step while it's training. So while it's like a medium sort of expert, you collect the transitions it's experienced so far and make that as the offline data.

It's something which is-- like our framework is very agnostic to what offline data that you use. So I've not discussed it so far. But it's something that in our experiments is based on traditional benchmarks. Got it. So the reason I ask isn't-- I'm sure that your framework can accommodate any offline data.

But it seems to me like the results that you're about to present are going to be heavily contingent on what that data collection policy is. Indeed. Indeed. And also-- so we will-- I think I have a slide where we show an experiment where the amount of data can make a difference in how we compare with baselines.

And essentially, we will see how this especially shines when there is small amounts of offline data. OK. Cool. Thank you. OK. Great questions. So let's go ahead. So we have defined our model, which is going to look at these trajectories. And now, let's see how we train it. So very simple, we are trying to predict actions.

We'll try to match them to the ones we have in our data set. If they are continuous, using the mean squared error. If they are discrete, then we can use the cross-entropy. But there is something very deep in here for our research, which is that these objectives are very stable to train and easy to regularize because they've been developed for supervised learning.
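The two supervised losses named above can be written out directly. This is a plain-Python sketch for a single prediction, with made-up inputs; a real training setup would use a deep learning library's batched versions.

```python
import math


def mse_loss(predicted, target):
    """Mean squared error between a predicted and a logged continuous action."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)


def cross_entropy_loss(logits, target_index):
    """Negative log-probability of the logged discrete action under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target_index]


print(mse_loss([1.0, 2.0], [1.0, 4.0]))       # 2.0
print(cross_entropy_loss([0.0, 0.0], 0))      # log(2): two equally likely actions
```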

In contrast, what RL is more used to is dynamic programming style objectives, which are based on the Bellman equation. And those end up being much harder to optimize and scale. And that's why you see a lot of the variance in the results as well. OK. So this is how we train the model.

Now, how do we use the model? And that's the point about trying to do rollouts for the model. So here, again, this is going to be similar to doing autoregressive generation. There is an important token here, which is the returns to go. And what we need to set during evaluation-- presumably, we want expert-level performance, because that will have the highest returns.

So we set the initial returns to go, not based on our trajectory, because now we don't have a trajectory. We're going to generate a trajectory. So this is at inference time. So we'll set it to the expert return, for instance. So in code, what this whole procedure would look like is basically you set this returns to go token to have some target return.

And you set your initial state to one from the environment distribution of initial states. And then you just roll out your decision transformer. So you get a new action. This action will also give you a state and reward from the environment. You append them to your sequence, and you get a new returns to go.

And you take just the context length k, because that's what's used by the transformer to make predictions, and then feed it back to the decision transformer. So it's regular autoregressive generation, but the only key point to notice is how you initialize the transformer for RL. Sorry, I had one question here.
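The rollout procedure just described can be sketched as a loop. Everything here other than the loop structure is a hypothetical stand-in: `toy_model` replaces the trained transformer with a hand-written rule, and `toy_reset` / `toy_step` form a made-up environment. Treating k as a number of time steps is also an assumption.

```python
def tail(seq, n):
    """Return the last n items of seq (an empty list when n <= 0)."""
    return seq[-n:] if n > 0 else []


def rollout(model, env_reset, env_step, target_return, k, max_steps):
    """Autoregressive rollout conditioned on a target return."""
    state = env_reset()
    rtgs, states, actions = [target_return], [state], []
    total = 0.0
    for _ in range(max_steps):
        # The model only sees the most recent k time steps of context.
        action = model(tail(rtgs, k), tail(states, k), tail(actions, k - 1))
        state, reward, done = env_step(state, action)
        total += reward
        actions.append(action)
        rtgs.append(rtgs[-1] - reward)  # new returns to go: subtract the reward received
        states.append(state)
        if done:
            break
    return total, rtgs


def toy_reset():
    return 0


def toy_step(state, action):
    next_state = state + action
    return next_state, float(action), next_state >= 3


def toy_model(rtgs, states, actions):
    # Stand-in for the transformer: act only while some return is still wanted.
    return 1 if rtgs[-1] > 0 else 0


print(rollout(toy_model, toy_reset, toy_step, target_return=3.0, k=2, max_steps=10))
print(rollout(toy_model, toy_reset, toy_step, target_return=2.0, k=2, max_steps=5))
```

Changing only `target_return` changes the behavior of the same model, which is the return-conditioning idea in miniature.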

How much does the choice of the expert target return matter? Does it have to be the mean expert reward, or can it be the maximum reward possible in the environment? Does the choice of the number really matter? That's a very good question. So we generally would set it to be slightly higher than the max return in the data set.

So I think the factor we use is 1.1 times. But I think we have done a lot of experimentation in the range, and it's fairly robust to what choice you use. So for example, for Hopper, the expert return is about 3,600, and we have found very stable performance all the way from 3,500, 3,400 to even going to very high numbers, like 5,000, it works.
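A sketch of that rule of thumb: take the best episode return in the offline data and scale it by the 1.1 factor mentioned above. The function name and the tiny dataset are hypothetical, and the sketch assumes the best return is positive (scaling a negative return by 1.1 would lower the target).

```python
def target_return_from_dataset(trajectory_rewards, factor=1.1):
    """Scale the maximum episode return found in the offline data by `factor`."""
    best = max(sum(rewards) for rewards in trajectory_rewards)
    return factor * best


dataset = [[1.0, 2.0], [3.0, 4.0, 3.0]]   # per-step rewards of two logged episodes
target = target_return_from_dataset(dataset)
print(target)  # roughly 11.0, i.e. 1.1 times the best return of 10.0
```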

Yeah. So however, I would want to point out that this is something which is not typically needed in regular RL, like knowing the expert return. Here we are actually going beyond regular RL in that we can choose a return we want, so we also actually need this information about what the expert return is at test time.

Sorry. There's another-- Yeah. Yes. Hi. So you said that you go beyond regular RL, but I'm curious, do you also restrict this framework to only offline RL? Because if you want to run this kind of framework in online RL, you'll have to determine the returns to go a priori.

So this kind of framework, I think it's kind of restricted to only offline RL. Do you think so? Yes. And I think someone asked this question earlier as well. Yes, I think for now, this is the first work, so we were focusing on offline RL where this information can be gathered from the offline data set.

It is possible to think about strategies on how you can even get this online. What you'll need is a curriculum. So early on during training as we're gathering data, you will set-- when you're doing rollouts, you will set your expert return to whatever you see in the data set, and then increment it as and when you start seeing that the transformer can actually exceed that performance.
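One way to picture such a curriculum is the update rule below. This is purely an illustrative sketch of the idea as described, not a method from the work; the function name, the fixed increment, and the numbers are all assumptions.

```python
def update_target(current_target, achieved_return, increment):
    """Raise the target return only once the agent has matched or exceeded it."""
    if achieved_return >= current_target:
        return current_target + increment
    return current_target


target = 100.0                        # hypothetical best return seen in the data so far
for achieved in [80.0, 105.0, 104.0, 125.0]:
    target = update_target(target, achieved, increment=10.0)
print(target)  # 120.0
```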

So you can think of specifying a curriculum from low to high for what that expert return could be for which you roll out the decision transformer. I see. Cool. Thank you. So yeah, this was about the model. So we discussed what the inputs to this model are, what the outputs are, what loss function is used for training this model, and how we use this model at test time.

There is a connection to this framework as being one way to instantiate what is often known as RL as probabilistic inference. So we can formulate RL as a graphical model problem where you have the states and actions being used to determine what the next state is. And to encode a notion of optimality, typically you would also have these additional auxiliary variables, O1, O2, and so on, which are implicitly encoding some notion of reward.

And conditioned on this optimality being true, RL is the task of learning a policy, which is the mapping from states to actions such that we get optimal behavior. And if you really squint your eyes, you can see that these optimality variables in decision transformers are actually being encoded by the returns to go.

So when we give a value that's high enough at test time during rollouts, like the expert return, we are essentially saying that conditioned on this being the mathematical quantification of optimality, roll out your decision transformer to hopefully satisfy this condition. So yeah, so this was all I want to talk about the model itself.

Can you explain that, please? What do you mean by optimality variables in the decision transformer? And how do you mean like return to go? Right. So optimality variables, we can think in the simplest context as, let's just say they were binary. So 1 is if you solve the goal, and 0 is if you did not solve the goal.

And in that case, you could also think of your decision transformer this way: at test time, when we encode the returns to go, we could set it to 1, which would basically mean that conditioned on optimality-- so optimality here means solving the goal as 1-- generate me the sequence of actions such that this would be true.

Of course, our learning is not perfect, so it's not guaranteed we'll get that. But we have trained the transformer in a way to interpret the returns to go as some notion of optimality. So if I'm interpreting this correctly, it's roughly like saying, show me what an optimal sequence of transitions looks like, because the model has learned both successful and unsuccessful transitions.

Exactly. Exactly. OK. And as we've seen some experiments, for the binary case, it's either optimal or non-optimal. But really, this can be a continuous variable, which it is in our experiments. So we can also see what happens in between experimentally. OK, so let's jump into the experiments. So there are a bunch of experiments, and I've picked out a few which I think are interesting and give the key results in the paper, but feel free to refer to the paper for an even more detailed analysis on some of the components of our model.

So first, we can look at how well it does on offline RL. So there are benchmarks for the Atari suite of environments and the OpenAI Gym. And we have another environment, Key-to-Door, which is especially hard because it contains sparse rewards and requires you to do credit assignment that I'll talk about later.

But across the board, we see that decision transformer is competitive with the state-of-the-art model-free offline RL methods. In this case, this was a version of Q-learning designed for offline RL. And it can excel, especially when there is long-term credit assignment where traditional methods based on TD learning would fail.

Yeah, so the takeaway here should not be that we should be at the stage where we can just simply substitute the existing algorithms for the decision transformer. But this is very strong evidence that this paradigm, which is building on transformers, will permit us to better iterate and improve the models to hopefully surpass the existing algorithms uniformly.

And there's some early evidence of that in harder environments, which do require long-term credit assignment. OK. Can I ask a question here about the baseline, specifically TD learning? I'm curious to know, because I know that a lot of TD learning agents are feedforward networks. Are these baselines, do they have recurrence?

Yeah, yeah. So I think the conservative Q-learning baselines here did have recurrence, but I'm not very sure. So I can check back on this offline and get back to you on this. OK. Thank you. Also, another quick question. So just how exactly do you evaluate the decision transformer here in the experiment?

So because you need to supply the returns to go, so do you use the optimal policy to get what's the optimal rewards and feed that in? Yes. So here we basically look at the offline data set that was used for training. And we say, whatever was the maximum return in the offline data, we set the desired target return to go as slightly higher than that.

So 1.1 was the coefficient we used. I see. So the performance-- sorry, I'm not really well-versed in RL, but how is the performance defined here? It's just like, is it how much reward you get actually from the-- Yes. Yes. So you can specify a target return to go, but there's no guarantee that the actual actions that you take will achieve that return.

So you measure the true environment return based on that. Yeah. I see. But then just curious, so are these performance numbers the percentage of reward you recover from the actual environment? Yeah. So these are not percentages. These are some way of normalizing the return so that everything falls between 0 and 100.
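The talk does not spell out the normalization. One common convention in offline RL benchmarks, shown here only as an assumed example, maps a random policy's return to 0 and an expert policy's return to 100. The reference returns below are made-up numbers.

```python
def normalized_score(raw_return, random_return, expert_return):
    """Assumed convention: 0 at the random-policy return, 100 at the expert return."""
    return 100.0 * (raw_return - random_return) / (expert_return - random_return)


# Hypothetical reference returns for some environment.
print(normalized_score(1800.0, random_return=0.0, expert_return=3600.0))  # 50.0
# Note: a return above the expert reference would score above 100 under this formula.
```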

Yeah. Yeah. I see. Then I just wonder if you have a rough idea about how much reward actually is recovered by decision transformers. Does it say, if you specify, I want to get 50 rewards, does it get 49? Or is this even better sometimes? That's an excellent question. And my next slide.

OK. I see. Thanks. So here we're going to answer precisely the question that was asked: if you feed in the target return, it could be expert or it could also not be expert. How well does the model actually do in attaining it? So the x-axis is what we specify as the target return we want.

And the y-axis is basically how well we actually do. For reference, we have this green line, which is the oracle, which means whatever you desire, the decision transformer gives it to you. So this would have been the ideal case. So it's a diagonal. We also have, because this is offline RL, in orange what was the best trajectory in the data set.

So the offline data is not perfect. So we just plot what is the upper bound on the offline data performance. And here we find that for the majority of the environments, there is a good fit between the target return we feed in and the actual performance of the model.

And there are some other observations which I wanted to take from the slide. Because we can vary this notion of reward, we can, in some sense, do multitask RL by return conditioning. This is not the only way to do multitask RL. You can specify a task via natural language, via a goal state, and so on and so forth.

But this is one notion where the notion of a task could be how much reward you want. And another thing to notice is occasionally these models extrapolate. This is not a trend we have been seeing consistently, but we do see some signs of it. So if you look at, for example, Seaquest, here the highest return trajectory in the data set was pretty low.

And if we specify a return higher than that for our decision transformer, we do find that the model is able to achieve it. So it is able to generate trajectories with returns higher than it ever saw in the dataset. I do believe that future work in this space trying to improve this model should think about how this trend can be made more consistent across environments, because this would really achieve the goal of offline RL, which is, given suboptimal behavior, how do you get optimal behavior out of it. But it remains to be seen how well this trend can be made consistent across environments.

Can I jump in with a question? Yes. So I think that last point is really interesting, and it's cool that you guys occasionally see it. I'm curious to know what happens. So this is all conditioned. You give as an input what return you would like, and it tries to select a sequence of actions that gives it.

I'm curious to know what happens if you just give it ridiculous inputs. Like, for example, here the order of magnitude for the return is like 50 to 100. What happens if you put in 10,000? Good question. And this is something we tried early on. I don't want to say we went up to 10,000, but we try really high returns that not even an expert would get.

And generally, we see the performance leveling off. So you can see hints of it in Half Cheetah and Pong as well, or Walker to some extent. And if you look at the very end, things start saturating. So if you exceed a certain threshold, which often corresponds with the best trajectory in the data set but not always, beyond that, everything gets similar returns.

So at least one good thing is it does not degrade in performance. It would have been a little bit worrying if you specified a return of 10,000 and it gave you a return which is 20 or something really low. So it's good that it stabilizes, but it's not that it keeps increasing on and on.

So there would be a point where the performance would get saturated. OK. Thank you. I was also curious. So usually, for transformer models, you need a lot of data. So do you know how much data you need? How does the performance of decision transformer scale with data?

Yeah. So we actually use the standard data, like the D4RL benchmarks for MuJoCo, which I think have on the order of a million transitions. For Atari, we used 1% of the replay buffer, which is smaller than the one we used for the MuJoCo benchmarks. And I actually have a result in the very next slide, which shows decision transformer being especially useful when you have little data.

So yeah. So I guess one question to ask-- Before you move on in the last slide, what do you mean, again, by return conditioning for the multitask part? Yeah. So if you think about the returns to go at test time, the one you have to feed in as the starting token, as one way of specifying what policy you want, why-- How is that multitask?

So it's multitask in the sense that because you can get different policies by changing your target return to go, you're essentially getting different behaviors encoded. So think about, for instance, a hopper, and you specify a return to go that's really low. So you're basically saying, get me an agent which will just stick around its initial state and not go into uncharted territory.

And if you give it a really, really high one, then you're asking it to do the traditional task, which is to hop and go as far as possible without falling. Can you qualify that as multitask? Because that basically just means that your return conditioning is a cue for it to memorize, which is usually one of the pitfalls of multitask.

So I'm not sure-- It's a task identifier, that's what I'm trying to say. So I'm not sure if it's memorization, because I think memorization with a fixed offline data set is very, very specific: if you had the same start state, and you took the same actions, and you had the same target returns, that would qualify as memorization.

But here at test time, we allow all of these things to change, and in fact, they do change. So your initial state would be different, and your target return could be a different scalar than any you ever saw during training. And so essentially, the model has to learn to generate that behavior starting from a different initial state, and maybe a different value of the target return than it saw during training.

If the dynamics are stochastic, then even if you memorize the actions, you're not guaranteed to get the same next state, so you would actually have a bad correlation with the performance. I also was very curious, how much time does it take to train this decision transformer in general?

So it takes a few hours, I want to say about four to five hours, depending on what quality GPU you use, but yeah, that's a reasonable estimate. Yep, got it, thanks. Okay, so actually, while doing this project, we thought of a baseline, which we were surprised is not there in previous literature on offline RL, but which makes a lot of sense, and we thought we should also think about whether decision transformer is actually doing something very similar to that baseline.

And the baseline is what we call percent behavioral cloning. So behavioral cloning, what it does is basically it ignores the returns and simply imitates the agent by just trying to predict the actions given the current states. This is not a good idea with an offline data set, which will have trajectories of both low returns and high returns.

So traditional behavioral cloning is commonly seen as a baseline in offline RL methods, and unless you have a very high quality data set, it is not a good baseline for offline RL. However, there is a version that we call percent BC, which actually makes quite a lot of sense.

And in this version, we filter out the top trajectories from our offline data set. What's top? The ones that have the highest rewards. You know the rewards for each transition, you calculate the returns of the trajectories and you take the trajectories with the highest returns and keep a certain percentage of them, which is going to be hyperparameter here.

And once you keep that top fraction of your trajectories, you then just ask your model to imitate them. Imitation learning, especially when it's used in the form of behavioral cloning, essentially uses supervised learning. It's a supervised learning problem. So you could actually also get supervised learning objective functions if you did this filtering step.
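
The filtering step described here can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the trajectory layout (a dict with `states`, `actions`, `rewards`) and the function names `top_percent_trajectories` and `to_bc_pairs` are assumptions made for the example.

```python
def top_percent_trajectories(trajectories, percent):
    """Keep the top `percent` (0 < percent <= 100) of trajectories by return.

    Each trajectory is assumed to be a dict with "states", "actions" and
    "rewards" lists of equal length (a hypothetical layout for this sketch).
    """
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    # The return of a trajectory is the sum of its per-transition rewards.
    ranked = sorted(trajectories, key=lambda t: sum(t["rewards"]), reverse=True)
    # Always keep at least one trajectory so the filtered set is never empty.
    keep = max(1, int(len(ranked) * percent / 100))
    return ranked[:keep]


def to_bc_pairs(trajectories):
    """Flatten trajectories into (state, action) pairs for supervised learning."""
    return [(s, a) for t in trajectories for s, a in zip(t["states"], t["actions"])]


# Toy data set: ten two-step trajectories whose returns are 1.0, 2.0, ..., 10.0.
dataset = [
    {"states": [i, i + 1], "actions": [i % 2, 1], "rewards": [float(i), 1.0]}
    for i in range(10)
]
top = top_percent_trajectories(dataset, percent=10)
pairs = to_bc_pairs(top)
```

The resulting `pairs` would then be fed to an ordinary supervised learner. The returns are used only for this preprocessing step and never enter the training loss.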

And what we find actually is that for the moderate and high data regimes, the decision transformer is very comparable to percent BC. So it's a very strong baseline, which I think all future work in offline RL should include. There's actually an ICLR submission from last week, which has a much more detailed analysis of just this baseline that we introduced in this paper.

And what we do find is that for low data regimes, the decision transformer does much better than percent behavioral cloning. So this is for the Atari benchmarks where, like I previously mentioned, we have a much smaller data set as compared to the MuJoCo environments. And here we find that even after varying the percentage hyperparameter, we are generally not able to get the strong performance that a decision transformer gets.

So 10% BC basically means that we filter out and keep the top 10% of the trajectories. If you go even lower, then this data set becomes very small, so the baseline would become meaningless. But even for the reasonable ranges, we never find the performance matching that of decision transformers for the Atari benchmarks.

Aditya, if I may. So I noticed in table 3, for example, which is not this table, but the one just before in the paper, there's a report on the CQL performance, which to me also feels intuitively pretty similar to the percent BC in the sense that you pick trajectories you know are performing well, and you try to stay roughly within the same kind of policy distribution and state space distribution.

I was curious, on this one, do you have a sense of what the CQL performance was relative to, say, the percent BC performance here? So that's a great question. The point is that even for CQL, you rely on this notion of pessimism, where you want to pick trajectories that you're more confident in and make sure the policy remains in that region.

So I don't have the numbers for CQL on this table, but if you look at the detailed results for Atari, then I think they should have CQL for sure, because those are the numbers we are reporting here. So I can tell you that the CQL performance is actually pretty good, and it's very competitive with the decision transformer for Atari.

So this TD learning baseline here is CQL. So naturally by extension, I would imagine it doing better than percent BC. Yeah. And I apologize if this was mentioned, I just missed it, but do you have the sense that this is basically like a failure of CQL to be able to extrapolate well, or sort of stitch together different parts of trajectories, whereas the decision transformer can sort of make that extrapolation between-- you have like the first half of one trajectory is really good, the second half of one trajectory is really good, and so you can actually piece those together with decision transformer, where you can't necessarily do that with CQL, because the path connecting those may not necessarily be well covered by the behavior policy.

Yeah. Yeah. So this actually goes to one of the intuitions, which I did not emphasize too much, but we have a discussion on the paper where essentially, why do we expect a transformer, or any model for that matter, to look at offline data that's suboptimal, and get a policy that generates optimal rollouts?

The intuition is that, as Scott was mentioning, you could perhaps stitch together good behaviors from suboptimal trajectories, and that stitching could perhaps lead to a behavior that is better than anything you saw in individual trajectories in your data set. It's something we find early evidence of in a small scale experiment on graphs, and that is really our hope as well, that this is something the transformer is really good at, because it can attend to very long sequences, so it could identify those segments of behavior which when stitched together would give you optimal behavior.

And it's very much possible that this is something unique to decision transformers, and something that CQL would not be able to do. Percent BC, because it's filtering out the data, is automatically limited and not able to do that, because the segments of good behavior could be in trajectories which overall do not have a high return.

So if you filter them out, you are losing all of that information. OK, so I said there is a hyperparameter, the context length K, and like with most of perception, one of the big advantages of transformers, as opposed to other sequence models like LSTMs, is that they can process very large sequences.

And here, at first glance, it might seem that being Markovian would have been helpful for RL, which also was a question that was raised earlier. So we did this experiment where we compared performance against a context length of K equals 1. And here, we had context lengths of 30 for most of the environments and 50 for Pong.
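
To make the role of K concrete, here is a small Python sketch of how a context window might be cut from a trajectory. It is an illustration under assumptions, not the authors' implementation: the helper name `context_window` and the tuple layout are hypothetical. Setting `k=1` gives the Markovian case used in the comparison, where the model sees only the most recent timestep.

```python
def context_window(returns_to_go, states, actions, k):
    """Return the last k timesteps as (return_to_go, state, action) tuples.

    If the trajectory is shorter than k, the whole trajectory is returned.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    steps = list(zip(returns_to_go, states, actions))
    return steps[-k:]


rtg = [3.0, 2.0, 1.0]
states = ["s0", "s1", "s2"]
actions = ["a0", "a1", "a2"]

markovian = context_window(rtg, states, actions, k=1)   # only the latest step
longer = context_window(rtg, states, actions, k=30)     # as much history as exists
```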

And we find that increasing the context length is very, very important to get good performance. OK, so far I've shown you that decision transformer is very simple: there was no slide that went into the details of dynamic programming, which is the crux of most RL.

This was just pure supervised learning in an autoregressive framework that was getting us this good performance. What about cases where this approach actually starts outperforming some of the traditional methods for RL? So to probe a little bit further, we started looking at sparse reward environments. And basically, we just took our existing MuJoCo environments, and then instead of giving it the information for reward for every transition, we fed in the cumulative reward at the end of the trajectory.

So every transition will have a zero reward, except the very end where you get the entire reward at once. So it's a very sparse reward scenario for that reason. And here, we find that compared to the original dense results, the delayed results for DT deteriorate a little bit, which is expected, because now you are withholding some of the more fine-grained information at every time step.
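
The delayed-reward transformation can be sketched as follows. This is an illustrative Python example with hypothetical helper names (`delay_rewards`, `returns_to_go`), assuming the undiscounted convention that the return to go at step t sums the rewards from step t to the end of the trajectory.

```python
def delay_rewards(rewards):
    """Move the whole trajectory return onto the final transition."""
    if not rewards:
        return []
    return [0.0] * (len(rewards) - 1) + [float(sum(rewards))]


def returns_to_go(rewards):
    """Undiscounted sum of rewards from each timestep to the end."""
    rtg = []
    running = 0.0
    for r in reversed(rewards):
        running += r
        rtg.append(running)
    rtg.reverse()
    return rtg


dense = [1.0, 2.0, 3.0]
delayed = delay_rewards(dense)        # all reward arrives at the last step

dense_rtg = returns_to_go(dense)      # decreases as reward is collected
delayed_rtg = returns_to_go(delayed)  # flat until the final transition
```

Under this convention the total return is unchanged, but the return-to-go token stays constant along the delayed trajectory, so it no longer signals progress step by step.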

But the drop is not too significant compared to the original DT performance here. Whereas for something like CQL, there is a drastic drop in performance. So CQL suffers quite a lot in sparse reward scenarios, but the decision transformer does not. And just for completeness, you also have the performance of behavioral cloning and percent behavioral cloning. Because they don't look at reward information, except that percent BC looks at it only for preprocessing the data set, these are agnostic to whether the environments have sparse rewards or not.

>> Would you expect this to be different if you were doing online RL? >> What's the intuition for it being different? I would say no, but maybe I'm missing out on a key piece of intuition behind that question. I think that because you're training offline, the next input will always be the correct action in that sense.

So you don't just deviate and go off the rails technically because you just don't know. So I could see how online would have a really hard cold start, basically, because it just doesn't know and it's just tapping in the dark until it maybe eventually hits the jackpot. >> Right, right.

I think I agree. That's a good piece of intuition out there, but yeah, I think here, because offline RL is really getting rid of the trial and error aspect of it, and for sparse reward environments, that would be harder. So the drop in DT performance should be more prominent there.

I'm not sure how it would compare with the drop in performance for other algorithms, but it does seem like an interesting setup to test DT on. >> Well, maybe I'm wrong here, but my understanding with the decision transformer as well is this critical piece that in the training, you use the rewards to go, right?

So is it not the sense that essentially like for each trajectory from the initial state based on the training regime, the model has access to whether or not the final result was a success or failure, right? But that's sort of a unique aspect of the training regime for decision transformers.

In CQL, my understanding is that it's based on sort of a per transition training regime, and so each transition is decoupled somewhat from what the final reward was. Is that correct? >> Yes. Although one difficulty which at first glance you might imagine the decision transformer having is that the initial token will not change throughout the trajectory because it's a sparse reward scenario.

So except for the very last token, where it will drop down to zero all of a sudden, this token remains the same throughout. But I think you're right that just feeding it in at the start, in a manner which looks at the future rewards that you need to get to, is perhaps one part of the reason why the drop in performance is not noticeable.

>> Yeah, I mean, I guess one sort of ablation experiment here would be if you change the training regime so that only the last trajectory had the reward, but I'm trying to think about whether or not that would just be compensated for by sort of the attention mechanism anyway.

And vice versa, right, if you embedded that reward information into the CQL training procedure as well, I'd be curious to see what would happen there. >> Yeah. >> And how it would go. >> Those are good experiments. Okay. So related to this, there's another environment we tested. I gave you a brief preview of the results in one of the earlier slides, so this is called the key-to-door environment, and it has three phases.

So in the first phase, the agent is placed in a room with the key. A good agent will pick up the key. And then in phase two, it will be placed in an empty room, and in phase three, it will be placed in a room with a door where it will actually use the key that it collected in phase one, if it did, to open the door.

So essentially, the agent is going to receive a binary reward corresponding to whether it reached and opened the door in phase three, conditioned on the fact that it did pick up the key in phase one. So there is this notion that you want to assign credit to an event that happened far in the past.

So it's a very challenging and sensible scenario if you want to test how good your models are at long-term credit assignment. And here, we tested it for different numbers of trajectories. So here, the number of trajectories basically says how often you would actually see this kind of behavior.

And the decision transformer and percent behavioral cloning both do much better than other models, which struggle at this task. There's a related experiment there, which is also of interest. So generally, a lot of algorithms have this notion of an actor and a critic. The actor is basically someone that takes actions conditioned on the states; think of a policy.

A critic is basically evaluating how good these actions are in terms of the cumulative sum of rewards in the long term. This is a good environment because we can see how well the decision transformer would do if it was trained as a critic. So here, what we did is instead of having the actions as the output target, what if we substituted that with the rewards?

So that's very much possible. We can again use the same causal transformer machinery to only look at transitions in the previous time steps and try to predict the reward. And here, we see this interesting pattern where in the three phases that we had in that key-to-door environment, we do see the reward probability changing very much in the way we expect.

So basically, there are three scenarios. So the first scenario, let's look at blue, in which the agent does not pick up the key in phase one. So the reward probabilities all start around the same, but as it becomes apparent that the agent is not going to pick up the key, the reward probability starts going down.

And then it stays very much close to zero throughout the episode because there is no way you will have the key to open the door in the future phases. If you pick up the key, there are two possibilities, which are essentially the same in phase two where you had an empty room, which is just a distractor to make the episode really long.

But at the very end, the two possibilities are one, that you take the key and you actually reach the door, which is the one we see in orange and brown here, where you see that the reward probability goes up. And there's this other possibility that you actually pick up the key, but do not reach the door.

In which case, again, you start seeing that the reward probability that's predicted starts going down. So the takeaway from this experiment is that decision transformers are not just great actors, which is what we've been seeing so far in the results from the optimized policy, but they're also very impressive critics in doing this long-term credit assignment where the reward is also very sparse.

So Aditya, just to be correct, are you predicting the rewards to go at each time step, or is this the reward at each time step that you're predicting? So this was the rewards to go. And I can also check-- my impression was in this part of the experiment, it didn't really make a difference whether we were predicting rewards to go or the actual rewards.

But I think it was returns to go for this one. Also, I was curious, so how do you get the probability distribution of the rewards? Is it just like you just evaluate a lot of different episodes and just plot the rewards? Or are you explicitly predicting some sort of distribution?

So this is a binary reward. So you can have a probabilistic outcome. Got it. Son, you have a question? Yeah. So generally, we call something that predicts the state value or state action value a critic. But in this case, you ask decision transformer to only predict the reward. So why should you still call it a critic?

So I think the analogy here gets a bit clearer with returns to go. Like, if you think about returns to go, it's really capturing that essence that you want to see the future rewards that-- Oh, I see. So you mean it's just going to predict the return to go instead of single-step reward, right?

Yeah. Yeah. OK. So if we're going to predict the returns to go, it's kind of counterintuitive to me. Because in phase one, when the agent is still in the key room, I think it should have a high return to go if it picked up the key. But in the plot, in the key room, the agent that picked up the key and the agent that didn't pick up the key have the same level of returns to go.

So that's quite counterintuitive to me. I think this is reflecting on a good property, which is that your distribution-- like, if you interpret returns to go in the right way, in phase one, you don't know which of these three outcomes are really possible. And phase one also, I'm talking about the very beginning, basically.

Slowly, you will learn about it. But essentially, in phase one, if you see the returns to go as 1 or 0, all three possibilities are equally likely. So if we try to evaluate the predicted reward for these possibilities, it should be the same, because we don't know what's going to happen in phase three.

Sorry, it's my mistake, because previously, I thought the green line was the agent which doesn't pick up the key. But it turns out the blue line is the agent which doesn't pick up the key. So yeah, it's my mistake. It makes sense to me. Thank you. Also, it was not fully clear from the paper, but did you do experiments where you're predicting both the actions and the rewards to go?

And does it-- can it improve performance if you're doing both together? So actually, we did some preliminary experiments on that, and it didn't help us much. However, I do want to, again, put in a plug for a paper that came concurrently, Trajectory Transformer, which tried to predict states, actions, and rewards, actually, all three of them.

They were in a model-based setup, where it made sense, also, to try to learn each of the components, like the transition dynamics, the policy, and maybe even the critic in their setup together. We did not find any significant improvements. So in favor of simplicity and keeping it model-free, we did not try to predict them together.

Got it. OK, so the summary: we presented decision transformers, which is a first work in trying to approach RL based on sequence modeling. The main advantage over previous approaches is that it's simple by design. The hope is that with further extensions, we will find it to scale much better than existing RL algorithms.

It is stable to train, because the loss functions we are using have been tested and iterated upon a lot by research in perception. And in the future, we also hope that because of these similarities in the architecture and the training with how perception-based tasks are conducted, it would also be easy to integrate them within this loop.

So the states, the actions, or even the task of interest, they could be specified based on perceptual-based senses. So you could have a target task being specified by a natural language instruction. And because these models can very well play with these kinds of inputs, the hope is that they would be easy to integrate within the decision-making process.

And empirically, we saw strong performance in the range of offline RL settings, and especially good performance in scenarios which required us to do long-term credit assignment. So there's a lot of future work. This is definitely not the end. This is a first work in rethinking how do we build RL agents that can scale and generalize.

A few things that I picked out, which I feel would be very exciting to extend. The first is multi-modality. So really, one of our big motivations with going after these kinds of models is that we can combine different kinds of inputs, both online and offline, to really build decision-making agents which work like humans.

We process so many inputs around us in different modalities, and we act on them. So we do take decisions, and we want the same to happen in artificial agents. And maybe decision transformers is one important step in that route. Multi-task, so I described a very limited form of multi-tasking here, which was based on the desired returns to go.

But it could be much richer in terms of specifying a command to a robot or a desired goal state, which could be, for example, even visual. So trying to better explore the different multi-task capabilities of this model would also be an interesting extension. Finally, multi-agent. As human beings, we never act in isolation.

We are always acting within an environment that involves many, many more agents. Agents become partially observable in those scenarios, which plays to the strengths of decision transformers being non-Markovian by design. So I think there are great possibilities for exploring even multi-agent scenarios, where the fact that transformers can process very large sequences compared to existing algorithms could again help build better models of other agents in your environment and act.

So, yeah, there are just some useful links in case you're interested. The project website, the paper, and the code are all public, and I'm happy to take any more questions. Okay. So as I said, thanks for the good talk. Really appreciate it. Everyone had a good time here. So I think we are near the class limit.

So usually I have a round of rapid fire questions for the speaker that the students usually know. But if someone is in a hurry, you can just ask general questions first before we stop the recording. So if anyone wants to leave earlier at this time, just feel free to ask your questions.

Otherwise, I will just continue on. So what do you think is the future of transformers in RL? Do you think they will take over-- so they've already taken over language and vision. So do you think for model-based and model-free learning, do you think you'll see a lot more transformers pop up in RL literature?

Yes. I think we'll see a flurry of work. If not already, there are so many works using transformers at this year's ICLR conference. Having said that, I feel that an important piece of the puzzle that needs to be solved is exploration. It's non-trivial. And my guess is that you will have to forego some of the advantages that I talked about for transformers in terms of loss functions to actually enable exploration.

So it remains to be seen whether those modified loss functions for exploration actually hurt performance significantly. But as long as we cannot cross that bottleneck, I do not want to commit that this is indeed the future of RL. Got it. Also, you think that something-- Wait.

Sorry. I have a follow-up question. Sure. I'm not sure I understood that point. So you're saying that in order to apply transformers in RL to do exploration, there have to be particular loss functions, and they're tricky for some reason? Yeah. Could you explain more? Like, what are the modified loss functions, and why do they seem tricky?

So essentially in exploration, you have to do the opposite of exploitation, which is non-aggregating. And there is nothing built into the transformer right now which encourages that sort of random behavior where you seek out unfamiliar parts of the state space. That is something which is built into traditional RL algorithms.

So usually you have some sort of entropy bonus to encourage exploration. And those are the sort of modifications which one would also need to think about if one were to use decision transformers for online RL. So what happens if somebody-- I mean, just naively, suppose I have this exact same setup, and the way that I sample the action is I sample epsilon greedily, or I create a Boltzmann distribution and I sample from that.
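
For reference, the two naive sampling schemes named in the question can be sketched in Python as below. This only illustrates the standard techniques over a list of per-action scores. The function names are hypothetical, and nothing here is taken from the decision transformer code or shown to work with it.

```python
import math
import random


def epsilon_greedy(scores, epsilon, rng):
    """With probability epsilon pick a uniformly random action, else the argmax."""
    if rng.random() < epsilon:
        return rng.randrange(len(scores))
    return max(range(len(scores)), key=lambda i: scores[i])


def boltzmann_probs(scores, temperature):
    """Softmax over scores / temperature (higher temperature means more random)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def boltzmann_sample(scores, temperature, rng):
    """Sample an action index from the Boltzmann distribution over scores."""
    probs = boltzmann_probs(scores, temperature)
    return rng.choices(range(len(scores)), weights=probs, k=1)[0]


rng = random.Random(0)
scores = [0.1, 0.9, 0.3]                              # hypothetical per-action scores
greedy_action = epsilon_greedy(scores, 0.0, rng)      # epsilon = 0 is purely greedy
probs = boltzmann_probs(scores, temperature=1.0)
sampled_action = boltzmann_sample(scores, 1.0, rng)
```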

I mean, just what happens? It seems that's what RL does. So what happens? So RL does a little bit more than that. It indeed does those kinds of things where it would change the distribution, for example, to be a Boltzmann distribution and sample from it. But it's also-- there are these-- as I said, the devil lies in the detail.

It's also about how it balances that exploration component with the exploitation. And it remains to be seen whether that is compatible with decision transformers. I don't want to jump the gun, but I would say, I mean, preliminary evidence suggests that the exact same setup is not directly transferable to the online case.

That's what we have found. Some adjustments have to be made, which we are still figuring out. So the reason why I ask is, as you said, the devil's in the details. And so someone naive like me might just come along and try doing what's in RL.

I want to hear more about this. So you're saying that what works in RL may not work for decision transformers. Can you tell us why? What pathologies emerge? What are those devils hiding in the details? I also remind you-- sorry, we are also over time, so if you're in a hurry, feel free to-- I'll send an email and follow up.

But to me, that's really exciting, and I'm sure that it's tricky. Yeah, I will just ask two more questions, and you can finish after this. So one is, do you think something like decision transformer is a way to solve the credit assignment problem in RL, instead of using some sort of discount factor?

Sorry, can you repeat the question? Oh, sorry. So I think usually in RL, we have to rely on some sort of discount factor to encode the rewards to go, something like that. But decision transformer is able to do this credit assignment without that. So do you think something like this work is the way we should do it, like we should, instead of having some discount, try to directly predict the rewards?

So I would go on to say that I feel that the discount factor is an important consideration in general, and it's not incompatible with decision transformers. So basically, what would change, and I think the code actually gives that functionality, is that the returns to go would be computed as the discounted sum of rewards.
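
As a rough illustration of that change, a discounted return to go can be computed with a single backward pass over the rewards. This Python sketch is not the released code: the function name `discounted_returns_to_go` and its `gamma` parameter are assumptions, with `gamma=1.0` corresponding to the undiscounted case.

```python
def discounted_returns_to_go(rewards, gamma=1.0):
    """Compute G_t = r_t + gamma * G_{t+1} for every timestep t."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return to go of the next step.
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        rtg[t] = running
    return rtg


rewards = [1.0, 1.0, 1.0]
undiscounted = discounted_returns_to_go(rewards)            # gamma = 1.0
discounted = discounted_returns_to_go(rewards, gamma=0.5)
```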

And so it is very much compatible. So there are scenarios where our context length is not enough to actually capture the long-term behavior we really need for credit assignment. Any traditional tricks that are used could be brought back in to solve those kinds of problems. Got it. Yeah. I also thought, when I was reading the decision transformer work, that the interesting thing is that you don't have a fixed gamma. Gamma is usually a hyperparameter, but you don't have a fixed gamma.

So do you think, can we also learn this thing? And could this also be, can you have a different gamma for each time step or something, possibly? That would be interesting, actually. I had not thought of that, but maybe learning to predict the discount factor could be another extension of this work.

Also do you think this decision transformer work, is it compatible with Q-learning? So if you have something like CQL, stuff like that, can you also implement those sort of loss functions on top of decision transformer? So I think maybe I could imagine ways in which you could encode pessimism in here as well, which is key to how CQL works.

And actually it is key to how most offline RL algorithms work, including the model-based ones. Our focus here deliberately was to go after simplicity, because we feel that part of the reason why the RL literature has been so scattered, if you think about the different subproblems that everyone tries to solve, has been that everyone has tried to pick up on ideas which are very well suited for that narrow problem.

Like for example, whether you're doing offline or you're doing online or you're doing imitation, you're doing multitask and all these different variants. And so by design, we did not want to incorporate exactly the components that exist in the current algorithms, because then it just starts looking more like an architecture change as opposed to a more conceptual change in thinking about RL as sequence modeling very generally.

Got it. Yeah. That sounds interesting. So do you think we can use some sort of TD learning objectives instead of supervised learning? It's possible, and maybe, as I'm saying, for certain settings, like online RL, it might be necessary. For offline RL, we were happy to see it was not necessary, but it remains to be seen more generally, for a transformer model or any other model for that matter encompassing RL more broadly, whether that becomes a necessity.

Got it. Yeah. Well, thanks for your time. This was great. Thank you. Bye-bye. Bye-bye.