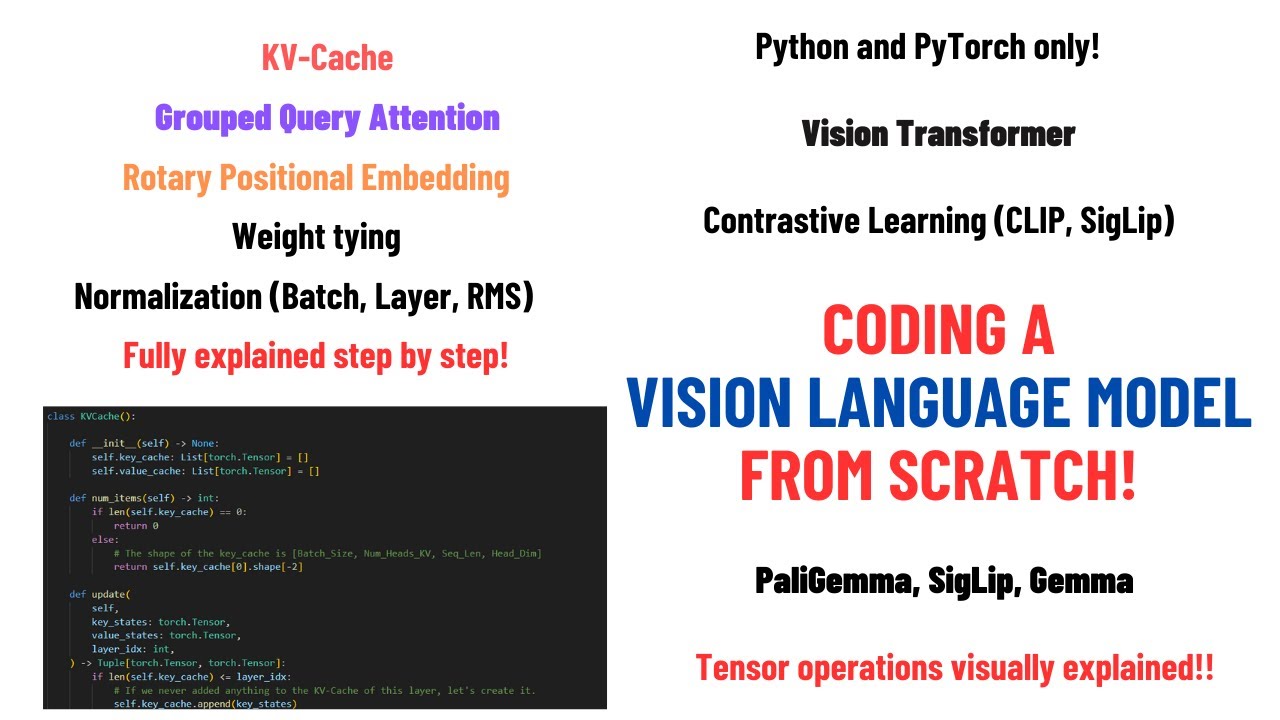

Coding a Multimodal (Vision) Language Model from scratch in PyTorch with full explanation

Chapters

0:00 Introduction

5:52 Contrastive Learning and CLIP

16:50 Numerical stability of the Softmax

23:00 SigLip

26:30 Why a Contrastive Vision Encoder?

29:13 Vision Transformer

35:38 Coding SigLip

54:25 Batch Normalization, Layer Normalization

65:28 Coding SigLip (Encoder)

76:12 Coding SigLip (FFN)

80:45 Multi-Head Attention (Coding + Explanation)

135:40 Coding SigLip

138:30 PaliGemma Architecture review

141:19 PaliGemma input processor

160:56 Coding Gemma

163:44 Weight tying

166:20 Coding Gemma

188:54 KV-Cache (Explanation)

213:35 Coding Gemma

232:05 Image features projection

233:17 Coding Gemma

242:45 RMS Normalization

249:50 Gemma Decoder Layer

252:44 Gemma FFN (MLP)

256:2 Multi-Head Attention (Coding)

258:30 Grouped Query Attention

278:35 Multi-Head Attention (Coding)

283:26 KV-Cache (Coding)

287:44 Multi-Head Attention (Coding)

296:00 Rotary Positional Embedding

323:40 Inference code

332:50 Top-P Sampling

340:40 Inference code

343:40 Conclusion

Transcript

Hello guys, welcome back to my channel. Today we are going to code a visual language model from scratch. Now, first of all, what do I mean by visual language model? And what do I mean by coding from scratch? The visual language model that we will be coding is called PaliGemma. It's a visual language model that came out from Google around two months ago (at least the weights did; the paper came out around two weeks ago). We will be coding it from scratch, which means we will be coding the vision encoder from scratch. You can see it here.

We will also code the linear projection, which is just a linear layer, and the language model itself, which is the transformer language model. We will see how to combine the embeddings of the image tokens with the text tokens. And of course we will see how to generate the output using the conditioning. So what is a visual language model?

First of all, a visual language model is a language model that can extract information from an image. Say we have an image like this one and a prompt like this one: "Where is the photographer resting?" The visual language model can work out where the photographer is resting by looking at the image, and then it generates a response.

The response is "in a hammock under a tree on a tropical beach."

Here are the topics for today. First, we will talk about the vision transformer, which is the vision encoder we'll use to extract information from the image. This vision transformer has been trained in a particular way called contrastive learning. So we will talk a lot about contrastive learning, because I want to review not only what contrastive learning is but also the history of how it works. The first well-known model is CLIP, which Google then transformed into SigLIP, so we will see both of these models.

Then we will code the language model itself, the Gemma language model, and how to combine the embeddings of the vision model and the language model; this part we'll do in code. We will also talk about the KV-Cache. We want to use this language model for inference, so we want to do it in an optimized way, and the best way to do that is with the KV-Cache. We will not only code it from scratch.

I will also explain step by step how it works. After that come the rotary positional encodings, which we need for the language model. Then the normalization layers, which appear both in the vision model and in the language model. We will see what batch normalization, layer normalization and RMS normalization are, and I will explain all the math behind them.

In this video I'm also using a slightly different approach to teaching, which is drawing. I will draw every single tensor operation that we do, especially in the attention mechanism. I don't want people to just look at the code and hope they get some idea of how it works. I want to show how each single tensor changes, by drawing it from scratch. I think this helps visualize what happens in the transformer model, especially during the attention mechanism. That way we know what each view operation and each reshape operation does to each tensor, and what each matrix multiplication does, so we can visualize what happens to the tensors themselves.

What are the prerequisites for watching this video?

Well, you should have a basic knowledge of the transformer. You don't have to be a master of it. It's better if you have watched my previous video on it, which will give you the background knowledge to understand this video. You should also have a basic knowledge of neural networks: at least you know what a loss function is and what a linear layer is.

And at least you know what backpropagation is. You don't need to know how it works or the mathematics behind it, just that we train models using backpropagation.

Having said that, guys, let's get to work. The first part I will explain is the vision transformer, this vision encoder. We will see what is contrastive about it and we will code it. Then we will move on to how to combine the embeddings of the image tokens and the text tokens.

The only part that we will not be coding is the tokenizer. I believe it's a separate topic that deserves its own video, so hopefully I will make another video about it. So let's start.

All right guys, before we go deep into each of these topics, let me give you a little speech. We will explore a lot of topics. For example, we will review every single operation that we do in the attention mechanism. We will look at it from the code point of view, but also from the concept point of view and from the tensor-operations point of view. Some topics you may already be familiar with, and that's perfectly fine. Others you may not be familiar with, and that's also perfectly fine, because I will explain each topic multiple times. For example, we will implement the attention mechanism at least twice. If you don't understand it the first time along with the code, you will get another chance with a different explanation. The same goes, more or less, for all the other topics.

For example, we will first introduce normalization in one part and then review it again later. We will do positional encoding one way and then see another type of positional encoding. So don't worry if you don't understand everything at the beginning, because I will review each topic multiple times anyway. The important thing is that you don't give up.

There may be some topics I can't explain because of lack of time. For example, I will not explain how convolutions work, because there are plenty of videos on that. In that case, pause the video, watch a five-minute video on how a convolution works, and then come back to this one. That's the approach I recommend.

The second thing: always write down all the code that I show you. Write it line by line, character by character, because that's the best way to learn.

So now let's get started with the first part: this contrastive vision encoder. It takes an image as input and converts it into an embedding, or rather into a series of embeddings. As we will see, there is one embedding for each block of pixels of the image.

So basically our image will be split into blocks of pixels like this, into a grid, and each block of this grid will be converted into an embedding, as you can see here. Each embedding is a vector of a fixed size. These embeddings will be concatenated with the token embeddings, because, as you know, each token is also converted into an embedding, which is a vector of a fixed size.
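Here is a minimal sketch of the concatenation described above, using made-up sizes. It assumes the image embeddings have already been projected to the same size as the token embeddings. It places the image tokens before the text tokens, which is the order PaliGemma uses.

```python
import torch

# Hypothetical sizes, chosen only for illustration.
batch_size = 1
num_patches = 9       # e.g. a 3x3 grid of image patches
num_text_tokens = 5   # length of the tokenized prompt
embed_dim = 16        # every embedding has the same fixed size

# Stand-ins for the (already projected) image embeddings and the text token embeddings.
image_embeds = torch.randn(batch_size, num_patches, embed_dim)
text_embeds = torch.randn(batch_size, num_text_tokens, embed_dim)

# Concatenate along the sequence dimension: image tokens first, then text tokens.
inputs_embeds = torch.cat([image_embeds, text_embeds], dim=1)
print(inputs_embeds.shape)  # torch.Size([1, 14, 16])
```

The transformer then sees one sequence of 14 vectors and does not need to know which ones came from pixels and which from text.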

They will be concatenated and sent to the transformer, which will attend to these image tokens as a condition for generating the text. This is called conditional generation. We will explore all of this later.

Let's talk about the vision encoder now. First we need to understand why it's called a contrastive vision encoder, and for that we need to understand what contrastive learning is. So let's go to another slide, this one here. For now we will treat the image encoder as a black box, and later we will turn this black box into something more concrete.

Imagine you go on the internet, for example to Wikipedia. When you see an image, there is almost always a description of what is inside that image. With a crawler, you can collect all of these images together with their descriptions, which produces a dataset of images and descriptions. Now imagine we have a text encoder, which is usually a transformer model, and an image encoder, which in most cases is a vision transformer. For now we treat both as black boxes. The image encoder takes an image as input and produces an embedding that represents the image. If you feed it a list of images, it produces a list of embeddings, one per image.

What is this embedding? It's a vector that captures most of the information in the image. We do the same with the text encoder. The text encoder is a transformer model that actually produces a series of embeddings (we'll see that later). For now, imagine it produces a single embedding for a given text. If you feed it a list of texts, it produces a list of embeddings, one per text.

Now go back to the dataset from before, the images with their corresponding descriptions. We feed the images to the image encoder and the descriptions to the text encoder. This gives us a list of image embeddings and a list of text embeddings. Now, what do we want these embeddings to be?

Of course, we want the embedding of the first image to be representative of that image, capturing most of its information. Likewise, we want the embedding of text number one to capture most of the information in that text.

With contrastive learning, though, we want more than that. We also want one particular property from these embeddings. The dot product of an image embedding with the embedding of its corresponding text should give a high value. The dot product of an image with a text that is not its own should give a low value.

So with contrastive learning, we take a list of images and a list of texts, one text for each image. Image number one corresponds to text number one, image number two to text number two, and so on. We encode them into lists of embeddings. Then we train the text encoder and the image encoder to produce embeddings such that each image with its own text gives a high dot product, and each image with a non-matching text (for example, image 2 with text 3) gives a low one.

In practice, we take the list of text embeddings and the list of image embeddings and compute all the possible dot products. That means image number one with text number one, image number one with text number two, image number one with text number three, and so on. It also means text number one with image number one, text number one with image number two, text number one with image number three, and so on. Then we need a loss function that pushes the dot product of each text with its own image to be high, and all the other combinations to be low. We do that using what is known as the cross-entropy loss.
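All the pairwise dot products above can be computed with a single matrix multiplication. In the sketch below, random tensors stand in for the encoder outputs, and the sizes are hypothetical.

```python
import torch

torch.manual_seed(0)
n, dim = 4, 8  # hypothetical batch size and embedding size

image_embeds = torch.randn(n, dim)  # one row per image
text_embeds = torch.randn(n, dim)   # one row per text; text i describes image i

# logits[i, j] = dot(image_i, text_j): all n*n combinations in one matrix product.
logits = image_embeds @ text_embeds.T

# Row i is image i against every text; column j is text j against every image.
assert torch.allclose(logits[0, 1], image_embeds[0] @ text_embeds[1])
# In general the matrix is not symmetric: image 0 vs text 1 differs from image 1 vs text 0.
print(logits[0, 1].item(), logits[1, 0].item())
```

Training should make the diagonal entries (matching pairs) large and every off-diagonal entry small.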

To understand why we use the cross-entropy loss, we need to look at how language models are trained. We will do that very briefly, so we don't get confused. A language model is trained with what is known as the next-token prediction task. Imagine we want to train a language model on the following sentence.

"I love pepperoni pizza." How do we train a language model on this? We give it a prompt, "I love pepperoni" (for now, consider the model a black box). The language model produces a series of embeddings, which are then converted into logits.

So what are the logits? The logits are a vector of scores, one for each token in the vocabulary, saying how likely the model thinks each token is to come next. They only become a distribution after we apply the softmax. For example, imagine this first number here corresponds to the token

"hello", the second number corresponds to, let's say, the token "pizza", the third to the token "car", the fourth to the token "dog", and so on. Which one do we want as the next token? We know the next token is "pizza". So we want the number for "pizza" to be high and all the other numbers to be low. We use the cross-entropy loss to make sure the next token is "pizza".
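Here is a small sketch of that idea with PyTorch's `F.cross_entropy`. The four-token vocabulary and the logit values are made up to match the example. The check shows that the loss equals the negative log of the softmax probability assigned to the correct token.

```python
import torch
import torch.nn.functional as F

# Hypothetical 4-token vocabulary matching the example: hello, pizza, car, dog.
vocab = ["hello", "pizza", "car", "dog"]
logits = torch.tensor([[1.0, 3.0, 0.5, -1.0]])  # raw scores from the model, shape (1, vocab)
target = torch.tensor([vocab.index("pizza")])    # label: "pizza" should be 1, all others 0

loss = F.cross_entropy(logits, target)

# Cross-entropy = softmax over the logits + negative log-likelihood of the correct token.
probs = torch.softmax(logits, dim=-1)
manual = -torch.log(probs[0, target[0]])
assert torch.allclose(loss, manual)
print(loss.item())
```

Minimizing this loss raises the logit for "pizza" relative to all the others.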

So how do we do that? The language model outputs a list of numbers, and we want it to produce this target: "pizza" should be one and all the others zero. To compare the two, the model's output has to be a distribution. So the cross-entropy loss first converts the output vector into a distribution with the softmax function. It then compares that distribution with the label and pushes the output toward the label. After training, the model will produce a distribution that gives "pizza" a high number and every other token a low number.

This is exactly what we do for contrastive learning. We can use the cross-entropy loss to force only one number in this column to be high and all the others low. In this row, likewise, only this number should be high and the rest of the row low. In this next row, we want the second item to be high and the others low, and so on. All of this is done with the cross-entropy loss.

Here is the pseudocode from the CLIP paper showing how to implement CLIP training with the contrastive loss. We have a list of images and a list of texts. We encode them, which gives a list of image embeddings and a list of text embeddings. Then we normalize them.

The normalization here is L2 normalization, which turns every dot product into a cosine similarity. We will talk about normalization in more detail later.

Next we compute all the possible dot products between the text embeddings and the image embeddings, which gives this grid here. Then we generate the labels. For the first row we want the first item to be the maximum, for the second row the second item, for the third row the third item. That's why the labels are generated with arange, which produces the numbers from zero to n minus one. For row zero we want item zero to be the maximum, for row one item one, and so on up to row n minus one, where item n minus one should be the maximum. Then we compute the cross-entropy loss between the output of the model, meaning the numbers it assigns to each of these dot products, and what we want.

That is, we compare against which of these numbers should be the maximum, and that is exactly what the labels encode. We do this both by rows and by columns, as you can see here. Then we sum the two losses and average them, giving the average loss over all the rows and all the columns. And this is how we do contrastive learning.
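Below is a PyTorch sketch in the spirit of that pseudocode. It is an illustration, not the paper's exact code. `logit_scale` is a plain float standing in for `exp(t)`, the learned temperature in the CLIP pseudocode. The two encoders are replaced by ready-made embedding tensors.

```python
import torch
import torch.nn.functional as F

def clip_loss(image_embeds: torch.Tensor, text_embeds: torch.Tensor, logit_scale: float = 1.0) -> torch.Tensor:
    """Symmetric cross-entropy over an (n, n) similarity matrix.

    Row i of image_embeds and row i of text_embeds are a matching pair.
    logit_scale stands in for exp(t), the learned temperature in the CLIP pseudocode.
    """
    # L2-normalize so every dot product is a cosine similarity.
    image_embeds = F.normalize(image_embeds, dim=-1)
    text_embeds = F.normalize(text_embeds, dim=-1)
    logits = image_embeds @ text_embeds.T * logit_scale  # logits[i, j] = sim(image_i, text_j)
    labels = torch.arange(logits.shape[0])               # [0, 1, ..., n-1]: the diagonal is correct
    loss_images = F.cross_entropy(logits, labels)        # softmax across each row (image -> texts)
    loss_texts = F.cross_entropy(logits.T, labels)       # softmax across each column (text -> images)
    return (loss_images + loss_texts) / 2

# Toy check: perfectly matched pairs vs. texts shifted by one position.
emb = torch.eye(4)
aligned = clip_loss(emb, emb, logit_scale=10.0)
shuffled = clip_loss(emb, emb.roll(1, dims=0), logit_scale=10.0)
print(aligned.item() < shuffled.item())  # True
```

Each `F.cross_entropy` call needs a full softmax over a row (or, via the transpose, over a column). This is the cost that the next part of the video discusses.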

Now, let's explore what the problem with CLIP is. The problem is simple: we are using the cross-entropy loss, and the cross-entropy loss compares two distributions. In language models, we turn the output logits into a distribution and compare it with the label, which tells us which item should be the maximum. We do the same here. We take this column and convert it into a distribution using the softmax function. The softmax function takes a vector as input and converts it into a distribution. What does that mean?

Take a vector like this one, a list of numbers. For it to be a distribution, every number must be non-negative (greater than or equal to zero), and all the numbers must sum to one. That's what a distribution is. The model just predicts some numbers, and it can't guarantee they are non-negative or that they sum to one. So we apply the softmax function to the model's output to turn it into a distribution, and then we can compare it with the labels. For example, the label for the second row is this: the second item should be one and every other item zero.

But the softmax function has a problem. Here is its expression. We exponentiate each item in the output vector, which could be a row or a column. Then we divide each exponentiated item by the sum of the exponentials of all the items, including itself. So for each row we first compute the exponential of every item, and then divide by the sum of all those exponentials. The problem is the exponential itself.

The exponential grows very fast, so as its argument grows, the result becomes huge. This is a problem for computers, because they store numbers in a fixed-size representation, such as 16 or 32 bits, so they cannot represent values all the way to infinity. An n-bit unsigned integer only goes up to 2^n − 1. Floating-point formats also have a largest finite value: about 3.4 × 10^38 for 32-bit floats and 65,504 for 16-bit floats. If the exponential gets too big, the result can no longer be represented in 32 (or 16) bits. And that's a problem.
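Here is a quick demonstration of the issue with a naive softmax, written directly from the formula. The input values are arbitrary. In float32, `exp(x)` overflows to infinity once x goes above roughly 88.7, and the division then produces `inf / inf = nan`.

```python
import torch

def naive_softmax(x: torch.Tensor) -> torch.Tensor:
    # Exponentiate every element, then divide by the sum of all the exponentials.
    exps = torch.exp(x)
    return exps / exps.sum()

small = torch.tensor([1.0, 2.0, 3.0])
p = naive_softmax(small)
print(p, p.sum())  # non-negative entries that sum to 1: a valid distribution

large = torch.tensor([1000.0, 1001.0, 1002.0])  # float32 by default
print(torch.exp(large))      # tensor([inf, inf, inf]): exp overflows float32
print(naive_softmax(large))  # tensor([nan, nan, nan]): inf / inf
```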

So we need to make the softmax function numerically stable. Whenever you hear the term "numerical stability" in computer science, it means making sure the numbers can be represented within 32 bits, 16 bits, or whatever range we are using. How do we make the softmax numerically stable?

Here is the trick. In the softmax, each item is exponentiated and then divided by the denominator, known as the normalization constant, which is the sum of the exponentials of all the items in the vector. This is a fraction, and you can multiply the numerator and the denominator of a fraction by the same number without changing it. So we multiply both by a constant called c. Any positive number can be written as the exponential of its logarithm, because the exponential and the logarithm are inverse functions. So we can write c as follows:

c = exp(log c). The product of two exponentials equals the exponential of the sum of their arguments, so we can rewrite the numerator like this. Because multiplication distributes over addition, we can also bring this exponential inside the summation in the denominator. Once it's inside, we apply the same rule again and merge the two exponentials into one by adding their arguments.

Now notice what happens if log c is negative. We are then subtracting something from the argument of each exponential, which makes it smaller. So what do we choose for log c? We choose minus the maximum value of the vector we are normalizing. Every argument of the exponential then becomes less than or equal to zero, so each exponential lies between zero and one and can never overflow. That makes the softmax numerically stable.

This tells us what it takes to compute the cross-entropy loss for each column and each row. First, the model outputs a list of text embeddings and a list of image embeddings, and we compute all the possible dot products. Then, for each column, we find the maximum value so we can subtract it before the softmax. Next we apply the exponential to each item, sum all the exponentials to get the normalization constant, and divide each number by that constant. So applying the cross-entropy loss involves a lot of computation.

It also makes parallelization hard. Imagine you want to spread the rows across different devices. Each device must hold an entire row in its memory, because it needs the whole row to compute the normalization constant. If you parallelize by column instead, each device must hold an entire column, because it has to find the maximum, compute the normalization constant, and then divide by it. So at any moment each device needs at least one full row or one full column, which prevents us from going to very large batch sizes. And this is a problem.
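Here is a minimal sketch of the max-subtraction trick. It computes softmax(x)_i = exp(x_i − m) / Σ_j exp(x_j − m), with m = max_j x_j, along a chosen dimension. Note the two reductions, one for the max and one for the sum. Both need the complete row (or column), which is why the whole row or column must sit on one device.

```python
import torch

def stable_softmax(x: torch.Tensor, dim: int = -1) -> torch.Tensor:
    # log(c) = -max(x): after subtracting the max, every exponent is <= 0,
    # so each exp() lies in (0, 1] and cannot overflow.
    x_max = x.max(dim=dim, keepdim=True).values     # reduction 1: needs the whole row/column
    exps = torch.exp(x - x_max)
    return exps / exps.sum(dim=dim, keepdim=True)   # reduction 2: the normalization constant

large = torch.tensor([1000.0, 1001.0, 1002.0])
print(stable_softmax(large))  # finite, and equal to softmax([0, 1, 2]) since softmax is shift-invariant

# On a similarity matrix: normalize by rows (dim=1) or by columns (dim=0).
scores = torch.tensor([[1000.0, 1.0], [2.0, 3.0]])
rows = stable_softmax(scores, dim=1)  # each row sums to 1
cols = stable_softmax(scores, dim=0)  # each column sums to 1
```

PyTorch's built-in `torch.softmax` already applies this kind of stabilization internally. The hand-written version is only meant to make the trick visible.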

The SigLIP paper points out that, because the softmax loss is asymmetric, the normalization is performed independently two times. First, to make the softmax numerically stable, we go through each vector and compute its maximum, and then we compute the softmax. But we have to compute the softmax both by rows and by columns. Why? Because this matrix is not symmetric.

As you can see, this row is image number one with all the texts, and this column is text number one with all the images. This item here is not equal to this item here. The first is image number one with text number two, and the second is image number two with text number one. Because the matrix isn't symmetric, you need to compute the softmax for every row and then again for every column before you can compute the loss. So the problem with CLIP is that this contrastive loss is very computationally expensive to calculate. That's why the SigLIP paper proposes replacing the cross-entropy loss with the sigmoid loss.

With SigLIP, we do the following. Again, an image encoder converts a list of images into a list of embeddings, one per image, and a text encoder converts a list of texts into a list of embeddings, one per text. Then we compute all the possible dot products: image one with text one, image two with text two, and also image one with texts two, three, four, five, and so on.

So we compute all the possible dot products between these embeddings. But we no longer treat the loss as a distribution over a row or a column. We don't say "in this column, I want this item to be the maximum" or "in this row, I want this item to be the maximum." Instead, we use binary classification with the sigmoid loss, treating each dot product independently of all the others. Each one becomes its own binary classification task: this item here should be one, and this item here should be zero.

This item here should be zero, and this one too, regardless of the values of the other items. The same goes for this one and this one, and so on, and we can do all of this with the sigmoid function. Here is its expression, σ(z) = 1 / (1 + e^(−z)). Its input z will be the dot product of our vectors, and its output is a number between zero and one. So what we can do is take each of these dot products.
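Here is a sketch of that per-pair sigmoid loss, modeled on the pseudocode in the SigLIP paper. It is an illustration, not the official implementation. In the paper, the temperature `t` and bias `b` are learnable scalars. Here they are fixed floats whose defaults roughly match the paper's initialization. Labels are +1 on the diagonal (matching pairs) and −1 everywhere else.

```python
import torch
import torch.nn.functional as F

def sigmoid_loss(image_embeds: torch.Tensor, text_embeds: torch.Tensor,
                 t: float = 10.0, b: float = -10.0) -> torch.Tensor:
    """Pairwise sigmoid loss: each (image, text) dot product is an independent binary task."""
    n = image_embeds.shape[0]
    zimg = F.normalize(image_embeds, dim=-1)
    ztxt = F.normalize(text_embeds, dim=-1)
    logits = zimg @ ztxt.T * t + b     # (n, n): every image/text combination
    labels = 2 * torch.eye(n) - 1      # +1 for matching pairs, -1 for all other pairs
    # log(sigmoid(label * logit)) per entry; no softmax and no row/column normalization,
    # so the sum can be split into blocks computed on different devices.
    return -F.logsigmoid(labels * logits).sum() / n

emb = torch.eye(4)
aligned = sigmoid_loss(emb, emb)
shuffled = sigmoid_loss(emb, emb.roll(1, dims=0))
print(aligned.item() < shuffled.item())  # True
```

Since the loss is just a sum over independent entries, any block of the matrix can be computed separately and the partial sums added together. This is the property that lets SigLIP scale the batch size.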

We run each one through a sigmoid, and the label is one for matching text-image pairs and zero for non-matching ones. So every dot product becomes an independent binary classification task. This lets us grow the batch size to millions of items and also parallelize. We can put this block here on one device and compute it independently of the block on this other device. Neither device needs a normalization constant or the maximum of a row or column, because every item is independent of the others.

Now you may be wondering: why are we even using a contrastive vision encoder? Why not use an ordinary vision encoder that takes an image and extracts some embeddings capturing its information? Why do we want it to be contrastive? Because we want these embeddings to do more than capture information about the image. We want them to be good representations that can be contrasted with, or used alongside, text embeddings. And this is exactly what we do in a vision language model.

We extract some image embeddings, which are vectors that each represent a patch of the image (we will see this later). Think of the image as divided into a grid: first, second, third, fourth, fifth, sixth patch, and so on. In this example we produce nine embeddings, nine vectors, each representing one patch of the image. We want these embeddings to represent the information in their patches, and also to be contrastable with text. That is what we do in a visual language model: we take a prompt and, loosely speaking, contrast it with the image embeddings to produce an output. It isn't really contrastive learning here, because we use the image embeddings as a condition (we will see later how these things are merged). Still, we want a vision encoder that was already trained to work with text, because its image representations are better suited to being used alongside text.

That's why we use a contrastive vision encoder. We also use them because they are cheap to train. To train a contrastive vision encoder, you just need to crawl billions of images from the internet, and each of them already comes with some kind of description. Wikipedia has a description for each image. More generally, images on the web often have HTML alt text, the alternative text shown when the image can't be displayed. So you almost always have access to some kind of description.

Of course, this data may be noisy, because we crawl it from the internet and it won't always be correct. Sometimes the description doesn't match the picture, or the crawler didn't pick up the right information. But because we train on billions and billions of images, the model eventually learns a good representation of them. The vision encoder we will use is basically a vision transformer.

So now let's talk about the vision transformer. It was introduced in the paper "An Image is Worth 16x16 Words", which trains a transformer as follows. But first, how does a transformer work?

We will see the attention mechanism in detail later. For now, just remember that the transformer is a sequence-to-sequence model: you feed it a sequence of embeddings, and it outputs a sequence of contextualized embeddings. To encode an image with the vision transformer, we split the image into patches, in this case 16 patches. This is the first group of pixels.

This is the second group of pixels, this is the group of pixels at the bottom right of the image, this one is at the top right, and so on. We extract information about each patch using a convolution, since a convolution extracts information from a group of pixels in the image. For example, the convolution of this patch produces this output.

The convolution of this patch produces this output, and so on. Then we flatten them, which loses the positional information. We no longer know whether a patch was at the top right or the bottom left; we just concatenate the patches one after another. We lose the two-dimensional structure and turn the grid of patches into a sequence of patches. Then we add the position information back, telling the model "this is patch number one", and so on. So how do we do that?

The embedding of each patch, the result of the convolution, is a vector. To it we add another vector that tells the model "this is patch number one", "this is patch number two", "this is patch number three", and so on. That's the plus operation you see here. Unlike in the vanilla transformer used for language models, these positional encodings are not computed with sinusoidal functions. They are learned. Each one is a vector that always gets added at the same position. Positional encoding number one always goes to the top-left patch, positional encoding number two to the second patch from the top left, and so on. Positional encoding number 16 always goes to the bottom-right patch. This gives the model some access to the 2D structure of the image: it learns that patch number 16 is always at the bottom right and patch number one is always at the top left.

We feed the result to the transformer. Adding a position embedding to each patch embedding still gives a series of embeddings. For now let's treat the transformer as a black box; later, when we code it, we will explore each of its layers. What the transformer does is contextualize these embeddings. At the input we have a series of embeddings, each representing a single patch. Through the attention mechanism, the transformer outputs another series of embeddings, but each one now captures information about the other patches as well as about itself. Now let's compare this with language models.

We use what is known as the causal mask. The first embedding should capture information only about itself; the second one only about itself and the previous one; the third about itself and the two previous ones; the fourth about itself and the three previous ones, and so on. This is what we do with language models. With vision transformers, we don't want the model to be autoregressive: we don't want each patch to encode information only about the previous patches, because in an image there is no autoregressive order.

It's not as if patch number 16 of an image depends only on the previous patches and patch number one does not depend on any other. Imagine an image in which the sun, or the light source, is here: then this part here will be illuminated, so the illumination here depends on what comes later in the image. In an image we don't have this autoregressive relationship. In text we do, because we write text in a fixed order, from left to right or from right to left, and each word we write depends on what we have written before. This doesn't happen with images.
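To make the difference concrete, here is a minimal NumPy sketch (not the code we will write for SigLip) of the two masking choices: a causal mask, as used by language models, and the all-visible mask that a vision transformer effectively uses. The helper names `causal_mask`, `full_mask` and `masked_softmax` are hypothetical, and the attention scores are set to zero only to show which positions each patch can see.

```python
import numpy as np

def causal_mask(n: int) -> np.ndarray:
    # True where token i may attend to token j: only j <= i (itself and the past)
    return np.tril(np.ones((n, n), dtype=bool))

def full_mask(n: int) -> np.ndarray:
    # Vision transformer: every patch may attend to every other patch
    return np.ones((n, n), dtype=bool)

def masked_softmax(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    # Disallowed positions get -inf so they receive zero attention weight
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

n = 4
scores = np.zeros((n, n))  # uniform scores, just to expose the visibility pattern
print(masked_softmax(scores, causal_mask(n))[0])  # patch 1 sees only itself
print(masked_softmax(scores, full_mask(n))[0])    # patch 1 sees all 4 patches
```

With the causal mask, row i spreads its attention only over positions 0..i; with the full mask, every row spreads it over all patches, which is what lets the illumination of one patch depend on a light source that appears "later" in the image.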

So these contextualized embeddings capture information about themselves but also about all the other embeddings. We use them to capture information about each patch, but also about how that patch is placed in the image; that's why we want them to be contextualized. We want each patch to include information about its position, which is given by the positional encoding, and also, through contextualization, about what surrounds it in the image.

This will become clearer when we code it; for now, I just want you to get an idea of what we are going to code. We are going to code a model that takes an image and applies a convolution to extract a series of embeddings, as you can see here.

We will add to them positional encodings, which are learned, and then apply the attention mechanism, which will actually be a series of layers of the transformer model that contextualize these embeddings. Then we will use these contextualized embeddings as input to the language model, which decodes the output.

So let's finally start coding. In this video I will use a slightly different approach: I will not type each line; I will paste each line and explain it step by step, because I want this video to be more about explanation than about typing. I want to use the code to explain what happens under the hood. Let's create our first file, modeling_siglip.py, and start by importing the things we need (I don't need Copilot). Then we create our first class, the SigLip configuration. Why do we need it? We will be using this vision encoder, and it has some configuration parameters. We need a configuration class because PaliGemma comes in different sizes. Let me put this here.

PaliGemma comes in different sizes, which means that each PaliGemma model has a different configuration for its vision encoder. Let's look at each field. The hidden size is the size of the embedding vector of the vision transformer that we are going to code. The intermediate size is the size of the linear layer that we use in the feed-forward network. The number of hidden layers is the number of layers of this vision transformer. The number of attention heads is the number of heads in the multi-head attention. The number of channels is how many channels each image has, which is three (RGB). The image size is there because PaliGemma comes, as I remember, in three sizes.

224, 448 and 896, something like this. The default values we put here are for PaliGemma 224, which of course supports images of size 224: if you provide any image, it is first resized to 224 by 224. Then there is the size of each patch.

Each image will be divided into patches, and each patch will be 16 by 16 pixels. The epsilon (eps) is a parameter for layer normalization; we will see it later. The attention dropout is another parameter that we will not be using: it's a dropout applied in the attention calculation. The number of image tokens indicates how many output embeddings this vision transformer produces, that is, how many image embeddings we will have for each image. Before, we saw that an image encoder converts an image into one single embedding that represents all the information about that image. In the case of the vision transformer, though, we can use all of its outputs, because, as we saw before, the vision transformer is a transformer model.

It takes as input a list of embeddings and outputs contextualized embeddings. Each of these contextualized embeddings will be one token of our image. So the image is not represented by one single embedding but by a list of embeddings, each representing a patch of the image while also carrying information about the other patches through the attention mechanism. We will see this later.
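A minimal sketch of such a configuration class, assuming a plain Python dataclass. The field names follow the description above; the default values are illustrative placeholders roughly sized like a ViT-Base encoder, not necessarily the values of any released PaliGemma checkpoint, and the class name `SiglipVisionConfigSketch` is hypothetical. Deriving `num_image_tokens` from the image and patch sizes when it is not given is a convenience of this sketch.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class SiglipVisionConfigSketch:
    hidden_size: int = 768          # size of each patch embedding vector (embed_dim)
    intermediate_size: int = 3072   # width of the hidden linear layer in the feed-forward network
    num_hidden_layers: int = 12     # number of transformer layers in the encoder
    num_attention_heads: int = 12   # heads in the multi-head attention
    num_channels: int = 3           # RGB
    image_size: int = 224           # images are resized to image_size x image_size
    patch_size: int = 16            # each patch is patch_size x patch_size pixels
    layer_norm_eps: float = 1e-6    # epsilon added to the variance inside layer normalization
    attention_dropout: float = 0.0  # kept for completeness; not used in this walkthrough
    num_image_tokens: Optional[int] = None  # output embeddings per image

    def __post_init__(self):
        if self.image_size % self.patch_size != 0:
            raise ValueError("image_size must be divisible by patch_size")
        if self.num_image_tokens is None:
            # one output embedding per patch: (image_size / patch_size) ** 2
            self.num_image_tokens = (self.image_size // self.patch_size) ** 2

config = SiglipVisionConfigSketch()
print(config.num_image_tokens)  # 196 for 224x224 images with 16x16 patches
```

Keeping every hyperparameter in one object means each module (embeddings, encoder, attention, MLP) can receive the same config and read only the fields it needs, which is the pattern the code follows from here on.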

This class is very basic: it's just the configuration of our SigLip encoder. Now let's start coding the structure of the vision transformer. Let me copy this here. A note on how to follow this video: I am copying code that I have already written, because I want to explain it rather than type it. This also lets me copy the comments and avoid mistakes while coding. But I recommend that you code it from scratch.

Take this video and type whatever I paste here. This is the best way to learn. It's like studying a mathematical proof: you should not just read the proof on paper, because even if you think it makes sense to you, it doesn't really until you write it by hand. When you type each of these lines yourself, your mind will ask: why am I typing this?

Why am I writing this? Why am I multiplying this number by this other number? Why am I calling this function? You question yourself while typing. That's why I recommend typing the code while I paste it; I paste it because otherwise this video would be 20 hours long. The first thing we create is the vision model, which is made up of a transformer and has a configuration. We take the pixel values of our image, which will be loaded with NumPy. When you load an image with NumPy, it is converted into an array of shape channels × height × width. But we can have a batch of images.

That's why we have a batch size here: the batch dimension. Our vision transformer converts this into a tensor of shape batch size × num_patches × embed_dim, where num_patches is the number of image tokens and each vector has a fixed dimension, called embed_dim here. So our vision model takes a batch of images and gives us a batch of lists of embeddings, one list per image, where each embedding is a vector of size embed_dim.

Now let's code the vision transformer, which is also very simple. Let's go step by step. The vision transformer is a PyTorch module to which we pass the configuration. We save embed_dim, which is the hidden size we saw before, i.e. the size of the embedding vector. First we need to extract the patches from the image, which will be done by this layer.

We will call it SigLip vision embeddings. Then we run the result through a list of transformer layers, the SigLip encoder, so called because it resembles the encoder of the Transformer: it's a series of transformer layers. Finally we have a layer normalization; we will see later how layer normalization works. The forward method is very simple: we take the pixel values, which are a batch of images, and convert them into embeddings, which means extracting the patches from these images.

Let's visualize it here. With the image embeddings, we take these images and run a convolution to extract patches. Then we flatten these patches and add the positional encodings; this part is done by the SigLip vision embeddings. Then we take these embeddings, which are patches plus positional encodings, and run them through the encoder, which is a list of transformer layers. So this part here is our encoder.

What is the encoder? It is a list of transformer layers. You can think of it as these layers here, one after another, each including a multi-head attention, a normalization, a feed-forward network and another normalization. In the vision transformer, the normalization is done before the feed-forward network and before the multi-head attention; that's the only difference. We call this series of layers the encoder because it resembles the encoder side of the Transformer. Then we have a layer normalization.

Now let's code the vision embeddings, which extract information about the patches. Where are the vision embeddings? Here. All right. The vision embeddings again take the configuration: each of these modules needs access to the configuration, because each extracts different information from it.

We have the embedding size, which is the size of the embedding vector, i.e. the hidden size. The image size is how big the image is, and the patch size is how big each patch we extract from the image is. In this case the patch size, as I remember, is 16, which means that this patch here is going to be 16 by 16 pixels. How do we extract these patches?

We do that through a 2D convolution, which takes as input the number of channels of the image, so three channels (RGB), and produces a number of output channels equal to the embedding size, i.e. the hidden size. Then there is the kernel size. Let's use the iPad to draw how a convolution works.

So we have an image Which is made up of let's say pixels. So suppose this is the grid of pixels And we have a lot of them Basically the convolution works like this imagine the kernel size is three by three So we take a three by three group of pixels.

We apply the convolution kernel to it. If you are not familiar with how convolutions work, I will not review them in detail here, but basically the kernel is a matrix: we multiply each number of this matrix by the value of the pixel it is applied to and sum everything up, and that produces one feature. Then we slide the kernel to the next group of pixels, and again, and again, and it produces many features in the output. However, as input we have three channels, which you can think of as three parallel images: one only red, one only green and one only blue. We run the kernel over all of these channels together and it produces features. How many kernels do we have?

That depends on how many output channels we want: for each output channel we have one kernel, which actually has three slices, one for each input channel. The stride tells us how we slide the kernel from one group of pixels to the next, and we are using a stride equal to the patch size, which is also the kernel size.

This means that we take the first group of, let's say, three by three pixels, then we skip three pixels and slide the kernel to the next group of three by three, so there is no overlap. We take this group here, then we slide to this group of pixels here, then to this one, so that there is no overlap.

So what we get is a list of features, each extracted from an independent patch of the image that we run the kernel on. Padding "valid" means that no padding is added. So this patch embedding extracts information from our image patch by patch, with no overlap between the patches.
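To see why a convolution with kernel size = stride = patch size and no padding is the same as "cut the image into non-overlapping patches and project each one linearly", here is a small NumPy sketch that computes the convolution directly with loops and compares it with a reshape-plus-matrix-multiply version. It is an illustration, not the PyTorch code of the video (there this is a single `nn.Conv2d(num_channels, embed_dim, kernel_size=patch_size, stride=patch_size, padding="valid")`); the function names and toy sizes are hypothetical.

```python
import numpy as np

def conv2d_patchify_loops(image, kernels, bias, patch):
    # image: [C, H, W]; kernels: [D, C, P, P]; bias: [D]
    # Direct convolution with stride == kernel size and no padding ("valid")
    C, H, W = image.shape
    D = kernels.shape[0]
    out = np.zeros((D, H // patch, W // patch))
    for d in range(D):                      # one kernel (with C slices) per output channel
        for i in range(H // patch):
            for j in range(W // patch):
                window = image[:, i * patch:(i + 1) * patch, j * patch:(j + 1) * patch]
                out[d, i, j] = np.sum(window * kernels[d]) + bias[d]
    return out                              # [D, H/P, W/P]

def patchify_matmul(image, kernels, bias, patch):
    # Same result: cut into non-overlapping patches, flatten each, then project linearly
    C, H, W = image.shape
    D = kernels.shape[0]
    gh, gw = H // patch, W // patch
    patches = image.reshape(C, gh, patch, gw, patch)       # split H and W into (grid, patch)
    patches = patches.transpose(1, 3, 0, 2, 4)             # [gh, gw, C, P, P]
    patches = patches.reshape(gh * gw, C * patch * patch)  # one row per patch
    weights = kernels.reshape(D, C * patch * patch)        # one row per output channel
    out = patches @ weights.T + bias                       # [gh * gw, D]
    return out.T.reshape(D, gh, gw)                        # back to [D, H/P, W/P]

rng = np.random.default_rng(0)
C, H, W, P, D = 3, 8, 8, 4, 5   # toy sizes: a 2x2 grid of 4x4 patches, embed_dim = 5
image = rng.standard_normal((C, H, W))
kernels = rng.standard_normal((D, C, P, P))
bias = rng.standard_normal(D)
a = conv2d_patchify_loops(image, kernels, bias, P)
b = patchify_matmul(image, kernels, bias, P)
print(a.shape, np.allclose(a, b))
```

Because the stride equals the kernel size, every pixel is used by exactly one kernel position, so each output location is a linear function of exactly one patch.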

How many patches do we have? It's the size of the image, which is 224 in the base version of PaliGemma, divided by the patch size: the number of pixels along one side divided by how big each patch is, raised to the power of two because the image has two dimensions.
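The same count follows from the standard output-size formula of a convolution (dilation 1): with padding "valid", kernel size $P$ and stride $S$, an image side of $H$ pixels produces $\lfloor (H-P)/S \rfloor + 1$ outputs. Taking $S = P$ and $H$ divisible by $P$, as in the embedding layer described above:

$$
\left\lfloor \frac{H - P}{P} \right\rfloor + 1 = \frac{H}{P},
\qquad
N_{\text{patches}} = \left(\frac{H}{P}\right)^2 = \left(\frac{224}{16}\right)^2 = 14^2 = 196 .
$$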

Each patch is a square: 16 by 16, or 3 by 3, or whatever the patch size is. How many positions do we have, i.e. how many positional encodings do we need? It's equal to the number of patches, because we need to encode where each patch came from. And what is each of these positional encodings?

It's a vector of the same size as the patch embedding, so its size is embed_dim, as you can see here. It's a learned embedding: we have num_positions of them, each of this size, and we will see later that each of them is added to the information extracted by the convolution, so that each convolution output encodes where it came from in the image. We register these position IDs in the module; they are just a list of numbers, a range from zero to num_positions minus one, and we will use them later. Now let's implement the forward method. This is the reason I like to copy and paste the code: I can copy all the comments without typing them one by one.

Otherwise it would take me forever. We have our image, which is the pixel values here. The pixel values come from NumPy; we will see later how we load the image, but for now think of it as loading the image with NumPy, which gives a batch of images, each with channels, height and width.

Each is a tensor with three channels, the height of the image and the width of the image. We will see that the height and width are equal, because we resize each image to the input size expected by the model.

We are using the smallest PaliGemma, so we resize each image to 224 by 224. We extract the patch embeddings with this convolution, as you can see here. It takes our batch of images and converts each image into a grid of patch embeddings, each of size embed_dim. How many patches do we have? The number of patches along the height times the number of patches along the width; here the two are always the same, so you can think of it as the total number of patches, each with dimension embed_dim. As we saw before, we then flatten them.

Let me delete this. So we run the convolution and then flatten its output here. The convolution gives us patches 1, 2, 3, 4, 5, 6, up to 16 or whatever the number of patches is, and we convert that into a tensor in which the patches are flattened: the first patch is here and the last patch is the last element of the tensor. That's what we do here, because the output of the convolution is a two-dimensional grid, and we don't want a grid: we want a one-dimensional list of patches, which is what this flatten method does. Then we transpose, because we want the number of patches to come before the embedding dimension: the transformer takes a sequence of embeddings as input, so we want the num_patches dimension first, giving a batch of sequences of embeddings where each embedding is a vector of size embed_dim. To each of these embeddings we add the positional encodings. Which positional encodings?

The ones extracted from this embedding layer. Which embeddings do we extract? All of them: if we have 16 patches, from 0 to 15. Where is this information, 0 to 15? In self.position_ids, which is a range; as you remember, arange generates a list of numbers from 0 to the argument minus 1. So we extract all the positional encodings from this position embedding layer, which is this embedding layer here.
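The flatten, transpose and positional-lookup steps described above can be sketched in NumPy as follows. This is a sketch under assumptions: `patch_embeds` is a random stand-in for the convolution output, and `position_table` is a random stand-in for the weights of the learned embedding layer (in the model they are trained). The comments name the PyTorch tensor methods that perform the same reshapes.

```python
import numpy as np

rng = np.random.default_rng(0)
B, D, grid = 2, 8, 4                  # toy sizes: batch 2, embed_dim 8, 4x4 grid of patches
num_positions = grid * grid           # one position per patch (16 here)

# Stand-in for the convolution output: [B, embed_dim, grid_h, grid_w]
patch_embeds = rng.standard_normal((B, D, grid, grid))

# flatten(2) in PyTorch: merge the two spatial dims -> [B, embed_dim, num_patches]
flat = patch_embeds.reshape(B, D, grid * grid)
# transpose(1, 2) in PyTorch: sequence before embedding -> [B, num_patches, embed_dim]
seq = flat.transpose(0, 2, 1)

# Learned position table (random stand-in) and the position ids 0..num_positions-1
position_table = rng.standard_normal((num_positions, D))
position_ids = np.arange(num_positions)   # like the buffer registered in the module
pos = position_table[position_ids]        # embedding lookup -> [num_patches, embed_dim]

# Broadcasting adds the same position vector to the same patch slot of every image
embeddings = seq + pos[None, :, :]
print(embeddings.shape)
```

The flattening is row-major, so index 0 is the top-left patch and the last index is the bottom-right one; that fixed ordering is what allows position vector k to always land on the same patch location.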

And we add them to the embeddings. So what we are doing is: we flatten the patch embeddings, as we did before, and then add a positional encoding vector extracted from the positional encoding layer. These positional encodings are learned. Why learned? Because this embedding layer is a list of embeddings that change during training according to the needs of the model. So it's not as if we are explicitly telling the model "this is position number one, this is position number two".

We add another embedding to each of these patches, and the model learns to modify these position embedding vectors so that they encode position information, because each position embedding is always added to the same patch: the first patch always receives position number zero, the second patch always position number one, and so on. We hope that the model will change these position embeddings so that they encode positional information, and in practice it does, because the model learns to relate patches to each other using their positions, and the only way for it to do that is to shape these position embeddings so that they encode position. In the vanilla Transformer, instead, we use sinusoidal functions. If you look at the original Transformer here, we have this position information. Where is it? Here.

There, the positional encodings are created with sinusoidal functions: instead of learning them, we precompute them and force the model to use the pattern encoded by these sinusoidal functions. In this case, we are not forcing any pattern on the model. We want the model to create the pattern that is most useful to itself, so we hope it will learn this embedding layer in such a way that the embeddings help it understand position information. This is the meaning of a learned position embedding. Now, earlier we skipped the normalization layer.
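Before moving on to normalization, here is a short NumPy sketch of the fixed sinusoidal encoding from the original Transformer paper, for contrast with the learned table: nothing in it is trained, and every value is computed from the position and the dimension index. The function name `sinusoidal_positions` is hypothetical, and the sketch assumes an even embedding size.

```python
import numpy as np

def sinusoidal_positions(num_positions: int, d_model: int) -> np.ndarray:
    # PE[pos, 2i]   = sin(pos / 10000^(2i / d_model))
    # PE[pos, 2i+1] = cos(pos / 10000^(2i / d_model))
    assert d_model % 2 == 0, "this sketch assumes an even embedding size"
    pos = np.arange(num_positions)[:, None]            # [num_positions, 1]
    two_i = np.arange(0, d_model, 2)[None, :]          # [1, d_model / 2]
    angles = pos / np.power(10000.0, two_i / d_model)  # [num_positions, d_model / 2]
    pe = np.zeros((num_positions, d_model))
    pe[:, 0::2] = np.sin(angles)                       # even dimensions
    pe[:, 1::2] = np.cos(angles)                       # odd dimensions
    return pe

pe = sinusoidal_positions(16, 8)
print(pe.shape)  # one fixed vector per position, same size as the patch embedding
```

In the SigLip embeddings, this fixed function is replaced by a trainable table of the same shape, `[num_positions, embed_dim]`, whose rows the model is free to shape during training.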

So let's understand what normalization is and how it works, so that we don't leave anything unexplained. Imagine we have a list of linear layers. A linear layer is defined by two parameters: the number of input features and the number of output features. Imagine both are equal to four. There is actually another parameter, bias, which indicates whether the linear layer also has a bias term; suppose it's true. The input of a linear layer is usually a batch of items, each made up of features. Suppose for now that the input is a single item made up of four features, matching the four input features of the layer. What happens with four output features? You can think of the linear layer as a number of neurons, where the number of neurons equals the number of output features. Each neuron has a weight vector, as you can see here, made up of four weights. Why four? Because the number of weights equals the number of input features the layer accepts. Each neuron computes the dot product of the incoming vector x with its weight vector, plus its bias term, which is one number per neuron, and this produces one output feature. Because we have four neurons, we have four output features. Each neuron does the same job, but each has its own weight vector and its own bias: this one here has its own weight vector, different from the others, and its own bias term.

Now suppose we have another linear layer that takes four input features and produces two output features. You can think of it as a linear layer with two neurons: each neuron has a weight vector of four numbers, because the incoming vector has four features, plus one bias term. It produces an output vector of two items: the first item is the dot product of the first neuron's weight vector with the input vector plus its bias, and the second item is the dot product of the second neuron's weight vector with the input vector plus the bias of the second neuron. Now, what is the problem with linear layers, and actually with layers in general? It's called covariate shift.
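The neuron-by-neuron description of a linear layer can be checked with a short NumPy sketch: computing each output feature as a dot product plus bias gives the same result as one matrix-vector product `W @ x + b`. This is what `nn.Linear` computes (`y = x W^T + b`, with `W` stored as `[out_features, in_features]`), written here for a single input vector. The toy sizes mirror the 4-in / 2-out example; the values are random.

```python
import numpy as np

rng = np.random.default_rng(0)
in_features, out_features = 4, 2
x = rng.standard_normal(in_features)                  # one input item with 4 features
W = rng.standard_normal((out_features, in_features))  # one weight vector (row) per neuron
b = rng.standard_normal(out_features)                 # one bias number per neuron

# Neuron by neuron: dot product of the input with that neuron's weights, plus its bias
per_neuron = np.array([W[k] @ x + b[k] for k in range(out_features)])

# All neurons at once: a single matrix-vector product
vectorized = W @ x + b
print(per_neuron, vectorized)
```

Since every output is a weighted sum of the inputs, scaling the input by a large factor scales the outputs too, which is exactly the sensitivity discussed next.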

The problem is that when the input vector changes a lot in magnitude from one batch to another, the output of the layer also changes a lot in magnitude. For example, imagine the first input vector has all its numbers around one or two, and the output is also around two. If the next vector coming into this layer is very different in magnitude from the first one, the output will also be very different in magnitude. This is a problem for the model. If the input of a layer changes drastically, its output changes drastically too; since the loss during training depends on the output, the loss also changes a lot; since the loss determines the gradient during backpropagation, the gradient changes a lot; and since the gradient determines how we update the weights, the weight updates change a lot as well. So if the distribution of the dimensions of the vector coming into a layer changes drastically from one batch to the next, the output of the model changes, then the loss, then the gradient, then the weight updates. What we will see is that the loss oscillates a lot and the weights keep trying to catch up with this changing input distribution, which results in a model that trains slowly.

Here I have made a simple summary of what is happening. A big change in the input of a layer results in a big change in its output, which results in a big change in the loss, which results in a big change in the gradient during backpropagation, which results in a big change in the weights of the network. The result is that the network learns very slowly, because it spends most of its effort trying to keep up with the distribution change in its input instead of actually learning how to map the input to the output. The first solution to this problem was batch normalization, introduced in this paper. With batch normalization, we usually don't have a single item as input but a batch of items. Suppose we are training an image classification model, so we have as input a list of images: a cat, a dog, a zebra, a tree, a stone, and so on. Think of these as the dimensions of the vector that represents the cat, and these as the dimensions of the vector that represents the dog.

These are the dimensions of the vector that represents the zebra, and so on. With batch normalization, we calculate statistics for each dimension, the mean and the variance, computed over the items of the batch. Then we normalize each item by subtracting the mean and dividing by the standard deviation. This makes each dimension have mean zero and variance one across the batch. This matters because the image of a cat is very different from the image of a zebra: the color distribution is different.

The RGB distribution is different, so the pixel intensities are very different. After normalization, the model will not see this change in magnitude, nor a change in distribution, because all the items will have mean zero and variance one. So the model's output will oscillate less.

So the loss will oscillate less, the gradient will oscillate less, and the weights of the model will oscillate less. The training will be more stable and will basically converge faster. To summarize why we need normalization: imagine you are training an image classification model; the input depends on the image, and images can be very different from each other. If the image changes a lot, we don't want the model to feel this change in magnitude of the input: we want the distribution of the inputs to remain roughly constant.

That way, the model is not forced to keep up with a change in distribution. How do we do that? We try to keep the distribution constant by always making the input features follow a fixed distribution, with mean 0 and variance 1. We do that with this formula here, which comes from probability and statistics: if you subtract the mean from a random variable and divide by its standard deviation, the result has mean 0 and variance 1 (and it is a standard Gaussian only if the original variable was Gaussian). This results in more stable training. Batch normalization actually worked fine.
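A minimal NumPy sketch of the batch-normalization standardization step: training-mode statistics only, with the learnable scale and shift and the running averages used at inference omitted. The mean and variance are computed per feature across the batch, i.e. along axis 0. The "cat", "dog" and "zebra" vectors are made-up toy numbers, and the small `eps` added to the variance (to avoid dividing by zero) is a common convention assumed here.

```python
import numpy as np

def batch_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    # x: [batch, features]; statistics per feature, computed ACROSS the batch (axis 0)
    mean = x.mean(axis=0, keepdims=True)
    var = x.var(axis=0, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

cat = np.array([1.0, 2.0, 3.0, 4.0])
dog = np.array([2.0, 0.0, 1.0, 3.0])
zebra = np.array([9.0, 8.0, 7.0, 6.0])

out = batch_norm(np.stack([cat, dog, zebra]))
print(out.mean(axis=0), out.var(axis=0))  # each feature: mean ~0, variance ~1

# The same cat vector is normalized differently depending on its batch mates
print(batch_norm(np.stack([cat, dog]))[0])
print(batch_norm(np.stack([cat, zebra]))[0])
```

The last two lines preview the weakness of this approach: the normalized cat depends on which other items happen to be in the batch.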

However, it has a problem. With batch normalization, the statistics μ and σ are calculated along the batch dimension: we calculate μ and σ for dimension number one of each of these vectors along the batch dimension.

To calculate this mean, we sum the first dimension of each of these vectors and divide by the number of items. So we are mixing the features of different items: dimension number one of the cat with dimension number one of the dog. To get good results we need a big batch, because if the batch contains, for example, a cat and a dog, we get one mean; if the next batch contains a cat and a zebra, we get a completely different mean; and if the next one contains a cat and a tree, maybe yet another mean. So we still have the covariate shift problem, because the mean changes a lot between iterations. The only way around this is a very big batch size, so we are forced to use big batches to alleviate the problem of mixing dimensions along the batch dimension.

Then layer normalization was introduced. With layer normalization, instead of calculating the statistics along the batch dimension, we calculate them along the item dimension. The μ and σ used to standardize the cat depend only on the dimensions of the cat, not on whatever the cat comes with. We still take each item, subtract its mean and divide by its standard deviation, but instead of the mean and standard deviation coming from the first dimension of every item, they come from all the dimensions of each item, independently of the others. So no matter which other items the cat comes with, it always gets exactly the same μ and σ. This makes training even more stable, because we are no longer forced to use a big batch size, and this is why we use layer normalization.

Okay, we have seen what normalization is. Now we should implement the encoder, the SigLip encoder. The encoder is made up of multiple layers of the transformer model, and the architecture, if you look at the vision transformer paper, is more or less like this. I changed it a little to use the exact names we will be using. First, what we have so far is the SigLip vision embeddings: it takes the image, extracts patches with a convolution, and each output of the convolution is used as an embedding.

Each embedding is a vector, and it is added to another vector, the positional encoding, which is learned. Then we feed the result to the encoder. So we convert the patches into embeddings, add the positional encodings, and feed them to the encoder. Inside the encoder, you need to think that we have these layers repeated N times.

Here it's written L times: one after another, such that the output of one layer becomes the input of the next. The thing you need to understand about the transformer, and I repeat it, is that it is a sequence-to-sequence model that converts a sequence of embeddings into contextualized embeddings. What does that mean?

It means that at the input you have a list of embeddings, each representing a patch of the image as an independent patch. So this embedding here only captures information about the first group of pixels, this embedding here captures information about the second group of pixels, etc. But then, through some magic called the attention mechanism, these embeddings become contextualized at the output of the transformer, and we will see this attention mechanism in detail. At the output of the transformer, the first embedding represents information not only about the first patch but also about the other patches, and so do the second, the third, the fourth, and the last one. So they become contextualized in the sense that they capture information about the context in which they appear. This is different from language models, in which each token captures information only about itself and the previous tokens: in the vision transformer, each patch includes information about all the other patches.

Now, what is each of these layers made of? This is the input of the encoder, let's say, and then we have the first layer of this encoder. First, the input of the layer is saved for a skip connection that we use later. Then we apply a layer normalization (we saw how it works and why we use it), and the output of this layer normalization is sent to the self-attention mechanism, this one here. The self-attention mechanism takes the output of the layer normalization as query, key, and values, and calculates the attention with the usual formula: the softmax of the query multiplied by the transpose of the key, divided by the square root of the head dimension, with the result multiplied by V, i.e. softmax(Q Kᵀ / √d_k) V. The output of this self-attention is then summed with the skip connection here. The output of this summation is saved for a second skip connection and sent to the second layer normalization. The output of this normalization is sent to the multi-layer perceptron, which is a list of linear layers that we will see later, and then we do another summation here: the skip connection plus the output of the multi-layer perceptron. Then we have another layer like this, and another, and another, and the output of the last layer is the output of our vision transformer.
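The layer structure just described (LayerNorm, attention, skip connection, LayerNorm, MLP, skip connection) can be sketched in PyTorch as below. This is a minimal sketch, not the video's code: `EncoderLayerSketch` is a hypothetical name, the sizes (hidden 64, 4 heads, intermediate 256) are made up for illustration, and `nn.MultiheadAttention` stands in for the hand-written SigLip attention coded later.

```python
import torch
import torch.nn as nn


class EncoderLayerSketch(nn.Module):
    """Pre-LayerNorm encoder layer: LN -> attention -> residual, LN -> MLP -> residual."""

    def __init__(self, hidden_size: int = 64, num_heads: int = 4, intermediate_size: int = 256):
        super().__init__()
        self.layer_norm1 = nn.LayerNorm(hidden_size)
        # Stand-in for the SigLip attention block (assumption, not the video's module)
        self.self_attn = nn.MultiheadAttention(hidden_size, num_heads, batch_first=True)
        self.layer_norm2 = nn.LayerNorm(hidden_size)
        self.mlp = nn.Sequential(
            nn.Linear(hidden_size, intermediate_size),
            nn.GELU(approximate="tanh"),
            nn.Linear(intermediate_size, hidden_size),
        )

    def forward(self, hidden_states: torch.Tensor) -> torch.Tensor:
        # hidden_states: [batch, num_patches, hidden_size]
        residual = hidden_states                      # saved for the first skip connection
        hidden_states = self.layer_norm1(hidden_states)
        # Query, key and value all come from the same normalized input; no mask for vision
        hidden_states, _ = self.self_attn(hidden_states, hidden_states, hidden_states,
                                          need_weights=False)
        hidden_states = residual + hidden_states      # first skip connection
        residual = hidden_states                      # saved for the second skip connection
        hidden_states = self.layer_norm2(hidden_states)
        hidden_states = self.mlp(hidden_states)
        return residual + hidden_states               # second skip connection


x = torch.randn(2, 16, 64)   # 2 images, 16 patches each, 64-dim embeddings
layer = EncoderLayerSketch()
out = layer(x)
print(out.shape)  # torch.Size([2, 16, 64])
```

Every step preserves the `[batch, num_patches, hidden_size]` shape, which is what lets the two residual sums work.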

So, as you can see, the vision transformer takes as input an image converted into patches. The patches are then fed to this encoder, which is a list of layers, and the output is a sequence of contextualized patches, or rather, contextualized embeddings of these patches. So let's code this encoder, which is basically this structure here. We will code each part of this structure, and while coding each part we will look inside at how it works. We already know how the normalization works, but we still have to explore this stuff here called self-attention and this stuff here called the multi-layer perceptron.

I believe it's convenient for us to go through the multi-layer perceptron first and then go to the self-attention, because the self-attention takes a little longer. So let me do the simple part first. Okay, let's code this encoder. I will copy the first part, this one here. The constructor of the encoder takes the configuration. We save some stuff, like the hidden size, and then we have the self-attention block, which here is called SiglipAttention.

Now, a note about the naming I'm using. I am using the same names as the HuggingFace implementation for one simple reason: I want to be able to load the pre-trained weights from HuggingFace. The pre-trained weights for PaliGemma are available on the HuggingFace Hub, and we want to be able to load them. Each of these pre-trained models comes with a dictionary of weights that tells you where to load each weight, and if the names do not match, you need to write a conversion script. I didn't want to do that, because it would just complicate the code uselessly. So I use the same names, so that we can load the pre-trained weights from HuggingFace directly. Also, my code is based on the HuggingFace implementation: to create it, I started from the HuggingFace implementation but simplified it a lot. For example, I wrote my own KV-Cache.
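Why the names matter: in PyTorch, the keys of a module's `state_dict` are built from its attribute names, so a checkpoint only loads cleanly into a module whose attributes are named the same way. The two toy modules below are hypothetical (made-up sizes); the `fc1`/`fc2` names match the MLP coded later in this video.

```python
import torch.nn as nn


class MatchingMLP(nn.Module):      # attribute names match the "checkpoint"
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(8, 32)
        self.fc2 = nn.Linear(32, 8)


class RenamedMLP(nn.Module):       # same shapes, different attribute names
    def __init__(self):
        super().__init__()
        self.up = nn.Linear(8, 32)
        self.down = nn.Linear(32, 8)


checkpoint = MatchingMLP().state_dict()   # pretend this came from a pre-trained model
print(list(checkpoint.keys()))  # ['fc1.weight', 'fc1.bias', 'fc2.weight', 'fc2.bias']

MatchingMLP().load_state_dict(checkpoint)  # names match: loads fine

mismatch_detected = False
try:
    RenamedMLP().load_state_dict(checkpoint)  # strict=True by default
except RuntimeError as err:
    mismatch_detected = True
    print("mismatch:", str(err).splitlines()[0])
```

With mismatched names, you would need a conversion step that renames every key, which is exactly what reusing the HuggingFace names avoids.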

I made a lot of modifications to simplify it, but it's based on the HuggingFace implementation anyway. So we have the self-attention, and then we have a layer normalization, this one here. Then we have the multi-layer perceptron, which is this stuff here.

And then we have another layer normalization, which is this stuff here, so we have two layer normalizations. Now let's implement the forward method. I will copy it line by line so we can understand it. The first thing we do is save a residual connection: we save the input that we feed to this encoder layer, because we need to reuse it later.

We save this skip connection because we will need it here, later. Then we run the input through the layer normalization, here. The layer normalization does not change the shape of the input: it normalizes each embedding across its dimensions so that its values look as if they came from a distribution with mean zero and variance one (followed by a learnable scale and shift). Then we apply this magic thing called self-attention, which we will explore later. The self-attention also does not change the shape of the input tensor, but, as we saw before, the attention mechanism takes embeddings as input and gives you contextualized embeddings.
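A quick check of both claims about layer normalization (shape unchanged, each embedding normalized to mean zero and variance one over its own dimensions), using PyTorch's `nn.LayerNorm` on a made-up tensor:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# [batch, num_patches, hidden_size], deliberately shifted and scaled
hidden_states = torch.randn(2, 16, 64) * 5 + 3
layer_norm = nn.LayerNorm(64)   # learnable scale starts at 1, shift at 0
out = layer_norm(hidden_states)

print(out.shape)  # torch.Size([2, 16, 64]): shape unchanged

# Statistics are computed per embedding vector, i.e. over the last dimension only.
mean = out.mean(dim=-1)
variance = ((out - mean.unsqueeze(-1)) ** 2).mean(dim=-1)
print(mean.abs().max().item())   # close to 0
print(variance.mean().item())    # close to 1
```

After training, the learnable scale and shift can move these statistics away from 0 and 1; the normalization itself is what is shown here.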

So it does not change the shape of these embeddings. We will implement it later, so for now just think of it as a black box: you feed in embeddings and it gives you contextualized embeddings. Then we have a residual connection, which we can see here. This residual connection, or skip connection as it is also called, is this first plus here. We are summing what we saved before, this residual here, with the output of the self-attention, which is this hidden_states here. The result of the summation is saved again, because there is another skip connection after.

We save it because later we need it here for the skip connection. Then we do another layer normalization, which also does not change the shape of the input tensor, and then we have this thing called the multi-layer perceptron.

Now, it's not easy to explain what the multi-layer perceptron is used for, but basically, as we will see later, it's a series of linear layers that takes each input embedding and transforms it independently from the others. While in the self-attention there is a mixing of the incoming patches, so that they get contextualized, in the multi-layer perceptron there is no mixing between these, let's call them, tokens or patches: each of them is transformed independently. First of all, the multi-layer perceptron adds parameters to the model.

So the model has more degrees of freedom to learn whatever it's trying to learn. The second purpose of the multi-layer perceptron is to prepare the sequence of patches for the next layer: if the next layer expects these patches to be somehow different, the multi-layer perceptron allows it to transform them. Also, it adds a non-linearity.

The multi-layer perceptron includes a non-linearity, and, as you know, non-linearities allow you to model more complex transformations. If you just stack linear layers without any non-linearity, you cannot model complex functions: for example, in classification you cannot separate data that is not linearly separable. By adding non-linear transformations, you add complexity to the model.
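The reason a non-linearity is needed can be checked numerically: two stacked linear layers are equivalent to a single linear layer with weight W₂W₁ and bias W₂b₁ + b₂, so stacking them alone adds no expressive power. A small sketch with made-up sizes:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
fc1 = nn.Linear(8, 32)
fc2 = nn.Linear(32, 8)
x = torch.randn(10, 8)

# Two stacked linear layers without a non-linearity...
stacked = fc2(fc1(x))
# ...equal one linear layer with W = W2 @ W1 and b = W2 @ b1 + b2.
W = fc2.weight @ fc1.weight
b = fc2.weight @ fc1.bias + fc2.bias
single = x @ W.T + b
print(torch.allclose(stacked, single, atol=1e-5))  # True

# With a GELU in between, the two layers no longer collapse into one.
with_gelu = fc2(nn.functional.gelu(fc1(x)))
print(torch.allclose(with_gelu, single, atol=1e-5))
```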

So the model is able to map complex transformations. In short, the multi-layer perceptron adds parameters and a non-linearity, which help the model learn whatever complexity it needs to map the input to the output. After the multi-layer perceptron we have another skip connection, and then we return the output of this skip connection. The skip connection also does not change the shape of the tensors, of the embeddings. Now, let's code this multi-layer perceptron first.
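As a preview of the structure walked through next (expand to intermediate_size, GELU, compress back to hidden_size), here is a minimal sketch. Assumptions: it takes the two sizes directly instead of a configuration object, and it uses the tanh approximation of GELU; the sizes 768/3072 and 196 patches are just example values. The last check also illustrates that the MLP does not mix patches.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SiglipMLP(nn.Module):
    """Two linear layers with a GELU in between: hidden_size -> intermediate_size -> hidden_size."""

    def __init__(self, hidden_size: int, intermediate_size: int):
        super().__init__()
        self.fc1 = nn.Linear(hidden_size, intermediate_size)  # expand each embedding
        self.fc2 = nn.Linear(intermediate_size, hidden_size)  # compress it back

    def forward(self, hidden_states: torch.Tensor) -> torch.Tensor:
        # [batch, num_patches, hidden_size] -> [batch, num_patches, intermediate_size]
        hidden_states = self.fc1(hidden_states)
        # tanh approximation of GELU (assumption about the exact variant)
        hidden_states = F.gelu(hidden_states, approximate="tanh")
        # [batch, num_patches, intermediate_size] -> [batch, num_patches, hidden_size]
        return self.fc2(hidden_states)


mlp = SiglipMLP(hidden_size=768, intermediate_size=3072)
x = torch.randn(2, 196, 768)   # [batch, num_patches, hidden_size]
with torch.no_grad():
    out = mlp(x)
    x_changed = x.clone()
    x_changed[:, 0] += 1.0       # modify only the first patch of each image
    out_changed = mlp(x_changed)

print(out.shape)  # torch.Size([2, 196, 768])
# No mixing across patches: every other patch gives the same output as before.
print(torch.allclose(out[:, 1:], out_changed[:, 1:]))  # True
```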

It's the easiest part, so let's do it. As always, I will first copy the constructor and then the forward method, so we can explore the structure first and then the logic. Just like in the vanilla transformer, this multi-layer perceptron is made up of two linear layers plus a non-linear transformation. The first layer takes each of the embeddings (we can also call them tokens or patches; we say tokens because most of the time we are dealing with language models) and expands it: each of these vectors, of size hidden_size, is expanded into something called intermediate_size. This is usually chosen as three or four times the hidden size; in the vanilla transformer it was four times the hidden size. Then we apply a non-linearity to this expanded tensor, and then we compress it back to the hidden size. Now let's do the forward method, which is this one here. The first thing we do is convert each of these embeddings into the intermediate size. Again, we have a batch of images; each image is made up of num_patches patches, and each patch is represented by a vector of size embed_dim. With the first fully connected layer we expand each of these patches into the intermediate size, and then we apply a non-linear transformation, in this case the GELU function.

Now, you may be wondering why we are using the GELU function, or SwiGLU, or whatever other non-linearity. The reason is always practical. There is no rule of thumb for choosing the non-linearity for a specific case; there are just heuristics. When the transformer was introduced, it used the ReLU function as the non-linearity between these two fully connected layers, but then people explored other non-linearities and saw that some of them work better. There is also some logic behind the choice, because the non-linearity also determines how the gradient flows. For example, take the ReLU function; let me draw its graph. The graph of the ReLU function is something like this.

Basically, anything that is negative becomes zero, and everything else is forwarded without any scaling. This means that if the input of the ReLU function is negative, the output will be zero, and for any negative input there will be no gradient, because the gradient will be multiplied by zero.

So it will not flow. That's why, for example, the leaky ReLU was introduced: in the ReLU family there are other functions that also allow a little bit of gradient to flow from the negative side. So the non-linearity basically determines how the gradient will flow during backpropagation.
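The gradient-flow argument can be checked with autograd: for negative inputs, ReLU passes back a zero gradient, leaky ReLU a small constant one (slope 0.01 here, an arbitrary choice), and GELU a non-zero one. The input values are made up.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([-2.0, -0.5, 0.5, 2.0], requires_grad=True)

grads = {}
for name, fn in [("relu", F.relu),
                 ("leaky_relu", lambda t: F.leaky_relu(t, negative_slope=0.01)),
                 ("gelu", F.gelu)]:
    x.grad = None                  # reset the accumulated gradient
    fn(x).sum().backward()         # d(sum(fn(x)))/dx = fn'(x) element-wise
    grads[name] = x.grad.clone()
    print(name, grads[name])
# For the two negative inputs: relu gives 0, leaky_relu gives 0.01, gelu gives non-zero values.
```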

Having a non-linearity that lets the gradient flow back even when the input is negative means that the model is not forced to always keep the activation positive in order to get some feedback from the loss function to optimize its weights. And why are we using GELU? Because people have tried it, and it probably works better than the ReLU function for this class of applications. In the vision transformer you see the GELU function, but LLaMA, for example, uses SwiGLU, and other models use other functions. It's mostly based on heuristics about how they work in practice, also because a model is usually made up of billions of parameters, and it's not easy to find the regularities that explain why a specific non-linearity works better than another. Okay, then we apply the second linear layer, which compresses the intermediate state back to the embedding size, and we return it. This is our multi-layer perceptron. Next, we are going to code the attention mechanism for the vision transformer, and we will see that it's different from that of language models, because we don't have any causal mask or attention mask. All right guys, we have seen the multi-layer perceptron; now let's go to the multi-head attention. For that I want to use the slides, because I believe it's a little faster to explain on the slides, and then we proceed with the code. So what is multi-head attention?

Multi-head attention is a way of contextualizing stuff. You start with a sequence of, for example, patches. Say we have four patches, each represented by a single vector of 1024 dimensions.

So you need to think of 1024 numbers in this row vector. Then we have patch number two, patch number three, and patch number four. Each of these patches was extracted from a group of pixels of the initial image, and it only represents information about the part of the image it came from.

With the multi-head attention mechanism, what we are doing is contextualizing these patches. The output of the multi-head attention is a tensor of the same size as the input: this is a tensor of size 4 by 1024, and the output will be a tensor of size 4 by 1024, but each of these embeddings now captures information not only about itself, but also about the other patches in the sequence. This is for the vision transformer; for language models we want something slightly different. For language models we have an input sequence of tokens, each token representing one single... I don't want to use the term "word", because it's wrong. In my videos I always make the simplification that each token is a word and each word is a token, but this is not actually how tokenizers work.

Usually a token can be any sequence of characters; it does not necessarily need to be a word. But for us, let's treat tokens as words; it just simplifies the explanation. So we have a list of tokens, and each token is represented as an embedding.

Let's say of 1024 dimensions, so 1024 numbers for this one, 1024 numbers for this one, etc. In the case of language models, what we want from multi-head attention is to contextualize each token with all the tokens that come before it. This is known as self-attention with a causal mask, and its output is a sequence with the same shape as the input sequence, so this matrix here is 4 by 1024.
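The difference between the two cases can be sketched with a single attention head on four 1024-dimensional vectors, using softmax(Q Kᵀ / √d) V. This is an illustrative sketch only: the projection matrices `W_q`, `W_k`, `W_v` are random stand-ins for learned weights, there is only one head, and `self_attention` is a hypothetical helper. Both variants return a 4 by 1024 tensor; only the causal one prevents a token from seeing later tokens.

```python
import math
import torch

torch.manual_seed(0)
seq_len, d = 4, 1024
x = torch.randn(seq_len, d)  # 4 patches/tokens, each a 1024-dim vector

# Hypothetical single-head projection matrices (a real layer learns these).
W_q, W_k, W_v = (torch.randn(d, d) / math.sqrt(d) for _ in range(3))


def self_attention(x: torch.Tensor, causal: bool) -> torch.Tensor:
    q, k, v = x @ W_q, x @ W_k, x @ W_v
    scores = q @ k.T / math.sqrt(d)                # [seq_len, seq_len]
    if causal:
        # Language models: token i may only attend to tokens 0..i.
        mask = torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)
        scores = scores.masked_fill(mask, float("-inf"))
    weights = torch.softmax(scores, dim=-1)        # each row sums to 1
    return weights @ v                             # [seq_len, d], same shape as x


vision_out = self_attention(x, causal=False)  # every patch sees every other patch
text_out = self_attention(x, causal=True)     # each token sees only itself and earlier tokens
print(vision_out.shape, text_out.shape)        # torch.Size([4, 1024]) torch.Size([4, 1024])

# With the causal mask, changing the last token cannot affect the first output.
x2 = x.clone()
x2[-1] += 1.0
print(torch.allclose(self_attention(x2, causal=True)[0], text_out[0]))    # True
print(torch.allclose(self_attention(x2, causal=False)[0], vision_out[0]))
```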