fastai v2 walk-thru #4

Transcript

Hi, everybody. Can you see and hear okay? Great. And that response came back pretty quickly, so hopefully the latency is a bit lower. Before we start, Sam asked me to give you a reminder about his conference in San Francisco. Today is the last day to get early bird tickets.

So remember, if you're interested, the code "fastai" gets you an extra 20% off. Okay. I was thinking I would do something crazy today, which is to do some live coding. That's going to be terrifying, because I'm not sure I've really done that before. Oh, hey there, Max, welcome.

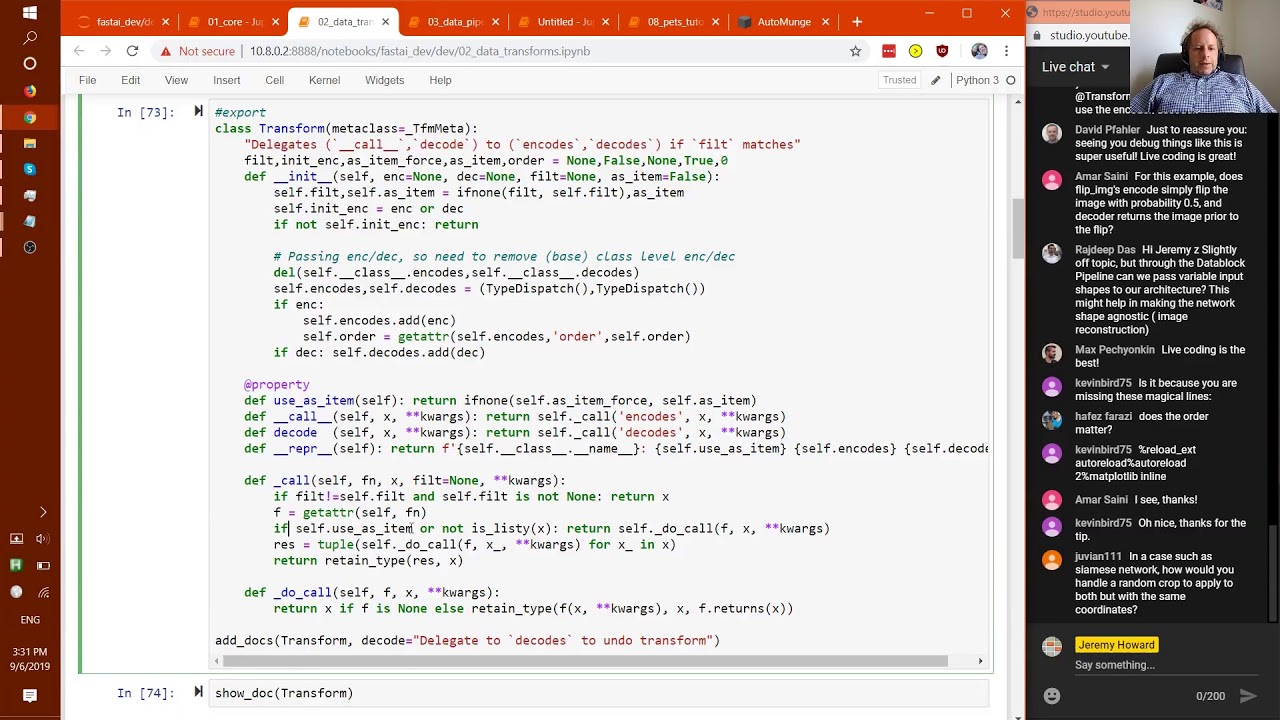

So I'm going to try some live coding to explain what `Transform` does, and to justify why it does it and why it exists, so you can see why we ended up where we ended up. I think we did something like 26 rewrites from scratch of the data transform functionality over a period of many months, because it was just super hard to make everything easy, clear, and maintainable.

I'm really happy with where we got to, and it might be helpful for you to see why we got here. So let's start by writing a function. Our function is going to be called `neg`, and it's going to return `-x`. You might recognize that this is the same as the `operator.neg` function, but we're writing it ourselves.

So we can say `neg(3)`, for instance, and that's how Python will do that. What if we did `neg` of some tuple? Let's create a tuple, `(1,3)`, and call `neg` on it. Oh, it doesn't work. That's actually kind of annoying. The first thing to recognize is that this is a behavior.

It's one behavior chosen from a number of possible behaviors; this particular one is how Python behaves, and it's totally fine. As you know, if we had an array, for instance, we would get different behavior. One of the very, very nicest things about Python (in fact, to me, the best part of Python) is that it lets us change how it behaves according to what we want for our domain.

In the domain of data processing, we're particularly interested in tuples, or tuple-like things, because generally speaking we're going to have an x and a y. For example, we would have an image and a label, such as for pets. Other possibilities would be a source image and a mask for segmentation.

Another possibility would be a tuple of images and a bool, which would be for Siamese categorization; or it could be a tuple of continuous columns, categorical columns, and a target column, such as for tabular. So we would quite like `neg` to behave for our tuple in a way that's different from Python's default, and perhaps works a little more like the way it works for an array.

So what we could do is repeat our function. To add new behavior to a function or a class in Python, you add a decorator. So we created a decorator called `transform`, which will cause our function to work the way we want. And now here we have it: `(-1, -3)`.
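Here is a minimal pure-Python sketch of what such a decorator might do, assuming the only new behavior we want is "when called on a tuple, apply the function to each element". The name `transform` is a hypothetical stand-in; fastai's real `Transform` does much more (type dispatch, decoding, and so on).

```python
from functools import wraps

def transform(f):
    """Minimal sketch: when called on a tuple, apply f to each element instead."""
    @wraps(f)
    def _inner(x, **kwargs):
        if isinstance(x, tuple):
            return tuple(f(o, **kwargs) for o in x)
        return f(x, **kwargs)
    return _inner

@transform
def neg(x):
    return -x

print(neg(3))       # -3
print(neg((1, 3)))  # (-1, -3)
```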

So let's say we grab an image. We've got a file name, `fn`, which is an image, so let's grab the image with `load_image(fn)`. There's our image. Let's create a tensor of the image as well, which we could do like this. Okay, maybe just look at a few rows of it.

Let's look at some rows more around the middle. Okay, so we could, of course, take the negative of that, no problem. Or if we had two of those in a tuple, we could create a tuple with a couple of images. Actually, this isn't doing the negative: we're not getting a negative here.

Let's check the image's dtype. Hmm, interesting, what am I doing wrong? Oh, yes, thanks: the reason my negative is wrong is that I have an unsigned int. Thank you, everybody. So let's make this `.float()`. There we go, and that will work on tuples as well.
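Why did negation look wrong? An unsigned 8-bit integer can't represent negative numbers, so negation wraps around modulo 256. The hypothetical helper below emulates that arithmetic in plain Python (it is not PyTorch) and contrasts it with negating floats, which is why converting with `.float()` first fixes the problem.

```python
def neg_as_uint8(v: int) -> int:
    """Emulate negation in an unsigned 8-bit type: the result wraps modulo 256."""
    return (-v) % 256

pixels = [0, 3, 200]
print([neg_as_uint8(p) for p in pixels])  # [0, 253, 56]: never negative
print([-float(p) for p in pixels])         # [-0.0, -3.0, -200.0]
```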

There we go. So that's useful, and it would be particularly useful if I had an image as an input and an image as an output, such as a mask as the output, or super-resolution, or something like that. So this is all looking pretty hopeful. Next, let's try to make a more interesting function.

Let's create a normalization function, `norm`, which takes an image, a mean, and a standard deviation, and returns `(x - m) / s`. So I could call `norm(t_img, ...)`: let's say the mean is somewhere around 127 and the standard deviation somewhere around 150. Actually, let's just grab a subset so it's a little easier to see what's going on.

So the subset of the image is a few of those rows. There we go. Now we can check `t_img.mean()`, then normalize the image and check `normed_img.mean()`. Okay, it's the right idea. So here's a function that would actually be pretty useful to have in a transformation pipeline. I will note, though, that we would generally want to run this particular transformation on the GPU: even though it doesn't look like a lot of work, it turns out that normalizing takes an astonishingly large amount of time as part of something like an ImageNet pipeline.
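The normalization itself is just elementwise arithmetic. Here is a plain-Python sketch on a flat list of values (a stand-in for a few rows of an image tensor). Unlike the walk-thru, which uses rough guesses of 127 and 150, it computes the mean and standard deviation from the data, so the result can be checked: the normalized values should have mean 0 and standard deviation 1.

```python
from statistics import mean, pstdev

def norm(x, m, s):
    """Normalize each value: (x - m) / s."""
    return [(v - m) / s for v in x]

pixels = [10.0, 20.0, 30.0, 40.0]   # stand-in for a few rows of image data
m, s = mean(pixels), pstdev(pixels)
normed = norm(pixels, m, s)
print(mean(normed), pstdev(normed))  # mean is 0, std is approximately 1
```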

By the time something has gotten to the GPU, it won't just be an image: the thing our dataset returns will be an image and a label. So let's create an image tuple, `(t_img, 1)`. This is the kind of thing we're actually going to be working with on the GPU. If we want to normalize that, let's again grab a mean and a standard deviation, and again we have a problem. So let's make it a `transform`, so that it will be applied over tuples. You'll see that none of the stuff we already have changes at all.

Oh, I made a mistake: we get an error about the number of positional arguments. Yes, that's true: we do have to use `m=` and `s=`. So one change is that we have to use named arguments. Now it's working again. But, oh, that's no good: it has also transformed our label, which is definitely not what we want. So we need some way to have it apply only to the things we want to normalize.

As we saw, you can add a type annotation to say what to apply a function to. The problem is that generally, by the time things are coming through the data loader, everything's a tensor. So how can we decide which bits of our tuple to apply this to? The answer is that we need to create types that inherit from tensor. We have something called `TensorBase`, which is just a base class for things that inherit from tensor. So we could write `class MyTensorImage(TensorBase)`, and I don't have to add any functionality to it. Now I can just say `mti = MyTensorImage(t_img)`. Because this inherits from tensor, it looks exactly like a tensor and has all the normal tensor-y things, but if you check its type, it's one of these.

So the nice thing is that we can now start constraining our functions to only work on the types we want. I want this one to only work on `MyTensorImage`. And this is the second thing a transform does: if you apply it to a tuple, it makes sure the function only gets applied to the parts of the tuple whose type matches.
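A sketch of how annotation-based filtering over a tuple could work. It assumes a plain `list` subclass, `MyImage` (hypothetical), stands in for a tensor subclass like `MyTensorImage`. The decorator reads the annotation on the function's first parameter and leaves every tuple element of a different type untouched. As in the walk-thru, extra arguments have to be passed by name. This is an illustration, not fastai's implementation.

```python
import inspect
from functools import wraps

def transform(f):
    """Hypothetical sketch: map f over tuples, touching only elements that match
    the annotation on f's first parameter (no annotation means 'everything')."""
    ann = next(iter(inspect.signature(f).parameters.values())).annotation
    typ = object if ann is inspect.Parameter.empty else ann

    def _apply(x, **kwargs):
        return f(x, **kwargs) if isinstance(x, typ) else x

    @wraps(f)
    def _inner(x, **kwargs):  # extra arguments must be passed by name
        if isinstance(x, tuple):
            return tuple(_apply(o, **kwargs) for o in x)
        return _apply(x, **kwargs)
    return _inner

class MyImage(list):
    """Semantic marker: a plain list of pixel values that we know is an image."""

@transform
def norm(x: MyImage, m, s):
    return MyImage((v - m) / s for v in x)

img_tuple = (MyImage([127.0, 277.0]), 1)
print(norm(img_tuple, m=127.0, s=150.0))   # ([0.0, 1.0], 1)
```

The label `1` passes through unchanged, and so would a plain `list`, because only `MyImage` matches the annotation.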

So again, it's not going to change this behavior, because it's not a tuple. But this one we are applying to a tuple, and... perfect: now the label isn't being changed. It's important to realize that this is no more or less weird, or magical, than Python's normal function dispatch. Different languages and different libraries have different function dispatch methods. Julia does function dispatch nearly entirely using the kind of system I'm describing here; Python by default has a different way of doing things. So we're just picking another way: equally valid, and no more or less strange.

Max is asking: does transform only apply to tensors? No, not at all. Let's go back and have a look. Remember that in our image tuple, the second part of the tuple is just a `1`. When I remove the type annotation, you'll see it's being applied to that int as well.

There's nothing PyTorch-specific about this. It's just another way of doing function dispatch in Python. In this case we're constraining it to this particular type, but I could instead have said `class MyInt(int)`.

Then let's create `my_int = MyInt(1)`, and say this function only applies to a `MyInt`. Now let's not use a plain `1` in the tuple, but `my_int`. You can see that now the image tensor hasn't changed, but the int has.

So it's just a different way of doing function dispatch, but it's a way that makes a lot more sense for data processing than the default way Python has. And the nice thing is that Python is specifically designed to be able to provide this kind of functionality.

Everything we've written, as you'll see... okay, I've got a few questions here, so let's answer these first. "You defined `mti`, but when did that get passed to `norm`?" It got passed to `norm`... oh, let me check.

I think so. Okay, let's go back and check this. So here's the tuple with a `MyTensorImage`. If we made the tuple contain a regular tensor instead of a `MyTensorImage`... oh, it still runs. Why is that?

Oh, I actually changed the type of `t_img` at some point; I didn't mean to do that. Let's run it again. Okay, it's because I'm actually changing the original object. That looks like we found a bug. Okay, let's make a note of that bug and do it separately.

Yes, I do need a copy. I shouldn't need to, but I have a bug. Okay, let's do it again. There we go. So `t_img` is now a plain tensor, and when I run this on a tuple that contains a normal tensor, it doesn't get applied.

And if I change it to the subclass, then it does get applied. Oh, and we wanted to make this a float, and that should be a tensor of the array. There we go. So that one is only being applied to the image and not to the label.

So, to answer Max's question: we absolutely could just change that annotation back to `Tensor` here, and now it is being applied. The reason I was showing you subclassing is that this is how we add semantic markers to existing types, so that we get all the functionality of the existing types but can also say that they represent different kinds of information.
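A minimal illustration of a semantic marker type, assuming a hypothetical `Category` subclass of `int`: it keeps all of `int`'s behavior, but its type tells downstream code what the value represents, and it can carry extra methods such as `show`.

```python
class Category(int):
    """Hypothetical semantic marker: an int that also knows how to show itself."""
    def show(self) -> str:
        return f"category {int(self)}"

label = Category(3)
print(label + 1)               # 4: ordinary int arithmetic still works
print(isinstance(label, int))  # True
print(type(label).__name__)    # Category: the type records what the value means
print(label.show())            # category 3
```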

For example, in fastai v2 there is a capital-I `Int`, which is basically an int that has a `show` method: it knows how to show itself. Caleb asks: "What kind of work do you put in after the pipe?" Not sure what you mean, Caleb.

We don't have a pipe, so feel free to re-ask that question if I'm not understanding. Okay, so this is on the right track. One problem, though, is that we don't want to have to pass the mean and standard deviation in every time.

It would be better if we could grab the mean and standard deviation once: `m, s = t_img.mean(), t_img.std()`. Oops, I'm not sure what I just did. Okay, so now we've got a mean and standard deviation.

What we could do is create a function that's a partial application of `norm` over that mean and standard deviation. Now we could go `norm_img = f(t_img)`. And of course we'll need to put the annotation back; let's just make it `Tensor` for now. There we go.

Or we could do it over our tuple. This is more how we'd want something like normalization to work, because we generally want to normalize a bunch of things with the same mean and standard deviation.
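The standard library's `functools.partial` does exactly this kind of partial application. Here is a sketch using a plain-list `norm` (a stand-in for the tensor version), with the statistics computed once and baked in.

```python
from functools import partial
from statistics import mean, pstdev

def norm(x, m, s):
    """Normalize each value: (x - m) / s."""
    return [(v - m) / s for v in x]

data = [2.0, 4.0, 6.0]
f = partial(norm, m=mean(data), s=pstdev(data))   # bake in the statistics once

print(f(data))     # values centred on 0
print(f([4.0]))    # [0.0]: the mean maps to zero
```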

So this is more the behavior we want, but there are a few other things we'd want for normalization. One is that we generally want to be able to serialize it: we'd want to be able to save the transform we're using to disk, including the mean and standard deviation.

The second thing is that we want to be able to undo the normalization, so that when you get back an image (or whatever) from some model, we can display it, which means we have to be able to denormalize it.

That means we're going to need proper state, and the easiest way to get proper state is to create a class. Oh, before I do that, I'll just point something out. For those of you who haven't spent much time with decorators, this thing where I wrote `@transform` and then `def norm` is exactly the same as doing this.

I could have just said `def _norm(...)` and then `norm = transform(_norm)`. That's identical, as you can see, because we get exactly the same answers. That's literally the definition of a decorator in Python: it takes the function being defined and passes it to the decorator function.

That's what `@` does. So any time you see `@something`... oh, thank you: `transform(_norm)`. Thanks very much for picking up my silly mistakes. So when you see a decorator, it's important to remember that it's something you can always run manually on a function.
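The equivalence is easy to check with any decorator. Below, `transform` is a tiny hypothetical stand-in that maps over tuples; applying it with `@` and calling it by hand give functions that behave identically.

```python
def transform(f):
    """Tiny stand-in decorator: map f over tuples."""
    def _inner(x):
        return tuple(f(o) for o in x) if isinstance(x, tuple) else f(x)
    return _inner

@transform                 # decorator syntax...
def neg_a(x):
    return -x

def _neg(x):
    return -x
neg_b = transform(_neg)    # ...is the same as calling the decorator by hand

print(neg_a((1, 3)), neg_b((1, 3)))   # (-1, -3) (-1, -3)
```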

So, as I was saying, in order to have state, we should turn this into a class; let's call it `Norm` with a capital N. The way `Transform` works is that you can also inherit from it, and if you inherit from it, you have to use special names: `encodes` is the name that transforms expect.

Normally you then wouldn't pass the mean and standard deviation to `encodes`; instead you'd pass them to the constructor. So we could just go `self.m, self.s = m, s`, or we could use the little convenience function we mentioned last time, although it doesn't add much for such a small number of attributes. I'm just showing you the different approaches.

So now `encodes` needs `self.m` and `self.s`. I still have my annotation here. So now we go `f = Norm(m=m, s=s)`. Oh, and of course these still need `self.`. There we go. Oh, and why isn't that working?

So it worked on this one, which isn't a tuple, but not on our tuple. David asks: why would we inherit rather than use the decorator? Basically, if you want some state, it's easier to use a class; if you don't, it's probably easier to use a decorator. That sounds about right to me.

Okay, so let's try to figure out why this isn't working. Oh, I think it's because we forgot to call `super().__init__()`. Yes, that's probably why. Thank you. Oh yeah, we both spotted it at the same time. There we go. So that's an interesting point.
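Here is a hypothetical sketch of how a forgotten `super().__init__()` can break things only on tuples. The stand-in `Transform` base class sets `as_item` in its constructor, and only the tuple code path reads it, so a subclass that skips the base constructor works on single items but raises `AttributeError` on tuples. fastai's real base class differs; this just shows the mechanism.

```python
class Transform:
    """Minimal stand-in base class (hypothetical; not fastai's implementation)."""
    def __init__(self, as_item=False):
        self.as_item = as_item  # state that __call__ relies on

    def __call__(self, x):
        # Only the tuple path reads self.as_item, so a missing super().__init__()
        # goes unnoticed until the transform is applied to a tuple.
        if isinstance(x, tuple) and not self.as_item:
            return tuple(self.encodes(o) for o in x)
        return self.encodes(x)

class NormNoSuper(Transform):
    def __init__(self, m, s):
        self.m, self.s = m, s      # forgot super().__init__(): self.as_item is never set
    def encodes(self, x):
        return (x - self.m) / self.s

class Norm(Transform):
    def __init__(self, m, s):
        super().__init__()         # let the base class set up its state first
        self.m, self.s = m, s
    def encodes(self, x):
        return (x - self.m) / self.s

print(NormNoSuper(m=1, s=2)(3))    # 1.0: works on a single item
try:
    NormNoSuper(m=1, s=2)((3, 5))  # fails on a tuple
except AttributeError as e:
    print("AttributeError:", e)
print(Norm(m=1, s=2)((3, 5)))      # (1.0, 2.0)
```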

It's quite annoying to have to call `super().__init__()`. We actually have something to make that easier, borrowed from an idea the Python standard library's dataclasses use: a special method called `__post_init__` that runs after your `__init__`.

Okay, Juvian, I'll come back to that question; it's a very good one. So if we inherit from our base object class, we get a metaclass (which we'll learn more about later) called `PrePostInitMeta`, which allows us to have a `__pre_init__` and a `__post_init__`.
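Python's `dataclasses` call a `__post_init__` method after the generated `__init__`, and a metaclass can provide the same kind of hook for ordinary classes. The sketch below is hypothetical (`PrePostInitSketch` is not fastai's `PrePostInitMeta`); it shows how base-class setup can run without subclasses calling `super().__init__()`.

```python
class PrePostInitSketch(type):
    """Hypothetical metaclass: runs __pre_init__ before and __post_init__ after __init__."""
    def __call__(cls, *args, **kwargs):
        obj = cls.__new__(cls)
        if hasattr(obj, "__pre_init__"):
            obj.__pre_init__(*args, **kwargs)
        obj.__init__(*args, **kwargs)
        if hasattr(obj, "__post_init__"):
            obj.__post_init__(*args, **kwargs)
        return obj

class Base(metaclass=PrePostInitSketch):
    def __pre_init__(self, *args, **kwargs):
        self.as_item = False       # base-class setup, no super().__init__() required

    def __post_init__(self, *args, **kwargs):
        self.ready = True          # runs after the subclass's __init__ has finished

class Norm(Base):
    def __init__(self, m, s):      # note: no super().__init__() call
        self.m, self.s = m, s

n = Norm(m=0.5, s=2.0)
print(n.as_item, n.m, n.s, n.ready)  # False 0.5 2.0 True
```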

And we may already be using that; let's have a look at `Transform`. No, it doesn't use it. Okay, so we'll have to come back to this; this isn't a great opportunity to use it. Let's answer some questions first. "Can you specify multiple types?"

As Max said, it would be nice if we could use `Union`. I can't remember if I currently have that working. The problem is that Python's typing system isn't at all well designed for use at runtime, and last time I checked, I don't think I had this working.

Let's see. No, there's this really annoying check that they do. So basically, if you want this, you have to do something like the following. Probably the easiest way would be to define `_norm`, move the body there, and then have `encodes` return `self._norm(x)`, and do the same for the other type.

I think that's what you have to do at the moment: have it twice, both calling the same thing, with the two different types. If anybody can figure out how to get `Union` working, that would be great, because this is slightly more clunky than it should be. So if you need multiple different `encodes` for different types, you do need to use a class.
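One possible workaround, sketched as an assumption rather than how fastai does it: `isinstance` has historically rejected `typing.Union[...]` objects, but `typing.get_origin` and `typing.get_args` (Python 3.8+) can expand a union into a plain tuple of types, which `isinstance` does accept. The `transform` decorator here is a hypothetical stand-in.

```python
import inspect
import types
import typing
from typing import Union

# typing.Union, plus types.UnionType for the `A | B` syntax on Python 3.10+.
_UNION_TYPES = tuple(t for t in (Union, getattr(types, "UnionType", None)) if t is not None)

def accepted_types(annotation):
    """Expand a union annotation into a tuple of types usable with isinstance."""
    if typing.get_origin(annotation) in _UNION_TYPES:
        return typing.get_args(annotation)
    return (annotation,)

def transform(f):
    """Hypothetical sketch: apply f only to values (or tuple elements) matching
    the annotation on its first parameter, which may be a Union."""
    first = next(iter(inspect.signature(f).parameters))
    types_ok = accepted_types(typing.get_type_hints(f).get(first, object))

    def _inner(x):
        if isinstance(x, tuple):
            return tuple(f(o) if isinstance(o, types_ok) else o for o in x)
        return f(x) if isinstance(x, types_ok) else x
    return _inner

@transform
def double(x: Union[int, float]):
    return x * 2

print(double((1, 2.5, "label")))  # (2, 5.0, 'label')
```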

So just to confirm: again, you can do it with a class, like so. Well, actually, there are a few ways you can do it. One way is to partially use a class.

You define the class like this, but you just write `pass` in the body. Then you write `encodes` functions separately and use `Norm.encodes` as a decorator, like so, and you can do this a bunch of times.

So that's another way to do it. We now have multiple versions of `encodes` in separate places. We're still defining the class once, but it's an empty class, and the `encodes` can go in different places. We'll see more about this later, but it's actually super important. Say the class was... well, actually, `Norm` is a great example.

Let's say you've got a normalization class, and somewhere you defined it for your tensor images. But later on you create a new data type, for audio, say: you might have an audio tensor, and it might need a different implementation where, I don't know, maybe you have to transpose something or add an axis somewhere.

The nice thing is that this separate implementation can live in a totally different file, a totally different module, even a totally different project, and it will add the functionality to normalize audio tensors to the library. This is one of the really helpful things about this style.
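A sketch of how "add `encodes` for a new type from anywhere" could be built, assuming a hypothetical `DispatchTransform` base class with a class-level `encodes` decorator. Each registered function takes `self` plus an annotated input; a call walks the input's method resolution order, so the most specific registered type wins. The stand-in types `ImageData` and `AudioData` play the roles of the image and audio tensors. This is not fastai's implementation.

```python
import inspect

class DispatchTransform:
    """Hypothetical sketch: per-type encodes that can be registered from anywhere."""
    _impls = {}

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        cls._impls = {}                       # a separate registry for each subclass

    @classmethod
    def encodes(cls, f):
        # The dispatch type is the annotation on the parameter after `self`.
        params = list(inspect.signature(f).parameters.values())
        cls._impls[params[1].annotation] = f
        return f

    def __call__(self, x):
        # Walk the input's MRO so the most specific registered type wins.
        for typ in type(x).__mro__:
            if typ in self._impls:
                return self._impls[typ](self, x)
        return x                              # no match: leave the input unchanged

class ImageData(list): ...
class AudioData(list): ...

class Norm(DispatchTransform):
    def __init__(self, m, s):
        self.m, self.s = m, s

# This could live in the vision module...
@Norm.encodes
def _(self, x: ImageData):
    return ImageData((v - self.m) / self.s for v in x)

# ...and this could live in a separate audio module or project.
@Norm.encodes
def _(self, x: AudioData):
    return AudioData((v - self.m) / self.s for v in x)

norm = Norm(m=1.0, s=2.0)
print(norm(ImageData([3.0])), norm(AudioData([5.0])), norm(7))  # [1.0] [2.0] 7
```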

You'll see that this is great for data augmentation in particular: anybody can add data augmentation for their own types to the library with just one line of code. Well, two lines of code, one for the decorator. Oh, great question, Juvian. So the way `encodes` works, or more generally the way this dispatch system works, is... let's check it out.

Let's have one version print `a` and another print `b`, where the first is annotated with a tensor type and the second with `MyTensorImage`. If we call it with `mti`, it prints `b`. Why is it printing `b`? Because `MyTensorImage` is further down the inheritance hierarchy, so it's what you would call, Juvian, the closest match: a more specific match.

So it uses the most precise type that it can. This also works for generic, abstract types. The standard library has a class for this somewhere... what's it called? There you go; I'm just a little bit slow.

Python's standard library comes with various type hierarchies, so we could annotate with `numbers.Integral`, for instance, and then call `f(3)`, and as you can see, that version is used. Whereas if we also had one for `int`, it would again call the most specialized version.
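The standard library's `functools.singledispatch` uses the same "most specific type wins" rule, and it also understands abstract base classes such as `numbers.Integral`. It isn't what fastai uses, but it's an easy way to see the behavior. `BaseTensor` and `ImageTensor` are hypothetical stand-ins for a tensor class and `MyTensorImage`.

```python
import numbers
from functools import singledispatch

class BaseTensor: ...                 # stand-in for a tensor class
class ImageTensor(BaseTensor): ...    # stand-in for MyTensorImage

@singledispatch
def f(x):
    return "fallback"

@f.register
def _(x: BaseTensor):
    return "a"

@f.register
def _(x: ImageTensor):
    return "b"

@f.register
def _(x: numbers.Integral):
    return "integral"

print(f(ImageTensor()), f(BaseTensor()), f(3))   # b a integral

@f.register
def _(x: int):          # a more specific match for 3 than numbers.Integral
    return "int"

print(f(3), f("s"))                              # int fallback
```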

So, lots of good questions. Thank you for the tip; I'll check it out later. All right, the next thing we want to be able to do is go in the opposite direction. To go in the opposite direction, we create something called `decodes`.

This would be `x * self.s + self.m`. Let's check it on the normalized image. To run decodes, you call `f.decode`, passing in the image, and there it is. This works for tuples and everything in exactly the same way.
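A hypothetical sketch of a stateful, reversible normalization transform: `encodes` computes `(x - m) / s`, `decodes` computes `x * s + m`, and both map over tuples, so a round trip returns the original values. For brevity it skips type-based filtering, so every tuple element gets normalized.

```python
class Norm:
    """Hypothetical sketch of a reversible normalization transform (not fastai's class)."""
    def __init__(self, m, s):
        self.m, self.s = m, s

    def encodes(self, x): return (x - self.m) / self.s
    def decodes(self, x): return x * self.s + self.m

    def _apply(self, fn, x):
        # Map over tuples, otherwise apply to the single item.
        return tuple(fn(o) for o in x) if isinstance(x, tuple) else fn(x)

    def __call__(self, x): return self._apply(self.encodes, x)
    def decode(self, x): return self._apply(self.decodes, x)

f = Norm(m=127.0, s=150.0)
enc = f((277.0, 127.0))
print(enc)             # (1.0, 0.0)
print(f.decode(enc))   # (277.0, 127.0)
```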

So if we go `f(tuple)` and then `f.decode(f(tuple))`: yep, we've gone back to where we were for the whole tuple. Just to confirm that this actually had an effect... oh, that's not working. Oh, because this should be `mti`.

We're now saying it's this image type. There we go, and decode. There we go. Okay, so those are some of the key pieces of how this dispatch works and why it works this way. But of course, it's not enough to have just one transform. In practice we need to not only normalize, but do other things as well.

For example, maybe we'll have something that flips. Generally we don't need to un-flip things, because we don't usually want to decode data augmentation later on: we want to see the augmented version. So we could probably just use a function for that.

So it's going to take one of our image tensors and return `torch.flip` of `x` along, not the zeroth axis, the first axis, if `random.random()` is greater than 0.5; otherwise we'll leave it alone. So that's an example of some data augmentation we could do.
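A plain-Python sketch of this random flip, assuming an image stored as a list of rows: reversing each row is the analogue of flipping a height-by-width array along its width axis (axis 1). The optional `rng` argument is an addition for this sketch, so the behavior can be made reproducible.

```python
import random

def flip_img(x, rng=random):
    """Flip an image (a list of rows) left to right with probability 0.5."""
    if rng.random() > 0.5:
        return [row[::-1] for row in x]   # reverse each row: flip along the width axis
    return x                               # otherwise leave it alone

img = [[1, 2, 3],
       [4, 5, 6]]
flipped = [[3, 2, 1], [6, 5, 4]]
rng = random.Random(0)
outs = [flip_img(img, rng) for _ in range(100)]
print(sum(o == flipped for o in outs), "of 100 were flipped")
```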

So how would we combine those two things? One simple approach (in fact, let's do it for the whole tuple) would be `transformed_tuple = flip_img(f(img_tuple))`: first we normalize, which was `f`, and then we flip.

Actually, that won't quite work, because the flip can't be applied to tuples; so we could tell it that it's a transform, and now that works, which is good. But it's kind of annoying having to say everything's a transform. It's also annoying because this kind of thing (normalize, then flip, then do something else, then something else)...

...these kinds of pipelines are things we often want to define once and use multiple times. One way we could do that is to say `pipe = compose(...)`. `compose` is just a standard functional and mathematical idea: it runs a bunch of functions in order.

So we could first run our normalization function and then our flip-image function, and now we could just call `pipe`. So that works. The problem is that `compose` doesn't know anything about transforms, so if we now wanted to decode, we can't.

We would have to go, you know, `flip_img.decode`, which actually isn't going to do anything because it doesn't have one; but we don't necessarily know which ones have one and which don't, so you'd have to do something like this. It would be great if we could compose things in a way that knows how to compose decoding as well as encoding.

To do that, you just change from `compose` to `Pipeline`, and it does exactly the same thing, but now we can say `pipe.decode` and it's done. As you can see, this is the idea of trying to get all the pieces to work together in the data transformation domain we're in, specifically the tuple transformation domain.
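A minimal sketch of the difference, assuming transforms expose `__call__` for encoding and an optional `decode` method (hypothetical names for this sketch, not fastai's implementation). `compose` just chains calls, while this `Pipeline` also decodes, in reverse order, and treats anything without a `decode` method (such as a plain function) as having an identity decode.

```python
from functools import reduce

def compose(*funcs):
    """Chain funcs left to right. There is no way to undo the chain."""
    return lambda x: reduce(lambda acc, g: g(acc), funcs, x)

class Pipeline:
    """Hypothetical sketch: encode in order, decode in reverse order."""
    def __init__(self, funcs):
        self.funcs = list(funcs)

    def __call__(self, x):
        for f in self.funcs:
            x = f(x)
        return x

    def decode(self, x):
        for f in reversed(self.funcs):
            # Anything without a decode method (e.g. a plain function) decodes as identity.
            x = getattr(f, "decode", lambda o: o)(x)
        return x

class Norm:
    def __init__(self, m, s):
        self.m, self.s = m, s
    def __call__(self, x):
        return (x - self.m) / self.s
    def decode(self, x):
        return x * self.s + self.m

steps = [Norm(m=1.0, s=2.0), Norm(m=0.0, s=0.5)]
print(compose(*steps)(5.0))   # 4.0, but there's no way to decode it

pipe = Pipeline(steps)
print(pipe(5.0))              # 4.0
print(pipe.decode(4.0))       # 5.0

mixed = Pipeline([float, Norm(m=1.0, s=2.0)])  # a plain callable works too
print(mixed("5"), mixed.decode(2.0))            # 2.0 5.0
```

Reverse order matters: decoding the two `Norm` steps in forward order would give 4.5 rather than 5.0.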

Because `Pipeline` knows we want to be dealing with transforms, it knows that if it gets a normal function rather than a transform, it should turn it into a transform. So let's remove the `@transform` decorator from here and run this again. And I thought that was going to work.

Oh, I thought I had made that the default. Sorry, I think I forgot to export something. Yes, that's correct. So let's do that, and get the right order for the pipeline. Okay.

Let's add a new class. Okay. So we pass an image tuple through the pipeline. And if I add a transform back on here... I've managed to break everything.

Let's go and fix that; that's a problem with live coding, I guess. Oh, and now it's working again. What did I do wrong? Maybe I just needed to restart properly. I guess I did. Okay, sorry about that delay. So what I was saying is that a pipeline knows that the things being passed to it need to be transforms.

If we pass it something that's not a transform (I've made this one not a transform now), the cool thing is that the pipeline will turn it into a transform automatically. So yes, David, people in the San Francisco study group say one of the best things is finding out how many errors I make.

Apparently it's extremely encouraging that I make lots. Next: "I want the default behavior of the transform to encode and decode." The default behavior of `encodes` and `decodes` is to do nothing at all. The decoder doesn't know what the image looked like before the flip.

The decoder isn't storing any state about what was encoded; it's just a function. So the default decoder simply returns whatever it's passed; it doesn't do anything. We haven't got to data blocks yet, so we'll talk about them when we get there. And Kevin, I find I have a lot of trouble with autoreload, so I don't tend to use it, but you can just tap zero twice on the keyboard and it'll restart the kernel, which I thought I did before, but I might have forgotten.

The other thing I find super handy is to go to Edit Keyboard Shortcuts... why don't I see Edit Keyboard Shortcuts? Apparently it's currently broken. That's weird. Anyway, I add a keyboard shortcut for running all the cells above the current cursor, which you can normally do with Edit Keyboard Shortcuts.

So I can just press 0, 0 to restart and then A to run all above, and that gets me back to where I was. Okay. So hopefully you can see how this approach lets us use normal Python functions, but dispatched and composed in a way that makes more sense for data processing pipelines than the default approach Python gives us.

But the nice thing is that they're not that different: the function just behaves like a normal function unless you add type annotations and so forth. Okay, so this is making good progress.

We don't actually need to define `MyTensorImage`, because we already have a `TensorImage` class we can use, and as you can see, it's defined in exactly the same way. So that's the one we should be using. I guess we might as well just use `TensorImage` here, and let's restart.

And then we don't need `MyInt`, because, as I mentioned, we've defined something called `Int` in exactly the same way: again just `pass`, but it's also got a `show` method. So that's now going to be a `TensorImage`. There we go. Okay, there we are.

So the last piece of this puzzle, for now, is that we might want to make our image-loading step into a transform as well. So let's define `create_image`, which takes some file name; it's actually going to create an image.

It's going to return a tensor of the array from `load_image(x)`, and now we can use that here. Let's restart and run all above. Okay, so there you go. The point about this, though, is that what we actually want this to be is an image, not just any old tensor.

We want to avoid non-semantically-typed tensors wherever possible, because then fastai doesn't know what to do with them, and this is currently just a tensor. So we can just say the return type should be `TensorImage`, and it should cast it for us.

Oh, and of course, unless it's in a pipeline, we need to tell it it's a transform for any magic to happen. In practice, I pretty much never need to run transforms outside of a pipeline, except for testing. So you'll see a lot of things without decorators, because we basically always use them in a pipeline.

For example, we could just create a pipeline and pass that in, and that would work as well, because it's a pipeline with just one function being composed. Or, alternatively, we could do the cast ourselves, and that would work too. But having return types is kind of nicer, because it ensures the result is cast properly for you, and it won't bother casting if it's already the right type.
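A sketch of return-type casting, assuming a hypothetical decorator `cast_to_return_type` and a hypothetical marker type `ImageRows`: the result is converted to the annotated return type only when it isn't already an instance of it.

```python
import typing
from functools import wraps

def cast_to_return_type(f):
    """Hypothetical sketch: cast f's result to its return annotation if needed."""
    ret = typing.get_type_hints(f).get("return")

    @wraps(f)
    def _inner(*args, **kwargs):
        res = f(*args, **kwargs)
        if ret is None or isinstance(res, ret):
            return res          # no annotation, or already the right type: no cast
        return ret(res)         # otherwise cast, e.g. list -> ImageRows
    return _inner

class ImageRows(list):
    """Hypothetical semantic marker: an image stored as a list of rows."""

@cast_to_return_type
def create_image(rows) -> ImageRows:
    return [list(r) for r in rows]      # returns a plain list

img = create_image([(1, 2), (3, 4)])
print(type(img).__name__, img)          # ImageRows [[1, 2], [3, 4]]

@cast_to_return_type
def keep(x) -> ImageRows:
    return x

existing = ImageRows([[0]])
print(keep(existing) is existing)       # True: no copy when the type already matches
```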

That's just kind of nice. Random orientations we'll get to later, but if you're interested in looking ahead, you'll find something called "efficient augment" where that stuff is developed. You don't need to learn or understand anything about type dispatch or metaclasses or any of that stuff to use this, any more than you need to in order to use Python's default function dispatch.

You don't have to learn anything about the workings of Python's type dispatch system; you just have to know what it does. We are going to spend time looking at how this works, because that's what a code walk-thru is all about, but you certainly don't have to understand it to use any of this.

The next thing to be aware of is that both pipelines and transforms take an optional additional argument when you create them, `as_item`, which is true or false. `as_item=False` gives you the behavior we've seen: if given a tuple or some other listy kind of thing, it operates on each element of the collection.

But if you say `as_item=True`, it basically turns that behavior off. You can see that in the definition of `Transform`: here it says that if `use_as_item` (which returns `self.as_item`) is true, just call the function; otherwise it does the loop, which in this case is a tuple comprehension.

So you can easily turn off that behavior by saying `as_item=True`, either in the pipeline or in the transform. There are also predefined subclasses of `Transform`, called `TupleTransform` and `ItemTransform`, which set `as_item_force` to `False` and `True` respectively.

The forced versions mean that even if you later create a pipeline with `as_item` set to something, it won't change those transforms. So if you create a `TupleTransform` or an `ItemTransform`, you know that it will always have the behavior you requested, no matter what.

So why does `Pipeline` have this `as_item` setting? In its constructor, `Pipeline` calls `set_as_item`, which simply goes through each function in the pipeline and sets `as_item` on it. The reason we do that is that, depending on where you are in the full data processing pipeline, sometimes you want tuple behavior and sometimes you want item behavior. Specifically, up until you collate your batch, you have two separate pipelines going on.

Each pipeline is applied separately: you might remember from the pets tutorial that we have two totally separate pipelines. Since each of those is only applied to one item at a time, not to tuples, fastai will automatically set `as_item=True` for them, because the items haven't been combined into tuples yet.

Then later in the process, basically after the items have been pulled out of datasets, it sets `as_item=False`, so by the time they get to the data loader, it uses `False`. We'll see more of that later, but it's a little thing to be aware of.
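A hypothetical sketch of the `as_item` switch, its forced variants, and a pipeline that pushes its setting down to its transforms. The class names mirror the ones mentioned here, but the code is an illustration under simplifying assumptions (only tuples count as collections), not fastai's implementation.

```python
class Transform:
    """Hypothetical sketch of the as_item switch (not fastai's implementation)."""
    as_item_force = None  # subclasses can pin the behavior

    def __init__(self, func, as_item=False):
        self.func = func
        self.as_item = as_item if self.as_item_force is None else self.as_item_force

    def __call__(self, x):
        if self.as_item or not isinstance(x, tuple):
            return self.func(x)                # treat the input as one item
        return tuple(self.func(o) for o in x)  # map over the tuple's elements

class TupleTransform(Transform):
    as_item_force = False

class ItemTransform(Transform):
    as_item_force = True

class Pipeline:
    def __init__(self, tfms, as_item=False):
        self.tfms = list(tfms)
        self.set_as_item(as_item)

    def set_as_item(self, as_item):
        for t in self.tfms:
            if t.as_item_force is None:        # forced transforms keep their setting
                t.as_item = as_item

    def __call__(self, x):
        for t in self.tfms:
            x = t(x)
        return x

print(Transform(lambda o: o * 2)((1, 2)))                # (2, 4)
print(Transform(lambda o: o * 2, as_item=True)((1, 2)))  # (1, 2, 1, 2)
print(Pipeline([Transform(lambda o: o * 2), ItemTransform(len)])((1, 2)))                # 2
print(Pipeline([Transform(lambda o: o * 2), ItemTransform(len)], as_item=True)((1, 2)))  # 4
print(Pipeline([TupleTransform(lambda o: o + 1)], as_item=True)((1, 2)))                 # (2, 3)
```

With `as_item=True`, the lambda sees the whole tuple, so `(1, 2) * 2` repeats it rather than doubling each element; the forced `ItemTransform` and `TupleTransform` ignore the pipeline's setting.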

So basically it just tries to do the right thing for you, but of course you don't have to use any of that functionality if you don't want to. Okay. All right, I think that's enough for today. That gives us a good introduction to pipelines and transforms.

And again, I'm happy to take requests on the forum for what you'd like to look at next. Otherwise, maybe we'll start a deeper dive into how these things are actually put together. We'll see where we go. Okay, thanks, everybody. See you tomorrow.