Prompt Engineering is Dead — Nir Gazit, Traceloop

Transcript

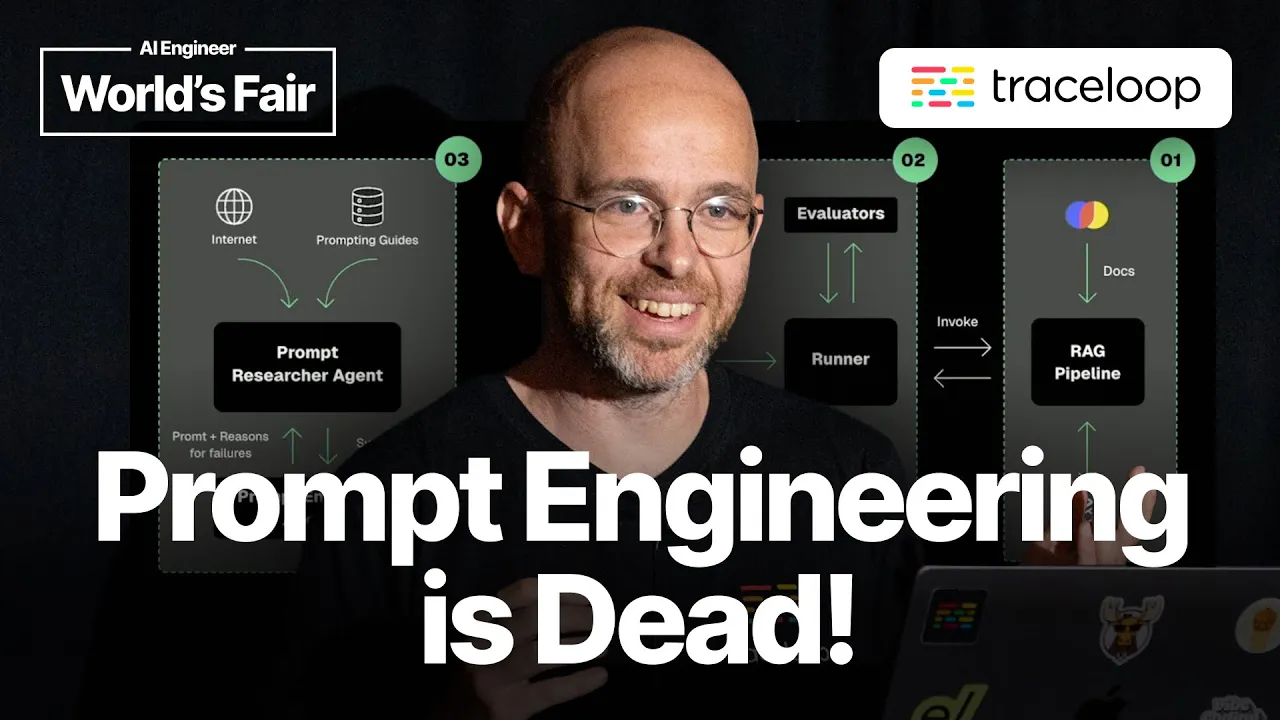

All right so prompt engineering is dead and this is a bold statement but I'm saying that it never actually existed and if you've ever done a prompt engineering you probably know that it's kind of bullshit because you kind of try to ask the LLM to act nicely and do what you want to do do what you wanted to do and so this talk I'm gonna walk you through a story of mine where I managed to improve our kind of sample chatbot that we have in our website and I made it possible for me to made it like five weeks better without actually doing any prompt engineering because you know it's not really engineering right and so we have a chatbot in our application in our website it's a rag-based super simple it just allows you to ask questions about our documentation and gives you answers super simple super straightforward the easiest rag you can think about and when I deployed it the first time it worked kind of okay and I tried to make it work better and you can see some examples here I tried to on I needed to have him only answer things that related to trace loop because this is my company I don't want you know it to answer things about the weather or something else I wanted to answer about trace loop only and I wanted to be useful so if someone asked a question I want the question to be useful for the users who asked it and I wanted to make less mistakes it was making so many mistakes and I wanted to just get a little bit better and and so you know what what are we doing at this stage we're doing prompt engineering but why do I even need to iterate on prompts I just want to give give it a couple of examples this is good this is bad and have it somehow learn you know how to how to follow my instructions properly and always and and so I begin imagining you know I begin imagining I want to build this automatically improving machine that will be kind of like an agent that will research the web find the latest and greatest prompt engineering techniques and and then apply them to my prompt over and over and over again until I get the best prompt for my super simple rag pipeline and and to do that to run this kind of machine I needed to do some a bit more work right because I need you've all been in a lot of conversations here about evaluators so you know that we need an evaluator so the crazy machine can actually know how to improve and if it's actually improving and so to do that I need to create a data set of like questions I want to ask my chatbot about the documentation and then I want to build an evaluator that can evaluate how well my rag pipeline is responding to those questions and then I have the agent that's kind of like iterating on the prompts until I get to the best prompt ever and so this is kind of like how it will look like so this is kind of like I have the right I have the rag pipeline I have the evaluator and then I'm gonna have my auto-improving agent let's begin rag pipeline super simple have a Chroma database have an open AI and some simple prompts that you know take a you know take it to the question find relevant documents in the Chroma database and just output an answer as you will see I'm just super simple one I just ask it a question how do I get started with trace loop and then it runs takes a couple of seconds and then I'm gonna see an answer and I'm gonna see the trace just so you if you've never if you've never seen a rag pipeline and I'm guessing you also a rag pipeline this is how it looks like right couple of calls to open AI and then you know final stage we get like all the context into open AI and we get the final answer to the user it was great and we have a couple of prompts here we probably want to optimize great okay now let's go to the next step my evaluator so what do we need for evaluations and this is not a talk about evaluators I'm sure you've heard a lot of talks about evaluators in this conference but I'm gonna tell you what what kind of evaluator did I choose to use and so first you know you need a data set of questions and and you know a way to evaluate these questions and then the evaluator is gonna kind of invoke the rag pipeline and then get answer from the rag pipeline and then gonna kind of evaluate and get a score maybe a reason if why the score is low or high and then this is kind of what we're gonna use for the agent will be the last step that can auto improve the prompt and so you know there's a couple of there's a lot of types of evaluators I think we've been talking a lot about LLM as a judge and spoiler alert I'm going to choose an LLM as a judge here because it's easier to build and it's easier to deploy but there are also a lot of different types of evaluators kind of like classic NLP metrics you know if you want to do something which is embedded based or all of these colors that can also help you evaluate different types of tasks like translation tasks and and even text summarization tasks and I think the main difference that I see between you know classic NLP metrics and LLM as a judge that classic NLP metrics usually require some ground truth and so I need to have you know my questions and then the actual answers and then if I have the actual answers when I'm invoking my rag pipeline I can actually get you know I can compare the ground truth answer against whatever the rag pipeline return.

Great. And then the LLM as a judge they can work with ground truth sometimes you can build a judge that can judge an answer based on some real answer that you expect but they can also they can also try to assess an answer just based on like the question and the context without any any ground truth.

For my example because I know the data set and I know everything I'm going to build a ground truth based LLM as a judge. But before we're going to do that I'm going to talk to you about where can we evaluate where can we run these evaluators right. So if we have an evaluator what can we evaluate I'm taking like I'm saying that I want to evaluate my rag pipeline but what exactly do I mean.

So the right pipeline is basically two steps right we get the data from the vector database and we run the call open AI with some context from the vector database. So we can kind of evaluate each step separately you can evaluate each one and kind of call it like a unit testing right we can even evaluate how well the vector databases is fetching the data that I need to answer the question.

Or I can run it on like the complete execution of the rag I can take the input the question and take the answer the final answer that I'm getting from the from the rag pipeline and just evaluate how well the answer is given that question. And I can also dive deeper into everything that's happening in the internals look at the context look at the question look at the answer and everything all together try to evaluate given given the context and given the question how well is the answer performing.

So again I'm going to do a simple lm as a judge and I'm going to take 20 examples of questions that I've created and for each question I'm going to write what do I expect the answer to contain. So we're going to have like three facts that the answer that was generated by the rag pipeline should have and then the evaluator is simply going to take the answer that we've gotten from the rag pipeline and make sure that the facts actually appear in the answer.

And so we're going to get you know per each fact we're going to get pass or fail so it's a Boolean response and then a reason. So if it failed I want to see a reason like why the judge thinks that the fact does not appear in the answer that we got.

And so then we're going to get a score which is numeral which is great because we like working with numeral scores which is kind of like summarizing all the facts across all the examples. So we have three facts times 20 examples. It's 60 total facts that we need to evaluate.

I'm going to just check how many facts were right out of the total facts that we expected to have in the rag generated answers. So let's see it in action. And as you can see this is like the questions and the facts and everything. This is what I created to make sure that the rag operates and then I'm going to run it, the evaluator.

And what you'll see that the evaluator is doing is running evaluations, right? So it's taking questions, calling the rag, getting an answer, and then checking that answer against each of the facts that I've given it. And then I'm going to create a score and you'll see the score, you know, slowly progressing as I'm running the evaluator.

This is like a super slow process and I don't have enough time to actually show you it to the end, but it works. It takes just a couple of minutes. Okay. Okay. Okay. Great. Last step is our agent. The agent that will optimize the prompt. We have everything set up.

Now we can actually build the agent. So I'm going to build a researcher agent and it's going to take, you know, as I said, the prompting guides online. It's going to crawl the web. It's going to find prompting guides. It's going to get an initial prompt. And then it's going to take, you know, it's going to take, it's going to run the evaluator once to get like the initial score.

And then if we get the initial score, it's going to get the reasons why we failed some of the evaluations. It's going to combine the reasons for failures plus prompting guides to get a new prompt. And then we're going to get a new prompt and then we're going to feed it back to the evaluator, run it again, get a new score, and then run it again with the agent so we can actually even improve the score a bit more.

If you've ever done kind of ML, classic ML training, this is kind of like a classic machine learning training but with a bit of vibes. So let's see it in action. We're going to -- I've used the crew AI for doing that. And I'm going to kick off. And you can see it will take a couple of seconds.

We can actually see the agent, you know, thinking and then calling the evaluator. And then the evaluator will run and get the responses and get the score. And I hope -- yeah, I hope I can -- yeah, it's running the evaluators. It's calculating the score. And then, you know, the agent is running back.

I skipped a couple of minutes here. And then, you know, we got a response. And then now the agent, the researcher agent is trying to understand, okay, why the prompt wasn't working. Can I find ways to improve RAD pipeline-based prompts? And then it's going to, like, regenerate new kind of, like, prompt with maybe a bit more instructions or kind of best practices on how to write prompts.

And then, you see, calling the evaluator again to get a new score so we know if we need to optimize or not. Great. Okay. This is when I run it once. Actually, the initial score was okay. It was 0.4. And I ran it just two iterations. And I got, like, a really -- like, a long kind of prompt that you expect, like, to see from, like, someone who's done a lot of prompt engineering.

It's, like, giving it a lot of instructions and telling it how it should react and, like, how it shouldn't. You're, like, an expert in answering users' questions about Tracetap. It was really nice to see it happening without me having to -- I wrote a lot of code to make the agent work.

But I didn't need to do -- I didn't need to do a lot of prompt engineering. I didn't need to do any prompt engineering. Right? So it kind of worked. It was really, really nice. And the score actually jumped by a lot. And I stopped at 0.9 because it means that, like, 90% of the effects were correct.

Great. I can stop. So if there's something you want to get out of this talk is that you can also vibe engineer your prompts. You don't need to manually iterate in prompts. You just need to build evaluators. And, again, you kind of can run gradient ascend on your evaluators.

So you have your score. You can kind of slowly try to optimize on your score either automatically with an agent like I did or manually by just, like, reading those manuals about how to write the best prompts. And then fixing them again and again and again. Some future thoughts to wrap it up.

We have two minutes. Okay? Am I overfitting? So I have 20 examples and then I run the evaluators. So maybe, maybe, maybe the prompt that I'm getting will work really well for those 20 examples. But then if I give it, like, another example, it will be horrible. So, yes, I was overfitting that because I just gave it the entire 20 examples.

Ideally, it would have more examples. And then kind of, like, again, classic machine learning. Split it into train test sets or train test eval sets. And then run them, like, separately. Right? You take the train set. This is what you're trying to optimize. But then you also use the test set to make sure that you actually -- you're not overfitting to your training data set.

Right? So you don't want to give the optimizer too much context unless it just will know how to answer those specific questions and nothing else. I told you that prompt engineering is dead. But I've actually done a lot of prompt engineering for this demo because I needed to engineer the agent that is optimizing my prompts.

So it was horrible. But I've done a lot of prompt engineering for that. Maybe I can also do this work for the evaluator prompts or even for the agent prompt. It's kind of like this meta talk where who's even writing prompts here? Like, I'm using the agent to optimize itself.

Maybe it will work. Maybe it won't. I might try it some weekend. It's kind of interesting. Some links. You can try this out. It's available in our repo. It's trace loop slash auto-prompting demo. And you can run it. It should run. I tested it yesterday. And if you have any questions, you're welcome to ask me outside.

Or you can even book some time with me just by not clicking this link but just following this link. Thank you very much. Thank you very much. Thank you very much. Thank you very much. We'll see you next time.