AI powered entomology: Lessons from millions of AI code reviews — Tomas Reimers, Graphite

Transcript

Thank you all so much for coming to this talk, and for being at this conference generally. My name is Tomas. I'm one of the co-founders of Graphite, and I'm here to talk to you about AI-powered entomology. If you don't know, entomology is the study of bugs.

It's something very near and dear to our hearts, and part of what our product does. For those of you who don't know, Graphite builds a product called Diamond. Diamond is an AI-powered code reviewer: you connect it to your GitHub, and it finds bugs.

The project started about a year ago. We noticed that the amount of code being written by AI was going up and up and up, but so was the number of bugs. After really thinking about it, we concluded that the two might be part and parcel, and that we needed to find a better way to address these bugs in general.

Given the technological advances, the first thing we turned to was AI itself. We started to ask: maybe AI is creating the bugs, but can it also find them? Can it help us? We started doing things like asking Claude, "Hey, here's a PR. Can you find bugs on this PR?"

We were pretty impressed with the early results. Here's an example pulled from our codebase this week: it turns out that in certain instances we were returning one of our database ORM classes uninstantiated, which would crash our server.

Here's another example, from our bot, that came up on Twitter this week: in certain instances, math being done on border radii would lead to a division by a negative number, which would then crash the front end. So, to answer the question: it turns out AI can find bugs.

That's the end of the talk. I'm kidding. If you've tried this, you know you've probably had a really, really frustrating experience. We also saw things like "You should update this code to do what it already does," or "CSS doesn't work this way," when it does. Or my favorite: "You should revert this code to what it used to do, because it used to do it."

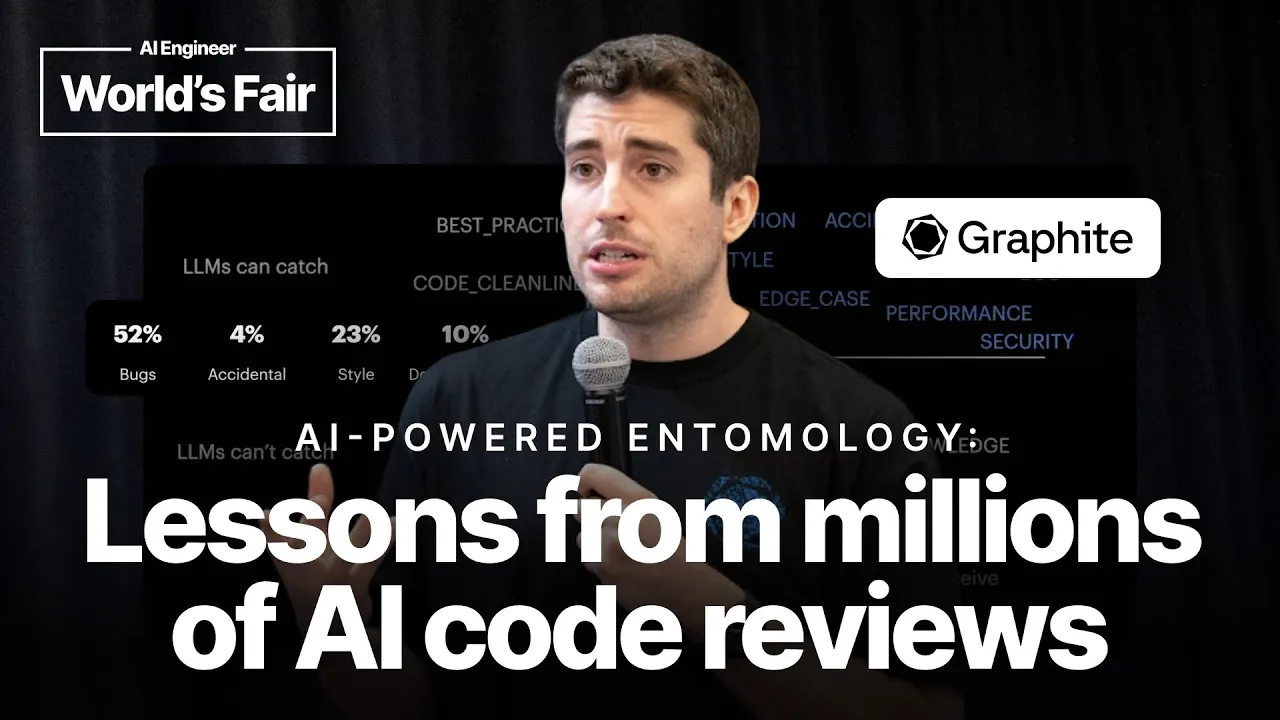

Getting those cost us a lot of confidence. But we started to think: we're seeing some really good things and some really bad things, so maybe there's actually more than one type of bug, more than one type of thing an LLM can find. So we started with the most basic division: there's probably stuff that LLMs are good at catching and stuff they're not good at catching.

At the end of the day, LLMs try to mimic whatever you ask them to do. If you ask, "What kind of code review comments would be left on this PR?", the model leaves everything: both comments within its capability and comments that are not.

So we started to categorize those. What we found, though, was that even after we categorized them, the LLM would leave comments like "You should add a comment describing what this class does," "You should extract this logic out into a function," or "You should make sure this code has tests."

While these are technically correct, developers find them really frustrating. One of the most insightful moments for us in building this project was when we sat down with our design team and went through past review comments in our own codebase, both those left by our bot and those left by humans.

The developers were all pretty much on the same page: "Yep, I'd be okay if an LLM left that." "No, I would not be okay if an LLM left that." "Yes, I'd be okay." Our designers were actually kind of baffled. They said, "Well, that kind of looks like that other comment."

I think what's happening in the developer's mind is that when you read a comment like this, you might find it pedantic, frustrating, or annoying when it comes from an LLM, but you're much more welcoming of it when it comes from a human.

So, as we thought more about that classification of bugs, we realized there's actually a second axis here. There's stuff LLMs can catch and stuff they can't catch, but there's also stuff that humans want to receive from an LLM and stuff they don't want to receive from an LLM.
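The two axes can be pictured as a 2x2 grid: whether an LLM can reliably catch something, and whether humans want to hear it from an LLM. A minimal sketch, assuming each comment category has already been judged on both axes; the `Quadrant` enum and the `quadrant`/`should_surface` helpers are hypothetical, not part of Diamond:

```python
from enum import Enum


class Quadrant(Enum):
    # Vertical axis: can an LLM catch it? Horizontal axis: do humans want it from an LLM?
    TOP_RIGHT = "LLM can catch, humans want it"
    TOP_LEFT = "LLM can catch, humans don't want it"
    BOTTOM_RIGHT = "LLM can't catch yet, humans want it"
    BOTTOM_LEFT = "LLM can't catch, humans don't want it"


def quadrant(llm_can_catch: bool, humans_want_from_llm: bool) -> Quadrant:
    """Place a comment category on the 2x2 grid."""
    if llm_can_catch:
        return Quadrant.TOP_RIGHT if humans_want_from_llm else Quadrant.TOP_LEFT
    return Quadrant.BOTTOM_RIGHT if humans_want_from_llm else Quadrant.BOTTOM_LEFT


def should_surface(llm_can_catch: bool, humans_want_from_llm: bool) -> bool:
    """Only the top-right quadrant is worth having a bot comment on."""
    return quadrant(llm_can_catch, humans_want_from_llm) is Quadrant.TOP_RIGHT


# "Add tests" is something an LLM can always say, but developers don't want it from a bot.
print(quadrant(True, False).name)  # TOP_LEFT
```

The point of keeping both axes explicit is that a category can fail on either one independently: a nitpick fails the second axis even when it is perfectly accurate.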

What we did was take 10,000 comments from our own codebase and from open source codebases, feed them to various LLMs, and ask them to categorize the comments. We did that not just once, but quite a few times.
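Running the categorization several times raises the question of how to combine runs that disagree. The talk doesn't say how Graphite did this; one common approach, sketched here as an assumption, is a majority vote with an agreement threshold. `consensus_label` and `min_agreement` are hypothetical names, and the LLM calls themselves are left out (the function takes the labels each run produced):

```python
from collections import Counter
from typing import List, Optional, Tuple


def consensus_label(run_labels: List[str], min_agreement: float = 0.5) -> Tuple[Optional[str], float]:
    """Combine the categories several LLM runs assigned to one comment.

    Returns the most common label and the fraction of runs that chose it. If that
    fraction does not exceed `min_agreement`, the label is None so the comment can
    be set aside for manual review rather than trusted.
    """
    if not run_labels:
        return None, 0.0
    label, votes = Counter(run_labels).most_common(1)[0]
    agreement = votes / len(run_labels)
    return (label if agreement > min_agreement else None), agreement


print(consensus_label(["bug", "bug", "style"]))  # ('bug', 0.6666666666666666)
print(consensus_label(["bug", "style"]))         # (None, 0.5)
```

Keeping the agreement fraction alongside the label makes it easy to see which categories the models find genuinely ambiguous.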

Then we summarized the results, and we ended up with this chart, which shows that there are quite a few different types of bugs left on codebases in the wild. Ignoring LLMs for a second and talking just about humans, you see bugs: logical inconsistencies that cause the code to behave in a way it isn't meant to.

There's also accidentally committed code, which shows up more than you would expect. There are performance and security concerns. There's documentation, where the documentation says one thing, the code does another, and it's not clear which one is right. And there are stylistic changes, things like "Hey, you should update this comment" or "In this codebase, we follow this other pattern."

Then there's a lot of stuff outside that top-right quadrant. In the bottom right, where humans want to receive it but LLMs don't seem able to get there yet, are things like tribal knowledge. One class of comment you'll see a lot in PRs is "Hey, we used to do it this way. We don't do it this way anymore because of such-and-such." That documentation doesn't exist; it exists in the heads of your senior developers. That's wonderful, but it's really hard for an AI to mind-read that.

On the left side, where LLMs definitely can catch it but humans don't want to receive it, are the things I showed you earlier: code cleanliness and best practices.

Examples we've found are "comment this function," "add tests," "extract this type out into a different type," and "extract this logic out into a function." While these are always correct to say, it's really hard for an LLM to know when to apply them. As a human, you're applying some kind of barometer: in this codebase, this logic is particularly tricky and someone's going to get tripped up, so we should extract it; versus, in this codebase, it's actually fine.

But a bot can pretty much always leave this comment. I'd actually argue a human can pretty much always leave this comment too, and it would be technically correct. The question is whether it's welcome in the codebase. One thing I'll say outside of all this: as you add more context, the area of what people are comfortable receiving seems to grow. But for now, given the context we have (the codebase, its past history, your style guide and rules), we have what we have.

So we ended up with this idea: these are basically the classes of comments that LLMs can create and that humans want to receive. Now, if you've worked with LLMs, you know that these kinds of offline first passes are great for initial categorization, but the much harder question is: how do you know you're continuously right?

So, as the story goes, we started to characterize the comments that LLMs leave. We updated our prompts to only ask the LLM to do things that were within its capability and that humans wanted to receive, and, anecdotally, people started to like it a lot more.
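The talk narrows the prompt itself. A complementary guard, sketched here under assumptions, is to label each candidate comment with a category and drop anything outside the top-right set before posting. The category names paraphrase the chart and are illustrative, not Diamond's actual taxonomy; `CandidateComment` and `filter_comments` are hypothetical:

```python
from dataclasses import dataclass
from typing import List

# Top-right categories from the talk, paraphrased as illustrative labels.
SURFACEABLE = {
    "bug",
    "accidentally_committed_code",
    "performance",
    "security",
    "documentation_mismatch",
    "style_convention",
}


@dataclass
class CandidateComment:
    path: str
    line: int
    category: str  # assumed to come from a separate classification step
    body: str


def filter_comments(candidates: List[CandidateComment]) -> List[CandidateComment]:
    """Keep only comments whose category sits in the top-right quadrant."""
    return [c for c in candidates if c.category in SURFACEABLE]


# The second draft is a code-cleanliness nit, so it is filtered out.
drafts = [
    CandidateComment("models.py", 42, "bug", "This returns the ORM class uninstantiated."),
    CandidateComment("utils.py", 7, "code_cleanliness", "Extract this logic into a function."),
]
kept = filter_comments(drafts)
```

Filtering after generation costs an extra classification step, but it makes the allowlist explicit and easy to audit, independent of how any given model interprets the prompt.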

But then we started to ask: how do we know this is going right? As new LLMs come out, as we moved to Claude 4, or to Opus instead of Sonnet, how do we know we're actually staying in this top-right quadrant?

And as we increase the context, how do we know whether this area is growing on us? Maybe there are even more types of comments we could be leaving that we aren't leaving yet. So, first and foremost, we started by just looking at what kinds of comments the bot is currently leaving.

Your mileage may vary, but for us, this is roughly the proportion of comment types we see the LLM leaving right now. The deeper question for us, though, was how to measure success. Given this quadrant, how do we know we're in the top right?
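Tracking which kinds of comments the bot leaves can be as simple as counting categories over a window of recent comments and watching the share that falls outside the allowed set, for example after switching models or increasing context. A minimal sketch; `category_mix` and `off_quadrant_share` are hypothetical helpers, and the sample data is made up for illustration, not the talk's actual proportions:

```python
from collections import Counter
from typing import Dict, Iterable, Set


def category_mix(categories: Iterable[str]) -> Dict[str, float]:
    """Fraction of bot comments that fall in each category."""
    counts = Counter(categories)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {cat: n / total for cat, n in counts.items()}


def off_quadrant_share(categories: Iterable[str], allowed: Set[str]) -> float:
    """Share of comments whose category is outside the allowed (top-right) set.

    A rise after a model or context change suggests the bot is drifting out of
    the top-right quadrant.
    """
    mix = category_mix(categories)
    return sum(share for cat, share in mix.items() if cat not in allowed)


recent = ["bug", "bug", "style_convention", "best_practice"]
mix = category_mix(recent)  # {'bug': 0.5, 'style_convention': 0.25, 'best_practice': 0.25}
drift = off_quadrant_share(recent, {"bug", "style_convention"})  # 0.25
```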

The first axis was easy for us: what LLMs can catch and what they can't. We added upvotes and downvotes to the product, letting you emoji-react to these comments, and those reactions pretty much tell us when the LLM is hallucinating. When we start to see a downvote spike, we know we might be trying to extend this thing beyond its capabilities and need to tone it down.

But the second axis, what humans want to receive and what they don't, was a lot harder, and we weren't really sure how to get at it.

So we implemented upvotes and downvotes, and these days we see a downvote rate of less than 4%. We felt pretty good about that. For the second axis, as we thought about it, we realized the real question was: what's the point of a comment? Why do you leave a comment in code review?
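The downvote signal can be turned into a simple spike alert. The talk gives only the headline rate (under 4%); the baseline, the multiplier, and the minimum-volume cutoff below are hypothetical parameters, not Graphite's, and `DailyReactions`/`spike_days` are illustrative names:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DailyReactions:
    day: str
    upvotes: int
    downvotes: int


def downvote_rate(up: int, down: int) -> float:
    """Downvotes as a fraction of all reactions; 0.0 when there are none."""
    total = up + down
    return down / total if total else 0.0


def spike_days(
    history: List[DailyReactions],
    baseline: float,
    factor: float = 2.0,
    min_reactions: int = 20,
) -> List[str]:
    """Days whose downvote rate exceeds `factor` times the baseline.

    `min_reactions` keeps a handful of reactions on a quiet day from
    triggering an alert.
    """
    flagged = []
    for d in history:
        if d.upvotes + d.downvotes < min_reactions:
            continue
        if downvote_rate(d.upvotes, d.downvotes) > factor * baseline:
            flagged.append(d.day)
    return flagged


history = [
    DailyReactions("mon", upvotes=97, downvotes=3),
    DailyReactions("tue", upvotes=85, downvotes=15),
    DailyReactions("wed", upvotes=5, downvotes=5),  # too few reactions to judge
]
alerts = spike_days(history, baseline=0.04)  # ['tue']
```

A relative threshold against the baseline, rather than a fixed cutoff, keeps the alert meaningful as the baseline itself improves.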

Ultimately, you leave a comment in code review so that someone updates the code to reflect it. So our question was: can we measure that? Can we measure what percentage of comments actually lead to the change they describe? We started asking that question of open source repos and of the variety of repos that Graphite, as a code review tool, has access to.
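The talk doesn't describe how "led to the change" is detected. One plausible heuristic, assumed here purely for illustration, counts a comment as addressed if a later commit in the same PR modifies lines near the one it was left on. `ReviewComment`, `Commit`, `was_addressed`, `action_rate`, and the `window` parameter are all hypothetical:

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ReviewComment:
    path: str
    line: int
    commit_index: int  # index of the PR commit the comment was left on


@dataclass
class Commit:
    index: int
    changed_lines: Dict[str, Set[int]] = field(default_factory=dict)  # path -> changed line numbers


def was_addressed(comment: ReviewComment, commits: List[Commit], window: int = 3) -> bool:
    """Heuristic: some later commit in the same PR touched lines near the comment."""
    nearby = set(range(comment.line - window, comment.line + window + 1))
    return any(
        commit.index > comment.commit_index
        and bool(nearby & commit.changed_lines.get(comment.path, set()))
        for commit in commits
    )


def action_rate(comments: List[ReviewComment], commits: List[Commit], window: int = 3) -> float:
    """Fraction of comments followed by a nearby change within the PR."""
    if not comments:
        return 0.0
    addressed = sum(was_addressed(c, commits, window) for c in comments)
    return addressed / len(comments)


commits = [Commit(1, {"a.py": {10, 11}}), Commit(2, {"b.py": {40}})]
comments = [
    ReviewComment("a.py", 12, commit_index=0),   # line 11 changed in a later commit: addressed
    ReviewComment("a.py", 100, commit_index=0),  # nothing nearby changed
    ReviewComment("b.py", 40, commit_index=2),   # only change is in the same commit, not a later one
]
rate = action_rate(comments, commits)  # 1/3
```

Like the talk's own number, this only counts changes within the PR, so fix-forward follow-ups and heads-up comments register as unaddressed; a line-proximity check will also miss fixes made elsewhere in the file.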

One of the most fascinating things we found was that only about 50% of human comments lead to changes. So we started to ask: could we get the LLM to at least this level? Because if we can, it's leaving comments at the same level of fidelity as humans.

Now, some of you in the audience might be asking: why don't 100% of comments lead to action? I want to caveat this number. I mean action within that PR itself. A lot of comments get fixed forward, where people say, "Hey, I hear you, and I'm going to fix this in a follow-up."

A lot of comments are also heads-ups, like "In the future, if you do this, maybe you can do it this other way," which don't need to be acted on. And some are purely preferential: "I would do it this way," and someone disagrees.

In healthy code review cultures, that space for disagreement exists. So we started to measure this and asked whether we could get the bot there. Over time, we have: as of March, we're at 52%, which is to say that if you prompt it correctly, you can get there.

Our broader thesis is that finding bugs with an LLM does actually work. If you want to try any of these findings in production, Diamond is our product that offers it, and we have a booth over there. Thank you.