AI in Action: Refactoring with PlantUML, N8N Workflows, and Claude vs. Gemini

Chapters

0:00 Lighthearted Intro

0:44 MCP configured with Codex

1:06 How the MCP and Codex CLI work

1:28 Running the analyst in an E2B sandbox

1:34 Trouble with MCP's interaction with spreadsheet data

2:24 N8N workflow demonstration

3:59 Generating a workflow in N8N with O3Pro

4:18 Assembling a workflow in N8N with O3Pro

4:47 Using PlantUML to define code base refactoring flow

4:59 How PlantUML works

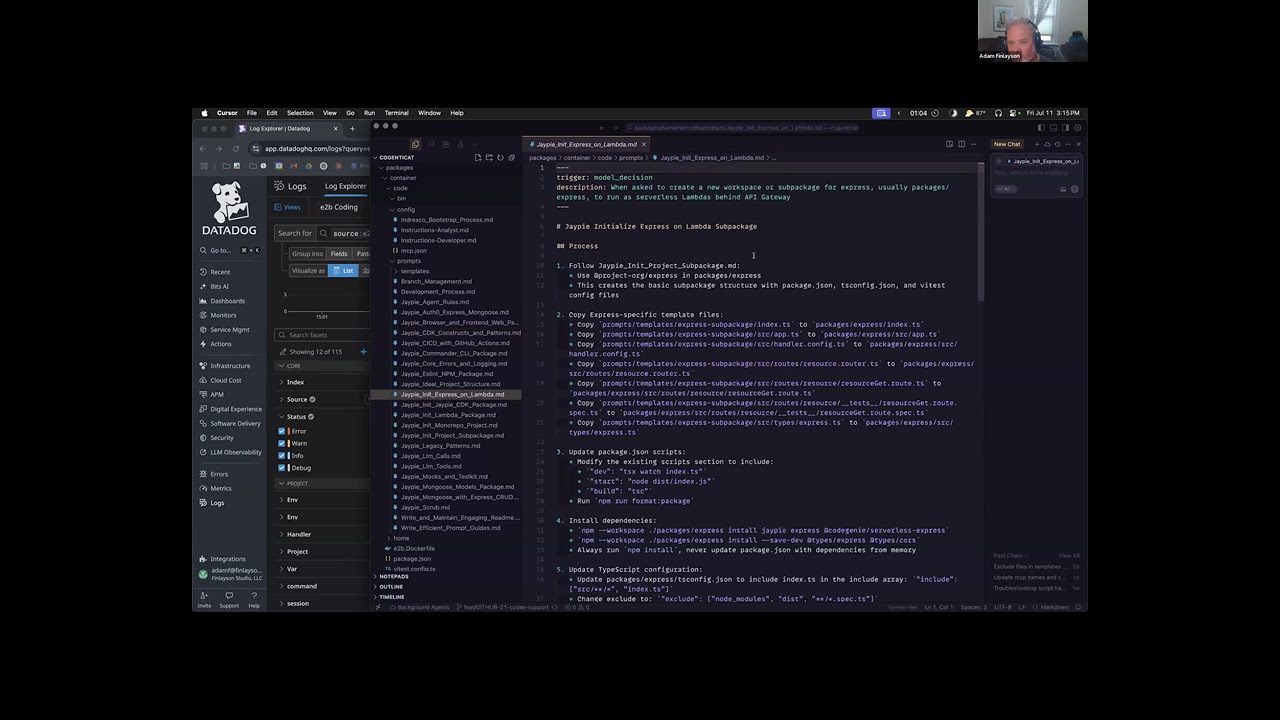

5:07 Connecting MCP to own documentation

5:40 MCP managing a JavaScript sandbox with PlantUML integration

6:06 Using Redis for communication between agents

9:59 Refactoring plan for a codebase with lots of garbage

39:41 "kogi plantuml" search

40:07 PlantUML Activity Diagrams

40:48 Experiences with Claude Code and Gemini

41:14 Elaborating on the plan to refactor the codebase

42:13 Example of a PlantUML diagram from ChatGPT

42:20 Linking the solution to the video

47:11 Elaborating on their code base refactoring flow

50:20 Own MCP, "Jesus"

50:45 Own MCP, "Go-Go-Goja"

51:06 Own MCP, "Vibes"

52:03 Experiences with Claude and Gemini

55:20 PlantUML diagram generated by ChatGPT

56:10 Avoiding over-fitting with their models

58:10 How they avoid over-fitting with their models

59:09 Working with different models at once

59:16 Importance of automating the code base refactoring flow

Transcript

That's a great intro. It is I. Can't pretend to be someone else. I'm excited to see this, though. I'm going to have to duck out at half-past. Adam, do you want to just drive? Do you want me to intro? How do you want to do this? I'll just drive.

Let me see if I can get this command to run on the first shot, which is highly, highly unlikely. That's my hopes there. In fact, if it runs on the first shot, you should not trust it in any capacity. Exactly, exactly. Yeah, so I'm going to see. Because this is going to take a while, I think I want to spawn it now.

I want to see if I can run this analyst that I have set up in an E2B. So that's these tiny little, like, Docker containers, you know, I guess, that you can spin up and have all sorts of toolkits available already on them. I'm going to say complete as much of Advent of Code 2024 as you can.

I only put one puzzle in there. And so I have it on this branch and I have it in this repo. And, oh, yeah, of course. Because, because I'm in the wrong repository, you know, of course. Duh. All right, let's see. Never happens to me. Right, yeah. Why doesn't this have a package.json?

Oh, because it just, because it just doesn't. Let me see if this is going to, or maybe this is kicking off very quietly. That's a possibility also. Let me bring a browser in here. And I don't know if anyone's ever messed with this, you know, this service, but so I should have, yeah, so there's a little sandbox in here and it's running and it's just kind of doing whatever it is that it might be, that it might be doing.

I'm a little bit of a maniac and so I have hooked it up to a, you know, your tool of choice here, right? And so it created a sandbox and it sort of launched it. And the first thing that I do is make sure that Claude's up to date, which it is.

And then it says, okay, I'm supposed to complete, I was told to complete, read this README, you know? So, all right. And I am on, I'm on Opus and I have some MCP servers. And so now it's going to say, I'll help you. What MCPs are you working with?

The only MCPs that I have in there are, well, I have, you know, Claude, I just sort of list all of their tools right when it moves up. So this is just all of the stuff Claude just comes with. This is my own, like, silly MCP server, which is really just a way for it to access my own written documentation about how I like to structure repos and, you know, my beyond Lint preferences.

It's, go ahead. Why wouldn't, why wouldn't you use CLAUDE.md files for that? Um, that's a good, that's a good question. Um, I, I have used some, uh, Windsurf rule files, which is like the same thing, but personally, I'm always bouncing from, um, from like, uh, from IDE to IDE and I want to mess with Codex also.

And so I just found this was at the time, the most reliable way for me to, uh, put things in there. And then I can also put my own silliness in there. Like, you know, uh, if you could ask the user a question, you know, here's an ask, it just always responds with "user's not available, please proceed."

Uh, but I'm just curious how often it would ask. Interesting. Uh, how have you found, uh, where, where are you? So you're coming at this, not from a development perspective. I'm saying this with zero judgment. I'm trying to understand where you're coming from here. Yeah. Okay. Um, how have you been finding Codex and are you talking about Codex CLI or the Codex platform?

I am talking about the Codex CLI and not platform. Um, and big distinction, you know, I, I think that, uh, I'm, I'm, I'm honestly like in the process of getting it rigged up in this same, you know, like E2B setup and it's, um, and I don't like it as much, but maybe it's just because I've been biased towards, uh, towards Claude Code.

Um, like one of my, one of the things that I was just, you know, just my hot takes, you know, like today I was just reading about how to set up its MCP servers. And it's like, our MCP server setup is just like Claude's except it's a TOML file and the key is named something different.

It's like, why the fuck did you do that? You know, but okay. That checks out. Yeah. Um, so do you have a Claude Max sub or are you just paying by the API call? Uh, both, unfortunately, uh, to do it in the background like this, you'd have to pay by the API call.

So this is gonna, you know, this will spit out a charge. Why, why would you have to pay for the API call just to programmatically? Um, now I could be wrong about this, but, uh, I thought because maybe because I'm like, it's not me sitting there at the terminal that it's like, I'm using it as a, I should just be able to throw my.

You can programmatically use Claude Code within your Max subscription. A lot of people, uh, work with like multiple worktrees and, and they'll have like multiple terminal windows open and even like terminal managers. There's something called Claudia up there. And there's, there's actually, it's a very busy space right now.

Um, people trying to like put their own custom harnesses around Claude Code to, to better manage the sessions. Um, I can for sure see that, uh, I feel like I have an upper limit of how much I can just keep mentally track of. Very, very, yeah, that's, that's really, um, I mean, that's the, that's the crux of any, uh, agentic development, uh, enterprise.

Like you have to, you have to, these tools allow you to accelerate a lot and sometimes they allow you to accelerate far past your capability to keep yourself in check. Um, that's a very common anti-pattern I've seen with developers who are freshly adopting this technology, uh, because it's very exciting to get development.

Um, and it's very exciting to see the speed at which things start to work. Um, but also like if you're not being extraordinarily deliberate and planning from the outset, uh, to make a code base that works well with AI, then you're just gonna, uh, you're gonna quickly find yourself at a tipping point slash critical mass of spaghetti.

Um, where there's just nothing to do with it and you just gotta scrap it and start again. My, uh, analogy or the way that I think about this is like most, um, most developers are, uh, are kind of, you know, the, the closest. I can think it's like a, like a Kung Fu master, but they, they follow a very, a single, a single line of thought, very disciplined, you know?

Um, and like, that's what makes a good code base is a very disciplined, you know, sort of well paved roads all the way throughout. Whereas, uh, the models know all the techniques instead of just one technique. And so they mix and match them. And so they make a spaghetti because they, because to them it is interchangeable.

Um, so that's where the CLAUDE.md files and the, uh, documentation references within the repo come into play for me. So the CLAUDE.md files are great because they work in like a nested, uh, a nested way. And so you can include them as low in, uh, the directory structure as their relevance, uh, applies.

So if you've got a module where there's specific rules around like the way that this module works and connects to other modules, you make sure that you've got a CLAUDE.md file in that folder. Well, not just that, but you've got a CLAUDE.md file in that folder.

So I tend to have like a top layer, uh, _doc_ref folder in which I keep, uh, XML formatted documentation for the entire technology stack, uh, in my, uh, project. And I have little index files in there, um, also in XML format, that point to where different components of those technology stacks are noted in there.

And so in the CLAUDE.md files, I have it reference the index to find where it needs to pull that. So that way you can like lazy load relevant context without like eating up the context window to search for that context in the first place. And that greatly reduces context bloat and allows me to, uh, keep task chains running for longer without interruption.
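The index-then-load idea described here can be sketched roughly as follows. This is only an illustration under assumptions, not the speaker's actual setup: the XML element names, attribute names, and file paths are all hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical index file contents. The element names, attributes, and
# paths are assumptions for illustration, not the speaker's real layout.
INDEX_XML = """
<index>
  <entry topic="routing" file="_doc_ref/framework.xml" section="routing"/>
  <entry topic="auth" file="_doc_ref/auth.xml" section="tokens"/>
</index>
"""


def find_doc(topic, index_xml=INDEX_XML):
    """Return (file, section) for a topic, or None if it is not indexed."""
    root = ET.fromstring(index_xml.strip())
    for entry in root.iter("entry"):
        if entry.get("topic") == topic:
            return entry.get("file"), entry.get("section")
    return None


if __name__ == "__main__":
    # Only the small index is read up front. The large documentation file
    # is opened later, and only for the one section that is needed.
    print(find_doc("auth"))
```

The point of the small index is that the agent spends a few tokens finding where to look instead of searching the whole documentation set inside its context window.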

Nice. Nice. Let me see if I can pull a code window in here. Uh, I mean, I must have, yeah, so also just making sure your, your whole workflow is as modular as possible, because the less, the less context the AI needs to be considering to make a productive change, uh, to your code base, probably, absolutely unequivocally, the better outcomes you're going to be getting.

And if you can structure it in a way that you've got like different, uh, tasks that are in, uh, different, uh, modules, that can make sense to have like multiple work streams running at one time where they're not going to like actually get all up in each, like with each other in the middle of doing a thing.

So one thing that I've done with another, uh, another repo that sounds a lot like what you're talking about with the, with the, you know, like I have a top level one, but I haven't put one everywhere. Uh, is with, with Windsurf at least I know you can, uh, you can, you can put a bunch of markdown files in the .windsurf/rules directory, and then you can have these different things that are like, okay, this is always on. Like this is, you know, this has to, this has to be used. Uh, and wait, wait, wait, how big is that file?

I want to go back to that. This one, the one that's always on. There was one that you noted is always on. Yeah. This one's always on. How many lines are we talking here? I guess it's not huge. I mean, so this stuff is relevant to every single task.

Uh, I, I believe so at one time, you know, it's, it's probably honest that this, that parts of this could be, uh, could be cleaned up. Um, you know, but then you can say like, oh, you know, like this one's a great, a lot of useful information in here.

Uh, but then I, I think you can do like a similar thing to, um, like embedding a CLAUDE.md file in a sub directory that you can tell Windsurf, like, Hey, only if it's, you know, this package and, and only if it's in this sub directory, then bring up this file, uh, and, and then you'll know what to do.

So that's, that's, that's kind of, that's, that's similar to, to what you're talking about with Claude. I have not optimized, uh, this particular repository for, for Claude. I'm trying to get it to rely on reading these files, you know, like as it's, as it's coding to, and, and deciding when to read these files.

So generally when it's, when it's making those efforts of its own accord, it's searching wide almost every time. Like, and like at least every context window is searching wide afresh and that search is going to lead to a lot of context rot because also everything that it's looking at in its search for the thing that it needs.

Um, generally speaking, I guess it depends on the exact interactions of the sub agents. Um, I don't use windsurf very often, so I can't speak authoritatively on, on that specifically, but in my experience with the other tools that I've used, uh, a lot of that context rot, uh, makes its way into your outputs.

Um, and so that, that can be something that sort of just like misdirects it in subtle and obnoxious ways. Uh, and it's one of the reasons that people end up clearing, uh, like Claude code sessions so frequently. Mm-hmm, mm-hmm. Yeah. I felt like I had read at some point that you can tell Claude code to use sub agents for certain tasks and that it will respect that.

Um, I have not, uh, I guess I have not tried that and seen it and then observed it working, uh, really successfully for me, but I, but I, if you're telling. For the sub agent workflow, you want to make sure like what you're doing is appropriate for, for sub agents and that like it works as a, as like a work pattern.

So, uh, you, you'd want a multi-layer kind of delegatable tasks. So say you have, you're planning out your project and you've got it into like this series of, of well-contained actionable tasks. You can tell Claude code to spin off a sub agent, uh, for each of these tasks, uh, serially, uh, if they're not parallel processable and then each.

And you can then also specifically give it like which sub agent to use. So like, if you're on a little more limited subscription, you can go with Sonnet and Sonnet is still absolutely good enough for a lot of like well-defined contained tasks. Uh, and that can like greatly expand the, the resources you're working with over the course of the project.

Uh, but anyway, each one of those sub agents that kicks off for that task is going to not pollute the main context other than to say like job done boss. Um, and then it'll kick off the next thing, which will be a new, uh, sub agent with its own fresh context window.

And then the same thing. So you really have to make sure you're setting up the dominoes well for them to be knocking down, uh, because if you just say use sub agents, uh, it's not going to know when it's probably never going to choose it. Um, I, it, I wouldn't say never, it could be very creative if you give it, uh, if you give it unbounded, uh, tasks, it can get very creative on how it tries to answer those.
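The serial sub-agent pattern described above can be sketched like this. It is a minimal illustration, assuming a hypothetical `run_subagent` callable that stands in for whatever actually launches a sub-agent; it is not Claude Code's own API.

```python
def run_tasks_serially(tasks, run_subagent):
    """Run each task in a fresh sub-agent context, one after another.

    run_subagent is a hypothetical callable that takes a list of context
    messages and returns the sub-agent's full output as a string.
    """
    main_context = []   # what the orchestrator keeps in its own window
    full_outputs = {}   # detailed results stored outside the main context
    for task in tasks:
        fresh_context = [task]  # no history from earlier tasks leaks in
        full_outputs[task] = run_subagent(fresh_context)
        main_context.append(f"{task}: done")  # short status line only
    return main_context, full_outputs


if __name__ == "__main__":
    def fake_subagent(context):
        return f"long detailed output for {context[0]}"

    statuses, outputs = run_tasks_serially(
        ["add tests", "rename module"], fake_subagent
    )
    print(statuses)
```

The orchestrator only ever sees the short status lines, which is the "job done, boss" behavior from the discussion.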

And that can be counterproductive a lot of time. All right, let me see what is this, what is this done? Um, let me see if I can, if I can open this. All right. So I started this at the, so well, and one of the reasons that at least for me, like I'm trying to, I'm trying to get this into something that I can analyze this.

I'm, I'm curious, you know, like you to see, you know, like, how does it, you know, how does it overcome problems? You know, how, where does it, where does it go sideways? Um, one of the things that we tried to, that I tried to do with, uh, with a partner, uh, we've been trying to do the last, you know, few days is, uh, give it.

Just, um, like, uh, dumps of dumps of spreadsheets, you know, like just go to, go to an enterprise system and, you know, dump six spreadsheets and tell it to do an analysis, you know? Uh, and yeah, it takes it a lot of, it, it, it doesn't do a good job.

It hallucinates. It gets off track. Uh, and how many are you giving it at a time? Uh, I think we're giving it between two and six in the, in the three different things that we've done. And what model of handling spreadsheets? What was that question? What's the model that's actually trying to parse the spreadsheets?

Oh, uh, we've, uh, tried it with, uh, Sonnet and Opus and hopefully I'll have Codex connected, you know, soon. I'm not a hundred on how well they, I know spreadsheets are a little bit weird when you're parsing them with LLMs. They have different strategies for handling them. And a lot of it's like, uh, code-based and programmatic.

Uh, I found that it liked to write Python scripts, uh, and, uh, and use Python to, to try and load up the spreadsheets. Yeah. That is, that is, that is common. Um, and that can be really problematic if the spreadsheets aren't formatted in a, in a way that, that is productive for that kind of parsing.

Uh, especially if they've got like, uh, empty spots in them, it can get really weird. Uh, also if there's any kind of like digital protection, like 365 sensitivity labeling, uh, with restrictions around that, that can be problematic. But, uh, the one thing that I would definitely consider no matter which flow I was using is I would want to process any attachments in sequence in like a separate, uh, context window, uh, unless there was like a very specific, like A-to-B consideration that you were making. And even then I would try to find a way to parse them individually and then parse the, the outputs because, uh, basically I don't, I'm maybe I over-focus on this. But, but to me, like the wider, the, the context window gets the less valuable your results.
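One way to picture "parse them individually and then parse the outputs" is the sketch below. The `summarize` and `combine` callables are hypothetical stand-ins for model calls; nothing here is tied to a specific tool.

```python
def analyze_separately(attachments, summarize, combine):
    """Summarize each attachment in isolation, then combine the summaries.

    attachments: dict mapping a name to its text content.
    summarize:   hypothetical callable, one model call with a fresh context.
    combine:     hypothetical callable that sees only the short summaries.
    """
    summaries = {}
    for name, content in attachments.items():
        # Each call receives exactly one attachment and nothing else.
        summaries[name] = summarize(content)
    # The final step works on summaries, never on the raw attachments.
    return combine(summaries)


if __name__ == "__main__":
    files = {"orders.csv": "id,total\n1,10\n2,20", "users.csv": "id,name\n1,Ada"}
    report = analyze_separately(
        files,
        summarize=lambda text: f"{len(text.splitlines()) - 1} data rows",
        combine=lambda parts: "; ".join(f"{k}: {v}" for k, v in parts.items()),
    )
    print(report)
```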

And even if you're working within a context window that technically the LLM can handle, um, like, uh, Claude code, Sonnet and Opus, they're technically rated for 200 K tokens. I think the second you get, uh, above 125, it gets funky. It gets a little bit funky and it still might work, but it's not going to be as focused or clear as the output that you're going to be getting with a fresh context window or a context window under 120 K.

Uh, like, likewise, like ChatGPT 4o, uh, the pro version gets a 32 K context window, but the enterprise version gets a 128 K context window. And let me tell you that thing is garbage past 32 K. So like you can, you can get it to accept the task and happily, uh, you know, process it, but the outputs that you're getting are, you know, widely dependent on how you're managing that context and the tool that you're managing that context with and how that tool breaks down the information you're giving it, formatted into the context that it's working with.

Mm-hmm, mm-hmm. Yeah, I think it's, uh, I, I think it's a, it's a very challenging problem. Um, and go ahead. If you're using Codex CLI, I would try 4.1 and see how that did. 4.1 does okay with, uh, instruction following and long context windows.

It ostensibly has a context window limitation up to a million, uh, tokens, but of course, just like anything else, I wouldn't trust it up to that actual million, but it has a much wider range where it doesn't lose the plot. As does, I guess, Gemini 2.5, uh, so both of those might be, uh, candidates for the, for parsing if the other models aren't handling them well.

Yeah, I, I feel like, you know, there's, um, you know, there, there has to be like a, well, there doesn't have to be, there's possibly, you know, like a generalized architecture. You know, that is just like, Hey, you orchestrate and hand things off to, to, to sub agents, you know?

Um, but, uh, yeah, for sure. We're not at the point where you can, where you can just say, Hey, here's some spreadsheets now produce me magic, you know? Um, and so I don't think that, you know, like it's that we're on the verge of just, uh, replacing the entire blue white collar sector tomorrow.

We're not even close, we're not even close, like AI will not take anyone's job. Someone who knows how to use AI will take a lot of people's jobs. Sure, sure, sure. Well, and, and, you know, maybe that, maybe that, you know, brings me to the, you know, the other piece.

I think we wanted to, to talk about at some point, which was, um, uh, this N8, uh, N8N thing. N8N, I've heard, I've heard of it. Yeah, so, uh, uh, and of course, you know, well, maybe this is, maybe this is fortunate, you know, like I had some sort of crash with it and it lost, uh, my ability to, uh, plug into, or I had it plugged into a model.

And now that's gone. Um, but, you know, basically this is, uh, let me just, you know, I can, it's, it's a little workflow tool, right? Yeah, it's a no-code tool, right? Yeah. I can press this and then I can get an HTTP request. Yeah. And so now I can come in here and I can say, uh, do a, so I haven't, I, you know, I haven't messed with this, you know, all but, uh, an hour or two.

Uh, I'm going to, I have already connected to open AI research of your message model. Um, okay. I don't know how familiar people are with these kind of like no code, uh, interfaces. I'm not super familiar with them. Uh, so this was, so what I did find that was cool, uh, is, you know, so if I want my code to plug into this and then this is going to plug into this and, you know, so if I come in here, uh, yeah, it automatically like knows what the stuff that it's going to get wired up is.

And so I think that's kind of, um, what can I ask this model to do? I can just say, uh, hello. Um, my name is, uh, and I am from, uh, you know, operation. So I just have, uh, name can be anything and API, it's whether something came into N8N through the API or through the, through the button.

Uh, that's really all it is. So let me execute this workflow. Of course it has an issue. Um, I think that, uh, You know, maybe where I, maybe where I want to, maybe where I want to pause right away is, uh, like, I no longer think that this is just like an average user task.

You know, like, go ahead, you know, average user. That's a very, that's a very common, uh, experience where people are told that AI solves all their problems. And they're like, okay, I'll go use AI to solve all my problems.

When they get there, they're like, uh, what now? Because it'll do a million things. There's too many ways to skin any cat with AI basically. And any given, like any given, this is like, maybe this is, you know, why I'm, I'm vacillating between these two extremes, you know, is like, this is putting rails up for every goddamn thing.

You know, like this is, this is total, this is total rails. And this would be a nightmare to build, I'm sorry, I'm not catching up on, uh, I'm not up on chat. Uh, this would be like a nightmare to build, like all of your workflows for your entire company.

And then I started thinking about, you know, then you start thinking about the change management process and what happens, what happens when an error happens, I mean, forget an error, you know. You will drown in edge cases if you try to set up your whole company around N8N workflows, uh, just from like the feminism.

I've seen it here. Uh, yeah, it is, it is cool. And slick. Yeah, it is, it is cool. It is cool and slick, you know, and I'm going to keep messing with it because I want to, uh, you know, I want to keep, uh, you know, because I want to see what it can do.

Uh, let me see if there's, uh, I, I would love to, Manuel, I would love to hear, uh, okay. I really like this chat. Uh, I would love to hear, I would love to hear some of your anecdotes, Manuel. So last year at, um, at, uh, the AI engineering conference, uh, this dude showed me, he was working at like some kind of healthcare company.

And so basically all their analysts. And older, I don't know how this is all like structured, but they had like hundreds and hundreds of N8N workflows. The interesting thing was like that the people who were good at prompting were the analysts. And so for example, one analyst had figured out that YAML was a good intermediate format to do prompting things.

So, but they seemed like, I don't know how they were orchestrating all of that stuff, but the dude showed me that like huge N8N workflows to like, and this was, it was like a year ago. So, so it wasn't like full on agents or so, but they were pre AI.

They were already using Natan, like quite a bit. I'm pronouncing it Natan. Cause I'm like tired of, I don't know. Yeah. Yeah. Natan. I like that. Secret with N8N is don't use N8N when you're using N8N. Just like whatever you're doing, go to O3 Pro, say, give me a workflow JSON that does this for me.

So yeah. So that's what I wanted to show the dude. I was like, Oh, give me the JSON. I'll generate one for you. And I was like, okay, well the Sonnet 3.5 at the time didn't really do all too well. Although you could, you know, like build an intermediate step.

Um, but they had like huge, huge workflows that they were using for forever. And that allowed, you know, the analysts to have like little blocks that would connect to their own APIs. And I think that was really useful for them. Well, that, yeah. And that's the, so the, the, the move here is: O3 Pro, here's my Datadog logs.

They're just going to stream to you. Whenever these agents get off track, you should make an N8N workflow that's going to generate the JSON that will get them past this hump. And then you should save that as a template. So you like just recursively feed it back to the model and be stupid.

How do you trigger that? Like, so basically you just, the, the nodes can run JavaScript and then you can also make a, so there's JavaScript nodes and there's, and there's webhook nodes. Like those were, those were like the easiest to, I had to give a workflow. Also don't ask me this question.

Just ask the O3 pro to do it for you. Just like, yeah, yeah. O3 pro is great. Just be aware of the limitations to calls. If you're not paying by API. Yeah. Yeah. It's the other thing that's cool. Is that you have all these like influencers doing N8N things and you can just like screenshot their setups, which some of them are really useful.

Like they're, they're LinkedIn scrapers or whatever. And, and it just saves a lot of time to be like, okay, someone has already done this, put it in. Um, but I, I don't, I can't deal with like graphical interfaces. Yeah. For me, anytime I'm trying to make a graphical interface, I run into something that a graphical interface can't handle, but I need to dig into the CLI and it has just permanently turned me off of GUIs.

Yeah. Don't use the GUI. I don't use the GUI. Like it's, it's only JSON. Yeah. The reason you use it is because it's a structured data format. That's really like, it's really hard for a model to mess up. It, it, it automatically gives you the rails and you can ask it to generate more rails for itself and it's not going to... You just sold me on N8N, or Natan, as we're calling it now, I guess.
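Because the workflow is just JSON, a model's output can be checked mechanically before it is imported. The sketch below assumes an export shape with a `nodes` list (each node has a `name`) and a `connections` object keyed by source node name; treat that shape as an assumption and compare it against a real N8N export before relying on it.

```python
import json


def validate_workflow(raw):
    """Return a list of problems found in a model-generated workflow JSON.

    The expected shape ("nodes" list, "connections" object) is an assumption
    made for this sketch, not a verified schema.
    """
    try:
        workflow = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(workflow, dict):
        return ["top level must be a JSON object"]

    problems = []
    nodes = workflow.get("nodes") or []
    names = {n.get("name") for n in nodes if isinstance(n, dict) and n.get("name")}
    if not names:
        problems.append("workflow has no named nodes")

    for source, outputs in (workflow.get("connections") or {}).items():
        if source not in names:
            problems.append(f"connection from unknown node: {source}")
        if not isinstance(outputs, dict):
            problems.append(f"connections for {source} must be an object")
            continue
        for branch in outputs.get("main", []):
            for link in branch or []:
                if link.get("node") not in names:
                    problems.append(f"connection to unknown node: {link.get('node')}")
    return problems
```

A non-empty result can be fed straight back to the model as the next prompt, which matches the "get the model to fix itself" approach discussed here.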

Yeah. So the, yeah, the, the thing with GUI with lots of applications, but like you know, applications like, like N8N is like, stop trying to use them. Like make the model do everything. Stop trying to be smart. Like don't drive. Don't try to fix it. The model's smarter than you just figure out how to get the model to fix itself.

Yeah. And just be careful with context engineering when you're doing this. Yeah. Or at least that's how I operate these days. It's like, I mean, that makes, that makes perfect sense to me. I like it. It sounds like a solid work. Cause you already have the Datadog, like tracking everything, like getting a feedback loop of Datadog that is tracking that.

And then those agents are generating workflows that are going back into the Datadog that are flagging specific things that are going back into the workflow that the agents are then watching, et cetera, et cetera. So you get these little recursive, recursive self-improvement loops. And it's not this grand AGI thing.

It's just like one little loop that works for me. And you just shove those in everywhere. How do you avoid overfitting in those scenarios? I guess if it's not giving you, if it's not throwing an error, it wouldn't cause it to adjust. Yeah. Well, yeah, yeah. Like, so the, you, you, you review it, but like N8N is kind of how you avoid the overfitting.

Like you give it these really structured places to play and just let it play. It was kind of the, the thing. Okay. Cool. Thank you very much for sharing that. Yeah, it's, I haven't, I feel like I'm not using it enough, but like from, from a philosophy perspective, every time.

So like my, my, my thing that I end up doing is like, uh, when I'm, when I'm thinking about like these sort of AI first workflows is like, I start over engineering them. I'm like, oh, the model's messing up. So I have to step in like, no, that's, I need to like stop myself from doing that.

Like, there's a way to get the model to step in on itself. I just have to find that and give it that. That's interesting. Well, it's not the model. It's a model of your choosing for that workflow. That being O3. Cause yeah. Sometimes it's like ensemble or like, yeah.

So if, if, if there's a thing that this, that just a straight up call to one model is not going to work well, the answer to that is like call multiple models, have them debate, then ensemble that into the, like the ultimate deliverable. So it's always like, ship it back to the model.
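The "call multiple models, have them debate, then ensemble" idea can be sketched as below. Every callable is a hypothetical stand-in for a real model call, and the prompt wording is made up for illustration.

```python
def debate_and_merge(question, models, judge, rounds=1):
    """Collect drafts, let each model revise after seeing the others, then merge.

    models: list of hypothetical callables, prompt string -> answer string.
    judge:  hypothetical callable, (question, drafts) -> final answer.
    """
    drafts = [model(question) for model in models]
    for _ in range(rounds):
        revised = []
        for i, model in enumerate(models):
            # Each model sees every draft except its own.
            others = [d for j, d in enumerate(drafts) if j != i]
            prompt = (
                f"Question: {question}\n"
                "Other answers:\n" + "\n".join(others) + "\n"
                "Revise your answer."
            )
            revised.append(model(prompt))
        drafts = revised
    return judge(question, drafts)


if __name__ == "__main__":
    answer = debate_and_merge(
        "Which column holds revenue?",
        models=[lambda p: "column C", lambda p: "column D"],
        judge=lambda q, drafts: " / ".join(drafts),
    )
    print(answer)
```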

Like the, if the model, if the model can't handle the model and get the model to prompt the model, um, because I'm not going to try to figure out how to prompt the model to get the thing. Um, sounds like. What's funny is when we, uh, something we've observed, um, just in this experimentation with, uh, throwing spreadsheets and READMEs at, um, E2B, you know, with a numeral, we ran out of just letter acronyms, didn't we, damn it.

Uh, you know, I'm just, just throwing things at, at E2B is that when, uh, when we wrote the README, it did not perform as well as when we wrote the README and then asked the model to rewrite the README. They're better at prompting themselves than, than we are.

Uh, like the Anthropic Console Workbench is really, really good for this. I would say there's like a, there's, there's like a sliver above where they're, where they're at, where I've seen, I'm, I wouldn't consider myself to be a talented enough context engineer to, to, uh, improve over O3 Pro's outputs.

But I have seen people who get an output, uh, from something like O3 Pro and then they just go in and they start removing tokens, uh, as superfluous. And I, there's a science to it that I cannot, uh, grok yet. I'm trying to figure it out, but the models are great at that science.

The model, the models will get you very far, I guess. Uh, yeah, that basically is, or, and it's like, yeah, like, or like, I think there's, uh, some principle, like principally papers that like show this, like the, the models are better at prompting themselves than we are. Like we might have like an edge sliver, but end of the day, like that's human stuff.

What you, what the, the thing that you want is to add that little sliver into the search space that the model is looking forward to reconfigure this prompt into like the optimal sort of, sort of directive for the other model. Yeah. Makes sense. Reality is a search problem. change my mind.

That's all I had, you know, I'm happy to answer the questions. I'm happy to hack at something, you know, what's the, yeah. What's something that you can't get the model to do with N8N right now? Or like, what's the, what's an N8N thing where, what is the thing where you're like, it doesn't work for this?

I'm too, I'm too early. You know, I'm still, I'm still like getting my footing to be fair, you know? Well, yeah. So what I, uh, or like, so, uh, six spreadsheets was something that I heard doesn't work. You go, go to O3 pro say, Hey, I have these six spreadsheets.

And I also have N8N that can also like stop to call a model. Just so you know, um, can you give me a workflow that is going to correctly ensemble the models such that they can actually parse the, um, uh, the spreadsheets. Here are the original spreadsheets. And then here are the, the spreadsheet outputs that I didn't like.

So avoid this. And then I'm gonna give you a description of the kind that I like, and then you just think on it and figure it out. Um, so yes, but O3 Pro still has a 128 K context window. And so depending on those spreadsheets, it could be operating outside.

Yeah. So it's going to truncate it, but what it should figure out while it's trying to make that workflow is that it needs to create sub-spreadsheets and send those off to different models. Yeah. So I might workshop your O3 Pro prompt with O3 before you submit it to O3 Pro.
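To make the "sub-spreadsheets" idea concrete, here is a minimal sketch of splitting one CSV into header-carrying chunks that each fit a size budget. The function name `split_rows` and the use of a character count as a rough proxy for a token budget are assumptions for illustration, not anything shown in the session.

```python
import csv
import io


def split_rows(csv_text: str, max_chars: int) -> list[str]:
    """Split one CSV into sub-spreadsheets under max_chars, repeating the header.

    max_chars is a crude stand-in for a model's context budget (an assumption).
    A single row larger than the budget is still emitted as its own chunk.
    """
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, body = rows[0], rows[1:]

    def render(rs: list[list[str]]) -> str:
        buf = io.StringIO()
        csv.writer(buf, lineterminator="\n").writerows(rs)
        return buf.getvalue()

    chunks: list[str] = []
    current = [header]
    for row in body:
        # Close the current chunk if adding this row would exceed the budget.
        if len(current) > 1 and len(render(current + [row])) > max_chars:
            chunks.append(render(current))
            current = [header]
        current.append(row)
    if len(current) > 1:
        chunks.append(render(current))
    return chunks
```

Each chunk could then be sent to a separate model call, with a final step that merges the per-chunk results.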

Yeah. And I might put that in a workflow that says, hey, check this prompt. I might expect that O3 would find out that, oh, when I am creating this prompt of these three spreadsheets, then I should have a model recheck this outgoing spreadsheet-slash-prompt kind of thing that I'm sending during this workflow.

But you need to be able to iteratively prompt it. So you kind of need a deep-research-y workflow, which you can build within it. You have the model node and you have the JS node; you have all the tools you would normally have.

If you're a JS developer, you just also have this JSON-based rails primitive to build it off of. I really liked the way that you put that. So you could build quality gates into each step of the workflow, where if X, Y, Z is not met, and that could be X, Y, Z as determined by a model, do not proceed, or loop back.
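As a rough sketch of the quality-gate idea, the loop below runs a step, checks its output with a gate, and loops back with feedback until the gate passes or a retry cap is hit. The names `run_with_gate`, `step`, and `gate` are hypothetical; in practice both callables might wrap model calls or N8N nodes.

```python
from typing import Callable


def run_with_gate(
    step: Callable[[str], str],
    gate: Callable[[str], bool],
    task: str,
    max_attempts: int = 3,
) -> str:
    """Run a step, check it with a gate, and loop back until the gate passes."""
    prompt = task
    for attempt in range(1, max_attempts + 1):
        output = step(prompt)
        if gate(output):
            return output  # gate satisfied: proceed to the next workflow step
        # Loop back: feed the rejected output into the next attempt.
        prompt = (
            f"{task}\n\nAttempt {attempt} was rejected by the gate. "
            f"Previous output:\n{output}"
        )
    raise RuntimeError(
        f"gate not satisfied after {max_attempts} attempts; escalate to a human"
    )
```

The retry cap doubles as a simple cost limit, and the raised error is the point where something would notify a person.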

Yeah. And stream the Datadog logs of the other models and then have an ensemble of models constantly debating whether the Claude Code prompts need to be changed or intervened on or whatever, and then have something text you when it actually needs a human.

So I guess step one would be setting cost limits. Well, yes. So ideally the place I want to go with this is, the thing with flagship models is they're powerful, but they're out-of-the-box solutions.

So if I have my collection of Datadog logs and my collection of N8N workflows, and I have Kimi K2 or DeepSeek R1, then I can RL my internal model to be better at specifically handling my Datadog and my N8N. I love that. I love that.

That makes all the sense. I'm telling you guys here, it's just in pieces. It's like together. I guess I'm going to try. And anything works: you can use Composio or you can use Make or whatever. It's just anything that sort of constrains it.

I think the way Emmanuel approaches it is he just goes straight raw code and he's like, make me some DSLs and then flip around the words and the DSLs, kind of a situation. I just use N8N because it's easy to self-host and I have a Coolify one-click for it, but it works with pretty much whatever.

It's not that you necessarily need to use N8N either, but this graphical representation of flows is really interesting. I need to dig in more, but here's what I've been doing when using sub-agents. I use M code, but I guess Claude Code is the same.

I will ask it to design a diagram of how to parallelize subtasks, so basically do workflows. I use PlantUML because I think it's actually better suited for it. I ask it to output a PlantUML diagram and then use that as an instruction. Right.

And it allows you to keep the model much more... PlantUML? PlantUML. Yeah. Like the old days, I think, leaving behind UML and all of that. Yeah. It's like Mermaid, but more tailored to software engineering. So if you go look at the activity diagram, that's the one I usually use. Scroll down a little bit.

Yeah. Oh my God. Yeah, they use ads. Don't click it. It's an ad. So if you scroll down, they have this syntax down here that allows you to define... it's a well-known notation that's been around for like 20 years, but it doesn't really matter per se.

It's more that, because it's a DSL-slash-syntax to define control flows, it's a really effective prompting technique compared to, you know, even Markdown to-do lists, because this will by default encapsulate parallelism. Let me see if I can share my screen, because it will become much more evident if I show what I mean with it.

Let me see, I'm on my Mac, so I'm not really used to how to do these things. Let me see. So this might be a little bit too big. There we go. Is that readable? Yep. So let's say we have a big mess of a code base with lots of garbage.

I want to refactor it so that we first define a plan, like an architecture spec, then review it, go back if it's bad, then start implementing a prototype, then based on the prototype launch a series of parallel agents in their own worktrees to refactor the actual code base. I don't know.

Output flow diagram as PlantUML. I'm going to switch away from O3, but I would usually do this in O3, you know, with the actual code base, not the prompt garbage code base. How much of the code base would you use? Whatever the agent decides is necessary.

Right. The goal is to trust it a lot. Right. I don't want to verify; I want to see how far I can push this kind of stuff. And I mean, this is upfront work to tell it where to look.

So it's not too bad. Right. But really my idea is, okay, how much can I do on auto, so that I remove myself from the process? Right. And so this would be a pretty clean way of architecting something, right?

Maybe this is a sub-agent that does the review. Then you implement the prototype, you do this, and then you spawn the parallel refactors. So don't do this in Sonnet, at least not the heavily neutered Sonnet, because that's a recipe for disaster. And then I don't have an agent on here.
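For readers who have not seen the notation, the flow described here (spec, review with a loop back, prototype, parallel refactors) might look roughly like the PlantUML activity diagram emitted by this sketch. The diagram on screen was generated by a model and is not reproduced here; the helper name `refactor_flow`, the module names, and the step labels are illustrative assumptions.

```python
def refactor_flow(modules: list[str]) -> str:
    """Build PlantUML activity-diagram text for a plan/review/prototype/fork flow."""
    lines = [
        "@startuml",
        "start",
        "repeat",
        "  :Define architecture spec;",
        "  :Review spec (sub-agent);",
        "repeat while (Spec is bad?) is (yes) not (no)",
        ":Implement prototype;",
    ]
    # One parallel branch per module; fork/fork again/end fork express parallelism.
    for index, module in enumerate(modules):
        lines.append("fork" if index == 0 else "fork again")
        lines.append(f"  :Refactor {module} in its own worktree;")
    if modules:
        lines.append("end fork")
    lines += ["stop", "@enduml"]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical module names, purely for illustration.
    print(refactor_flow(["email", "billing", "auth"]))
```

The resulting text can be pasted into a prompt as the instruction, which is the technique being described: the `repeat while` and `fork` blocks carry the loop and the parallelism that a Markdown to-do list would leave implicit.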

But I don't know how we could do this: simulate a run-through with agents, as if you were a coding agent with sub-agent tools. I don't know, but this is a really effective prompting technique versus, you know, the more text-oriented way of doing things.

So I recommend trying it out, because you can use it not just for coding, but for anything that's a workflow and an agent. Instead of describing it in text, which is like, ish... and if you use code to describe it, it will prompt it too hard to write code.

But I found this intermediate diagram technique pretty good for prompting sub-agents. So I've put a memory in my ChatGPT subscription so that whenever it thinks to output code, unless I was specifically asking for code, it should default to pseudocode. And I found that to be very productive.

Yeah, so I've been playing around with these different things. I have something really interesting that I'm going to show as well, because it might give people some ideas. I've been playing around with these prompting techniques that are kind of world-changing. "Use tmux" was a big one, where it would always struggle running servers in the background and tailing their logs and closing them properly.

It would run a server, but then not be able to kill it, and would kill other processes. So just using tmux was a pretty good way to solve that. And then for agents, what I think would be really interesting, and I haven't really tried it out yet...

Let me see if I can find it. Man, I generate way too much stuff and madness. It's using the Redis CLI to both synchronize sub-agents and have them communicate with each other. Right. Because they can store data, but they can also subscribe to event queues, so that sub-agents are able to communicate, saying, I've done this and I've modified this file, and then other agents can just pull them.

So I had them create a JavaScript sandbox, and also a set of... so there are low-level primitives for Redis, right, which are cool, but they're a little bit brittle. And then on top of those, I built a whole set of basically agent coordination things. So this is the low-level stuff, but then agents can do this.

And I'm really curious to see, if you give the agents basically the command-line tools to do this, or the JavaScript methods to do this, how much they can start communicating. Because they know what communication between agents looks like. Right. There's enough of that in the training corpus to say, well, I'm going to wait on this agent to have finished refactoring the email stuff before I move on.

And then they call a blocking tool and wait until the email agent says, I'm done. So I haven't played with this too much. But do you have agents hot-loaded and ready to fire off, or do you spin them up for each one?
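The "blocking tool" pattern can be sketched without a Redis server. The class below is an in-memory stand-in (an assumption made so the example runs on its own) for the shared store; with real Redis the same roles would be played by its storage and pub/sub or blocking-list primitives. The names `DoneBoard`, `announce`, and `wait_for` are hypothetical.

```python
import threading


class DoneBoard:
    """In-memory stand-in for a shared store that agents use to coordinate."""

    def __init__(self) -> None:
        self._done: dict[str, str] = {}
        self._cond = threading.Condition()

    def announce(self, agent: str, note: str) -> None:
        """Record that an agent finished and wake up anyone waiting on it."""
        with self._cond:
            self._done[agent] = note
            self._cond.notify_all()

    def wait_for(self, agent: str, timeout: float = 5.0) -> str:
        """Block until the named agent has announced, or raise on timeout."""
        with self._cond:
            finished = self._cond.wait_for(lambda: agent in self._done, timeout=timeout)
            if not finished:
                raise TimeoutError(f"{agent} did not finish within {timeout}s")
            return self._done[agent]


def demo() -> list[str]:
    board = DoneBoard()
    log: list[str] = []

    def email_agent() -> None:
        board.announce("email", "refactored email module")

    def billing_agent() -> None:
        # Blocks here until the email agent says it is done.
        note = board.wait_for("email")
        log.append(f"billing proceeds after: {note}")

    waiter = threading.Thread(target=billing_agent)
    worker = threading.Thread(target=email_agent)
    waiter.start()
    worker.start()
    waiter.join()
    worker.join()
    return log
```

The timeout matters in an agent setting: a waiter that blocks forever on a crashed peer is one of the brittleness problems mentioned above.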

So I have an MCP that's just executing JavaScript, but I haven't tried it out yet. This is just an idea that I'm playing with. I tried to give it shell scripts and say, call redis-cli to synchronize, and that worked in some instances. And it was really cool to see them all on the same pub/sub and say, oh, well, it looks like this agent hasn't finished.

So I'm going to wait. I went through an attempt to automate Claude Code with this before hooks were a thing, and it was a bit of a nightmare, in my experience. But I'm sure there are better ways to do it now.

And it wasn't robust enough. But one thing that we can try is if we take this and go back to our diagram, right? If we rewind to here and then say: now annotate with these APIs to show how to communicate amongst agents, and add it to the flow diagram.

I don't know. This is horrible prompting, but this kind of stuff seems to work pretty well. Yeah, depending on, right. I want to find the structures that make prompting... Making it up on the spot is too hard, too much pressure. Get a model to do it.

So here you can see that they do. Right. Once you prompt them with this and the graph, I think it's going to be really robust. And yeah, I'm going to play more with it. I kind of destroyed my JavaScript MCP. So green that you're going to mess with this one on.

I got to make sure I'm... I think next week, maybe. Oh yeah. Anyway, that's what I wanted to show. Hopefully that gives people some ideas, because I'm curious to see. I think there's so much untapped stuff in agents to actually build big code bases that don't sloppify, right?

Because refactoring a big code base is even more pattern matching than building new features, in a way. Have you done anything with graph databases for code base management, where it sort of summarizes and internalizes that stuff to a graph database for prompting and pulling lazy-loaded sections of it?

I have, a little bit, by using this. I don't know how much I've shared in AI in Action, but I've been doing this JavaScript-based thing where, in JavaScript, you have SQLite. So that gives the model the option to store things, but also create JavaScript functions as needed.

And I told it, oh, model the code base. And that's all I prompted it. I just said, you know, you have a database, you have JavaScript, please model the database. And it did a graph database kind of thing, right. It would start analyzing the files.

So I added a tree-sitter library into my JavaScript sandbox and it started using that. It's pretty crazy. That's what I meant with, I have zero prompt effort. The only thing I need to do is say, write the code. If you don't put "write the code", it will not do it.

So it's really weird. It's like, model the database, by the way, you can write code. And then it will come up with a pretty good graph database on the spot. And I don't need to worry about it. Do you have a repo with an example of that?
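The schema the model came up with is not shown in the session, so the following is only a guess at the general shape: a single edge table in SQLite, plus a recursive query that answers "what depends on this file?". The table layout, the `imports` edge kind, and the file names are all assumptions, and Python's `sqlite3` stands in for the JavaScript sandbox.

```python
import sqlite3


def build_graph(edges: list[tuple[str, str, str]]) -> sqlite3.Connection:
    """Store (src, dst, kind) edges, e.g. ('app.js', 'mailer.js', 'imports')."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE edge (src TEXT, dst TEXT, kind TEXT)")
    db.executemany("INSERT INTO edge VALUES (?, ?, ?)", edges)
    return db


def dependents(db: sqlite3.Connection, target: str) -> list[str]:
    """Return every file that directly or transitively imports `target`."""
    rows = db.execute(
        """
        WITH RECURSIVE up(name) AS (
            SELECT src FROM edge WHERE dst = ? AND kind = 'imports'
            UNION
            SELECT e.src FROM edge e JOIN up ON e.dst = up.name
            WHERE e.kind = 'imports'
        )
        SELECT name FROM up ORDER BY name
        """,
        (target,),
    ).fetchall()
    return [row[0] for row in rows]
```

A query like `dependents(db, "smtp.js")` gives an agent the blast radius of a change, which is the kind of lazily loaded slice of the code base asked about above. Using `UNION` rather than `UNION ALL` keeps the recursion from looping on import cycles.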

Yeah, it's called Jesus. I think it's broken right now. I'm not fully sure. Is that why it's called Jesus? That's what you shout at the computer screen? No, because it's JavaScript. Okay. Right. Like the JS. It used to be called experiments JS web server, but that's kind of... So it has a full REPL and all kinds of crazy stuff.

This is a little bit too over-vibed, I think. But the other thing that I have is this Go-Go-Goja. So it's all Go with a JavaScript thing. And so I cleaned up all my module engine and I'm starting to add more and more modules in there.

So currently those are very, very simple, but in my big Vibes mess repo... So this is all stuff built completely autonomously by Manus. This is code I don't even understand or look at. But I have an LSP interface that seems to work.

I have a tree-sitter interface that works. And then I have a text apply interface that works as well. So I'm going to merge them. Because LSP is really cool. It's really cool to be in JavaScript and say, oh, where's the symbol used?

And then it kind of does it. And I wrote zero code for it. Anyway, I'm really curious to see where all of this goes. So much to play with, so little time. Yeah.

So in Neovim, if you use mcphub.nvim, you can make a native Lua MCP server that has access to the Neovim API, such that you can use things like Telescope and Trouble to let the model just drive Neovim and bounce around between all the symbols and grab LSP outputs and all that. You know, I'm not good at Neovim.

Just let the model do it. What's really funny, and it's pretty token inefficient, but I tried it a little bit: if you give the model tmux and then you say, run Vim and write macros, it will start using tmux to write macros to refactor the file.

It's really funny, and then it will read it back and forth. So it's a little bit slow, right, because it calls the shell tools all the freaking time, but it's really funny to see it just know what to do. Well, yeah. And that's why the Lua MCP is really intriguing to me.

Because it's just going to tool call to drive it. You know, you're hitting them. What models are you guys working with that are using these MCPs effectively? Opus 4 tends to be the one that I usually default to.

I want to find an open source solution for that. Because I think Gorilla can do it. It can do it for OpenAPI, so why can't it do it for MCP? But yeah, Claude 4 seems to be the guy, at least when it comes to tool calling.

And then if Claude needs a model for something else, just give it a tool where it can call O3 if it gets confused or whatever. Yeah. I've been having a really good time. I've been getting better results than I expected by having Claude Code call other models like Gemini for components of the workflow.

Gemini has got that 1 million token context window. And so when there's a deep analysis that I want to kick off in the middle of a component, but, you know, Claude's got that 200K limitation, I have it call Gemini 2.5 Pro. And the trick there: Gemini has really good

throughput on its recall, but when its context window is full, its reasoning degrades fairly quickly. So what you want to do is have Gemini pull out all of the information and make a new document that Claude can then read and do reasoning about.

Yeah, that's exactly the workflow. Because yeah, Gemini is great for summarizing and analyzing, but I haven't found it advertised on code. How do you have Claude Code call Gemini? Do you have an MCP for that, or how would you do it? Uh, you use a code with the API hook.

Use it. So it depends on your workflow, frankly. But yeah, using the hooks, or you structure the... I'm curious if you'd be willing to try out something for me, because I use M code and I don't have time to... I don't want to go into M code, but try the same approach out, which is: if you start having a refactoring plan like this one, also ask it to create the necessary hooks to do it.

So you can say, oh, every time you do this kind of thing, review with Gemini, and X, Y, Z. So basically speaking out loud what you just said, then it generates this Mermaid diagram with, you know, pretty strong pulls. And maybe the hooks as well.

I'm going to have to bounce. But the thing I'm trying to do right now is YOLO refactor. Right. I took a huge code base and I was like, yeah, make this event-driven. That went completely sideways. I like what you're thinking. I'm not a hundred percent sure

I got it, but I'm definitely going to ping you when I have the time to actually explore that idea. Who are you in the Discord? I am slow. No. What are your handles, Adam? All right. Yeah. Cool. I've got to bounce, but that's currently my big thing for the last two weeks: can I YOLO refactor?

Can I YOLO big code bases? Because in my head, it's very simple. Right. I'm going to be like, oh, this needs to be event-driven. And I don't want to prompt more than that. It should be able to come up with a good architecture to do that.

Because it can: if I ask O3, it's going to give me something that's pretty decent. It's just applying that to the code base, which is also very tedious, kind of ad hoc. It's always the same stuff. It doesn't seem to be able to do it, but it feels eminently possible to do that part.

Right. That's what models are made to do, and it's just the way we currently... Yeah. You want a patch-based or diff-based workflow? Or rather, where I'm heading with it is, I think it's going to be patches where... I think it's going to be the ad hoc creation of the tools that you need to do the thing that you need to do.

Right. Because you need to adapt to certain systems where, if you want to refactor, you need to find the right granularity to make sure you're not breaking everything, but also not make it so small that you never actually progress. Because the pattern I've seen is that Claude will start refactoring, right?

I've got a really good plan in O3. I give it to M code, which is Sonnet. And then Sonnet at some point will get a lint error saying, oh, well, this symbol that you're refactoring away is broken. So it'll be like, oh shit, the symbol's broken.

I'm going to write a wrapper. And so it starts wrapping the stuff that actually needs to be removed. And then it starts removing the wrapper and says, oh shit, the wrapper is gone. Let me create... And then some kind of architecture astronaut pattern, where it's going to be like, I'm going to make a mock interface wrapper wrapper.

And then it just explodes from there. Do you have prompts against anti-patterns like that? Like, telling it not to go too hard? I think either it's M code, or it's the model as well. It seems hard to fight against Sonnet's training already. But I think something about the agentic part of it also makes it much easier to forget to do that.

So I'm experimenting, but my trivial approaches were... Context bloat plays into it, although M code is pretty good at that. It doesn't feel like context bloat errors. It feels like, oh, I found something wrong and I reacted upon it, which makes sense when you greenfield, right?

When you greenfield, you want to avoid having errors too early, but when you refactor, you actually want to have errors early, until everything resolves, kind of. So it's an interesting balance. I have to bounce. I'm sorry. We can talk about it. Yeah. Yeah.

Yeah. I definitely... what was yours? Uh, this quick slow. Slow down. My suspicion here is that I am once again trying to over-engineer. I'm suspecting that periodic injections of, no, do it again, might be kind of enough to just... Not in my experience, not in...

Well, I mean, you guys obviously. The burn, right. There's the, that's always the thing. That's, that's interesting. Um, Got a context management tool. Then it's like, oh, okay. Well, let me like, let me do some reasoning. Like, oh, why, why is, why is the user indicating that this is wrong?

Let me reason about what this agent is doing. So now I need to look at its context window, and then I'm going to need to reconfigure that context window in order for it to actually accomplish the goal. So if I just sort of periodically inject that when there's one error, then there's the same error, then there's the original error again, detect that.
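One possible reading of "detect that" is a check for error signatures that keep recurring in the agent's recent history. The sketch below assumes errors have already been reduced to short signature strings; the window size, repeat threshold, function names, and the wording of the injected message are all illustrative choices.

```python
from collections import Counter
from typing import Optional


def is_looping(errors: list[str], window: int = 6, min_repeats: int = 2) -> bool:
    """True if any error signature recurs min_repeats times in the recent window."""
    recent = errors[-window:]
    return any(count >= min_repeats for count in Counter(recent).values())


def next_message(errors: list[str]) -> Optional[str]:
    """Return a corrective message to inject, or None if the agent is not looping."""
    if is_looping(errors):
        return (
            "No, you're doing it wrong. Go back, re-read the plan, "
            "and figure out why this error keeps coming back."
        )
    return None
```

A supervisor could call `next_message` after each tool result and only intervene when it returns something, which keeps the intervention as dumb as the speaker is aiming for.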

And then, no, you're doing it wrong, you need to go back and figure it out, is at least one of the components of the waterfall that is in there. But I'm always like, how can I make this dumber? That's kind of the directive, in my experience.

Yeah. I mean, that's the goal, right? To keep it simple, ideally. But I feel like agents put us in a really weird spot where, depending on how they interpret our simple commands, it can get pretty complicated pretty quick.

Hmm. The other direction I'm kind of heading is functional programming and property testing. Because if I can just take a PRD that defines properties of the software, then I can just fuzz it. Yep. There you go. That's what I've been doing. I've got to get O3 to learn Erlang. I was going to say, I need to learn Erlang, but no, I don't.
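As a small illustration of fuzzing against stated properties, the sketch below checks two properties that a PRD might plausibly state for a slug function: the output uses only lowercase letters, digits, and single hyphens, and applying the function twice changes nothing. The `slugify` function and both properties are invented for this example, and plain `random` is used instead of a property-testing library to keep it dependency-free.

```python
import random
import re
import string
from typing import Callable, Optional

# Property 1: lowercase alphanumeric segments joined by single hyphens (or empty).
SLUG_PATTERN = re.compile(r"(?:[a-z0-9]+(?:-[a-z0-9]+)*)?")


def slugify(title: str) -> str:
    """Example function under test (hypothetical)."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def check_properties(
    fn: Callable[[str], str], trials: int = 500, seed: int = 0
) -> Optional[str]:
    """Fuzz fn with random inputs; return the first counterexample, or None."""
    rng = random.Random(seed)
    alphabet = string.ascii_letters + string.digits + " -_!?/"
    for _ in range(trials):
        text = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 30)))
        out = fn(text)
        if not SLUG_PATTERN.fullmatch(out):
            return text  # violates the allowed-characters property
        if fn(out) != out:
            return text  # violates idempotence
    return None
```

The appeal for agent workflows is that the properties come from the spec rather than from the implementation, so a refactor that breaks behavior shows up as a counterexample instead of a silently updated unit test.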

So yeah, dude, I've been having a lot of good results by just getting it broken out into PRDs for each feature, and linked PRDs. And that's where I get the lazy loading of the edges of each module and how they interconnect.

So I can keep it from getting too much context around the whole project while it's focusing on any component. But that's not for a new refactor of an existing code base. That's just for the planning of... Well, all you need to do is generate a PRD that currently describes that code base.

And then you can say, okay, now... Yes. That's not as simple; it's a little bit more complicated when you're working on an existing code base. Because you've got to do that layered agentic review of each subcomponent. And if it's not already modularized, that gets fun. Yeah. It's definitely a task, but yeah, I think the fundamental primitive of a big list and then smaller to-do lists...

Claude has one. The little tools like think and scratch pad are way more important than we think. Well, think is dangerous, because if you don't actually have much already loaded in the context window... Anthropic themselves will say, don't use think, don't use think hard.

Don't use ultrathink unless you've got... I made my own MCP server that is called think. And what it does is it runs, from the paper, Algorithm of Thoughts. It's like, choose an algorithm, run it down once, and then review it. Can you link that?

That sounds fascinating. I would be very interested in that. I've tried the sequential thinking MCP. It's not much better than a checklist that Opus generates itself, in my experience. Hmm. I'll see if I... it might be broken right now, but it is...

I mean, you know, I'll put it in AI in Action. MCP Reasoner is what it's called, and I think it's got like five algorithms in there. So the idea is, okay, sequentially think this way and then evaluate: will you get to the solution here?

If not, choose a different algorithm and run that. Oh, you muted again, but I think we're... Yeah, I really appreciate this. This is really cool. Broken or not, I'm still going to check it out. It'll still end up being productive somehow, I'm sure. I really appreciate this whole talk; it has been very informative for me.

I appreciate the counterpoints to my experience. It's been very productive. So yeah, thanks. Yeah. I'm curious to see if anything unblocks or changes for you. So yeah, keep dropping it. Yeah. Oh, I will. Yeah. We'll be in touch.

Don't worry about that. All right. I gotta go. Thanks, everybody. I'll catch you later. Bye. Thank you.