HNSW for Vector Search Explained and Implemented with Faiss (Python)

Chapters

0:0 Intro0:41 Foundations of HNSW

8:41 How HNSW Works

16:38 The Basics of HNSW in Faiss

21:40 How Faiss Builds an HNSW Graph

33:33 Fine-tuning HNSW

34:30 Outro

Transcript

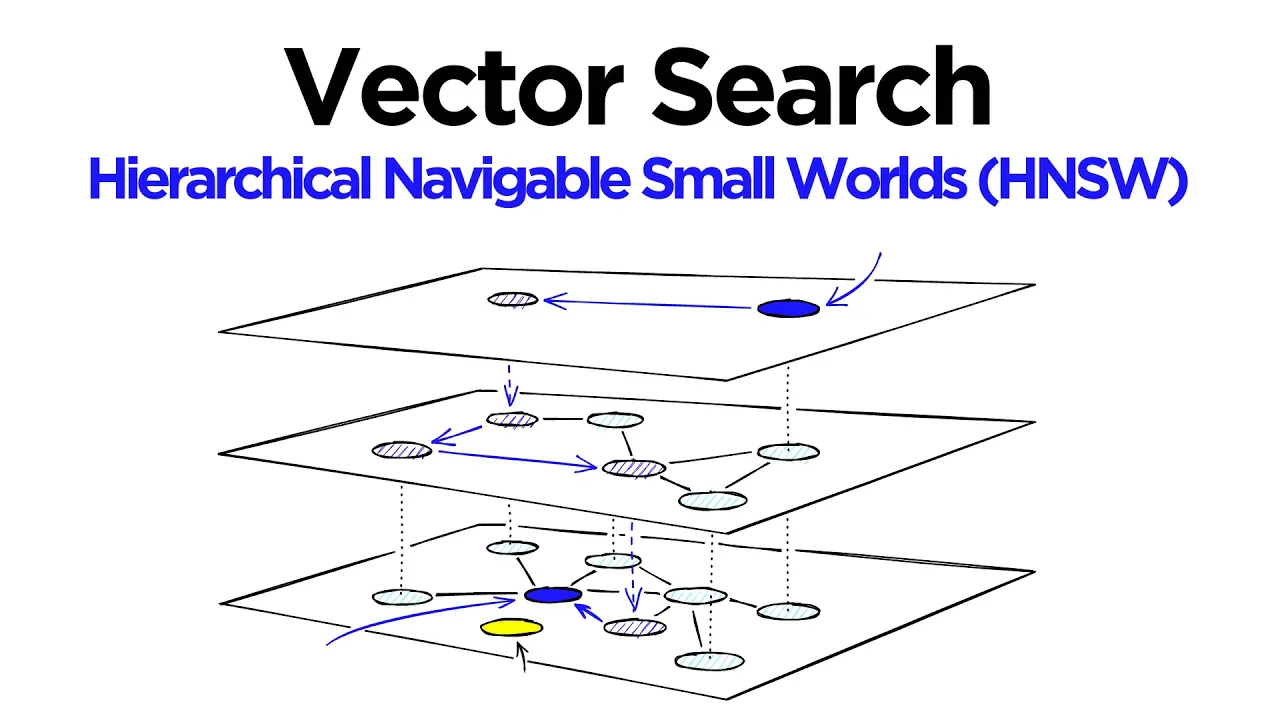

Hi and welcome to the video. We're going to be having a look at the Hierarchical Navigable Small Worlds graph or HNSW graph and how it's used in vector search. Now HNSW is one of the best performing algorithms for approximate nearest neighbors search and what I want to do with this video is explore how it works and how we can implement it in FICE.

So let's go ahead and get started. Now we'll start with the sort of foundations of HNSW and where it comes from. So we can split approximate nearest neighbor algorithms into three broad categories. Trees, hashes, and graphs. Now HNSW, it was HNSW graph so we can figure out it probably belongs in the graph category and more specifically it's a type of proximity graph which is it simply means that vertices which are just the vectors in our graph or the nodes in our graph are connected to other nodes or vertices based on their proximity and we would typically measure this proximity using Euclidean distance.

We don't have to but that's the sort of standard. Now going from a proximity graph which is pretty simple to a HNSW graph is a pretty big leap and there's two main algorithms that have really helped me understand what HNSW actually does and that is the probability skip list and the navigable small world graphs which are just the predecessors of HNSW.

So what I'm going to do is just quickly you know run through those two algorithms. So this is a probability skip list. It's reasonably old. It was introduced in 1990 by a guy called William Poog and it works by building several layers of linked list which is what you can see up here.

So what we do with this is we we're looking for the number 11 or the key 11 and we saw over here the top layer of our start block and what we do is we say in our layer 3 which is our entry layer and we move across. So we go over to the next block here and we say okay number 11 is not 5 and 5 is not greater than 11.

So we continue and we go all the way over to the end block over here. Once we reach the end block we know that we need to go to the next layer so one layer down and so we do that we go to layer 2 at the start block again and we continue moving across.

Now at this point we get to block 19 over here and we say okay 19 is a great 11 so now we know that we need to go on to our next layer, layer 1 down here and then we go across and we find number 11. Now the whole point of this is when we have a lot of values this can reduce the number of steps we need to take in order to get to our final value rather than just going along our layer 0 and going along every single value within our list.

HNSW borrows this hierarchical layered format or levels as we will see pretty soon. Navigable Small World or NSW graphs were developed over the course of a few papers around 2011 up to 2014. The basic idea is that we can have a very large graph with many vertices and because we have both short range links so the sort of links that you see on the outside of our circle here and we also have these long range links which is what you see going across the circle we make our graph very navigable so it's very easy to get from one point to another in very few steps and there's some terminology that we should cover here which is the all the vertices we have here all of their connections so all the other vertices that they are connected to we refer to these as that vertex's friends list okay and when searching this NSW graph we begin at a predetermined entry point so in here we see we're sort of sawing a few different points but in reality with these sort of big graphs we always start with one specific entry point or maybe sometimes we can do use different entry points based on some sort of logic but we have either one or if only a few entry points in our graph and then what we do is we perform what is called greedy routing now routing just refers to the route that we take through our graph and greedy refers to the fact that we are always going to choose out of our vertices friend list we're going to choose the friend that is the closest to our target vertex now if we find that there are no near vertices in our friend list this is a local minimum and this is our stopping condition we cannot get any closer we're kind of stuck in in that local minimum now if our vertex has very few friends we can get stuck quite easily so we can get stuck in the local minimum if there are less connections and we actually refer to vertices that have fewer friends as a low degree vertex so it's low degree it means it has a low number of links or edges and then we also have high degree vertices now with these it's if we are currently on a high degree vertex it's harder to get stuck in local minimum because we have more options on where we can where we can move now the routing through a navigable small world graph is split into two phases so we have the typically the earlier zoom out phase where we are passing through vertices that have a low number of links and then we also have the zoom in phase where we are passing through high degree vertices which have a lot of links and what we tend to find is a high degree vertices will usually have more long range links so we can move further across our graph with these high degree vertices now to minimize the probability of stopping early so avoiding those local minima we can increase the average degree of our vertices now this also increases the complexity of our network and therefore slows down our search time so we have to find a balance between both of those now another approach to avoiding hitting a local minima too early is to start search on high degree vertices so the vertices which have a lot of connections now for nsw we can do this and it works it works for low dimensional data but it doesn't work perfectly and it's actually one of the key factors that hnsw improves upon and uses to improve its own performance so let's move on to to hsw and how both this fit into hsw so hsw is obviously a an evolution of nsw and it borrows inspiration from poops probability skip list structure with the with the hierarchy so you can you can see that here we have a few different layers so we have our entry layer just like we did with the probability skip list structure and we also have an entry point and what we've basically done here is taken a navigable small nsw graph and split it across multiple layers so high vertex high degree vertices will tend to be spread across more layers because when we're building our graph we add the number of friends based on which layer it gets inserted at the higher the layer that the vertex is inserted at the more friends it's going to have which makes a high degree vertex and what that does is if we start at that highest layer that means we're on high degree vertices so we are less likely to get stuck in the local minimum and that means we're not going to stop early so that allows us to start the top layer and we move through our graph and what we do is we have our query vector and on each layer we keep moving or traversing across different edges in that layer in essentially the same way we did for an sw so we greedily identify the friend of our current vertex that has the least distance from our query vector and we move to that and then we we keep doing that and once we hit a local minimum we we don't stop the whole thing we just move down to the next layer and then we we do it again and we keep doing that until we reach the local minimum of layer zero and that is our stopping condition that's where we stop now that that's how we search that's what our graph looks like and how we search but let's have a quick look at how we actually build that graph so what we do is we have a probability function which essentially says we're pretty much going to put every vertex or every vector on layer zero not every but a pretty high number of them as we'll see later and we say okay we get a uniform a random number from uniform distribution and if that number is greater than our probability for that level we move on to the next layer so we increment our layer so let's say this turns out to not be true we move up a layer and we we try again okay now this next layer is going to have lower probability but we're also going to subtract the probability value from our lower layer from the random number that we've produced and we keep doing this again and again until we get up to our top layer at that point there are no other layers this is very unlikely to happen if we say if we have a billion maybe not a billion like a hundred i think it was a hundred million vectors i tried this with i got i think four vectors on the top layer so it is it's very unlikely for one vector to go all the way up to the top but if it does we insert it at that layer and it has the possibility of becoming a entry point for our graph one other thing that we we also have here is the the ml at the top now this is called the level multiplier and this value is it's just a normalization value which allows us to modify the probability or the probability distribution across each one of our layers now the the creators of hsw they found that the the best value for ml the optimum value was to use one over the natural logarithm of m and m we'll we'll have a look at that pretty soon that's just the number of neighbors each vertex is assigned so here we have we we have m so we've set m to three and we we're saying here so we want to insert this blue vector so that blue vector you see we've decided okay we're going to insert at layer one that means it gets an insert of it both layer one and layer zero but not at layer two and we we go through our graph just like we do when we search but this time we're we're trying to find many neighbors of our new vertex that we can assign to it's friendless and what we have here is so with an m value of three we would add three neighbors to that vertex both on layer one and also on layer zero now we can also have a m m max value and an m max zero value which is what we have down here and basically as more vertices are added we may find that this vertex ends up with more than three friends and maybe that that's fine but what we essentially do is use m max to say okay if we have any vertices with more than this number of friends we need to trim it and we need to just keep the closest like three in this example and then m max zero is another value and that is the same so it's the maximum number of friends of vertex you have but for layer zero which is i think always a higher number than the other layers now one other thing that we we should cover very quickly is we also have two more variables ef construction ef search now ef construction is used on we're building our graph and it's basically the number of friends of our inserted vertex that we will use when traversing across layers so in layer one up here we we have these three friends and that means that during our search for friends in the low layers we would go down like this we would start our search from each of these three vertices in the lower layer okay now that would be the case for all of those and ef search is the same but it's used during search time to search through our graph and i mean that they're pretty much that's pretty much all we need to know in on the theory side of hsw so let's dive into the implementation of hsw in advice okay so in we're in python now all i've done is taken our data so i'm using the sift 1m data set as we as we usually do and all i'm going to do is use this to you know search throughout our index as always now the first thing we want to do is actually create or initialize our index so i'm going to use two parameters so we have d which is the dimensionality the sift 1m data set so the length of our vectors in there and also m now m if you remember that's the number of neighbors that we will assign to each vertex in our graph now for this i'm going to use a value of 32 and what we can now do is we write index equals advice index flat hsw and then we just passed dnm and that's you know we've initialized okay sorry so it's not flat hsw it's hsw flat okay there we go so with that we have initialized our index of course there's nothing in there at the moment and we can see that so we can go ahead and write index hsw max level to see okay how many levels do we have in our index and we'll see that we get this minus one which means that the the level is in there that the structure has not been initialized yet and we also see that if we we do this so this is how we get the number of vertices that we have within each layer so we write the vice vector to array and in here we want to write index hsw levels and then let's print out and we see that we we just get this empty array now what we'd also want to do when we do so this is just a massive array so if we we will have a million values in here once we we add our cif-1m data set so what we do is we just write npb count levels to count the number to count basically how many vertices we have in each level when i say level by the way it's the same as earlier on when i was saying layers it's they're the same so when i say levels layers same thing so obviously at the moment we don't have anything in our graph so how do we how do we add that we we already know we just do index add xb okay nothing else so we add that that might take some time because we're building the graph we saw before it's a reasonably intensive process so it does take a little bit of time i think maybe around 40 seconds for this so i will fast forward okay so it's done 52 seconds not too bad now what we're now going to do is i want to have a look at these again so let's see if we we have a structure in our graph yet so max level so that's cool we have that means we have five levels zero one two up to four and then if we do this again we'll be able to see the distribution of vertices across each one of those levels so i'll just write this there we go so we just ignore this this first one here that doesn't mean anything so this is our layer zero like i said most vectors get assigned to this layer now we have layer one all the way up to layer four here or level four now vice will always add at least one vertex to your maximum level because otherwise we we don't have an entry point to our graph so there's always at least one in there and we can also check what which vector is being used there so we can write index hsw entry points yes and there we have said that that's the number of our vector that we have added as our entry point up here you can also change that if you want but i wouldn't probably especially with this i definitely wouldn't recommend it now i was super curious as to how it decides where to insert everything so went through the code which you can find over here so this is the defy scale repo and we have this hsw cpp file and in here we come down and it might take me a little while so this is where we initialize our index and we have this set default probus so if we if we go into set default progress we will find this here which is not really too hard to read i don't read i don't know c++ but this is pretty simple and what i translated that to in python is this here with a few comments because there is no comments in the other file for some reason now what we're basically doing is just building a probability distribution for each of our layers and we do using this this function here so we're taking the exponential of the the level divided by at that ml function or value that i mentioned earlier so that's the the multi-level the level multiplier value and what we get is a probability for the current level now what we do is once we reach a particularly low probability we we start creating new levels so if we we run this oh another actually interesting thing is here we we are saying on every level we will assign m neighbors as our as our m max except from level zero where we will assign two m okay so this is this m times like two so for our use case we had 32 we had m set to 32 so that means in all of our layers except from layer zero we'll have each vertex will have 32 neighbors or friends and on layer or level zero it will have 64 friends so i thought that was that was also interesting so we can go ahead and run that so i'm the 32 here is our m we can replace it m if you if we want so we run that and we get this probability distribution so this is for layer zero the probability of it being added using a random number generator so we're saying if our randomly generated number is less than this value we will assign or we will insert our vertex on at level zero and then we continue that logic all the way down to here which is our level four and then here is the total number so the cumulative number of neighbors per level so if we insert at level four we we will have this many neighbors for that vertex in total if we insert level zero we have 64 again a little bit interesting now in the in the code we when we're inserting vertices we use another function this is the last one we're not going to go through all of them we're just going to go through this one we insert or we decide where to insert our vertex using this function here which is the random level function this takes our sign probus from up here so this here and also a randomly generated number so here i'm using the numpy's random number generator and we go through and that's that's how we assign a different level for each of our values so if i let's run that and we'll see that we get a very similar distribution to to what we get in vice which i is i think pretty cool to see so if we do the bin count again we get this now if you take a look at this these values are so close to to what we produce up here so where is it um so here look at these values almost exactly the same so yeah it's pretty close they're both working in the same way now i also visualize that in in this chart here so you can you can see okay on the right we have a python version on the left we have our vice version and i've included the numbers in there basically that is exactly it's pretty much exactly the same it's off by a few here and there but not much so i thought that was pretty cool to see now okay that's cool that's how everything works that's interesting but what i think many of us are probably most interested in is how do i build the best hs sub u index for my for my use case for what i want so i ran some codes to go through all of that and pretty much i did this so we have this is also a good thing for me to show you um is how we get out ef construction and ef search values so i think you know a good starting point for both of these values is probably 16 32 is reasonable but you can play around them i think that's usually the best way and for most of what i'm going to show you i was using this so i was performing a thousand queries and calculating the recall at one value for those also i was checking you know how long our search is taking and the memory usage of each of these indexes so the first thing i wanted to look at is a recall of course so in in what what we have here on the left we have with a ef constriction value of two which is is very low i person i i don't know never use that never use an ef constriction value that low it well well you can see the recall is pretty terrible but you know surprisingly even though this is a very bad ef constriction value still got up to 40 percent recall so i was impressed with that that was cool and so the ef search values are what you can see on the bottom so it went from from i think two all the way up to 256 256 yeah that's right and we did that again for the ef constriction value of 16 here and also 64 i did it for more ef constriction values but these are probably the ones i think showed the relationship best and then all the colors you can see here we have a few different colors we're using different m values so what i found from this is that you basically you want to increase your your m and your ef search values to improve your recall and ef construction also helps with that so higher ef constriction value means that we need lower ef search and m values to achieve you know the same the same recall now of course you know we can just increase all of these and we we get better recall but that isn't for free we have to sacrifice some of our search time as well as always so we well this is this is what we've got so same same graphs as last time this time we have search time on the y-axis now ef construction here does actually make a bit of a difference when we start using higher m values so i found before in previous videos and articles that ef construction didn't really have too much of an effect on the search time which seemed fair enough because it is mostly a index construction parameter but that it does seem to have some effect when you start adding or increasing the number of queries that you are searching for and in particular where you have high m values now one thing to also note here is that we're using a load scale on the y-axis so the the higher values are definitely a fair bit higher but again i mean if we if we go for a sort of reasonable ef search value all of these are pretty high i mean 256 for your search values is high if we go for sort of es search of 32 let's say around here we're still getting reasonable search times so this is on the millisecond well it's a bit more than a millisecond scale and if we if we take a look at that on uh recall we're sort of getting up into the sort of 60 60 plus area at that point six cent plus and and look obviously it's not bad it's reasonably good but you can you can definitely get better than that when you start merging different indexes which we have covered all of that in another video another article so you'll be able to find that in in the either at the bottom of the article if you're reading the article or in the the video description if you're watching us on youtube now having a look at the the ef construction values again their effect on the search time we find that ef construction when we use those lower end values like i mentioned it doesn't have too much of a impact on the search time so if you are using a lower m value i think ef construction is a a good value to increase because it doesn't tend to make push up your search time too much and you can improve your recall fair bit by increasing it so it's definitely one to one to try and increase them and see how it goes for me i found it it's been quite useful at least and then finally the sort of negative point of h and sub u is the memory usage is just through the roof as you can see here so using the lowest m value which i think was two which is you you would never ever use that we were getting a memory usage for the sift 1m data set of more than more than half a gigabyte if you push m up a lot i mean 500 and 512 is is maybe a little bit too much but we're sort of pushing four and a half gigabytes five gigabytes so it's something to be aware of i think but like i said before we can we can still improve the the memory usage and the search speed by simply using different indexes alongside our hsw index so if memory usage is a concern you just compress the vectors using product quantization if the speed if you need it to be even faster you can use the an ibf index on top of hsw to improve the search times and if you want both of those you just put them all together and you can get that as well now like i said we we have covered all that in a lot more detail in another article and video but for this video on hsw i think now we're gonna leave it there i think we've covered plenty so thank you very much for watching and i'll see you in the next one bye