The spelled-out intro to language modeling: building makemore

Chapters

0:0 intro3:3 reading and exploring the dataset

6:24 exploring the bigrams in the dataset

9:24 counting bigrams in a python dictionary

12:45 counting bigrams in a 2D torch tensor ("training the model")

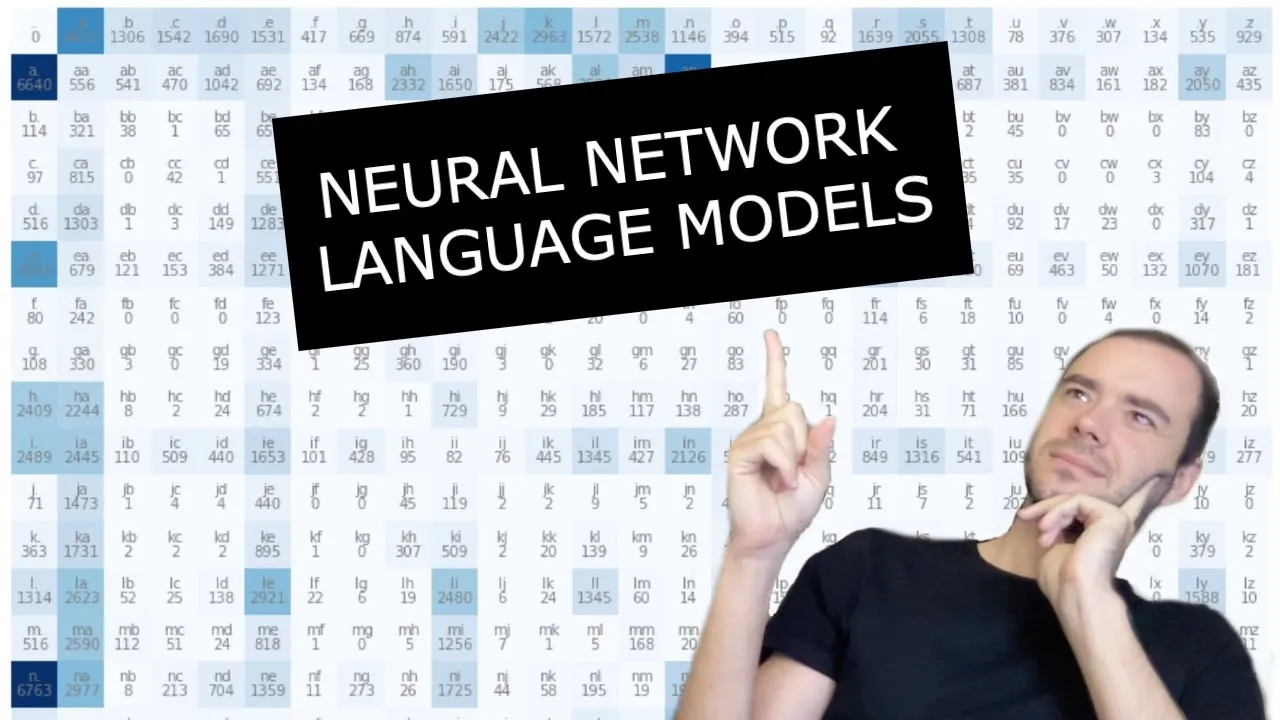

18:19 visualizing the bigram tensor

20:54 deleting spurious (S) and (E) tokens in favor of a single . token

24:2 sampling from the model

36:17 efficiency! vectorized normalization of the rows, tensor broadcasting

50:14 loss function (the negative log likelihood of the data under our model)

60:50 model smoothing with fake counts

62:57 PART 2: the neural network approach: intro

65:26 creating the bigram dataset for the neural net

70:1 feeding integers into neural nets? one-hot encodings

73:53 the "neural net": one linear layer of neurons implemented with matrix multiplication

78:46 transforming neural net outputs into probabilities: the softmax

86:17 summary, preview to next steps, reference to micrograd

95:49 vectorized loss

98:36 backward and update, in PyTorch

102:55 putting everything together

107:49 note 1: one-hot encoding really just selects a row of the next Linear layer's weight matrix

110:18 note 2: model smoothing as regularization loss

114:31 sampling from the neural net

116:16 conclusion

Transcript

Hi everyone, hope you're well. And next up what I'd like to do is I'd like to build out Makemore. Like micrograd before it, Makemore is a repository that I have on my GitHub web page. You can look at it. But just like with micrograd, I'm going to build it out step by step, and I'm going to spell everything out.

So we're going to build it out slowly and together. Now what is Makemore? Makemore, as the name suggests, makes more of things that you give it. So here's an example. Names.txt is an example dataset to Makemore. And when you look at names.txt, you'll find that it's a very large dataset of names.

So here's lots of different types of names. In fact, I believe there are 32,000 names that I've sort of found randomly on a government website. And if you train Makemore on this dataset, it will learn to make more of things like this. And in particular, in this case, that will mean more things that sound name-like, but are actually unique names.

And maybe if you have a baby and you're trying to assign a name, maybe you're looking for a cool new sounding unique name, Makemore might help you. So here are some example generations from the neural network once we train it on our dataset. So here's some example unique names that it will generate.

So, "Montel", "Irat", "Zendi", and so on. And so all these sort of sound name-like, but they're not, of course, names. So under the hood, Makemore is a character-level language model. So what that means is that it is treating every single line here as an example. And within each example, it's treating them all as sequences of individual characters.

So R-E-E-S-E is this example. And that's the sequence of characters. And that's the level on which we are building out Makemore. And what it means to be a character-level language model then is that it's just sort of modeling those sequences of characters, and it knows how to predict the next character in the sequence.

Now we're actually going to implement a large number of character-level language models in terms of the neural networks that are involved in predicting the next character in a sequence. So very simple bigram and bag-of-word models, multilayered perceptrons, recurrent neural networks, all the way to modern transformers. In fact, the transformer that we will build will be basically the equivalent transformer to GPT-2, if you have heard of GPT.

So that's kind of a big deal. It's a modern network. And by the end of the series, you will actually understand how that works on the level of characters. Now, to give you a sense of the extensions here, after characters, we will probably spend some time on the word level so that we can generate documents of words, not just little segments of characters, but we can generate entire large, much larger documents.

And then we're probably going to go into images and image-text networks, such as DALI, stable diffusion, and so on. But for now, we have to start here, character-level language modeling. Let's go. So like before, we are starting with a completely blank Jupyter Notebook page. The first thing is I would like to basically load up the dataset, names.txt.

So we're going to open up names.txt for reading, and we're going to read in everything into a massive string. And then because it's a massive string, we'd only like the individual words and put them in the list. So let's call splitlines on that string to get all of our words as a Python list of strings.

So basically, we can look at, for example, the first 10 words, and we have that it's a list of Emma, Olivia, Ava, and so on. And if we look at the top of the page here, that is indeed what we see. So that's good. This list actually makes me feel that this is probably sorted by frequency.

But okay, so these are the words. Now we'd like to actually learn a little bit more about this dataset. Let's look at the total number of words. We expect this to be roughly 32,000. And then what is the, for example, shortest word? So min of len of each word for w in words.

So the shortest word will be length 2, and max of len w for w in words. So the longest word will be 15 characters. So let's now think through our very first language model. As I mentioned, a character-level language model is predicting the next character in a sequence given already some concrete sequence of characters before it.

Now what we have to realize here is that every single word here, like Isabella, is actually quite a few examples packed in to that single word. Because what is an existence of a word like Isabella in the dataset telling us, really? It's saying that the character i is a very likely character to come first in a sequence of a name.

The character s is likely to come after i. The character a is likely to come after is. The character b is very likely to come after isa. And so on, all the way to a following Isabelle. And then there's one more example actually packed in here. And that is that after there's Isabella, the word is very likely to end.

So that's one more sort of explicit piece of information that we have here that we have to be careful with. And so there's a lot packed into a single individual word in terms of the statistical structure of what's likely to follow in these character sequences. And then of course, we don't have just an individual word.

We actually have 32,000 of these. And so there's a lot of structure here to model. Now in the beginning, what I'd like to start with is I'd like to start with building a bigram language model. Now in a bigram language model, we're always working with just two characters at a time.

So we're only looking at one character that we are given, and we're trying to predict the next character in the sequence. So what characters are likely to follow are, what characters are likely to follow a, and so on. And we're just modeling that kind of a little local structure.

And we're forgetting the fact that we may have a lot more information. We're always just looking at the previous character to predict the next one. So it's a very simple and weak language model, but I think it's a great place to start. So now let's begin by looking at these bigrams in our dataset and what they look like.

And these bigrams again are just two characters in a row. So for w in words, each w here is an individual word, a string. We want to iterate this word with consecutive characters. So two characters at a time, sliding it through the word. Now a interesting, nice way, cute way to do this in Python, by the way, is doing something like this.

Character one, character two in zip of w and w at one, one column. Print character one, character two. And let's not do all the words. Let's just do the first three words. And I'm going to show you in a second how this works. But for now, basically as an example, let's just do the very first word alone, Emma.

You see how we have a Emma and this will just print em, mm, ma. And the reason this works is because w is the string Emma, w at one column is the string mma, and zip takes two iterators and it pairs them up and then creates an iterator over the tuples of their consecutive entries.

And if any one of these lists is shorter than the other, then it will just halt and return. So basically that's why we return em, mm, mm, ma, but then because this iterator, the second one here, runs out of elements, zip just ends. And that's why we only get these tuples.

So pretty cute. So these are the consecutive elements in the first word. Now we have to be careful because we actually have more information here than just these three examples. As I mentioned, we know that e is very likely to come first. And we know that a in this case is coming last.

So what I'm going to do this is basically we're going to create a special array here, our characters, and we're going to hallucinate a special start token here. I'm going to call it like special start. So this is a list of one element plus w and then plus a special end character.

And the reason I'm wrapping the list of w here is because w is a string, Emma. List of w will just have the individual characters in the list. And then doing this again now, but not iterating over w's, but over the characters will give us something like this. So e is likely, so this is a bigram of the start character and e, and this is a bigram of the a and the special end character.

And now we can look at, for example, what this looks like for Olivia or Eva. And indeed, we can actually potentially do this for the entire dataset, but we won't print that. That's going to be too much. But these are the individual character bigrams and we can print them.

Now in order to learn the statistics about which characters are likely to follow other characters, the simplest way in the bigram language models is to simply do it by counting. So we're basically just going to count how often any one of these combinations occurs in the training set in these words.

So we're going to need some kind of a dictionary that's going to maintain some counts for every one of these bigrams. So let's use a dictionary B and this will map these bigrams. So bigram is a tuple of character one, character two. And then B at bigram will be B dot get of bigram, which is basically the same as B at bigram.

But in the case that bigram is not in the dictionary B, we would like to by default return a zero plus one. So this will basically add up all the bigrams and count how often they occur. Let's get rid of printing or rather let's keep the printing and let's just inspect what B is in this case.

And we see that many bigrams occur just a single time. This one allegedly occurred three times. So A was an ending character three times. And that's true for all of these words. All of Emma, Olivia and Eva end with A. So that's why this occurred three times. Now let's do it for all the words.

Oops, I should not have printed. I meant to erase that. Let's kill this. Let's just run and now B will have the statistics of the entire dataset. So these are the counts across all the words of the individual bigrams. And we could, for example, look at some of the most common ones and least common ones.

This kind of grows in Python, but the way to do this, the simplest way I like is we just use B dot items. B dot items returns the tuples of key value. In this case, the keys are the character bigrams and the values are the counts. And so then what we want to do is we want to do sorted of this.

But by default, sort is on the first item of a tuple. But we want to sort by the values, which are the second element of a tuple that is the key value. So we want to use the key equals lambda that takes the key value and returns the key value at one, not at zero, but at one, which is the count.

So we want to sort by the count of these elements. And actually we want it to go backwards. So here what we have is the bigram QNR occurs only a single time. DZ occurred only a single time. And when we sort this the other way around, we're going to see the most likely bigrams.

So we see that N was very often an ending character, many, many times. And apparently N almost always follows an A, and that's a very likely combination as well. So this is kind of the individual counts that we achieve over the entire dataset. Now it's actually going to be significantly more convenient for us to keep this information in a two-dimensional array instead of a Python dictionary.

So we're going to store this information in a 2D array, and the rows are going to be the first character of the bigram, and the columns are going to be the second character. And each entry in this two-dimensional array will tell us how often that first character follows the second character in the dataset.

So in particular, the array representation that we're going to use, or the library, is that of PyTorch. And PyTorch is a deep learning neural network framework, but part of it is also this torch.tensor, which allows us to create multidimensional arrays and manipulate them very efficiently. So let's import PyTorch, which you can do by import torch.

And then we can create arrays. So let's create an array of zeros, and we give it a size of this array. Let's create a 3x5 array as an example. And this is a 3x5 array of zeros. And by default, you'll notice a.dtype, which is short for datatype, is float32.

So these are single precision floating point numbers. Because we are going to represent counts, let's actually use dtype as torch.int32. So these are 32-bit integers. So now you see that we have integer data inside this tensor. Now tensors allow us to really manipulate all the individual entries and do it very efficiently.

So for example, if we want to change this bit, we have to index into the tensor. And in particular, here, this is the first row, because it's zero-indexed. So this is row index 1, and column index 0, 1, 2, 3. So a at 1, 3, we can set that to 1.

And then a will have a 1 over there. We can of course also do things like this. So now a will be 2 over there, or 3. And also we can, for example, say a is 5, and then a will have a 5 over here. So that's how we can index into the arrays.

Now of course the array that we are interested in is much, much bigger. So for our purposes, we have 26 letters of the alphabet, and then we have two special characters, s and e. So we want 26+2, or 28 by 28 array. And let's call it the capital N, because it's going to represent the counts.

Let me erase this stuff. So that's the array that starts at 0s, 28 by 28. And now let's copy-paste this here. But instead of having a dictionary b, which we're going to erase, we now have an n. Now the problem here is that we have these characters, which are strings, but we have to now basically index into an array, and we have to index using integers.

So we need some kind of a lookup table from characters to integers. So let's construct such a character array. And the way we're going to do this is we're going to take all the words, which is a list of strings, we're going to concatenate all of it into a massive string, so this is just simply the entire dataset as a single string.

We're going to pass this to the set constructor, which takes this massive string and throws out duplicates, because sets do not allow duplicates. So set of this will just be the set of all the lowercase characters. And there should be a total of 26 of them. And now we actually don't want a set, we want a list.

But we don't want a list sorted in some weird arbitrary way, we want it to be sorted from A to Z. So a sorted list. So those are our characters. Now what we want is this lookup table, as I mentioned. So let's create a special s to i, I will call it.

s is string, or character. And this will be an s to i mapping for i, s in enumerate of these characters. So enumerate basically gives us this iterator over the integer, index, and the actual element of the list. And then we are mapping the character to the integer. So s to i is a mapping from A to 0, B to 1, etc., all the way from Z to 25.

And that's going to be useful here, but we actually also have to specifically set that s will be 26, and s to i at E will be 27, because Z was 25. So those are the lookups. And now we can come here and we can map both character 1 and character 2 to their integers.

So this will be s to i of character 1, and i x 2 will be s to i of character 2. And now we should be able to do this line, but using our array. So n at x1, i x2. This is the two-dimensional array indexing I've shown you before.

And honestly, just plus equals 1, because everything starts at 0. So this should work and give us a large 28 by 28 array of all these counts. So if we print n, this is the array, but of course it looks ugly. So let's erase this ugly mess and let's try to visualize it a bit more nicer.

So for that, we're going to use a library called matplotlib. So matplotlib allows us to create figures. So we can do things like plt im show of the count array. So this is the 28 by 28 array, and this is the structure. But even this, I would say, is still pretty ugly.

So we're going to try to create a much nicer visualization of it, and I wrote a bunch of code for that. The first thing we're going to need is we're going to need to invert this array here, this dictionary. So s to i is a mapping from s to i, and in i to s, we're going to reverse this dictionary.

So iterate over all the items and just reverse that array. So i to s maps inversely from 0 to a, 1 to b, etc. So we'll need that. And then here's the code that I came up with to try to make this a little bit nicer. We create a figure, we plot n, and then we visualize a bunch of things later.

Let me just run it so you get a sense of what this is. So you see here that we have the array spaced out, and every one of these is basically b follows g zero times, b follows h 41 times, so a follows j 175 times. And so what you can see that I'm doing here is first I show that entire array, and then I iterate over all the individual little cells here, and I create a character string here, which is the inverse mapping i to s of the integer i and the integer j.

So those are the bigrams in a character representation. And then I plot just the bigram text, and then I plot the number of times that this bigram occurs. Now the reason that there's a dot item here is because when you index into these arrays, these are torch tensors, you see that we still get a tensor back.

So the type of this thing, you'd think it would be just an integer, 149, but it's actually a torch dot tensor. And so if you do dot item, then it will pop out that individual integer. So it'll just be 149. So that's what's happening there. And these are just some options to make it look nice.

So what is the structure of this array? We have all these counts, and we see that some of them occur often, and some of them do not occur often. Now if you scrutinize this carefully, you will notice that we're not actually being very clever. That's because when you come over here, you'll notice that, for example, we have an entire row of completely zeros.

And that's because the end character is never possibly going to be the first character of a bigram, because we're always placing these end tokens at the end of the bigram. Similarly, we have entire columns of zeros here, because the s character will never possibly be the second element of a bigram, because we always start with s and we end with e, and we only have the words in between.

So we have an entire column of zeros, an entire row of zeros. And in this little two by two matrix here as well, the only one that can possibly happen is if s directly follows e. That can be non-zero if we have a word that has no letters. So in that case, there's no letters in the word.

It's an empty word, and we just have s follows e. But the other ones are just not possible. And so we're basically wasting space. And not only that, but the s and the e are getting very crowded here. I was using these brackets because there's convention in natural language processing to use these kinds of brackets to denote special tokens, but we're going to use something else.

So let's fix all this and make it prettier. We're not actually going to have two special tokens. We're only going to have one special token. So we're going to have n by n array of 27 by set 27 instead. Instead of having two, we will just have one, and I will call it a dot.

Let me swing this over here. Now one more thing that I would like to do is I would actually like to make this special character have position zero. And I would like to offset all the other letters off. I find that a little bit more pleasing. So we need a plus one here so that the first character, which is a, will start at one.

So s to i will now be a starts at one and dot is zero. And i to s, of course, we're not changing this because i to s just creates a reverse mapping and this will work fine. So one is a, two is b, zero is dot. So we've reversed that.

Here we have a dot and a dot. This should work fine. Make sure I start at zeros. Count. And then here we don't go up to 28, we go up to 27. And this should just work. Okay. So we see that dot dot never happened. It's at zero because we don't have empty words.

And this row here now is just very simply the counts for all the first letters. So j starts a word, h starts a word, i starts a word, et cetera. And then these are all the ending characters. And in between we have the structure of what characters follow each other.

So this is the counts array of our entire dataset. So this array actually has all the information necessary for us to actually sample from this bigram character level language model. And roughly speaking, what we're going to do is we're just going to start following these probabilities and these counts, and we're going to start sampling from the model.

So in the beginning, of course, we start with the dot, the start token dot. So to sample the first character of a name, we're looking at this row here. So we see that we have the counts and those counts externally are telling us how often any one of these characters is to start a word.

So if we take this n and we grab the first row, we can do that by using just indexing as zero and then using this notation, colon, for the rest of that row. So n zero colon is indexing into the zeroth row and then it's grabbing all the columns.

And so this will give us a one dimensional array of the first row. So 0 4 4 10, you know, 0 4 4 10, 1 3 0 6, 1 5 4 2, etc. It's just the first row. The shape of this is 27. Just the row of 27. And the other way that you can do this also is you just you don't actually give this, you just grab the zeroth row like this.

This is equivalent. Now these are the counts and now what we'd like to do is we'd like to basically sample from this. Since these are the raw counts, we actually have to convert this to probabilities. So we create a probability vector. So we'll take n of zero and we'll actually convert this to float first.

OK, so these integers are converted to float, floating point numbers. And the reason we're creating floats is because we're about to normalize these counts. So to create a probability distribution here, we want to divide. We basically want to do p, p, p divide p dot sum. And now we get a vector of smaller numbers.

And these are now probabilities. So of course, because we divided by the sum, the sum of p now is one. So this is a nice proper probability distribution. It sums to one. And this is giving us the probability for any single character to be the first character of a word.

So now we can try to sample from this distribution. To sample from these distributions, we're going to use tors dot multinomial, which I've pulled up here. So tors dot multinomial returns samples from the multinomial probability distribution, which is a complicated way of saying you give me probabilities and I will give you integers, which are sampled according to the probability distribution.

So this is the signature of the method. And to make everything deterministic, we're going to use a generator object in PyTorch. So this makes everything deterministic. So when you run this on your computer, you're going to get the exact same results that I'm getting here on my computer. So let me show you how this works.

Here's the deterministic way of creating a torch generator object, seeding it with some number that we can agree on, so that seeds a generator, gives us an object g. And then we can pass that g to a function that creates here random numbers, tors dot rand creates random numbers, three of them.

And it's using this generator object as a source of randomness. So without normalizing it, I can just print. This is sort of like numbers between zero and one that are random according to this thing. And whenever I run it again, I'm always going to get the same result because I keep using the same generator object, which I'm seeding here.

And then if I divide to normalize, I'm going to get a nice probability distribution of just three elements. And then we can use tors dot multinomial to draw samples from it. So this is what that looks like. Tors dot multinomial will take the torch tensor of probability distributions. Then we can ask for a number of samples, let's say 20.

Replacement equals true means that when we draw an element, we can draw it and then we can put it back into the list of eligible indices to draw again. And we have to specify replacement as true because by default, for some reason, it's false. You know, it's just something to be careful with.

And the generator is passed in here. So we're going to always get deterministic results, the same results. So if I run these two, we're going to get a bunch of samples from this distribution. Now you'll notice here that the probability for the first element in this tensor is 60%.

So in these 20 samples, we'd expect 60% of them to be zero. We'd expect 30% of them to be one. And because the element index two has only 10% probability, very few of these samples should be two. And indeed, we only have a small number of twos. We can sample as many as we like.

And the more we sample, the more these numbers should roughly have the distribution here. So we should have lots of zeros, half as many ones, and we should have three times as few ones, and three times as few twos. So you see that we have very few twos, we have some ones, and most of them are zero.

So that's what torsion multinomial is doing. For us here, we are interested in this row, we've created this p here, and now we can sample from it. So if we use the same seed, and then we sample from this distribution, let's just get one sample, then we see that the sample is, say, 13.

So this will be the index. You see how it's a tensor that wraps 13? We again have to use .item to pop out that integer. And now index would be just the number 13. And of course, we can map the I2S of IX to figure out exactly which character we're sampling here.

We're sampling M. So we're saying that the first character is M in our generation. And just looking at the row here, M was drawn, and we can see that M actually starts a large number of words. M started 2,500 words out of 32,000 words. So almost a bit less than 10% of the words start with M.

So this is actually a fairly likely character to draw. So that would be the first character of our word. And now we can continue to sample more characters, because now we know that M is already sampled. So now to draw the next character, we will come back here, and we will look for the row that starts with M.

So you see M, and we have a row here. So we see that M. is 516, MA is this many, MB is this many, etc. So these are the counts for the next row, and that's the next character that we are going to now generate. So I think we are ready to actually just write out the loop, because I think you're starting to get a sense of how this is going to go.

We always begin at index 0, because that's the start token. And then while true, we're going to grab the row corresponding to index that we're currently on. So that's N array at ix, converted to float is our P. Then we normalize this P to sum to 1. I accidentally ran the infinite loop.

We normalize P to sum to 1. Then we need this generator object. We're going to initialize up here, and we're going to draw a single sample from this distribution. And then this is going to tell us what index is going to be next. If the index sampled is 0, then that's now the end token.

So we will break. Otherwise, we are going to print s2i of ix. i2s of ix. And that's pretty much it. We're just — this should work. Okay, more. So that's the name that we've sampled. We started with M, the next step was O, then R, and then dot. And this dot, we printed here as well.

So let's now do this a few times. So let's actually create an out list here. And instead of printing, we're going to append. So out.append this character. And then here, let's just print it at the end. So let's just join up all the outs, and we're just going to print more.

Now, we're always getting the same result because of the generator. So if we want to do this a few times, we can go for i in range 10. We can sample 10 names, and we can just do that 10 times. And these are the names that we're getting out.

Let's do 20. I'll be honest with you, this doesn't look right. So I started a few minutes to convince myself that it actually is right. The reason these samples are so terrible is that bigram language model is actually just really terrible. We can generate a few more here. And you can see that they're kind of like — they're name-like a little bit, like Ianu, O'Reilly, et cetera, but they're just totally messed up.

And I mean, the reason that this is so bad — like, we're generating H as a name, but you have to think through it from the model's eyes. It doesn't know that this H is the very first H. All it knows is that H was previously, and now how likely is H the last character?

Well, it's somewhat likely, and so it just makes it last character. It doesn't know that there were other things before it or there were not other things before it. And so that's why it's generating all these, like, nonsense names. Another way to do this is to convince yourself that this is actually doing something reasonable, even though it's so terrible, is these little p's here are 27, right?

Like 27. So how about if we did something like this? Instead of p having any structure whatsoever, how about if p was just torch.once(27). By default, this is a float 32, so this is fine. Divide 27. So what I'm doing here is this is the uniform distribution, which will make everything equally likely.

And we can sample from that. So let's see if that does any better. So this is what you have from a model that is completely untrained, where everything is equally likely. So it's obviously garbage. And then if we have a trained model, which is trained on just bigrams, this is what we get.

So you can see that it is more name-like. It is actually working. It's just bigram is so terrible, and we have to do better. Now next, I would like to fix an inefficiency that we have going on here, because what we're doing here is we're always fetching a row of n from the counts matrix up ahead.

And then we're always doing the same things. We're converting to float and we're dividing. And we're doing this every single iteration of this loop. And we just keep renormalizing these rows over and over again. And it's extremely inefficient and wasteful. So what I'd like to do is I'd like to actually prepare a matrix, capital P, that will just have the probabilities in it.

So in other words, it's going to be the same as the capital N matrix here of counts. But every single row will have the row of probabilities that is normalized to 1, indicating the probability distribution for the next character given the character before it, as defined by which row we're in.

So basically what we'd like to do is we'd like to just do it up front here. And then we would like to just use that row here. So here, we would like to just do P equals P of IX instead. The other reason I want to do this is not just for efficiency, but also I would like us to practice these n-dimensional tensors.

And I'd like us to practice their manipulation, and especially something that's called broadcasting, that we'll go into in a second. We're actually going to have to become very good at these tensor manipulations, because if we're going to build out all the way to transformers, we're going to be doing some pretty complicated array operations for efficiency.

And we need to really understand that and be very good at it. So intuitively, what we want to do is we first want to grab the floating point copy of N. And I'm mimicking the line here, basically. And then we want to divide all the rows so that they sum to 1.

So we'd like to do something like this, P divide P dot sum. But now we have to be careful, because P dot sum actually produces a sum--sorry, P equals N dot float copy. P dot sum produces a--sums up all of the counts of this entire matrix N and gives us a single number of just the summation of everything.

So that's not the way we want to divide. We want to simultaneously and in parallel divide all the rows by their respective sums. So what we have to do now is we have to go into documentation for torch dot sum. And we can scroll down here to a definition that is relevant to us, which is where we don't only provide an input array that we want to sum, but we also provide the dimension along which we want to sum.

And in particular, we want to sum up over rows. Now one more argument that I want you to pay attention to here is the keep_dim is false. If keep_dim is true, then the output tensor is of the same size as input, except of course the dimension along which you summed, which will become just 1.

But if you pass in keep_dim as false, then this dimension is squeezed out. And so torch dot sum not only does the sum and collapses dimension to be of size 1, but in addition, it does what's called a squeeze, where it squeezes out that dimension. So basically what we want here is we instead want to do p dot sum of some axis.

And in particular, notice that p dot shape is 27 by 27. So when we sum up across axis 0, then we would be taking the 0th dimension and we would be summing across it. So when keep_dim is true, then this thing will not only give us the counts along the columns, but notice that basically the shape of this is 1 by 27.

We just get a row vector. And the reason we get a row vector here, again, is because we pass in 0th dimension. So this 0th dimension becomes 1. And we've done a sum, and we get a row. And so basically we've done the sum this way, vertically, and arrived at just a single 1 by 27 vector of counts.

What happens when you take out keep_dim is that we just get 27. So it squeezes out that dimension, and we just get a one-dimensional vector of size 27. Now we don't actually want 1 by 27 row vector, because that gives us the counts, or the sums, across the columns.

We actually want to sum the other way, along dimension 1. And you'll see that the shape of this is 27 by 1. So it's a column vector. It's a 27 by 1 vector of counts. And that's because what's happened here is that we're going horizontally, and this 27 by 27 matrix becomes a 27 by 1 array.

And you'll notice, by the way, that the actual numbers of these counts are identical. And that's because this special array of counts here comes from bigram statistics. And actually, it just so happens by chance, or because of the way this array is constructed, that the sums along the columns, or along the rows, horizontally or vertically, is identical.

But actually what we want to do in this case is we want to sum across the rows, horizontally. So what we want here is p.sum of 1 with keep_dim true, 27 by 1 column vector. And now what we want to do is we want to divide by that. Now we have to be careful here again.

Is it possible to take what's a p.shape you see here, 27 by 27, is it possible to take a 27 by 27 array and divide it by what is a 27 by 1 array? Is that an operation that you can do? And whether or not you can perform this operation is determined by what's called broadcasting rules.

So if you just search broadcasting semantics in Torch, you'll notice that there's a special definition for what's called broadcasting, that for whether or not these two arrays can be combined in a binary operation like division. So the first condition is each tensor has at least one dimension, which is the case for us.

And then when iterating over the dimension sizes, starting at the trailing dimension, the dimension sizes must either be equal, one of them is one, or one of them does not exist. So let's do that. We need to align the two arrays and their shapes, which is very easy because both of these shapes have two elements, so they're aligned.

Then we iterate over from the right and going to the left. Each dimension must be either equal, one of them is a one, or one of them does not exist. So in this case, they're not equal, but one of them is a one. So this is fine. And then this dimension, they're both equal.

So this is fine. So all the dimensions are fine, and therefore this operation is broadcastable. So that means that this operation is allowed. And what is it that these arrays do when you divide 27 by 27 by 27 by one? What it does is that it takes this dimension one, and it stretches it out, it copies it to match 27 here in this case.

So in our case, it takes this column vector, which is 27 by one, and it copies it 27 times to make these both be 27 by 27 internally. You can think of it that way. And so it copies those counts, and then it does an element-wise division, which is what we want because these counts, we want to divide by them on every single one of these columns in this matrix.

So this actually, we expect, will normalize every single row. And we can check that this is true by taking the first row, for example, and taking its sum. We expect this to be one because it's now normalized. And then we expect this now because if we actually correctly normalize all the rows, we expect to get the exact same result here.

So let's run this. It's the exact same result. So this is correct. So now I would like to scare you a little bit. You actually have to like, I basically encourage you very strongly to read through broadcasting semantics and I encourage you to treat this with respect. And it's not something to play fast and loose with.

It's something to really respect, really understand, and look up maybe some tutorials for broadcasting and practice it and be careful with it because you can very quickly run into bugs. Let me show you what I mean. You see how here we have p.sum of one, keep them as true.

The shape of this is 27 by one. Let me take out this line just so we have the n and then we can see the counts. We can see that this is all the counts across all the rows and it's 27 by one column vector, right? Now suppose that I tried to do the following, but I erase keep them as true here.

What does that do? If keep them is not true, it's false. And remember, according to documentation, it gets rid of this dimension one, it squeezes it out. So basically we just get all the same counts, the same result, except the shape of it is not 27 by one, it's just 27, the one that disappears.

But all the counts are the same. So you'd think that this divide that would work. First of all, can we even write this and is it even expected to run? Is it broadcastable? Let's determine if this result is broadcastable. P dot sum at one is shape is 27. This is 27 by 27.

So 27 by 27 broadcasting into 27. So now rules of broadcasting number one, align all the dimensions on the right, done. Now iteration over all the dimensions starting from the right, going to the left. All the dimensions must either be equal. One of them must be one or one of them does not exist.

So here they are all equal. Here the dimension does not exist. So internally what broadcasting will do is it will create a one here. And then we see that one of them is a one and this will get copied and this will run, this will broadcast. Okay, so you'd expect this to work because we are, this broadcasts and this, we can divide this.

Now if I run this, you'd expect it to work, but it doesn't. You actually get garbage. You get a wrong result because this is actually a bug. This keep dim equals true makes it work. This is a bug. In both cases, we are doing the correct counts. We are summing up across the rows, but keep dim is saving us and making it work.

So in this case, I'd like to encourage you to potentially like pause this video at this point and try to think about why this is buggy and why the keep dim was necessary here. Okay, so the reason to do for this is I'm trying to hint it here when I was sort of giving you a bit of a hint on how this works.

This 27 vector internally inside the broadcasting, this becomes a one by 27 and one by 27 is a row vector, right? And now we are dividing 27 by 27 by one by 27 and torch will replicate this dimension. So basically it will take, it will take this row vector and it will copy it vertically now 27 times.

So the 27 by 27 lines exactly and element wise divides. And so basically what's happening here is we're actually normalizing the columns instead of normalizing the rows. So you can check that what's happening here is that P at zero, which is the first row of P dot sum is not one, it's seven.

It is the first column as an example that sums to one. So to summarize, where does the issue come from? The issue comes from the silent adding of a dimension here, because in broadcasting rules, you align on the right and go from right to left. And if dimension doesn't exist, you create it.

So that's where the problem happens. We still did the counts correctly. We did the counts across the rows and we got the counts on the right here as a column vector. But because the keyptons was true, this dimension was discarded and now we just have a vector of 27.

And because of broadcasting the way it works, this vector of 27 suddenly becomes a row vector. And then this row vector gets replicated vertically. And that every single point we are dividing by the count in the opposite direction. So this thing just doesn't work. This needs to be keyptons equals true in this case.

So then we have that P at zero is normalized. And conversely, the first column you'd expect to potentially not be normalized. And this is what makes it work. So pretty subtle. And hopefully this helps to scare you, that you should have respect for broadcasting, be careful, check your work, and understand how it works under the hood and make sure that it's broadcasting in the direction that you like.

Otherwise, you're going to introduce very subtle bugs, very hard to find bugs. And just be careful. One more note on efficiency. We don't want to be doing this here because this creates a completely new tensor that we store into P. We prefer to use in-place operations if possible. So this would be an in-place operation, has the potential to be faster.

It doesn't create new memory under the hood. And then let's erase this. We don't need it. And let's also just do fewer, just so I'm not wasting space. Okay, so we're actually in a pretty good spot now. We trained a bigram language model, and we trained it really just by counting how frequently any pairing occurs and then normalizing so that we get a nice property distribution.

So really these elements of this array P are really the parameters of our bigram language model, giving us and summarizing the statistics of these bigrams. So we trained the model, and then we know how to sample from the model. We just iteratively sample the next character and feed it in each time and get the next character.

Now, what I'd like to do is I'd like to somehow evaluate the quality of this model. We'd like to somehow summarize the quality of this model into a single number. How good is it at predicting the training set? And as an example, so in the training set, we can evaluate now the training loss.

And this training loss is telling us about sort of the quality of this model in a single number, just like we saw in micrograd. So let's try to think through the quality of the model and how we would evaluate it. Basically, what we're going to do is we're going to copy paste this code that we previously used for counting.

And let me just print the bigrams first. We're going to use f strings, and I'm going to print character one followed by character two. These are the bigrams. And then I don't want to do it for all the words, just do the first three words. So here we have Emma, Olivia, and Ava bigrams.

Now what we'd like to do is we'd like to basically look at the probability that the model assigns to every one of these bigrams. So in other words, we can look at the probability, which is summarized in the matrix P of IX1, and then we can print it here as probability.

And because these probabilities are way too large, let me percent or colon .4f to truncate it a bit. So what do we have here? We're looking at the probabilities that the model assigns to every one of these bigrams in the dataset. And so we can see some of them are 4%, 3%, et cetera.

Just to have a measuring stick in our mind, by the way, we have 27 possible characters or tokens. And if everything was equally likely, then you'd expect all these probabilities to be 4% roughly. So anything above 4% means that we've learned something useful from these bigram statistics. And you see that roughly some of these are 4%, but some of them are as high as 40%, 35%, and so on.

So you see that the model actually assigned a pretty high probability to whatever's in the training set. And so that's a good thing. Especially if you have a very good model, you'd expect that these probabilities should be near 1, because that means that your model is correctly predicting what's going to come next, especially on the training set where you trained your model.

So now we'd like to think about how can we summarize these probabilities into a single number that measures the quality of this model? Now when you look at the literature into maximum likelihood estimation and statistical modeling and so on, you'll see that what's typically used here is something called the likelihood.

And the likelihood is the product of all of these probabilities. And so the product of all of these probabilities is the likelihood. And it's really telling us about the probability of the entire data set assigned by the model that we've trained. And that is a measure of quality. So the product of these should be as high as possible when you are training the model and when you have a good model.

Your product of these probabilities should be very high. Now because the product of these probabilities is an unwieldy thing to work with, you can see that all of them are between 0 and 1. So your product of these probabilities will be a very tiny number. So for convenience, what people work with usually is not the likelihood, but they work with what's called the log likelihood.

So the product of these is the likelihood. To get the log likelihood, we just have to take the log of the probability. And so the log of the probability here, I have the log of x from 0 to 1. The log is a, you see here, monotonic transformation of the probability, where if you pass in 1, you get 0.

So probability 1 gets you log probability of 0. And then as you go lower and lower probability, the log will grow more and more negative until all the way to negative infinity at 0. So here we have a log prob, which is really just a torsion log of probability.

Let's print it out to get a sense of what that looks like. Log prob also 0.4f. So as you can see, when we plug in numbers that are very close, some of our higher numbers, we get closer and closer to 0. And then if we plug in very bad probabilities, we get more and more negative number.

That's bad. So and the reason we work with this is for large extent convenience, right? Because we have mathematically that if you have some product, a times b times c, of all these probabilities, right? The likelihood is the product of all these probabilities. And the log of these is just log of a plus log of b plus log of c.

If you remember your logs from your high school or undergrad and so on. So we have that basically, the likelihood is the product of probabilities. The log likelihood is just the sum of the logs of the individual probabilities. So log likelihood starts at 0. And then log likelihood here, we can just accumulate simply.

And then the end, we can print this. Print the log likelihood. F strings. Maybe you're familiar with this. So log likelihood is negative 38. Okay. Now we actually want -- so how high can log likelihood get? It can go to 0. So when all the probabilities are 1, log likelihood will be 0.

And then when all the probabilities are lower, this will grow more and more negative. Now we don't actually like this because what we'd like is a loss function. And a loss function has the semantics that low is good. Because we're trying to minimize the loss. So we actually need to invert this.

And that's what gives us something called the negative log likelihood. Negative log likelihood is just negative of the log likelihood. These are F strings, by the way, if you'd like to look this up. Negative log likelihood equals. So negative log likelihood now is just negative of it. And so the negative log likelihood is a very nice loss function.

Because the lowest it can get is 0. And the higher it is, the worse off the predictions are that you're making. And then one more modification to this that sometimes people do is that for convenience, they actually like to normalize by -- they like to make it an average instead of a sum.

And so here, let's just keep some counts as well. So n plus equals 1 starts at 0. And then here, we can have sort of like a normalized log likelihood. If we just normalize it by the count, then we will sort of get the average log likelihood. So this would be usually our loss function here.

This is what we would use. So our loss function for the training set assigned by the model is 2.4. That's the quality of this model. And the lower it is, the better off we are. And the higher it is, the worse off we are. And the job of our training is to find the parameters that minimize the negative log likelihood loss.

And that would be like a high-quality model. Okay, so to summarize, I actually wrote it out here. So our goal is to maximize likelihood, which is the product of all the probabilities assigned by the model. And we want to maximize this likelihood with respect to the model parameters. And in our case, the model parameters here are defined in the table.

These numbers, the probabilities, are the model parameters sort of in our bigram language model so far. But you have to keep in mind that here we are storing everything in a table format, the probabilities. But what's coming up as a brief preview is that these numbers will not be kept explicitly, but these numbers will be calculated by a neural network.

So that's coming up. And we want to change and tune the parameters of these neural networks. We want to change these parameters to maximize the likelihood, the product of the probabilities. Now maximizing the likelihood is equivalent to maximizing the log likelihood because log is a monotonic function. Here's the graph of log.

And basically all it is doing is it's just scaling your, you can look at it as just a scaling of the loss function. And so the optimization problem here and here are actually equivalent because this is just scaling. You can look at it that way. And so these are two identical optimization problems.

Maximizing the log likelihood is equivalent to minimizing the negative log likelihood. And then in practice, people actually minimize the average negative log likelihood to get numbers like 2.4. And then this summarizes the quality of your model. And we'd like to minimize it and make it as small as possible.

And the lowest it can get is zero. And the lower it is, the better off your model is because it's assigning high probabilities to your data. Now let's estimate the probability over the entire training set just to make sure that we get something around 2.4. Let's run this over the entire, oops.

Let's take out the print statement as well. Okay, 2.45 over the entire training set. Now what I'd like to show you is that you can actually evaluate the probability for any word that you want. So for example, if we just test a single word, Andre, and bring back the print statement, then you see that Andre is actually kind of like an unlikely word.

Like on average, we take three log probability to represent it. And roughly that's because EJ apparently is very uncommon as an example. Now think through this. When I take Andre and I append Q and I test the probability of it, Andre Q, we actually get infinity. And that's because JQ has a 0% probability according to our model.

So the log likelihood, so the log of zero will be negative infinity. We get infinite loss. So this is kind of undesirable, right? Because we plugged in a string that could be like a somewhat reasonable name. But basically what this is saying is that this model is exactly 0% likely to predict this name.

And our loss is infinity on this example. And really what the reason for that is that J is followed by Q zero times. Where's Q? JQ is zero. And so JQ is 0% likely. So it's actually kind of gross and people don't like this too much. To fix this, there's a very simple fix that people like to do to sort of like smooth out your model a little bit.

And it's called model smoothing. And roughly what's happening is that we will add some fake counts. So imagine adding a count of one to everything. So we add a count of one like this, and then we recalculate the probabilities. And that's model smoothing. And you can add as much as you like.

You can add five and that will give you a smoother model. And the more you add here, the more uniform model you're going to have. And the less you add, the more peaked model you're going to have, of course. So one is like a pretty decent count to add.

And that will ensure that there will be no zeros in our probability matrix P. And so this will of course change the generations a little bit. In this case, it didn't, but in principle it could. But what that's going to do now is that nothing will be infinity unlikely.

So now our model will predict some other probability. And we see that JQ now has a very small probability. So the model still finds it very surprising that this was a word or a bigram, but we don't get negative infinity. So it's kind of like a nice fix that people like to apply sometimes, and it's called model smoothing.

Okay, so we've now trained a respectable bigram character level language model. And we saw that we both sort of trained the model by looking at the counts of all the bigrams and normalizing the rows to get probability distributions. We saw that we can also then use those parameters of this model to perform sampling of new words.

So we sample new names according to those distributions. And we also saw that we can evaluate the quality of this model. And the quality of this model is summarized in a single number, which is the negative log likelihood. And the lower this number is, the better the model is, because it is giving high probabilities to the actual next characters in all the bigrams in our training set.

So that's all well and good, but we've arrived at this model explicitly by doing something that felt sensible. We were just performing counts, and then we were normalizing those counts. Now what I would like to do is I would like to take an alternative approach. We will end up in a very, very similar position, but the approach will look very different, because I would like to cast the problem of bigram character level language modeling into the neural network framework.

And in the neural network framework, we're going to approach things slightly differently, but again, end up in a very similar spot. I'll go into that later. Now our neural network is going to be still a bigram character level language model. So it receives a single character as an input.

Then there's neural network with some weights or some parameters w. And it's going to output the probability distribution over the next character in a sequence. And it's going to make guesses as to what is likely to follow this character that was input to the model. And then in addition to that, we're going to be able to evaluate any setting of the parameters of the neural net, because we have the loss function, the negative log likelihood.

So we're going to take a look at this probability distributions, and we're going to use the labels, which are basically just the identity of the next character in that bigram, the second character. So knowing what second character actually comes next in the bigram allows us to then look at how high of a probability the model assigns to that character.

And then we of course want the probability to be very high. And that is another way of saying that the loss is low. So we're going to use gradient based optimization then to tune the parameters of this network, because we have the loss function and we're going to minimize it.

So we're going to tune the weights so that the neural net is correctly predicting the probabilities for the next character. So let's get started. The first thing I want to do is I want to compile the training set of this neural network. So create the training set of all the bigrams.

And here I'm going to copy/paste this code, because this code iterates over all the bigrams. So here we start with the words, we iterate over all the bigrams, and previously, as you recall, we did the counts. But now we're not going to do counts. We're just creating a training set.

Now this training set will be made up of two lists. We have the inputs and the targets, the labels. And these bigrams will denote x, y. Those are the characters, right? And so we're given the first character of the bigram, and then we're trying to predict the next one.

Both of these are going to be integers. So here we'll take xs.append is just x1, ys.append is just x2. And then here, we actually don't want lists of integers. We will create tensors out of these. So xs is tors.tensor of xs, and ys is tors.tensor of ys. And then we don't actually want to take all the words just yet, because I want everything to be manageable.

So let's just do the first word, which is Emma. And then it's clear what these xs and ys would be. Here let me print character1, character2, just so you see what's going on here. So the bigrams of these characters is .emmmma. So this single word, as I mentioned, has one, two, three, four, five examples for our neural network.

There are five separate examples in Emma. And those examples are summarized here. When the input to the neural network is integer 0, the desired label is integer 5, which corresponds to e. When the input to the neural network is 5, we want its weights to be arranged so that 13 gets a very high probability.

When 13 is put in, we want 13 to have a high probability. When 13 is put in, we also want 1 to have a high probability. When 1 is input, we want 0 to have a very high probability. So there are five separate input examples to a neural net in this dataset.

I wanted to add a tangent of a note of caution to be careful with a lot of the APIs of some of these frameworks. You saw me silently use torch.tensor with a lowercase t, and the output looked right. But you should be aware that there's actually two ways of constructing a tensor.

There's a torch.lowercase tensor, and there's also a torch.capital tensor class, which you can also construct. So you can actually call both. You can also do torch.capital tensor, and you get an X as in Y as well. So that's not confusing at all. There are threads on what is the difference between these two.

And unfortunately, the docs are just not clear on the difference. And when you look at the docs of lowercase tensor, construct tensor with no autograd history by copying data. It's just like it doesn't make sense. So the actual difference, as far as I can tell, is explained eventually in this random thread that you can Google.

And really it comes down to, I believe, that-- where is this? Torch.tensor infers the D type, the data type, automatically, while torch.tensor just returns a float tensor. I would recommend to stick to torch.lowercase tensor. So indeed, we see that when I construct this with a capital T, the data type here of X's is float32.

But torch.lowercase tensor, you see how it's now X.Dtype is now integer. So it's advised that you use lowercase t, and you can read more about it if you like in some of these threads. But basically, I'm pointing out some of these things because I want to caution you, and I want you to get used to reading a lot of documentation and reading through a lot of Q&As and threads like this.

And some of this stuff is unfortunately not easy and not very well documented, and you have to be careful out there. What we want here is integers, because that's what makes sense. And so lowercase tensor is what we are using. Okay, now we want to think through how we're going to feed in these examples into a neural network.

Now, it's not quite as straightforward as plugging it in, because these examples right now are integers. So there's like a 0, 5, or 13. It gives us the index of the character, and you can't just plug an integer index into a neural net. These neural nets are sort of made up of these neurons, and these neurons have weights.

And as you saw in micrograd, these weights act multiplicatively on the inputs, wx + b, there's 10 h's and so on. And so it doesn't really make sense to make an input neuron take on integer values that you feed in and then multiply on with weights. So instead, a common way of encoding integers is what's called one-hot encoding.

In one-hot encoding, we take an integer like 13, and we create a vector that is all zeros, except for the 13th dimension, which we turn to a 1. And then that vector can feed into a neural net. Now conveniently, PyTorch actually has something called the one-hot function inside Torch and in Functional.

It takes a tensor made up of integers. Long is an integer. And it also takes a number of classes, which is how large you want your vector to be. So here, let's import Torch. and in .Functional.sf. This is a common way of importing it. And then let's do f.one-hot, and we feed in the integers that we want to encode.

So we can actually feed in the entire array of x's. And we can tell it that numClass is 27. So it doesn't have to try to guess it. It may have guessed that it's only 13 and would give us an incorrect result. So this is the one-hot. Let's call this xInc for xEncoded.

And then we see that xEncoded.shape is 5 by 27. And we can also visualize it, plt.imshow(xInc) to make it a little bit more clear, because this is a little messy. So we see that we've encoded all the five examples into vectors. We have five examples, so we have five rows.

And each row here is now an example into a neural net. And we see that the appropriate bit is turned on as a 1, and everything else is 0. So here, for example, the 0th bit is turned on, the 5th bit is turned on, the 13th bits are turned on for both of these examples, and the first bit here is turned on.

So that's how we can encode integers into vectors. And then these vectors can feed into neural nets. One more issue to be careful with here, by the way, is let's look at the data type of xEncoding. We always want to be careful with data types. What would you expect xEncoding's data type to be?

When we're plugging numbers into neural nets, we don't want them to be integers. We want them to be floating-point numbers that can take on various values. But the Dtype here is actually a 64-bit integer. And the reason for that, I suspect, is that one hot received a 64-bit integer here, and it returned the same data type.

And when you look at the signature of one hot, it doesn't even take a Dtype, a desired data type, off the output tensor. And so we can't, in a lot of functions in Torch, we'd be able to do something like Dtype equals Torch.float32, which is what we want. But one hot does not support that.

So instead, we're going to want to cast this to float like this, so that these, everything is the same, everything looks the same, but the Dtype is float32. And floats can feed into neural nets. So now let's construct our first neuron. This neuron will look at these input vectors.

And as you remember from micrograd, these neurons basically perform a very simple function, wx plus b, where wx is a dot product. So we can achieve the same thing here. Let's first define the weights of this neuron, basically. What are the initial weights at initialization for this neuron? Let's initialize them with Torch.random.

Torch.random fills a tensor with random numbers drawn from a normal distribution. And a normal distribution has a probability density function like this. And so most of the numbers drawn from this distribution will be around zero, but some of them will be as high as almost three and so on.

And very few numbers will be above three in magnitude. So we need to take a size as an input here. And I'm going to use size as to be 27 by 1. So 27 by 1. And then let's visualize w. So w is a column vector of 27 numbers.

And these weights are then multiplied by the inputs. So now to perform this multiplication, we can take x encoding and we can multiply it with w. This is a matrix multiplication operator in PyTorch. And the output of this operation is 5 by 1. The reason it's 5 by 5 is the following.

We took x encoding, which is 5 by 27, and we multiplied it by 27 by 1. And in matrix multiplication, you see that the output will become 5 by 1 because these 27 will multiply and add. So basically what we're seeing here out of this operation is we are seeing the five activations of this neuron on these five inputs.

And we've evaluated all of them in parallel. We didn't feed in just a single input to the single neuron. We fed in simultaneously all the five inputs into the same neuron. And in parallel, PyTorch has evaluated the wx plus b. But here it's just wx. There's no bias. It has evaluated w times x for all of them independently.

Now instead of a single neuron, though, I would like to have 27 neurons. And I'll show you in a second why I want 27 neurons. So instead of having just a 1 here, which is indicating this presence of one single neuron, we can use 27. And then when w is 27 by 27, this will in parallel evaluate all the 27 neurons on all the five inputs, giving us a much better, much, much bigger result.

So now what we've done is 5 by 27 multiplied 27 by 27. And the output of this is now 5 by 27. So we can see that the shape of this is 5 by 27. So what is every element here telling us? It's telling us for every one of 27 neurons that we created, what is the firing rate of those neurons on every one of those five examples?

So the element, for example, 3, 13 is giving us the firing rate of the 13th neuron looking at the third input. And the way this was achieved is by a dot product between the third input and the 13th column of this w matrix here. So using matrix multiplication, we can very efficiently evaluate the dot product between lots of input examples in a batch and lots of neurons where all of those neurons have weights in the columns of those w's.

And in matrix multiplication, we're just doing those dot products in parallel. Just to show you that this is the case, we can take x_inc and we can take the third row. And we can take the w and take its 13th column. And then we can do x_inc at 3, element-wise multiply with w at 13, and sum that up.

That's wx plus b. Well, there's no plus b. It's just wx dot product. And that's this number. So you see that this is just being done efficiently by the matrix multiplication operation for all the input examples and for all the output neurons of this first layer. Okay, so we fed our 27 dimensional inputs into a first layer of a neural net that has 27 neurons.

So we have 27 inputs and now we have 27 neurons. These neurons perform w times x. They don't have a bias and they don't have a nonlinearity like tanh. We're going to leave them to be a linear layer. In addition to that, we're not going to have any other layers.

This is going to be it. It's just going to be the dumbest, smallest, simplest neural net, which is just a single linear layer. And now I'd like to explain what I want those 27 outputs to be. Intuitively, what we're trying to produce here for every single input example is we're trying to produce some kind of a probability distribution for the next character in a sequence.

And there's 27 of them. But we have to come up with precise semantics for exactly how we're going to interpret these 27 numbers that these neurons take on. Now intuitively, you see here that these numbers are negative and some of them are positive, etc. And that's because these are coming out of a neural net layer initialized with these normal distribution parameters.

But what we want is we want something like we had here. But each row here told us the counts, and then we normalized the counts to get probabilities. And we want something similar to come out of a neural net. But what we just have right now is just some negative and positive numbers.

Now we want those numbers to somehow represent the probabilities for the next character. But you see that probabilities, they have a special structure. They're positive numbers and they sum to one. And so that doesn't just come out of a neural net. And then they can't be counts because these counts are positive and counts are integers.

So counts are also not really a good thing to output from a neural net. So instead, what the neural net is going to output and how we are going to interpret the 27 numbers is that these 27 numbers are giving us log counts, basically. So instead of giving us counts directly, like in this table, they're giving us log counts.

And to get the counts, we're going to take the log counts and we're going to exponentiate them. Now exponentiation takes the following form. It takes numbers that are negative or they are positive. It takes the entire real line. And then if you plug in negative numbers, you're going to get e to the x, which is always below 1.

So you're getting numbers lower than 1. And if you plug in numbers greater than 0, you're getting numbers greater than 1, all the way growing to the infinity. And this here grows to 0. So basically, we're going to take these numbers here and instead of them being positive and negative and all over the place, we're going to interpret them as log counts.

And then we're going to element-wise exponentiate these numbers. Exponentiating them now gives us something like this. And you see that these numbers now, because they went through an exponent, all the negative numbers turned into numbers below 1, like 0.338. And all the positive numbers originally turned into even more positive numbers, sort of greater than 1.

So like for example, 7 is some positive number over here. That is greater than 0. But exponentiated outputs here basically give us something that we can use and interpret as the equivalent of counts originally. So you see these counts here, 112, 751, 1, etc. The neural net is kind of now predicting counts.

And these counts are positive numbers. They can never be below 0. So that makes sense. And they can now take on various values depending on the settings of W. So let me break this down. We're going to interpret these to be the log counts. In other words for this, that is often used, is so-called logits.

These are logits, log counts. And these will be sort of the counts. Logits exponentiate it. And this is equivalent to the n matrix, sort of the n array that we used previously. Remember this was the n? This is the array of counts. And each row here are the counts for the next character, sort of.

So those are the counts. And now the probabilities are just the counts normalized. And so I'm not going to find the same, but basically I'm not going to scroll all over the place. We've already done this. We want to counts.sum along the first dimension. And we want to keep dims as true.

We went over this. And this is how we normalize the rows of our counts matrix to get our probabilities. So now these are the probabilities. And these are the counts that we have currently. And now when I show the probabilities, you see that every row here, of course, will sum to one because they're normalized.

And the shape of this is 5 by 27. And so really what we've achieved is for every one of our five examples, we now have a row that came out of a neural net. And because of the transformations here, we made sure that this output of this neural net now are probabilities, or we can interpret to be probabilities.

So our WX here gave us logits. And then we interpret those to be log counts. We exponentiate to get something that looks like counts. And then we normalize those counts to get a probability distribution. And all of these are differentiable operations. So what we've done now is we are taking inputs.

We have differentiable operations that we can back propagate through. And we're getting out probability distributions. So for example, for the zeroth example that fed in, which was the zeroth example here, was a 1/2 vector of 0. And it basically corresponded to feeding in this example here. So we're feeding in a dot into a neural net.

And the way we fed the dot into a neural net is that we first got its index. Then we one-hot encoded it. Then it went into the neural net. And out came this distribution of probabilities. And its shape is 27. There's 27 numbers. And we're going to interpret this as the neural net's assignment for how likely every one of these characters, the 27 characters, are to come next.

And as we tune the weights w, we're going to be, of course, getting different probabilities out for any character that you input. And so now the question is just, can we optimize and find a good w such that the probabilities coming out are pretty good? And the way we measure pretty good is by the loss function.

OK, so I organized everything into a single summary so that hopefully it's a bit more clear. So it starts here. We have an input data set. We have some inputs to the neural net. And we have some labels for the correct next character in a sequence. These are integers.

Here I'm using torch generators now so that you see the same numbers that I see. And I'm generating 27 neurons' weights. And each neuron here receives 27 inputs. Then here we're going to plug in all the input examples, x's, into a neural net. So here, this is a forward pass.

First, we have to encode all of the inputs into one-hot representations. So we have 27 classes. We pass in these integers. And x_inc becomes an array that is 5 by 27. 0's, etc. a few ones. We then multiply this in the first layer of a neural net to get logits.

Exponentiate the logits to get fake counts, sort of. And normalize these counts to get probabilities. So these last two lines, by the way, here, are called the softmax, which I pulled up here. Softmax is a very often used layer in a neural net that takes these z's, which are logits, exponentiates them, and divides and normalizes.

It's a way of taking outputs of a neural net layer. And these outputs can be positive or negative. And it outputs probability distributions. It outputs something that always sums to 1 and are positive numbers, just like probabilities. So this is kind of like a normalization function, if you want to think of it that way.

And you can put it on top of any other linear layer inside a neural net. And it basically makes a neural net output probabilities. That's very often used. And we used it as well here. So this is the forward pass, and that's how we made a neural net output probability.

Now, you'll notice that all of these, this entire forward pass is made up of differentiable layers. Everything here, we can backpropagate through. And we saw some of the backpropagation in micrograd. This is just multiplication and addition. All that's happening here is just multiply and add. And we know how to backpropagate through them.

Exponentiation, we know how to backpropagate through. And then here, we are summing, and sum is easily backpropagatable as well, and division as well. So everything here is a differentiable operation, and we can backpropagate through. Now, we achieve these probabilities, which are 5 by 27. For every single example, we have a vector of probabilities that sum to 1.

And then here, I wrote a bunch of stuff to sort of like break down the examples. So we have five examples making up Emma, right? And there are five bigrams inside Emma. So bigram example 1 is that E is the beginning character, right after dot. And the indexes for these are 0 and 5.

So then we feed in a 0. That's the input to the neural net. We get probabilities from the neural net that are 27 numbers. And then the label is 5, because E actually comes after dot. So that's the label. And then we use this label 5 to index into the probability distribution here.

So this index 5 here is 0, 1, 2, 3, 4, 5. It's this number here, which is here. So that's basically the probability assigned by the neural net to the actual correct character. You see that the network currently thinks that this next character, that E following dot, is only 1% likely, which is, of course, not very good, right?