[AI in Action] Vibe-Kanban with OpenCode + Gemini Code + Claude Code (ft. Louis Knight-Webb)

Transcript

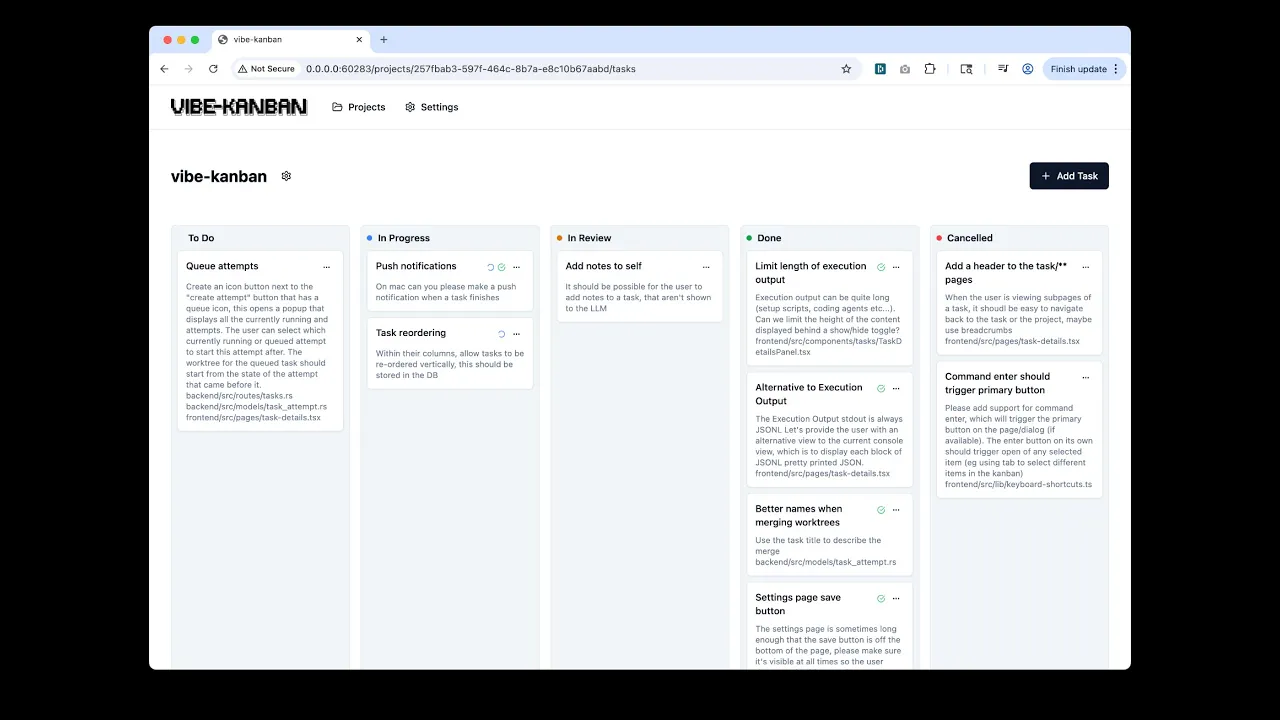

popping off. He's actively developing it, and, you know, we're friends, so I get to just invite him to come on and share. I figure you guys would love it too. Here's Louis.

Hey, Sean.

Hey, how you doing?

I'm good, I'm good. Nice to see you, man.

Nice to see you. This is the crew for AI in Action. I don't know how big it gets, but we record it, so it gets posted on YouTube and people can catch up later. This is basically the place where we discuss coding tools and things that are just making people more productive. We have a different club that is for discussing papers, but this is more for tools. We're already recording, so if you just want to kick it off, go ahead.

Awesome. So, this is a very new thing. It's only really existed for a couple of weeks internally, and then for about six days externally of my company. For context, I'm one of the co-founders of Bloop. We have been working on legacy code modernization for the last two years, predominantly helping very large companies translate things like COBOL to Java, but more recently a lot of .NET, a lot of Java 8 (for some reason people are still running that), et cetera. What we found is that a lot of our internal development recently has switched from coding agents (without having favorites, the Cursors, the Windsurfs of the world) to Claude Code, and I wouldn't be surprised if virtually everybody in this call had had the same change over the last few months.

Yeah.

And so what I found is that, and I don't know if there's a PG-13 filter on this, but I'm basically a cuckold to Claude. All I do is sit there and watch it do what I used to love doing, and I'm just helpless. I sit there, it takes one to two minutes to complete, and I often find myself getting on Twitter. It's actually quite demoralizing, to be honest, as a workflow, to just constantly go back and forth between Twitter and Claude Code. So I think
there are lots of things that we can be doing as developers during those one to two minutes that we're waiting, and essentially what I've come up with is Vibe Kanban. It's a really simple concept: it's basically a kanban board. You can add projects (projects are Git repos), and you can queue up different tasks in the kanban, just like this. What we're editing at the moment is actually Vibe Kanban itself. So what I will do is just make a very simple dummy ticket, "change the settings icon to some fruit" or something, and we can go ahead and hit create and start.

What just happened when I hit start is that, in the background, a Git worktree is being created specifically for this task. You can see a setup script is now running. These are defined on a project level; it's kind of like what you would do if you were setting up your GitHub CI, or you had some scripts in your Dockerfile or something like that. I've just got pnpm i and cargo build. Then what's going to happen in a minute (although it takes a minute, so I'll just skip to one of these that has already run) is that an executor will start, and this can be either Claude Code, Amp, or Gemini. I actually find myself mainly using Amp these days. I don't know how familiar everybody is with that, but it's Sourcegraph's new coding agent, which is pretty cool. Gemini I haven't been able to try yet because I just get 429'd every single time I try it, but I've heard decent things from people that have managed to get through.

Essentially, once a task is complete, what do you want to do? Well, you want to review the changes, so you get a kind of mini GitHub review screen. If you need to rebase it, you get a rebase button, and that'll just one-click rebase it. I'm going to add Claude Code for fixing messy rebases as well soon. And then when you're ready to merge, you can go ahead
and just hit the merge button. So basically it just takes everything you were doing in five or six tools, or five or six different terminal windows, and brings it into one. The beauty of it is it gives you that visibility, so that while this is running I can be thinking, okay, I can test the last thing that came in, or I could be planning the next thing that I'm going to do, and I'm not just sitting there helplessly watching Claude.

I'm trying to think if there are some other nice little things that are worth mentioning. Okay, so dev servers: that was another thing that I found myself doing quite a lot of. You can hit start and that'll run a script that's configured in your project. In this case it's just npm run dev, and we can see the logs. "Address already in use": that means I'm running a dev server, probably just somewhere locally outside of Vibe Kanban at the moment. If it was on Vibe Kanban, it would have automatically killed the other one. And that is basically it.

I'm very interested in hearing what people are doing in the two minutes that they're waiting for Claude. I would maybe preempt some of the frequent things that people say, like, why aren't you using Docker for this? I did try initially, and I'm on a decent laptop, like an M3 Mac, but after two or three concurrent builds in Docker going on at the same time, I just found my laptop was unusable. So going for a more lightweight, Git worktree based approach just meant that you're able to use your browser and it's not super choppy at the same time as building a bunch of stuff in the background.

Another thing: today's release (it's gone out, but I'm still in the middle of testing it) adds MCP support. What that means is that you can now do deep research on your repository and then say, at the end of that deep research, add all of these as tasks to my Vibe Kanban board. And
then, like, the 10D chess version of that is you can add the Vibe Kanban MCP server to Claude Code running in Vibe Kanban, which I'm just trying out now. This is the first time I've ever seen this result, so if it doesn't go to plan, I'm sorry. I just asked it to list my projects and I think... okay, I'm not totally sure this has worked. Okay, roll back the last 30 seconds and erase your memories. All right, let's go to some questions.

Wow, it's a very efficient demo. Manuel? I don't know if I have to introduce people, but these are all the very, very power users of these coding tools, so they actually know a lot more than me. But I like the idea. So anyway, go ahead.

Yeah, if other people have something to say first... I don't want to take up my usual space.

Go for it, go for it. Why don't you kick off?

All right. So I've been playing around with... I did something similar with GitHub Projects, but this is way nicer, right? The real time, the running the project, and all of that. One thing that's really interesting is having the agents write into other tickets what the context is to start that ticket, which is a nice way to compact the current thread. That works really nicely because you actually don't need to tell the agents what to do; you just point them to the board and say "figure it out," and they'll look at all the tickets and do all of these things, which is really fun. I don't know if you've experimented with that, but more and more I just don't give the bots or the agents any context, because I figure they will know what to do looking at these things. And this is something I'm playing with: there's this design pattern from the 70s and 80s, from agents back then, which were way more advanced than what we have because they had to deal with much bigger constraints, which is called a blackboard
system, where you basically put resources on a blackboard and then the agents look at it and do stuff based on it, which is a kanban board at the end of the day. You can put resources on there, not tasks, but just shared resources that they can update. I don't know if you've played with that kind of thing, basically putting log files up on the board, putting that kind of stuff, because they will be discovered, and I don't want to have them in a codebase, I don't want to have them shared somewhere. I don't know if you have ideas around expanding it that way.

Yeah, it's interesting. I think a lot of these are going to be solved by the actual coding agents themselves. You can imagine at some point Claude Code is going to get to a point where it can see all of the previous threads, all the previous attempts you've made with Claude Code, and then probably be able to leverage that to make fewer, better one-shot attempts at solving these tasks. So I guess we could build that stuff into this, but I think it'll probably just be supported natively.

This is kind of a bet on the future, right? 25 percent of my tasks today can probably happen in Claude Code one-shot without me needing to ever enter an IDE. I do a very cursory check of the code just to make sure it hasn't done anything stupid, but if it runs, and the button's been added, and the back-end endpoint works, I generally trust that it works. I think that number is just going to increase and increase. So what's the interface when we're at 80 percent, 90 percent, where Claude is able to one-shot? How much of that interface do you really need from the existing dev stack? And then the same is true of all of those tools: how much is going to be absorbed into Claude Code itself? Probably everything. The notion of being at the AI application layer and
calling a chat completions endpoint, I think, is just going to be completely dead. Why would you do that when virtually... and we've been thinking about the difference... so I think this goes back maybe to one of your original questions, which was around how you use these things to plan and things like that. Virtually every problem you think about, like, okay, how do we resolve messy rebases? It's Claude Code. How do we plan tasks? It's Claude Code. How do we do the task? It's Claude Code. Virtually the answer to everything at the end of the day is Claude Code, or is going to be Claude Code quite soon. So I don't know if that answers your question. Probably made no sense.

Thanks. No, it kind of does. I'm telling people, I just tell the AI what to do, don't control stuff, and it's good to hear that. More and more I just don't look at the code anymore. I put the right boundaries so that I'm not worried that it will do the wrong thing, like it can't write to the database with this API or whatever, and then just accept it and be like, this is probably better than what I would do, this is probably better tested than what I would do. So it's quite...

You've got to be careful with YOLO mode. I noticed today, every time I open a terminal now it's in a Python virtual environment, and I don't know why that happened. I think Claude has done something, just in YOLO mode, to configure my entire local host.

Anyway, so this does raise an interesting question of how you put in boundaries and validations. Should these actually be running in a sandbox somewhere? You mentioned not wanting to totally Dockerize it, but are there ways that we can put constraints around what we do and don't want these tools to do? And also how we validate the outputs, now that you talk about not looking at the outputs. To me, what's happening is source code is becoming essentially the new binary, right?
Nobody, almost nobody, looks at the details of the assembler in their binary. Sometimes you do, if you have a really tricky bug, but mostly you don't. What you do is you say: does it meet the functionality, does it run these tests, does it do this sort of thing? So what does that look like in this world?

Yeah, so your last point is probably the thing I'm most interested in, which is the testing. And this is something that I do not see Claude Code being able to really solve. When I say a sentence to Claude like "add a button here," there are a lot of assumptions being made. What do I mean by "here"? What did I mean by "button"? How big, what sort of size? There are a lot of ways in which it can misinterpret what I'm saying. So I think the real alpha, if you're not Claude, is to basically get information out of the user's brain in the easiest way possible: make it delightful for me to explain as much information as possible. I don't see why it has to be a Jira-style text box that we're describing these things in. Why can't it be more of a Lovable-style point and click, like "this is where I mean on the website"? Or if it's a back-end endpoint, why can't I describe "here's a JSON that describes the output of the request that is going to be created through this endpoint"? Basically, ways of rapidly prototyping tests, because this is the big difference between Claude Code working and not. I've noticed with my own tasks, the ones where it can just go in a loop and validate against some kind of test are the ones that work really well, and the ones that always seem to fail are the ones where it's just impossible to test for that case, because of statefulness or because it's some sort of DevOps or data or migration related thing. So that's what I think we're all going to be doing
is kind of tests, writing tests. And the two are really linked, right? Essentially the plan becomes the test. It's quite a blurry line what the distinction between those two things is.

When you talk about agents, I think a couple of things that I really liked when I saw Vibe Kanban: the diff view that you had set up was really nice, to just be able to quickly figure out what exactly changed. That's been an obstacle for me sometimes, where it's like, I don't want to look at this whole giant six-thousand-line change, just show me the parts that matter. So nice work on that. And then the other, I don't know if you touched on it too much, but I think one of the unlocks with coding agents, terminal coding agents especially, is worktrees. I think a more patch-based workflow, rather than your traditional... you kind of have to re-rig your workflow to be for agents as opposed to for humans. For humans, I want a whole PR, I want us to chat about it, et cetera. If I have a hundred agents spinning up, I just want: patch this, patch that, patch that, run these tests, does it work? Yes? Good. Okay, now tell the orchestrator what you did, leave some notes so he can keep track of everything, and then go back. So those kinds of workflow enablements were the parts that I thought were really, really solid from Vibe Kanban.

And then I'm curious: one of the things that I find useful when I'm working with coding agents is to give them persona prompts, like, I'm going to have an orchestrator, I'm going to have one of them that runs tests. On Vibe Kanban, what's the procedure for context injection when you're assigning a given task, if you have any yet?

Yeah, so I don't think... I've got thoughts on this, but they're not really related to Vibe Kanban. So we
we also have a pretty serious effort going on [unclear] to try and top SWE-bench. Basically, the architecture a few months ago that was really good at this was that kind of multi-prompt, specialized approach: you have an opinion about the steps that are needed to complete a software engineering task. Of course that works really well for the 60 percent of cases that can be solved using a very opinionated approach, but it's not great for edge cases. What we've seen, I think, is the move from having these very opinionated, big system prompts that essentially direct the model to do some chain of thought in a structured way, towards just leveraging whatever they've been fine-tuned to do natively. Especially with reasoning models, they have quite a lot of flexibility in how they can solve problems. You might have prompted it to do planning in this way, and then try and recreate the problem, and then try and solve the problem, then try and test the problem. But if you actually just lean into the model's fine-tuning, when it needs to do that, it'll just do that; when it doesn't need to do that, it won't. Currently the leader on SWE-bench is just Claude in a loop. It's not some special, complicated multi-agent, multi-prompt architecture. So I think you basically can't beat bringing the execution into the training environment, and that's just what Anthropic can do, because they've got that level of granular control. So I think less is more: you just tell it what you want, and it's just going to get better and better at figuring that out, and there's less for us to prompt on top of that.

The counterpoint to that is, I don't know if anybody's seen the Claude, Claude the web, you know, this is the
ChatGPT thing, the system prompt for that is crazy long. So there's this weird balance where the chat version of this has this huge, crazy system prompt, and Claude Code, in a loop with Claude 4, just seems to be "simpler is better." But anyway, there are probably people that have looked into that more than I have. Okay, we've got a couple of hands up. Swaraj?

I really like your demo. I'm super interested in what some of these user experiences are when you want to let the agents do their thing and you're on the outside instead of knee-deep in with them. I'm curious how you think about separating some parts of the input to every... and how maybe some things need to stay and maybe some things are stuff that we don't think about anymore, like "hey, look at this file." For example, maybe there are some design patterns that you want your agents to generally follow. Do you feel there's a place for that in this sort of UX, where you're storing this repository where it can search some composite information, instead of looking at, say, 10 files and then figuring out the design pattern at the start of every task? How do you think of that?

Yeah, it's really inefficient. Again, this is probably more insight from trying to solve SWE-bench than actually Vibe Kanban here, but there is math, you know, you can reduce... if the average SWE-bench task takes, say, probably 80 iterations of a loop with Claude in it, I would say probably 60 of those are just things that could be shared across the same repository in a lot of cases. And the same is true of running Claude Code; obviously a lot of them are very similar tasks. I don't think there's any context sharing at the moment at all, but then the thing is only, what, three months, four months old. So I don't think that's our role. Again, to kind of go
back to my earlier point, this is obviously a core thing that Anthropic themselves would want to be looking at, and so it is a terrible idea for me to be trying to solve that problem. I give them three months, and then consistent memory is probably a thing that they're going to solve.

Yeah, makes sense. Thanks.

I think it's a good... I assume that it did, but maybe I'm wrong: does Vibe Kanban parallelize? Does it support sub-agents doing some of the tasks while another agent works on another one?

So it supports running as many instances of Claude or Amp as you like at the same time. What we don't have yet is the ability to say "when this one finishes, start that one." And to be honest, I have not really found the use case for that yet, because you as a human have to review after every task, and if it's going off and doing the next thing, you're losing that opportunity to review. If it's only a 50 percent chance it's going to one-shot it, which seems to be my average, it's actually better in a lot of ways just to review every piece of work and then parallelize your workflow by working on two completely different things. So I'll get a few emails with some feedback that's mainly just UI bugs, and I'll work through those on one thread, and then at the same time I have some gnarly refactor to the way we're doing rebasing, which is purely back end, in another. That's the way I think about being most efficient: not scheduling these things to run in sequence, or to work on the same piece of work but faster, but just to work on two completely divergent, unrelated things that are not going to cause a massive rebase at the same time.

Super interesting. Yeah, I want to solve that problem where I want to just be firing them off all the time and then have some way to effectively resolve when
they clash. But maybe I'm, as always, just over-engineering it, and I should just chill for a couple of months and be okay with reading some tickets for a while, I guess.

Sure. Sean's found the website. People keep finding the website; I haven't published the website anywhere. I literally used Claude to generate a markdown file summarizing all the API endpoints, and then pasted it into Lovable and said "make me a website," and the results are mixed. I need to polish this up. It's good incentive. Thank you, Sean.

The GitHub repo is broken. I was just looking for the GitHub repo.

So there isn't one yet. We previously had a GitHub project that got 10,000 stars and about 10 serious open source contributions, so we're in two minds about open sourcing this. I think it probably will happen at some point, but I think we're at the point where all of the things that we need to do for the next two weeks are pretty obvious, and we're just going to work through them in an opinionated way as quickly as possible, and then at that point we're probably going to open it up to community contributions. The problem is, if we open it up now, we could end up like a lot of other popular, nameless AI projects on GitHub where the code quality goes to dog shit very quickly, and we just spend all of our time reviewing things and leaving things, and we just want to move really quickly. And I think it is obvious: my problems with this thing are probably very similar to a lot of your problems. That doesn't mean I don't want to hear your feedback, but that's why we haven't open sourced it yet.

The Claude Code team says the same thing about open source and Claude Code.

Yeah, there you go. Claude Code itself isn't open source. That's my cover. Manuel, you've been dying to ask something for a while.

I wanted to chime in on two things. One is, what do you do in those two minutes while your agent is
running, or something like that. For a while, before they censored the Gemini 2.5 Pro thinking traces, my favorite activity was looking at those traces, because they would tell me a lot about the project itself. I would actually get a pretty good understanding of what was going on and learn a couple of things. So I was really, really sad when they censored that stuff. I hope they bring it back, because the new thinking traces are kind of useless. The DeepSeek ones are pretty good, so often if I want to understand stuff while the agent is running in Cursor, I'll just say, "hey DeepSeek, tell me something," and it'll tell me something about the source tree. That was one thing where I really, really missed Gemini, because it was like pairing with a really knowledgeable senior developer that knows all the APIs and stuff. I really hope they bring that back. I hope that this is also an incentive for people to uncensor their thinking models, because I think there's a lot of value for developers in there, to both vibe and at the same time get a really deep understanding of what's going on.

And then the other thing that I do is, I can manage three, four, five different projects, Claude Codes running on different things at the same time. I have this infinite list of open source ideas that I want to have, and so I just vibe them on the side. I usually want terminal UIs for things, like, I want a file picker in here, I want this there. So I'll launch those on the side while the main one is running, and that's really fun. It's a little bit disturbing head-wise, but it's like these incremental improvements on all my personal tools. I have a tool to manage worktrees, and yesterday while I was working on something else I said, "add fork and merge," like, I want to fork my own worktree again. And then after the main job was done, at the same time I had fork and
merge on my other tool. I was like, okay, I'll take it. So that's something you can do, but it's pretty exhausting to jump between four projects. So those were some ideas, because I think it's a big deal. Because the workflows change so often, there were times in my workflow where I was literally a copy-paste bitch for ChatGPT, right? I would code in ChatGPT and be like, okay, my day is spent basically copy-pasting out of this window, that's what I actually do, and it's like, this can't go on. It changes so often that you always have to question what your value as an engineer is, or what your role is, and all of this. It's really interesting, because now with Vibe Kanban and the coding agents you're kind of a product or project manager, or you're the QA person, right? Because even if everything works, you still have to try it out at some point. You still have to go through everything by yourself just to make sure that it indeed works.

Well, meat accountability. There's always got to be a flesh intelligence on the hook if something goes wrong. That's what our role is.

Yeah. And I found that until very recently, because I do most of the stuff in Rust, there weren't really good coding agents for Rust until, I want to say, Claude Code and Amp, to be honest. It was mainly copy and paste up to ChatGPT, which is soul-destroying after day 180 of that.

I think the big bump with Sonnet 4 versus Sonnet 3.7 is the newer cutoff date, and then a lot of the things that weren't one-shottable but were 90-shottable before, the fact that they're now one-shottable makes a really big difference, because suddenly you can take yourself out of the loop. Charmbracelet Go TUIs were always kind of a struggle before, because they would mess up the keyboard shortcuts or mess up the format, you know.
Now that that is handled, it's crazy what more is available. And once again, all your workflow changes once a little thing suddenly becomes one-shottable.

Anyway, okay, before we go to the next question I'm going to, as promised, add some features to Vibe Kanban now that will make it into tonight's release. The obvious one that I want to ask is: is anybody not using any of the coding agents that we've got pre-configured here and would like me to add another one? Hopefully it's an easy one to add, that's just an npx command type thing. Stick it in the chat now.

Can you show the list again?

So we've got Claude, Amp, and Gemini.

Okay. I mean, Aider is the big one. We did just interview Cline, but they're not CLI, they're a VS Code extension. OpenCode is another one.

OpenCode, I saw that crazy Twitter thing of all the agents trying to shut each other off.

That's a stunt. That's just noise. That's not meaningful at all.

It's not meaningful; that's what's taking up my two minutes before I started using Vibe Kanban. But yeah, these are good suggestions.

OpenCode, Goose, Aider: those are probably the three biggest that you're missing.

Okay, great. All right, I'm going to do this in the background. Let's go to the next question.

Are you going to Vibe Kanban Vibe Kanban?

Yeah, that's what I'm doing right now.

No, no, no, show us, show us.

Oh, okay. It looks like quite a big installation, though. I think if you've already got this installed... how do you actually start it? Sort of debugging. Okay, yeah. All right, we're just going to do that. So let's take "add OpenCode agent as an executor," and we're just going to tag some relevant files: executors. "Please add OpenCode as an executor coding agent." I know there's a bit of jargon, and you guys don't have the code, but essentially an executor represents any process, so that could be the setup
script or the dev server, and obviously a coding agent is a subtype of that. The command to run it is that, and I will just change that too. This is a really obvious prompt here. The thing is, I can do a simple version of this, but then the complex version is going to be session management and follow-ups and things, so this is just going to be the basic implementation for now. Sometimes, especially with bigger codebases, it makes stupid mistakes where it'll implement this everywhere and then forget to add it to the settings. So you can give it a quick way to validate, and this is what I mean by our job as humans being to sit there and figure out what the lightweight tests are. So I would just say: "anywhere where Amp or Claude or Gemini, oops, is mentioned, you should probably also mention OpenCode." Okay. And I can't remember what that's executing with. That's with Claude. I might just try that with Amp, because I think that's going to get a better result. All right, we're going to watch this and take some questions in the background. I don't know who's first. Kevin, go for it.

Actually, David's been up for longer than I have. Let me hand over to him.

Sorry, go for it.

I think I might have gotten my answer just from what you were doing. Is everything just one-shot coming in on the command line, one prompt? Or how are you driving Claude Code?

Yeah, so there's actually very little emphasis on what is happening in the middle. When it's running, you can, if you want, go in and see the logs, and you can also follow up. For example, I was just checking one of these tasks that I started before this, and it turns out it has not really done the right thing. I wanted it to add some more themes. We currently have light and dark. I can see that it's added purple, green, blue, orange, and red, but again, this is exactly what I'm talking about: it's added the
theme but it has not added any way to choose that theme in the selector so i'm going to now follow up and be like at settings uh i don't know why the settings bar is up there oh maybe because i'm zoomed in uh settings can you make sure the user can actually select these cool new themes okay so you can follow up and so how is that how is that doing are you just doing another like command line prompt like one shot thing uh it's actually on the same thread so if we can see the raw um where's the show mode button so you can turn all of these agents on to like jsonl mode which just gives you um a bit more detail and so you can see in this case uh there's a thread id at the top here and if we add a command line argument uh called like resume i think with the thread id then it just pops onto the conversation so it's not it's not like re-one-shotting it to be honest i would say it's 50 50 whether i go for the follow-up or whether i just make a new attempt and sometimes you can make a new attempt in a different coding agent as well so try it first in amp and then try it in claude um again maybe some more insight from trying to solve swe-bench is that uh sampling and ensembling is really important and this is obviously something that claude doesn't do it's it's just you're just looking at one attempt at a problem whereas the average uh swe-bench attempt is sampling like up to ten times or or more at the moment um and when you read the traces there is really it's kind of illogical why you know why one attempt works and another doesn't because you would have thought there'd be some obvious thing like it read the wrong file or something like that but it'll literally you know you you'll look at two attempts one will work one will not work they'll both read the same file and then they'll just do two completely different code changes with almost the exact same steps before that and it's you know it's like the way these things work right they're not 
humans they're just you know they themselves are sampling and so the response to that is probably just running a few instances of the coding agent one shot and then just taking the best one is actually not a bad strategy and can probably be more efficient than asking follow-up questions yeah i kind of the reason why what i want to ask is for the ai in action bot uh like i've i coded that in one of these uh sessions and what i think i i really want is everyone in the community just to be able to ask the bot to make changes to itself uh i started working on this before claude code and it was really hard to get aider to do that in a way where it would hold things on discord but it seems like we're just at the the age now we just try it once and have it do it 10 times and pick the best one or something yeah it's a different you know you'd never do that with humans you'd never get 10 humans to do the same task but this is just we've got to kind of relearn how to do this stuff okay um was there somebody before kevin i can't remember i'm not sure kevin go for it yeah so um question i have is uh let's see a little context so i find for myself when i'm writing code now using agents to do things one of the biggest limiting factors for me is how quickly my brain can keep up with what is the current state of the system and like what are the changes that need to be kicked off or prompted um and there seems to be like actually uh manuel and i were joking like we're using amp code amp is also you know that word means current right and so we have like an amperage limit in our brains of how many amps we can have going at a time and like actually have things keep up and depending on how rested you are like in the morning you know morning fresh maybe i can handle four amps uh you know by this time on a friday i'm down to one maybe two right and so like i guess the there's also this cognitive thing we had this week right where you need to think strategically and 
at some point you just can't think strategically anymore so it's better to just not prompt the llm at that point yeah so i guess what the question that i'm so that's the sort of overlying context is there still seem to be cognitive limits that i at least we are running into in terms of how we think strategically about the system how we uh are able to keep updating our brain state of what the system is so i'm kind of curious how you are thinking about that if you've run into similar problems and how uh what you know how vibe kanban either today or in the future is like kind of helping with that so just to replay that how does vibe kanban work around our changing just like how are you are you still running into those does viewing it as a kanban board actually change your ability to keep track of it like is that helping you like i'm just we're trying to adapt to this world of agents and that's where i found the new limits for me are just like my brain can't update fast enough i you know i do feel that so my uh the the thing i keep finding myself doing is coming in to review a task clicking the start dev server button going over to the dev version of whatever it is that's running and then immediately having no clue what i'm what i'm supposed to be reviewing because i've forgotten and i literally looked at it about two seconds ago and it's probably a function of too much context switching i think i don't know i i i don't know how that really gets solved with better ux you know this is definitely better than terminal because at least there's some visual difference like this is in this column and that's in that column and there's different colors and stuff um but at the same time it does encourage you to uh to do much more and and so you're context switching much more i i i think the long term is that right now you can think of coding agents or just like ai assisted coding in general on a spectrum of like how long does it take before a human has to 
intervene and you start off with github copilot right where it's running for two seconds and then a human has to intervene and then you upgrade and you and you get to like cursor and you know that's running for like 20 seconds and then the human has to you know get involved again and then you have you know the next generation of coding agents which uh kind of like the claude code things which run for two minutes and then at some point you know we'll get to the the devin reality where you where you have like half an hour to an hour you know running and then you can come back and check it and yeah this so this is probably just like a moment in time where these things are running for two minutes and my hope is that in the future like they'll run for much longer or you know we will it'll it'll do enough work like me checking like one little widget and then jumping back in checking like another little widget isn't super useful you know doesn't feel like a great use of my time like i feel like i could be bundling these into much bigger changes that i review and i and then i'm kind of in the zone with that one change for five ten minutes half an hour whatever um and that's the direction of travel i think is is longer changes and you know reflects the the you know different generations of tools we've been seeing for two or three years now does that answer your question at all sort of um so i think a thing that i'm grappling with to some extent like one way of thinking about it is just asking continually asking the question what is the job of a software engineer anymore but there's more in the sense that i have seen that even though like the surface layer prompts that we're doing are as you say they're very simple they're very intent based they're not doing there's not very much context in there i have seen that when i do that in a system that i understand well i get substantially better results something subtle about how i'm prompting it is different and it gets 
substantially better results and i'm also able to correct it when i don't uh when it goes off the rails and when i do that in a system that i understand poorly it is i get systematically worse results and i often don't see the issues early enough and so it goes off for a long ways before i'm like oh that was a mistake i need to come back and redo that in a different way and so what that points to is that there is still at least at this point and i suspect i because i'm because i'm in some ways a skeptic despite being way out on the edge of this i suspect there will always be some value in actually understanding the kind of architecture of your system in some way because you'll be able to guide or maybe that's codified in a document somewhere that the agent can pick it up i don't know but like i think there's some amount of mental modeling that is still having to happen and i'm like the problem i'm grappling with in all these shapes is like how do we still build that model that model used to get built as you were writing the code right you're writing and it's absorbing it and we're delegating a lot of that writing so how do we still update our mental models for what the system is so that we can guide these agents in the right direction maybe frameworks just having like very opinionated ways of building these things that is predictable so i don't know you know it goes off and does something but i kind of trust that the routes are going to go in the routes folder and the model is going to go in the models folder and it's not going to put sql queries outside of the model i don't know same way you'd work with um i guess contractors who have never been in your repo before it works a lot better when you're using you know like java spring boot and you're hiring java spring boot developers versus like you know generic java projects because of that shared context that everybody's got um manuel there we go i was looking for my cursor um one thing i i find myself doing for the 
like oh when you don't know how to how to approach something right and like it's it's hard to even decompose something into tasks because you don't even know what to ask for uh one thing i do is i have manus and then i'll just throw really like the most random shit at manus and say like please build like what did i do yesterday i just bought a drone i was like please build like a drone motion planning engine which i don't even know what that means like i just put some words together right like i have a rough idea and then i'll just like do that and like launch three of them which of course is like costly but also well i'll just do like o3 researches or like deep researches and paste those into manus and say like can you please implement whatever this algorithm is about um and the way i i think of because kevin was saying that oh source code is like the new binary which is like a it's like the analogy only goes so far but in many ways running an llm on a prompt you can think of it as compiling you know and sometimes you'll get like compile errors which in this case is like oh what came out is not what i want it doesn't mean like oh what came out doesn't work it means like oh what came out is like not necessarily what i want so i have to go back fix my code and i think that analogy is is pretty interesting to to you can approach llms as kind of like a more like a notebook as well right like where where suddenly you're in a notebook and you're just going to try stuff out see what comes out refine it try it again um and that way of thinking about what do we do with all this compute like right how how do we leverage the compute basically not just building prototypes and throwing them away but like thinking of it as i'm iteratively trying to get to the code i want to generate but the code itself is just a single run is just like one attempt of doing something um it's costly yeah that all makes sense yeah um david so i put this in chat is there any way to have 
this shared or running on a server or this is just only you have it on your own machine and only one person's using it at a time yeah well i think there are already like quite a few options for running stuff in the server and i tried a few of them out you know you can kind of link you know web-based agents to linear and stuff like that but um i guess there's for whatever reason it's just nicer to kind of work on localhost and um and it's very deliberately uh you know based around your local tooling and the agents you install locally rather than the ones you you'd run in the cloud i guess at some point in the future my computer will start going too slow for me to keep parallelizing agents and and i will need to move to some kind of cloud infrastructure to run you know so that would be like step one of moving to the cloud probably would be when you hit you know start that goes off and it runs somewhere else and actually the sandboxing thing that was mentioned earlier you know that even just for that reason you know that could be a good reason to do that and then yeah at some point if you ever want to use this at scale like you'll you know your boss at a big bank is probably not going to let you just roll this they're going to want some kind of overview of what's happening you know and and observability and and analytics about which coding agents people are using and you know and i expect people to to keep changing you know i don't think this is going to settle down and and this text box will have 50 options in it you know at some point probably um and so there is potential i guess for a cloud version that supports some of the the more boring like observability security compliance stuff that teams need to use this for serious workflows yeah i mean i'm thinking more again as like the ai in action bot giving the community the ability to make changes or you know theoretically in your case you know you're talking about you don't want to open source it because the 
contributions you get are so terrible but what if you had you know people be able to you know use this directly i mean still a terrible idea but a little bit more you know what you'd expect it will it will happen at some point i'm i'm a little confused about this like uh i'm revealing that i'm not really actually a developer and i don't know how this stuff works but like if you npx install vibe-kanban and you go to like npmjs.com you can see the like the code is there is it not like if we wanted to run this on our own is it i don't understand like maybe i'm missing something the source there's a difference between the source code and the compiled code that you can run so the source code is not open source i see i see so so like running it on a server like let's say you log into a server and just run npx install kanban like is it not running on a server then it is it is you just don't have the original source code i see okay okay okay okay i think i've got open code running i've just like brew install how's this thing working without an api key i do not really understand but this is crazy okay all right let's try it so uh add open code agent we're gonna start the dev server let's get an auspicious start going sometimes this fails that's just my project setup okay looks like it's running um let's add a well we're just gonna prove it works first so i've got my one plus one test and we're gonna run it oh it's done it again it hasn't added it to the settings i don't know why it loves like making changes and then never adding them okay but it is up here okay so it's just forgotten to add it to the settings in one place this is like really classic this is this is like again you know you need to design applications in a slightly different way to kind of centralize things like this um so uh you forgot to add this as an option in the task details i think there's a task details toolbar yeah that sounds right okay and in the meantime we can review the other thing i was 
playing around with which is add some more themes so for those of you that weren't following it uh added some themes and then it forgot to give me a way to actually select those themes so i asked it to add the themes as options to the settings and then i realized the uh well first of all that didn't actually work so i just basically wrote fix and then i realized the logo looked a bit off so this is something where i think like the playwright mcp or something like that could probably be quite useful i haven't hooked that up to this project yet um you can see if i start the dev server we have blue purple lots of other beautiful themes there so i'm going to go ahead as promised and merge something live on camera this is obviously going to fail because i'm doing it live oh my god it worked that feels great that is going to go out in today's release everybody and the open code one has also followed up so let's try this one out are we gonna land two today that's the real question um first of all we need to rebase this because we just merged something so rebase onto main successful now we'll get the theme i just set because we've just rebased um and let's try again and see okay we've got open code it's running this is there's no way this is going to work this is gonna fail horribly suspense is killing me uh okay that is actually the wrong error that is because so the dev server config is messed up um just killing everything on that board anyway any final question this this is an hour long sean uh yeah it is um and you've already done amazing we have one merge on the channel so that's great yeah um cool well yeah i mean i will it obviously it's yeah this will this will you know take a little bit of back and forth probably but oh it's working what okay i don't know what the i need to look into the logs and make these pretty like we have for claude but that's definitely coming from the executor so we half did it guys all right amazing thank you for dropping by amazing 
work um i think the kanban form factor is useful and like obviously where something like this should exist come back and thanks for all the feedback i have been following along in the comments and i'm gonna look at these after the call as well there's a lot of really great suggestions after the questions people are asking as well um that has been super helpful uh my email i'll just drop it in the chat if you ever have feedback uh please just ping me we have a success rate of responding to all feedback by delivering it as fixes within 24 hours so far for the six days of this project's existence and i will endeavor to keep that up so please send it through okay amazing um yeah thank you you want to end yeah all right thank you and folks who want to give talks like this sign up in the discord just tell the ai in action bot that you want to give a talk and then we don't have to depend on swyx sourcing no it's just my pleasure like something cool like i know the person all right thank you everyone bye