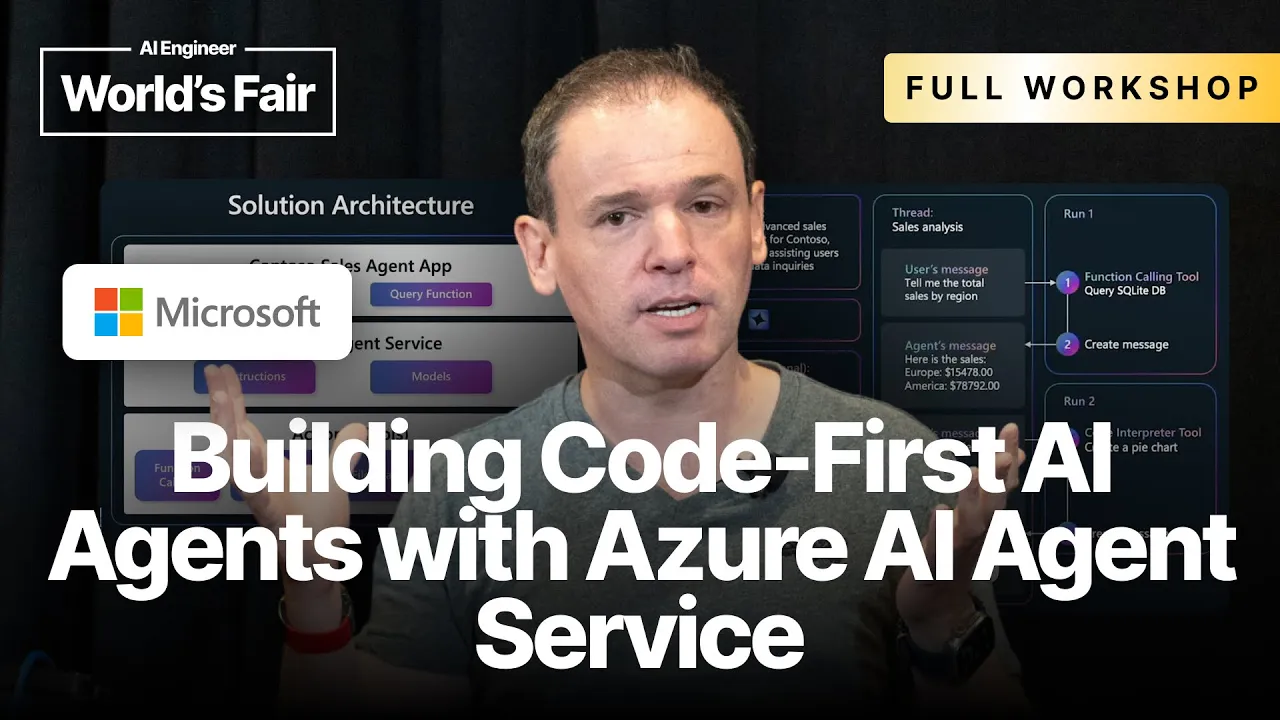

Building Code First AI Agents with Azure AI Agent Service — Cedric Vidal, Microsoft

Transcript

Well, this is very exciting because, I mean, 2025 is clearly the year of agents. Compared to the past two years, things have moved so fast. We went from very simple prompts, which were already incredible, to the next step, where we have agents that can autonomously achieve goals without us telling them exactly how to do it, which is quite incredible.

But the question is, how do you make them? That's what we're going to see today. I am Cedric Vidal, Principal AI Advocate at Microsoft. You can find my information down below. Today I'm going to be your host, and I'm going to be helped by our proctors, by Mark.

And I'm sorry, I forget your name. I feel terrible. Nakmar? Did I say it correctly? Okay, thank you. So a big thank you to you two for helping me today. During the workshop, if you have any questions, please raise your hand, and Mark or Nakmar will come and help answer them.

I feel super bad. Anyway, like I said, I'm going to set the scene first. What are we going to do today? In order to get our hands on the keyboard and show you how to create an agent, we're going to use the following use case.

Imagine that you are working for an outdoor and hiking equipment company that sells equipment online. What you want is a system that lets you analyze your sales data mixed with product information and generate ad hoc diagrams: basically a UX that your salespeople can use very easily. We move away from the old paradigm, where we had to hard-code every single use case, every single view, every single query; now the database queries are going to be generated automatically.

The UX is going to be generated automatically to accommodate the type of information that you are displaying, and we are going to see how to create such an application. So, an agent: what is it? The definition of an agent has changed so often. Let's be honest, even the specialists in the industry don't agree exactly on what agents are.

Even the definition of what an agent is has evolved over the past three years, as people got more acquainted with agents and discovered what we could do with them. You would imagine that a definition should be set in stone, but in this case, it's been difficult to agree.

The definition we're going to use today is that an agent is semi-autonomous software that, given a goal, uses tools and information pulled from databases and data stores at large, and iterates until it achieves that goal, that is, until the system stabilizes and the goal is met. That's the general definition, but we're going to see that, depending on the context, it can be simpler or more complex.

In order to do that, an agent should be able to do three things. First, reason over a provided context, performing cognitive functions such as deduction, correlation, and understanding cause and effect. That's the whole domain of cognition, and LLMs have now proven that they can do a lot of it.

Not perfectly, but they're getting better every day. The second one is integrating with data sources for context, and the last one is acting on the world. Acting is what lets the system stabilize and be useful: the first generations of LLM-powered systems were just about pulling information and displaying it.

But now we are moving a step forward: we are acting on the world and modifying the environment until it stabilizes and reaches the expected goal. So, what kind of application are we going to build today? It will look like this. This is a screenshot of a slightly different application.

What you're going to build today does not look exactly like this, but the idea is the same: you can ask a question in plain English, such as "Show the sales of backpacking tents by region, and include a brief description in the table about each tent." The agent pulls information from the database as well as the product information, mixes it together, reasons about it, and displays the result, and the form of the UX depends on the type of information requested.

Here it's a table; here it's a pie chart, because the question here is "Create a pie chart of sales by region," so the system understands that we want a visualization. Okay. What technology are we going to use today to build our system? We are going to use Azure AI Agent Service.

Before I dig into the details of what this is: you have so many ways to build an agent today, so many frameworks: LangChain, LangGraph, Semantic Kernel, and many others. Azure AI Agent Service has the advantage of being stateful and quite easy to put together.

Usually, when you build any kind of LLM application, I don't know if you're aware, but the model API is stateless. You need to manage the state client-side, so it's the responsibility of the application developer to store the conversations and to handle all the logic of pulling information from various systems, as well as executing the functions, the tools.

Agent Service moves all that responsibility to the cloud, on the Azure platform, and everything is managed. That comes with pros and cons, and we're going to see what they are. On the pro side, the big advantage is a very simple development workflow, because all the state, the context, and the agent configuration are managed in the cloud.

The integration with back-end data and data sources is also managed in the cloud. You can also mix and match: things managed by Agent Service in the cloud can be combined with things managed locally. It supports all the model families that are supported in the Azure AI Foundry model catalog, plus Microsoft enterprise security, which is well known for being very robust.

It's sometimes a bit difficult to set up, but that's the price to pay for security. The application that I just showed was using Chainlit. The one we are going to build today is going to be command-line, which is slightly easier. Basically, at the top, you have the application layer with the framework.

Here that's Chainlit; in our case, it's going to be a very simple, very basic command line with a query function, which uses Azure AI Agent Service with instructions and models, plus actions: function calling, which I'm going to explain, and Code Interpreter, which I'm also going to explain.

File search, and grounding with Bing Search for web information. We're going to go through each one of those during the workshop. Like I said, Azure AI Agent Service comes with pros and cons. The pro is that it manages everything for you. That's a big pro, right?

The con is that you need to understand the diagram, more or less. You don't need to understand all the details, but... let me change sides. First, you're going to have to follow a sequence of steps, and there are quite a few of them for the agent to work.

First, you need to create an agent. Once you have created and configured your agent, it exists in the cloud. That's kind of weird at first, because when you're used to the stateless way of doing things, a stateful programming model is not so common these days. But it becomes relevant again in the age of agents.

This means that if you have an application that wants to reuse an agent you created before, you need to reconnect to the existing agent. It's kind of like in SQL, where you only create a table's schema if it does not exist yet.

So, for most applications, it's a kind of "create or update agent." You create the agent. Then you create a thread or reuse one. Then you run the agent on the thread. Then you check the run status. And then you display the agent's response. Those are the big steps you need to get familiar with when building with Azure AI Agent Service.
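The create-or-reuse agent, thread, run, poll, display sequence can be sketched with a toy in-memory service. Everything below is hypothetical: `ToyAgentService` and its method names are illustrative stand-ins, not the Azure AI Agent Service SDK, and the "model" simply echoes the last user message. The point is only the shape of the stateful workflow.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Agent:
    id: str
    name: str
    model: str
    instructions: str


@dataclass
class Run:
    id: str
    thread_id: str
    agent_id: str
    status: str = "queued"


class ToyAgentService:
    """Hypothetical in-memory stand-in for a stateful, server-side agent service."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.agents = {}   # agent name -> Agent (this state would live in the cloud)
        self.threads = {}  # thread id -> list of (role, content) messages
        self.runs = {}     # run id -> Run

    def _new_id(self, prefix):
        return f"{prefix}_{next(self._ids)}"

    def create_or_update_agent(self, name, model, instructions):
        # Like "create the table only if it does not exist": reuse an existing agent.
        agent = self.agents.get(name)
        if agent is None:
            agent = Agent(self._new_id("asst"), name, model, instructions)
            self.agents[name] = agent
        else:
            agent.model, agent.instructions = model, instructions
        return agent

    def create_thread(self):
        thread_id = self._new_id("thread")
        self.threads[thread_id] = []
        return thread_id

    def add_message(self, thread_id, role, content):
        self.threads[thread_id].append((role, content))

    def create_run(self, thread_id, agent_id):
        run = Run(self._new_id("run"), thread_id, agent_id)
        self.runs[run.id] = run
        return run

    def poll_run(self, run_id):
        # Toy state machine: queued -> in_progress -> completed.
        run = self.runs[run_id]
        if run.status == "queued":
            run.status = "in_progress"
        elif run.status == "in_progress":
            # A real service would call the model and tools here; we just echo.
            _, question = self.threads[run.thread_id][-1]
            self.threads[run.thread_id].append(("assistant", f"(answer to: {question})"))
            run.status = "completed"
        return run.status


service = ToyAgentService()
agent = service.create_or_update_agent("sales-agent", "gpt-4o", "Answer sales questions.")
# A second start of the app reconnects to the same agent instead of duplicating it.
same_agent = service.create_or_update_agent("sales-agent", "gpt-4o", "Answer sales questions.")

thread_id = service.create_thread()
service.add_message(thread_id, "user", "Tell me the total sales by region.")
run = service.create_run(thread_id, agent.id)
while service.poll_run(run.id) not in ("completed", "failed"):
    pass  # a real client would sleep between status checks
role, reply = service.threads[thread_id][-1]
print(role, reply)
```

Because the agent and its instructions live on the service side, the client only sends the new user message on each turn; the instructions are not resent.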

Then you configure instructions. Those instructions are attached to the agent, and they are stateful: they are deployed in the cloud. Once they are there, you can reuse your agent without sending the instructions every single time, which saves bandwidth.

Then, you configure your model. You add data sources. So, one of the pros of using Azure AI agent service is that you can attach data sources directly to the agent. And you can do that either graphically through Azure AI Foundry or you can do that programmatically through the SDK.

Then you can attach tools, and we're going to see how to attach each one of them. Some of those tools can be client-side, managed by the application code; some can be managed by Azure. Today, we're going to see file search, Code Interpreter, function calling, and Bing Search.

Here's an example of what a thread looks like. The user's message is "Tell me the total sales by region." In order to get the total sales by region, we first need to query the sales data store.

In this case, the sales data store happens to be a SQL relational database, and as you know, the way to interact with it is through SQL queries. So we generate a SQL query dynamically, depending on the user's request. Then we execute that query on the database, get the list of records back, and re-inject those records into the LLM, which generates a plain-text message from them.
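The generate-query, execute, re-inject loop can be sketched with Python's built-in `sqlite3`. This is a sketch under stated assumptions: `fake_llm_generate_sql` is a hypothetical stand-in for the model (it returns a fixed query), and the table and rows are made-up toy data, not the workshop's database.

```python
import sqlite3


def fake_llm_generate_sql(question: str) -> str:
    """Hypothetical stand-in for the LLM.

    A real agent would generate this query from the question plus the table schema.
    """
    return (
        "SELECT region, SUM(revenue) AS total FROM sales "
        "GROUP BY region ORDER BY total DESC"
    )


def answer_with_sql(conn: sqlite3.Connection, question: str) -> str:
    sql = fake_llm_generate_sql(question)
    rows = conn.execute(sql).fetchall()
    # The records are turned back into text and re-injected into the model's context,
    # which would then write the plain-language answer.
    records = "\n".join(f"{region}: {total:.2f}" for region, total in rows)
    return f"Question: {question}\nSQL: {sql}\nResults:\n{records}"


# Toy data, made up for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("Europe", "Tent", 100.0),
        ("Europe", "Backpack", 50.0),
        ("North America", "Tent", 200.0),
        ("Asia", "Stove", 75.0),
    ],
)

context = answer_with_sql(conn, "Tell me the total sales by region.")
print(context)
```

Since the model writes the query, a real system would typically run it through a read-only connection or a restricted database user.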

So SQLite is just an example? Yeah, of course, it's an example. We use it in the agent we're building today just because it's convenient, but you can connect to any database you want, a document database, an API; it really doesn't matter.

That's for the tools managed locally. For the ones managed by Agent Service on the back end, the list is more restrictive, and to be honest, I don't have it at the top of my mind. You're not going to show any RBAC or anything? Sorry? You're not going to show any role-based access control today?

Not today, not today. It's a more advanced topic, obviously, and it depends a lot on your use case. Next, "Show it as a pie chart," which is the second question from the screen I showed you earlier. This one is quite interesting, because we're going to use a tool called Code Interpreter.

It takes the query and generates Python code, and that code is executed in a secure, safe sandbox. The code can use whatever Python packages are available in the sandbox environment. Usually, you use it to generate diagrams.

The agent generates the Python code that produces the visual representation and executes it. The code saves the image somewhere on the file system inside the sandbox. Then the agent pulls that image out of the sandbox and sends it back to the client application.
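The generate, execute, collect-the-file flow can be mimicked locally with a subprocess in a throwaway directory. Treat this as an illustration only: the "generated" code is a hard-coded hypothetical string that writes a tiny SVG bar chart, and a subprocess in a temp directory is not a secure sandbox like the managed Code Interpreter.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical code "generated by the model" for: create a chart of sales by region.
GENERATED_CODE = '''
sales = {"North America": 200, "Europe": 150, "Asia": 75}
bars = [
    f'<rect x="10" y="{i * 30 + 10}" width="{value}" height="20"/>'
    for i, value in enumerate(sales.values())
]
svg = '<svg xmlns="http://www.w3.org/2000/svg" width="260" height="110">' + "".join(bars) + "</svg>"
with open("chart.svg", "w") as f:
    f.write(svg)
'''


def run_generated_code(code: str, timeout: float = 10.0) -> dict:
    """Run code in a throwaway directory and collect the files it wrote.

    NOTE: a subprocess in a temp dir is NOT a secure sandbox; it only mimics the flow.
    """
    with tempfile.TemporaryDirectory() as workdir:
        result = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode (ignores env vars, user site)
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
        if result.returncode != 0:
            raise RuntimeError(f"generated code failed:\n{result.stderr}")
        # "Pull the image out of the sandbox" before the directory is deleted.
        return {p.name: p.read_bytes() for p in Path(workdir).iterdir() if p.is_file()}


artifacts = run_generated_code(GENERATED_CODE)
print(sorted(artifacts))
```

The client then receives the file bytes (here, `chart.svg`) rather than the code, which is what lets the UX show a chart for a chart-shaped question.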

Okay, like I said, this is quite a lot to digest, right? But you just have to go through it and get a mental model of how it works. Once you understand that, you don't have to manage it yourself, which is quite nice. Today? Do we have a vector database today?

I'm blanking. I think we do, but I'm going to double-check, because we ingest the documents, and I think we ingest them into an AI Search instance. One more very important thing: function calling. To be honest, function calling is not new when it comes to LLMs.

I was doing function calling literally when the first version of ChatGPT was announced, by asking the LLM to give me answers separated by commas: generate a function name and an argument, separated by commas. You can still do it, by the way. But nowadays, it's much more efficient to use structured output.
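A small example shows why structured JSON output beats the comma-separated trick. The function `book_table` and both model answers are hypothetical, and the JSON envelope shape is illustrative rather than any specific vendor's format.

```python
import json

# Old style: the model was instructed to answer "function,arg1,arg2".
# Hypothetical function: book_table(restaurant, party_size).
csv_answer = "book_table,Chez Paul, Lyon,4"
fields = csv_answer.split(",")
print(fields)  # the comma inside the restaurant name produces one field too many

# Structured output: the same call as JSON (this envelope shape is illustrative).
json_answer = '{"name": "book_table", "arguments": {"restaurant": "Chez Paul, Lyon", "party_size": 4}}'
call = json.loads(json_answer)
print(call["name"], call["arguments"])
```

JSON gives each argument a name and a type, so values containing the delimiter no longer break parsing.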

It generates well-formed JSON. The LLMs are even optimized under the hood, inside the data center, to generate JSON efficiently. So, the principle of function calling: actually, the name is bad. I've hated that name ever since it was coined, because it's not function calling; it's function routing. The LLM does not call anything.

An LLM runs on a GPU; it's not going to call any code. What it does, rather, is generate a JSON representation telling you which function to call with which parameter values. It decides which function to call, maps the natural-language sentence to it, and extracts the values to pass as parameters to that function.
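The routing idea, where the model only emits a JSON call and the application executes it, can be sketched with a plain dispatch table. The tools, their data, and the `{"name": ..., "arguments": {...}}` shape are hypothetical; real APIs wrap the call in their own envelope (for example, arguments may arrive as a JSON-encoded string that needs a second `json.loads`).

```python
import json


# Hypothetical tools with made-up data; the model never runs these itself.
def get_sales_by_region(region: str) -> float:
    return {"Europe": 150.0, "North America": 200.0}.get(region, 0.0)


def order_pizza(size: str) -> str:
    return f"Ordered a {size} pizza"


TOOLS = {"get_sales_by_region": get_sales_by_region, "order_pizza": order_pizza}


def execute_tool_call(model_output: str):
    """The application, not the LLM, turns the routing decision into a real call."""
    call = json.loads(model_output)
    func = TOOLS.get(call["name"])
    if func is None:
        raise ValueError(f"model requested an unknown tool: {call['name']}")
    return func(**call["arguments"])


# What the model might emit for "What were our sales in Europe?"
model_output = '{"name": "get_sales_by_region", "arguments": {"region": "Europe"}}'
result = execute_tool_call(model_output)
print(result)
```

In a full loop, `result` would be sent back to the model so it can phrase the final answer.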

And then it's the responsibility of the application code to take that function-call specification and actually execute the code. That's what it does. Yes? How does the LLM know which function to use? Is it like a classification model? Do you know how it works under the hood? Oh, that is an excellent question.

Well, it's exactly the same way as when you ask a question. For example, when you ask "What is the color of the sky?", one of the very first use cases, the answer is going to be "blue" most of the time. The way LLMs work is that they model a statistical distribution.

There is a probability for the word that comes next. When you ask "What is the color of the sky?", the most probable continuation is "The color of the sky is," and after "is," the most probable color is "blue," because that's how the model has been pretrained, right?
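A toy distribution makes the statistical picture concrete. The probabilities are invented, and real models work over tokens rather than whole words. The optional `allowed` set is a hard-masking illustration of narrowing the output space; instructing the model, as in the talk, shifts the probabilities rather than masking them outright.

```python
# Made-up probabilities for the word following "The color of the sky is".
next_word_probs = {"blue": 0.62, "gray": 0.15, "clear": 0.10, "red": 0.08, "pizza": 0.05}


def most_probable(probs, allowed=None):
    """Greedy pick, optionally restricted to an allowed set (renormalized)."""
    candidates = {w: p for w, p in probs.items() if allowed is None or w in allowed}
    total = sum(candidates.values())
    renormalized = {w: p / total for w, p in candidates.items()}
    best = max(renormalized, key=renormalized.get)
    return best, renormalized


word, _ = most_probable(next_word_probs)
restricted_word, restricted = most_probable(next_word_probs, allowed={"gray", "red"})
print(word, restricted_word, round(restricted["gray"], 3))
```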

But if you ask "What is the color of the sky?" and add "To give me the answer, you can only use color= followed by the value of the color," then you're giving an instruction. You're telling the LLM, here's the output that I want.

And then it's going to generate "color=blue," because you constrained it statistically: in the world of all the possible answers, you're narrowing down the type of output that you want generated. So, color=blue. Then you parse that answer.

And you interpret color=blue into whatever you want to do with it. In the world of function calling, it's not color=blue; you have a tool, say, set color, which takes a parameter with the value of the color. And instead of having just one, you have many possible functions.

Same statistical probabilities: imagine you have one function to set the color and another function to order a pizza. If you ask what the color of the sky is, the model is not going to generate an order-pizza call, for the same reason. That's exactly how it works. So how do you decide between function calling and creating your own classification model?

Well, a classification model cannot extract values, entities, out of a context and answer across multiple dimensions at the same time. Classification just picks one out of n possibilities, so it's an entirely different type of task. Okay, let's move on. I want you to open the following URL on your laptops.

I'm sorry, it's not very big, so I'm going to spell it: Microsoft events dot learn on demand dot net. Microsoft events, all attached, dot learn on demand dot net. Is everybody on the page? Okay. Then you're going to log in.

Be very careful, this is very important. You need to choose Microsoft account; well, usually you're going to use Microsoft account. Huh, I thought we had all the types of... okay. If it's a personal account, you need Microsoft account. If you have a corporate account, one managed by your company through Entra, you need to select Entra ID.

It's kind of misleading: do not select Microsoft account if you use a corporate account. Use Entra ID. Then you're going to land on a page with a Redeem training key link. Click on it, and you'll be asked to enter a training key.

Enter the following training key. Hopefully this is big enough for everybody to see, but let me know. Remember, raise your hand if you have any questions. You can also use a personal Microsoft account, like an Outlook.com account, or an Xbox account if you have one.

Any Microsoft account on any of the consumer domains works. So in your case, you might want to try a personal account; to be honest, it's usually easier today. You do not need to use a corporate account today; there is absolutely no need. I just have a question about that first slide you had up.

So you're saying an agent can do a SQL query, come back, use the code interpreter, and then make a graph. Is there any reason you're using agents for that, as opposed to just chaining all the workflows and injecting the tools? You could totally do that. Yeah. I'm just wondering if there's a reason why we're using agents for that sort of stuff.

Well, like I said, we need to go back to the definition of what an agent is. It's a bit of an overloaded term these days; it encapsulates a wide range of definitions, including the simplest. Today, we are neither in the simplest use cases nor in the more complex ones.

For example, at the beginning, I said that one of the things an agent can do is be goal-driven and iterate until it achieves the goal. Today, we are not going to see that specific thing; we're going to stop one step short and stay in the middle in terms of complexity.

Sorry. We are going to go beyond simple completion, but we are not going to see the looping. We are going to see a mix of tools and Code Interpreter with multiple data sources, where the information from all of them is combined to reason and act on the world.

We're going to see that. And yes, you could do that using just an LLM locally; nothing would prevent you from doing it. Except today, we're using Agent Service, which manages everything server-side. I'm also going to give examples so that you get a sense of exactly where the current architecture hits its limits, and where you need to go further and use planning and orchestration that loops until it reaches the goal.

And I'm going to give an example where you see where it breaks. Okay, where were we? Training key. Okay. Then you click on Launch. Building? Oh my god. It's not prebuilt. I thought it would be prebuilt. Did everybody click the Build button? Yes? Okay, please do. So that means we have eight minutes to answer questions.

We just had one, thank you. Yes, one more. If an agent is just an LLM running in a loop until it thinks it's done what you've asked it to do... Yes. How does it know... When to stop? Yeah, how does it know when to break out of that recursion?

Yeah, so today, we are going to see the limits of not looping. There are two types of agents, two levels of complexity. At the simpler end of the spectrum, an agent does not have to loop to be called an agent. In order to go one step further and do that looping, you need to use something like AutoGen, for example.

For those types of agents, you need to define a criterion for done, a definition of done, basically. The criterion can be implemented programmatically and deterministically, or with an LLM that decides whether it's done: is the task finished, have we accomplished the goal? And the workflow engine loops until the goal is reached.
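A loop with an explicit definition of done, plus a safety cap for when the goal is never reached, can be sketched in a few lines. The goal, the region list, and `fetch_next_region` are hypothetical; `is_done` here is deterministic, but it is the place where an LLM-as-judge call could go instead.

```python
from typing import Callable


def run_until_done(
    step: Callable[[dict], dict],
    is_done: Callable[[dict], bool],
    state: dict,
    max_iterations: int = 10,
):
    """Loop a step function until the definition of done holds, with a safety cap."""
    for iteration in range(1, max_iterations + 1):
        state = step(state)
        if is_done(state):  # could be a deterministic check or an LLM-as-judge call
            return state, iteration
    raise RuntimeError(f"goal not reached after {max_iterations} iterations")


# Hypothetical goal: gather sales figures for three regions, one tool call per step.
ALL_REGIONS = ["North America", "Europe", "Asia", "Oceania"]


def fetch_next_region(state: dict) -> dict:
    regions = state["regions"]
    return {"regions": regions + [ALL_REGIONS[len(regions)]]}


final_state, iterations = run_until_done(
    fetch_next_region, lambda s: len(s["regions"]) >= 3, {"regions": []}
)
print(iterations, final_state["regions"])
```

The `max_iterations` cap matters because, as noted in the talk, judging "done" is the tricky part: a check that never returns true should end in an error, not an endless loop.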

But it is the trickiest part, in my opinion. When you develop an agent, figuring out when to stop is tricky. For example, I've done a couple of prototypes with Browser Use, a well-known open-source agentic system for browser navigation. When you ask it to complete a task on the web, it navigates from website to website, reads the pages, and takes actions.

Its evaluation of when the task is done is clearly not always perfect. Yes? Sorry? Mine might be related. Yeah. My first question is: are we going to learn today how to send feedback to the agent, in case it gives incorrect or incomplete answers?

You mean the looping? Yeah. So, no, not today. Not today. The other question is: what's the difference between an AI agent and MCP? Oh. I don't know what that... So, it's very easy. It's a very easy explanation.

Basically, MCP is just a tool, a function. Remember I explained what a function is? An LLM is able to do function routing: decide which function to call for a given question. MCP is just that, plus management of the lifecycle of the program that executes the function.

With normal function calling, if you just take a GPT or Llama model and do function calling, what the LLM returns is just a JSON object telling you the name of the function and the value for each parameter, nothing more. It's your responsibility as an application developer to execute the function.

So, do I need to use MCP for that? No, it's not that you need it. It's one of the existing technologies out there that you can use to provide tools. The advantage of MCP, at least for a client-side AI application developer, is that the MCP client takes care of fetching the executable, whether it's a Node or Python program, or a Docker image.

It pulls the executable onto your machine and executes it automatically. It's an overall protocol that also lets the tool declare its functions. So from the user's standpoint, you just declare, "I want to use a file-system MCP server" or "I want to use a Blender MCP server" or whatnot, and that's all you have to do.

You can select it from a catalog, and it auto-declares what tools it has and is started and stopped automatically. When you're done with your MCP client, it stops all the MCP servers and cleans up everything. Is it... where are we with the... Okay.
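The two things MCP adds on top of plain function routing, self-declared tools and managed server lifecycle, can be sketched with a context manager. `ToyToolServer` is hypothetical and is not the MCP SDK; in the real protocol, discovery and invocation are JSON-RPC messages (`tools/list` and `tools/call`) exchanged with a separate server process.

```python
from contextlib import ExitStack


class ToyToolServer:
    """Hypothetical stand-in for an MCP server; not the real MCP SDK or protocol."""

    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # tool name -> callable
        self.running = False

    def __enter__(self):
        self.running = True  # a real client would launch a process or container here
        return self

    def __exit__(self, exc_type, exc, tb):
        self.running = False  # ...and shut it down here
        return False  # do not swallow exceptions

    def list_tools(self):
        # Self-declaration: the client learns the tools instead of hard-coding them.
        return sorted(self._tools)

    def call_tool(self, tool_name, **arguments):
        if not self.running:
            raise RuntimeError(f"{self.name} server is not running")
        return self._tools[tool_name](**arguments)


servers = [
    ToyToolServer("filesystem", {"read_file": lambda path: f"<contents of {path}>"}),
    ToyToolServer("math", {"add": lambda a, b: a + b}),
]

with ExitStack() as stack:
    running = [stack.enter_context(server) for server in servers]
    catalog = {tool: server for server in running for tool in server.list_tools()}
    total = catalog["add"].call_tool("add", a=2, b=3)

# Leaving the block stopped every server, even if an exception had been raised.
all_stopped = not any(server.running for server in servers)
print(total, sorted(catalog), all_stopped)
```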

Where are we? Oh my god. Oh my god. Does it give a time? Seven minutes? Oh my god. I've got a question. Did we forget to do something? Yeah. Anyway. I had a question. I was going to wait and see how the demo played out, but one of the questions I often face when making agents is the balance between making a fairly general agent that can do lots of things, by just giving it the tools and not much direction, versus being fairly controlled with it.

You're kind of trading off autonomy against reliability. With the one you set up before, I couldn't quite work out whether you've literally just given it the tools so it can do everything with them, or whether you've been quite controlled with it. How do you think about the trade-off, and when people use your tools, do they tend to fall more on one side than the other?

It's a more complex question than it looks, because of two things. When you give instructions, think of the space of probabilities I was talking about. The more vague you are, the more options you leave open: your agent will be able to do a wider range of things, but it might get them wrong.

The more specific you are, the more you restrict what it does, but it's going to do those things well more often. And then there's a question you didn't really ask, but I'm assuming it's on your mind: how many tools can I give to my agent?

Another common question we often get is: should I give all my tools to one agent, split the tools across multiple agents, or give only one tool per agent? The answer is the same: it's complex, but you need to think in terms of statistical probabilities.

It's stochastic. Imagine you have one agent and you give it all the tools. For every single question you ask, the LLM has to decide which of all those tools to call, so the probability that it gets it wrong is higher, right? The solution is to create agents that are more specialized by area of expertise, and do some kind of routing and multi-step selection.

Instead of having one agent with all the tools, you have, for example, a first agent that classifies the question (we were talking about classification earlier) and says, "This is a sales question" or "This is a product question." Then it routes to an agent that is more specialized in answering questions about sales or about products.

When the answer comes back, the first agent can ask, "Do I need to use another agent?" So an agent is like a multi-tool. You can imagine a tree of tools where each tool is either composite or a leaf. You can see it that way, and AutoGen allows you to build such topologies.
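Classify-then-route over a tree of agents can be sketched as a small composite structure. Everything here is hypothetical: `keyword_classifier` stands in for an LLM classification call, and the agents and tool names are illustrative, not AutoGen's API.

```python
class LeafAgent:
    """Specialized agent that only sees the tools for its area of expertise."""

    def __init__(self, name, tools):
        self.name = name
        self.tools = tools

    def handle(self, question):
        return f"{self.name} answered {question!r} using {sorted(self.tools)}"


class RouterAgent:
    """Composite node: classify the question, then delegate to a child agent.

    A child can itself be a RouterAgent, which gives a tree of agents.
    """

    def __init__(self, classify, children):
        self.classify = classify
        self.children = children

    def handle(self, question):
        return self.children[self.classify(question)].handle(question)


def keyword_classifier(question):
    # Hypothetical stand-in for an LLM classification call.
    return "sales" if "sales" in question.lower() else "product"


router = RouterAgent(keyword_classifier, {
    "sales": LeafAgent("sales-agent", {"query_sales_db"}),
    "product": LeafAgent("product-agent", {"search_product_docs"}),
})
print(router.handle("What are the total sales by region?"))
print(router.handle("Which tent is the lightest?"))
```

Each leaf chooses among only its own tools, which is the point of the design: fewer candidates per decision means fewer chances to pick the wrong one.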

So each one may specialize, each one working with a different database or a different track? Yeah. Yes. And then there's the coordinator, the maestro? Yes. But you can also have topologies where the different agents share memory, because sometimes you have a team of agents that are specialized.

And it's good that they are specialized, because you don't want them to pick the wrong tool, for the reason I just... Or hallucinate? Sorry? Or hallucinate? Yeah, but what prevents hallucination is grounding. Each one of those agents is going to be grounded in something.

And memory is a kind of grounding. For example, say you have multiple tools that answer questions about a user, and you want to remember the user's preferences, like the name of his or her pet. You probably want each of your agents to be able to use that information.

So you take that memory and connect it to each one of those agents. Even with multiple agents, each of them has access to that shared memory, because you want all of them to be able to access the pet's name.
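Sharing memory across agents boils down to handing every agent a reference to the same store. `SharedMemory` and `MemoryAwareAgent` are hypothetical names, and a plain in-process dictionary stands in for whatever persistent memory a real system would use.

```python
class SharedMemory:
    """Hypothetical key-value memory shared by several agents."""

    def __init__(self):
        self._facts = {}

    def remember(self, key, value):
        self._facts[key] = value

    def recall(self, key, default=None):
        return self._facts.get(key, default)


class MemoryAwareAgent:
    def __init__(self, name, memory):
        self.name = name
        self.memory = memory  # the same SharedMemory object is passed to every agent

    def learn(self, key, value):
        self.memory.remember(key, value)

    def greet(self):
        pet = self.memory.recall("pet_name", "your pet")
        return f"[{self.name}] How is {pet} doing?"


memory = SharedMemory()
sales_agent = MemoryAwareAgent("sales", memory)
support_agent = MemoryAwareAgent("support", memory)

sales_agent.learn("pet_name", "Biscuit")  # learned by one agent...
print(support_agent.greet())              # ...visible to the other
```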

To be honest, it's complex; many topologies make sense, depending on your use case. Where are we? Ah, finally. Is every... ah, one more. Ah, still building. One more. Can you please raise your hand if it's still building? And what did you try?

And you refresh or something else? I think it went back to the . OK. So you can-- I mean, I've already tried doors. Yeah. I don't like multiple times. OK. The way time is less. OK. Hopefully it's good. So is it still-- no. Is it still building? No. You're good.

So sorry. Can you raise your hand again for those for which it's still building? For whom it's still building? So just one. OK. So I'm going to move-- It's 20 seconds. No. OK. So hopefully it is actually 20 seconds. OK. I'm going to move ahead. Yes. I'm going to move ahead.

Sorry? Yeah. Oh, cool. OK. So everybody is there. Awesome. So let me move on. And let me see, because I want to reconnect. I heard 404. That's not good. OK. So I have the same environment as you. So I'm going to show you how to make the most of it.

Log in as admin? Yes. No. Sorry, I went too fast; I forgot. When you have the black screen, the username should be preselected as admin. There, you can click on Password here, which fills in the password box.

And then you hit Enter. Do not type: every time you see a T icon like this, you don't need to type; you just click on it and it auto-types. Is everyone on that screen? Yeah. OK. So we can start. OK. Apparently we're going too fast on this.

I'm going to move on. What you can do here, it's not mandatory, is click here and go to split windows to get more real estate. That way, I'm moving the instructions away, which gives me more space.

Then I'm going to go to Getting Started. Do we need Getting Started? I don't think we do. No, we do, actually. So we're going to move on to Getting Started. What we need to do is az login. I'm going to open a Terminal from here.

Type az login, then select Work or school account. This is very important. Continue. Even if you connected the first time with your Microsoft account, in this instance we are not going to use your account. We are going to use a temporarily generated account, which is a work account.

So you need to select work account. Then you select the user: in the Resources tab of the instructions, in the pop-up, click the user and it auto-types. Then Next. Then the password. Sign in. Yes. We're all set. There we go.

Okay, so now I'm logged in with az. I can go back to the instructions here, and we're going to have to type that command, because in Azure, some roles need to be assigned, and that's something we could not automate as part of the lab provisioning. So you need to execute it manually.

Just type that command, or paste it into the terminal here. You're going to have to agree to the warning and click Paste anyway. And sure. You're going to see a bunch of logs, and at the end, normally everything should be fine. Okay. Here we go.

So you have some JSON. Is everybody there? Yeah? Okay. Now, finally, we're going to be able to open the workshop. Type the command that starts with git clone. It checks out the Git repository from GitHub, builds the project, installs some VS Code extensions, and opens it in VS Code.

I'm going to go back here, type the command, Paste anyway, Enter. Like I said, it's cloning the repository and creating the Python virtual environment; we're going to use Python for this workshop. When you get into the VM, you just open Edge, the browser, and the instructions should be displayed right away.

You also have a form on the desktop right here. Yeah. Okay. You must have clicked on the link. I don't know how you got here, and I'm not sure what the...

Can I see? Yeah, I can start a little bit. Oh, here we go. So I was at this step, where I cloned the repository and installed the PDF extension.

Then we're going to move on, and we're going to open VS Code. There we go. Okay. So now we have VS Code here. So now we still have a few setup steps before we can get to coding. So you're going to have to go to the Azure AI Foundry here.

Sign in. For the sign-in, use the user from the instructions pane. Password. Okay. Stay signed in? Yes. Okay, Azure AI Foundry. Now we are in AI Foundry. Then we're going to search for... go down, go down. We're going to...

Oh, my God. Come on. Come on. Come on. So we're going to open the project here. So be careful. You need to select the project, not the hub. Once you are on the project, you can ignore those pop-ups. Okay? And we're going to need that project connection string here.

So you can copy it, and we're going to paste it in VS Code. Once you have copied the project connection string, go back to VS Code, find the .env.sample file, and rename it to remove the .sample suffix. Then paste the connection string here.

That connection string is what allows us to connect to the AI Foundry project in which we're going to deploy the AI agent. Be careful when you paste it not to drop any characters, and to paste it between the double quotes. Otherwise, it's not going to work. Once we've done that, we can move on.
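For readers following along outside the lab VM, here is a minimal, stdlib-only sketch of what the renamed .env file boils down to and how a truncated paste could be caught early. In the workshop itself, python-dotenv does the loading. The variable names `PROJECT_CONNECTION_STRING` and `MODEL_DEPLOYMENT_NAME`, and the four-part `<host>;<subscription id>;<resource group>;<project>` shape of the connection string, are assumptions based on my understanding of the preview SDK of that period. The values are placeholders.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=value lines (simplified: no multi-line values, escapes, or interpolation)."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one pair of matching surrounding quotes, e.g. "abc" -> abc.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def split_connection_string(conn_str: str) -> list[str]:
    """Split an assumed '<host>;<subscription id>;<resource group>;<project>' string."""
    parts = conn_str.split(";")
    if len(parts) != 4 or not all(part.strip() for part in parts):
        # A truncated paste usually shows up here as too few parts or an empty part.
        raise ValueError(f"expected 4 non-empty ';'-separated parts, got {len(parts)}")
    return parts


# Placeholder values for illustration only; yours come from the AI Foundry project page.
SAMPLE_ENV = """
PROJECT_CONNECTION_STRING="eastus.api.azureml.ms;00000000-0000-0000-0000-000000000000;rg-workshop;my-project"
MODEL_DEPLOYMENT_NAME="gpt-4o"
"""

env = parse_env(SAMPLE_ENV)
host, subscription_id, resource_group, project = split_connection_string(env["PROJECT_CONNECTION_STRING"])
print(project, env["MODEL_DEPLOYMENT_NAME"])  # my-project gpt-4o
```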

As you can see, we're going to use GPT-4o. You can save the file. Okay. Is everybody at this point? This is important, because now, finally, we have configured all the-- I've got someone over there. Can you guys go help? Mark? Is everybody else at this point? Okay.

So, do we have everyone at this step? Is everyone set up? Yes. So we can look at the code? Okay. First, I'm going to quickly explain what the files are. The main file we are going to look at today is main.py, which is the entry point for the application.

It's where everything is configured. The goal of this workshop is not to show you what code should look like in production. The goal is for you to understand how it works, to understand all the pieces, because once you understand them, you're going to be able to use any of the other frameworks.

I mean, this one or another one, it's going to be the same. What matters is to understand how an LLM works, how function calling works, how grounding works. And the sales data file, yeah, that's where the SQL query logic is. You have some stream handling. I mean, you have a bunch of things.

Another directory which is very important is the shared/instructions directory. OK, let's move on. The first step, and the most important thing, is to understand function calling. The first example is going to show you what it does. So in sales data, let's look at-- where am I?

Yes, sales data. That's where all the SQLite logic is. You have a bunch of functions. As you can see here, this function takes a SQL query. Let me scroll so that you can see it. The LLM is going to be the one generating the SQL query, compliant with SQLite syntax, and passing it to the function.

And the function is actually pretty dumb. It does not do much: it just uses the SQLite driver to execute the query, nothing more. That's because all the smartness of generating the SQL query is done by the LLM. When you think about it, LLMs are very good at language, and SQL is a kind of language, like Python or any other code.
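To make "pretty dumb" concrete, here is a minimal sketch of such a function. The name `fetch_sales_data_using_sqlite_query`, the `sales_data` table, and the JSON return format are assumptions for illustration; the workshop's own function is async and may differ in detail. All the function does is run the SQL it receives and serialize the rows. Returning errors as text, rather than raising, is a design choice that lets the LLM see what went wrong and try a corrected query.

```python
import json
import sqlite3


def fetch_sales_data_using_sqlite_query(conn: sqlite3.Connection, sqlite_query: str) -> str:
    """Execute a SQLite query written by the LLM and return the rows as a JSON string."""
    try:
        cursor = conn.execute(sqlite_query)
        columns = [description[0] for description in cursor.description or []]
        rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
        return json.dumps(rows)
    except sqlite3.Error as exc:
        # Hand the error back instead of raising, so the model can read it and retry.
        return json.dumps({"error": str(exc), "query": sqlite_query})


# Toy in-memory database with made-up rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_data (region TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales_data VALUES (?, ?)", [("Europe", 100.0), ("Africa", 50.0)])

print(fetch_sales_data_using_sqlite_query(
    conn, "SELECT region, SUM(revenue) AS total FROM sales_data GROUP BY region ORDER BY total DESC"
))
```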

And it happens that large language models are pre-trained on massive amounts of GitHub repositories, and a lot of them contain database queries. So if you use a common database, like PostgreSQL, MySQL, SQLite, or MongoDB, it's going to work. If you use an exotic database that nobody has ever heard of, it's not going to work.

Because the model needs to have been pre-trained on it. Here we go. OK. The exercise is in the main.py file, so I'm going to open main.py here. We're importing a bunch of packages, which are required to connect to AI Foundry.

They're used to get the models and to authenticate with Azure Identity. dotenv is the package used to load the environment variables from the .env file that we edited previously. You have some logging. OK. Let me move on. So here, that's how you connect to the AI Foundry workspace. Or project, I should say.

OK. And so at line 59, what you're going to do is uncomment the first instructions file, and then we're going to look at its content. If you go to the instructions directory here and open the function calling file, those are the instructions that are going to be passed to the agent: "You are a sales analysis agent for Contoso, a retailer of outdoor camping gear."

It explains what personality the agent should have and what its mission should be: help users by answering sales-related questions. It lists which tools are available. So here, the sales data tool: use only the Contoso sales database via the provided tool, with the name of the function.

To be honest, those instructions are optional. Actually, this relates to the question you were asking earlier. I didn't get your name, but-- James. James. You were asking how specific you must be. The tool comes with its own schema, and the LLM is going to understand the JSON schema of the tool, which gives the function name, the parameters, and an explanation of what each parameter is.

In the system instructions, you can add more information that is specific to your application or to the agent using the tool. Then you have information regarding formatting, localization, examples, et cetera. It's quite thorough. And here we are specifying the instructions file, and we are also specifying which tools to use.

Here we are going to use the functions, which contain only one function tool: the async function I showed you earlier. Here we go. Also, the documentation here, the Python docstring, is passed to the LLM. So what you write here is important, because the LLM is going to interpret that documentation, together with the parameter names and their documentation, to understand how to call your function.
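The idea that a function's name, parameter names, and docstring become the tool description the LLM reads can be sketched with the standard `inspect` module. This is not the Azure SDK's internal code. `describe_function` is a hypothetical helper that produces an OpenAI-style function definition, and it assumes a `:param name: description` docstring convention. The sample docstring is also illustrative.

```python
import inspect
import json

# Map Python annotations to JSON Schema types (simplified: only a few scalar types).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_function(func) -> dict:
    """Build a JSON-schema-like tool definition from a function's signature and docstring."""
    doc = inspect.getdoc(func) or ""
    summary_lines, param_docs = [], {}
    for line in doc.splitlines():
        stripped = line.strip()
        if stripped.startswith(":param "):
            # Expected shape: ":param name: description"
            name, _, text = stripped[len(":param "):].partition(":")
            param_docs[name.strip()] = text.strip()
        elif stripped and not stripped.startswith(":"):
            summary_lines.append(stripped)

    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {
            "type": _JSON_TYPES.get(param.annotation, "string"),
            "description": param_docs.get(name, ""),
        }
        if param.default is inspect.Parameter.empty:
            required.append(name)

    return {
        "name": func.__name__,
        "description": " ".join(summary_lines),
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


async def fetch_sales_data_using_sqlite_query(sqlite_query: str) -> str:
    """Query the Contoso sales database.

    :param sqlite_query: A well-formed SQLite query that extracts the requested sales data.
    :return: The query results as a JSON string.
    """
    raise NotImplementedError  # body omitted; only the metadata matters here


print(json.dumps(describe_function(fetch_sales_data_using_sqlite_query), indent=2))
```

The practical takeaway matches the talk: a vague docstring or a cryptic parameter name shows up directly in what the model sees.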

Then I'm going to open a terminal through the command palette. To be honest, I never remember where the terminal is, so I cheat and create a terminal here. There we go. Remember, we are on Windows; usually I have a Mac.

Okay, we have looked at this, and I have explained all of this to you. Run. So now we're going to hit F5. No, here, F5. Oh, I could do that too: I could click the play button here. Okay. You don't even need to open the terminal, or do what I just did.

It's just a habit I have, but you can just click Run here and it's going to work. Hopefully; we'll see. It's doing what I mentioned earlier: first it creates the agent. Once the agent is created, it's assigned an ID, because it's stateful.

The agent is actually an entity which lives in the AI Foundry project. Then we enable automatic function calls. We create a thread. The thread is basically the conversation, which is stateful too, because you don't have to save the messages yourself. Then we can finally enter a query.
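What enabling automatic function calls saves you from writing is roughly a dispatch step. When the model requests a tool by name with JSON-encoded arguments, the runtime looks up the matching Python function, calls it (awaiting it if it is async), and sends the output back to the run. The sketch below is a hypothetical, SDK-free version of that step. The `ToolCall` shape, the `dispatch` helper, and the stand-in tool are illustrative assumptions, not the Azure AI Agent Service API.

```python
import asyncio
import inspect
import json
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolCall:
    """Hypothetical shape of a tool call requested by the model."""
    call_id: str
    name: str
    arguments: str  # JSON-encoded keyword arguments, as produced by the model


async def dispatch(call: ToolCall, registry: dict[str, Callable[..., Any]]) -> dict:
    """Run the requested function and package its output for the model."""
    func = registry.get(call.name)
    if func is None:
        output = json.dumps({"error": f"unknown tool {call.name!r}"})
    else:
        kwargs = json.loads(call.arguments or "{}")
        result = func(**kwargs)
        if inspect.isawaitable(result):
            result = await result  # async tools, like the workshop's sales function
        output = result if isinstance(result, str) else json.dumps(result)
    return {"tool_call_id": call.call_id, "output": output}


async def get_region_count(region: str) -> str:
    # Stand-in tool with a fake answer, for illustration only.
    return json.dumps({"region": region, "rows": 3})


registry = {"get_region_count": get_region_count}
reply = asyncio.run(dispatch(ToolCall("call_1", "get_region_count", '{"region": "Europe"}'), registry))
print(reply)
```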

I'm going to go back, because we have a list of questions we can ask here. "What were the sales by region?" I go back to the terminal and paste it. No, sorry, wrong terminal. This one. If it works correctly, we'll see. Yes, it does work. To be honest, every time I see that, it still amazes me, even though I've been doing this for a long time.

Because from the simple question we asked in plain English, and based on its knowledge of the database schema, which is somewhere in the code, it automatically generates such a query. Let me go back to the question I asked: "What were the sales by region?"

We are asking what the sales are. Where do they come from? From sales data, because the query selects from the sales data table, and we want to group by region. So it sums the revenue, groups by region, and automatically limits the results to 30 rows. I believe that's because there is an instruction in the instructions file saying that by default you should limit results to 30.

That's how we get that great table. We can see that Africa had 5.2 million, Asia-Pacific 5.3 million, et cetera. So we have our table, and now we can ask follow-up questions. "What was last quarter's revenue?" It gets more tricky.

To be honest, I don't know the schema of the database very well, so even I would have to look at it if I had to write that SQL query myself. I'm sure you've all done that. Remember, the goal of such an agent is to enable non-technical people to use technical tools.

And in this case, the technical tool is a SQL database. So here, using-- Oh. I don't remember that one. What year? Let's try 2024. Okay, this is interesting. The question was, "What was last quarter's revenue?" So-- yeah, it selects the sum of the revenue from sales data where year equals 2024.

And we asked for the last quarter-- sorry, did we say last? Last quarter. It selected months 7, 8, 9. I'm not sure why it selected those three months as opposed to, for example, 10, 11, 12. Let me correct it, actually. It's an interesting experiment. Every time we do that, obviously, we get different responses, right?

So actually-- I think it's because the model's last training date was November of '24, so it's thinking the last quarter before November is 7, 8, 9. So it's possible. But actually, let's say we're in April. Yeah, it works. I just said, actually, we're in April, which means that the last quarter is January, February, March.

As you can see, it changed the query to months 1, 2, 3. One thing we could do to improve this example is add a date tool: a tool the LLM can call to ask for the current date, so that we would not have to enter it manually.
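One possible shape for that date tool is sketched below: a function the agent could call to learn today's date, plus a helper that maps a date to the last completed calendar quarter. Both function names are hypothetical. Reading "last quarter" as "last completed calendar quarter" is an assumption about what users mean; a fiscal calendar would need different logic.

```python
from datetime import date


def get_current_date() -> str:
    """Tool the LLM could call: return today's date in ISO format (YYYY-MM-DD)."""
    return date.today().isoformat()


def previous_quarter(today: date) -> tuple[int, list[int]]:
    """Return (year, months) of the last completed calendar quarter before `today`."""
    current_q = (today.month - 1) // 3  # 0 for Jan-Mar, 1 for Apr-Jun, ...
    if current_q == 0:
        year, q = today.year - 1, 3  # January-March: last quarter is Q4 of the previous year
    else:
        year, q = today.year, current_q - 1
    return year, [3 * q + 1, 3 * q + 2, 3 * q + 3]


# "Let's say we're in April" (year chosen for illustration): last quarter is January-March.
print(previous_quarter(date(2025, 4, 15)))  # (2025, [1, 2, 3])
# From November 2024, the last completed quarter is July-September.
print(previous_quarter(date(2024, 11, 5)))  # (2024, [7, 8, 9])
```

The November case reproduces the model's 7, 8, 9 choice, which is consistent with the training-date explanation offered from the audience.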

Well, I'm going to leave that as an exercise. "Which products sell best in Europe?" This one is interesting because we are generating a query against a different dimension, the product type. As you can see here, we are grouping by product type. Okay, I'm going to move on because you understand the concept.
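The two aggregations discussed so far can be reproduced against a toy SQLite table. The table name `sales_data` and the columns `region`, `product_type`, and `revenue` follow the names mentioned in the demo. The rows are made up and do not reflect the workshop's data, and the queries show the general shape the LLM produced, not its exact output. The only difference between the two questions is the grouping column and a `WHERE` filter.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_data (region TEXT, product_type TEXT, revenue REAL)")
# Made-up rows for illustration only.
conn.executemany(
    "INSERT INTO sales_data VALUES (?, ?, ?)",
    [
        ("Europe", "Family Camping Tents", 300.0),
        ("Europe", "Backpacking Tents", 120.0),
        ("Europe", "Family Camping Tents", 80.0),
        ("Africa", "Backpacking Tents", 200.0),
    ],
)

# "What were the sales by region?": total revenue per region, capped at 30 rows.
by_region = conn.execute(
    "SELECT region, SUM(revenue) AS total_revenue FROM sales_data "
    "GROUP BY region ORDER BY total_revenue DESC LIMIT 30"
).fetchall()

# "Which products sell best in Europe?": the same aggregation along another dimension.
europe_by_product = conn.execute(
    "SELECT product_type, SUM(revenue) AS total_revenue FROM sales_data "
    "WHERE region = ? GROUP BY product_type ORDER BY total_revenue DESC LIMIT 30",
    ("Europe",),
).fetchall()

print(by_region)          # [('Europe', 500.0), ('Africa', 200.0)]
print(europe_by_product)  # [('Family Camping Tents', 380.0), ('Backpacking Tents', 120.0)]
```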

I'm going to ask the last one, because I want to move on to the next examples and see what happens when you mix multiple data sources. Okay. Same one, by region. Let's move on. Yes. Yes. Yes, good question. So let's go in here. Okay. Yes, sir. Thank you. Okay, so this is how it works.

At initialization of the agent, we load the instructions from the instructions files. If I go back to the load-and-replace step and search for this placeholder in the function calling instructions file, there we go: the sales data tool. I went too fast on this when I was going through the file and explaining it to you, but what happens here is that the agent's instructions are going to contain the schema of the database.
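The load-and-replace step can be sketched as follows: read the schema out of SQLite and substitute it into a placeholder in the instructions template before the agent is created. The placeholder name `{database_schema_string}`, the template wording, and the optional column descriptions are assumptions for illustration. The descriptions are a tiny version of the "document your schema" idea raised in the Q&A, useful when column names are cryptic.

```python
import sqlite3
from typing import Optional


def describe_schema(conn: sqlite3.Connection, descriptions: Optional[dict[str, str]] = None) -> str:
    """Render every table and its columns as plain text the LLM can read."""
    descriptions = descriptions or {}
    lines = []
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        lines.append(f"Table {table}:")
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) for each column.
        for _cid, column, col_type, *_rest in conn.execute(f"PRAGMA table_info({table})"):
            note = descriptions.get(f"{table}.{column}")
            suffix = f"  -- {note}" if note else ""
            lines.append(f"  {column} {col_type}{suffix}")
    return "\n".join(lines)


TEMPLATE = (
    "You are a sales analysis agent for Contoso.\n"
    "Use only this database schema:\n{database_schema_string}\n"
)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_data (region TEXT, ord_amt REAL)")  # hypothetical cryptic column
schema = describe_schema(conn, {"sales_data.ord_amt": "order revenue in USD"})
instructions = TEMPLATE.replace("{database_schema_string}", schema)
print(instructions)
```

`str.replace` is used instead of `str.format` so that any literal braces elsewhere in a long instructions file do not break the substitution.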

That's how the LLM knows what query to generate and which tables and columns are in the database. Without it, it would not work at all. Like, no way. It would completely hallucinate the schema. You're basically grounding the LLM with the schema of the database. Can I be more specific? Like, what if one has some confidential data that I don't want it to return?

Yes, you could. Yeah. And actually, you don't even have to be too fancy for that. You can literally add it to the instructions file: "Column X of table Y is confidential. You must never return it." But it is not bulletproof.

It is not guaranteed that the LLM is going to follow those instructions. If confidentiality matters and you need to restrict access, somebody mentioned IAM, I think it was you, and you should implement exactly that: add column-level access control, and generally do what you would do with normal code.

Even though normal code is deterministic and the odds of a user getting access to something he's not supposed to are low, it's still possible: you can still use SQL injection and all sorts of hacking to exploit normal code. With LLMs, obviously, it's a whole different area. So you should implement the exact same safety measures you would for a normal program.
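As one concrete way to enforce a column restriction in code rather than in the prompt, Python's `sqlite3` driver lets you register an authorizer callback. SQLite consults it while compiling each statement, and for `SQLITE_READ` it passes the table and column names. The sketch denies reads of a hypothetical `customers.email` column no matter what SQL the LLM generates. A server database would use its own grants or column-level permissions instead.

```python
import sqlite3

# Hypothetical confidential columns, as (table, column) pairs.
CONFIDENTIAL = {("customers", "email")}


def authorizer(action, arg1, arg2, db_name, trigger_name):
    """Deny any read of a confidential column; allow everything else."""
    if action == sqlite3.SQLITE_READ and (arg1, arg2) in CONFIDENTIAL:
        return sqlite3.SQLITE_DENY
    return sqlite3.SQLITE_OK


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, email TEXT)")
conn.execute("INSERT INTO customers VALUES ('Ana', 'ana@example.com')")
conn.set_authorizer(authorizer)  # installed after setup, so only later queries are checked


def is_allowed(sql: str) -> bool:
    """Return True if the statement compiles and passes the authorizer."""
    try:
        conn.execute(sql)
        return True
    except sqlite3.DatabaseError:  # a denied statement surfaces as "not authorized"
        return False


print(is_allowed("SELECT name FROM customers"))   # True
print(is_allowed("SELECT email FROM customers"))  # False
```

`SELECT *` is rejected too, because it reads every column, including the confidential one.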

Where were we? Okay. Yes. Suppose the schema is kind of perfect, a schema that points our agents to the right table and the right column-- I'm not sure what you mean by perfect, because the schema of a SQL database has to be perfect, I hope.

At least matching the data. Yeah, but I mean, it depends on who created it; it depends on the names of the columns. So you mean clear? You mean interpretable? Okay. Yes. Yes. So in this case, but in a real world-- Same answer as regarding security.

It's the exact same answer. He asked how he can make sure that confidential information is not returned. You add an instruction saying, do not use that column, it is confidential. To answer your question, what you would do is document your schema. You would say that this table, which has a cryptic name that nobody understands, is actually the list of orders.

Yeah. So it's kind of a semantic layer. Yeah. Yes. That's exactly how it would work. Okay. Let's-- Where am I? I'm lost. We did that. Okay. Okay. Basically explaining what I explained. Okay. Blah, blah, blah. Breakpoint, no. Okay. Okay. So this is interesting. Let me try that one. And I'm going to increase here.

So: "What regions have the highest sales?" Okay, that's very interesting. Why? Because of the previous question, which was total shipping costs by region. Remember that the LLM has access to the context of the discussion, the past messages. It's stateful: it has all the context of the questions and answers that were previously asked.

And it uses it when you ask a new question. When I asked for total shipping costs by region, the information required to answer was not in the previous answers, so it executed a SQL query. But now, when I ask what regions have the highest sales, it can use a table that was generated previously.

There is no need to execute one more SQL query, because it has everything it needs. Exactly like you would. Without being anthropomorphic, if you were reasoning about the problem, you would ask yourself, do I need to write a SQL query to get that information?

No, you don't; you have it already. The LLM is going to do that reasoning. That's why you don't see any SQL query executed here. Okay. Yes? How do we know it's doing math the right way? Because-- It's not doing math the right way. But here, we did not ask it to do math.

We asked it to-- oh, you mean because there is a top. Okay. If you're adding the numbers-- So, highest-- okay, that's a very interesting question. Finding the highest sales is not about doing math; it's about comparing. Which is bigger or smaller, 11 or 1? And that's easy, because it does not-- I mean, easy.

What I mean is that LLMs are bad at math. They are bad at arithmetic: addition, multiplication, division. They are going to get it wrong. And it's funny, because I have an anecdote from this morning. We had a team meeting, and you were there, and we were wondering what the compound effect would be after a year of a daily rate of increase of 1%.

I remembered the formula for this: you take the rate, add 1, and raise it to the power of the number of periods, right? I computed it and got a number. Somebody else was like, "Oh, that's big," and he typed it into GPT.

And he got a different answer. The LLM got it completely wrong. It was very convincing; it looked like it was doing it right, but it was not. The original answer, which I typed into Google Sheets as a formula, was correct. So to answer your question: when you need to do math, you need to use a tool.
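The formula from the anecdote, written out: with a starting value $V_0$ growing at a rate $r$ per period for $n$ periods, the growth factor is $(1 + r)^n$. For 1% per day over a year ($r = 0.01$, $n = 365$), the value ends up roughly 37.8 times where it started. That is exactly the kind of number an LLM can state convincingly and still get wrong.

```latex
V_n = V_0 \, (1 + r)^{n}, \qquad \frac{V_{365}}{V_0} = (1 + 0.01)^{365} \approx 37.78
```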

Here we're using Code Interpreter, which can do math. But to be honest, I've tried, and Code Interpreter is not the best way to do math. It's good at generating diagrams, reading files, and extracting information from a CSV or other types of structured documents. It's very good at that.

If you try to do math with Code Interpreter, for whatever reason, which I cannot explain, it does not work very well. When you want to do math, it's better to create your own tool, like calculate, which takes a LaTeX expression or some other mathematical formalism representing an expression.
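Here is a minimal sketch of such a `calculate` tool. It assumes plain Python arithmetic syntax rather than LaTeX, since parsing LaTeX would need a separate library. The tool walks the expression's syntax tree with the standard `ast` module and accepts only numbers and a few whitelisted operators. This lets the LLM delegate arithmetic without being handed a general-purpose `eval`. The function name and the exponent cap are hypothetical choices.

```python
import ast
import operator

# Whitelisted operators: anything else in the expression is rejected.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def calculate(expression: str) -> float:
    """Safely evaluate an arithmetic expression such as '(1 + 0.01) ** 365'."""

    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)) \
                and not isinstance(node.value, bool):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            left, right = evaluate(node.left), evaluate(node.right)
            if isinstance(node.op, ast.Pow) and abs(right) > 10_000:
                raise ValueError("exponent too large")  # guard against runaway computations
            return _BINARY[type(node.op)](left, right)
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](evaluate(node.operand))
        raise ValueError(f"unsupported expression element: {ast.dump(node)}")

    return float(evaluate(ast.parse(expression, mode="eval").body))


print(round(calculate("(1 + 0.01) ** 365"), 2))  # 37.78
```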

You have plenty of mathematical calculators out there that you can use to do the actual, accurate calculation, so that the LLM can delegate to a tool the responsibility of getting calculations right. Exactly as you, as a human, would do it. Because me, I don't know how to compute 1.01 to the power of 365 in my head.

I use a calculator for that. And I'm going to have to reconnect again. Oh my god, this is annoying. OK. So, where was I? OK. "What were the sales of tents?" Here we get a query: we need to query the database again, because the previous answers do not contain that information.

We don't have the drill-down of tents in the United States in April 2022, so it needs to execute a SQL query. OK. I mean, you get the point. Let's move on. I'm going to stop the tool. Exit. OK. Oh my god. What's happening now? No, I don't want to meet and chat with friends and family right now.

OK. Sorry, mom. OK. Let's move on. Where am I? OK. Grounding with documents. Very important. So we're going to do RAG with a vector store. You were asking whether we're using a vector database; for whatever reason I was blanking, but yes, we are. OK. So we're going to uncomment those lines in main.py.

So I'm going to go here. Go back up. I think it's back up. Let me search for-- yeah, here are the instructions. File search: I'm going to comment that one and uncomment the file search lines here. Can I do that? Yes. Cool. No. OK. It works-- no.

OK. Oh my god. Can I do that? No. Sorry, I was trying to select multiple lines at once, but I'm going to have to do it one line at a time. OK. So now we're creating a vector store. I'm not going to go into the details of the creation.

There is a utility which does all the heavy lifting. Basically, it creates an AI Search vector store and reads all the documents which are here. Oh my god, the Wi-Fi today. I need to click on this. OK. I'm going to try to reconnect.

OK. Password. OK. Like I was saying, I was going to show you the files. Where are my files? Oh, here we go. It's a PDF which contains all the product information for our products: tents, whatever. What this function does is read the PDF, chunk it into small pieces, and upload all those pieces into the vector database.
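To make "chunk it and search it" concrete, here is a deliberately simplified sketch. It splits text into overlapping word windows and ranks chunks by cosine similarity of word counts. A real vector store, like the one the workshop creates, uses learned embeddings rather than raw word counts. The bag-of-words scoring here is a named stand-in for that, and the product text is made up, with placeholder brand names.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words, each overlapping the previous by `overlap` words."""
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a crude stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(chunks: list[str], query: str, top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(vectorize(c), q), reverse=True)[:top_k]


# Made-up product text standing in for the workshop PDF.
catalog = (
    "Brand A makes family camping tents with room for six people. "
    "Brand B makes lightweight backpacking tents for long hikes. "
    "Both brands include a repair kit with every tent."
)
chunks = chunk_words(catalog, size=10, overlap=2)
print(search(chunks, "Which brand makes backpacking tents?", top_k=1))
```

The overlap between windows means a sentence cut at a chunk boundary still appears whole in at least one neighboring chunk, which is the usual reason chunkers overlap.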

I'm not going to go into the details. If we have time at the end, I can explain how that works in excruciating detail, but not right now. So, tool sets, yes. I think I wanted to show you something else. For whatever reason, I'm blanking. I forgot. Yes.

I remember. I wanted to show you the difference between the instructions file we were using before and this one: function calling and file search. Compare Selected. I'm using the diff tool included in VS Code to show you the differences between the two sets of instructions.

In the file search instructions, we have new instructions. Now we have a Contoso product information vector store, and a search tool which allows searching the vector database. And we have a few differences in the content and clarification guidelines, such as the kind of questions that you can ask.

You know, new questions like "What brands of tents do we sell?", which you could not ask before, because the brand information is not in the sales database. That kind of thing. What's interesting with this use case, and really, the colleague who created that content made something very interesting, is that it lets us show many things.

Think about how, before, when we had to mix different data stores, different databases, we had to load them into some kind of big OLAP cube system, whatever, and write very complex aggregate queries to link things together.

Now you can do that with AI. Here, when I want to join information from a database with information in a PDF, which is not structured, I can use the LLM for that, which is quite extraordinary. So when I hear all the skeptics about AI, I just don't understand, because what you can do is just insane.

Anyway, I'm going to skip the rest of the differences between those instructions; I just wanted to show you that. Now we're going to execute it, so I can click Run. OK. As I said, it's uploading the PDF. And actually, the chunking-- I said it was chunking.

Actually, the chunking is done by AI Search, I think. I forget whether it's client-side or server-side, but I think it's server-side. It's one of the features of Agent Service that you do not have to take care of chunking yourself. It's creating the blah, blah, blah. OK, OK. OK. Same.

So now we can type our query. I explained this. Run, run. OK. Oh, here are our questions. "What brands of tents do we sell?" Now we can ask this. What's interesting is that this is not using the SQL database; it is only reading from the PDF.

Nothing else, because no sales information is required to answer that question. Also, remember that I killed the previous instance of the agent and created a new one, so all the past conversation was lost, on purpose. I could have reused the same conversation if I wanted to keep the previous context, but I didn't want to.

OK. So Outdoor Living and Alpine Gear, plus some information about them. OK. Cool. Simple enough. Now, this one is interesting: we're asking which hiking shoes we sell. Are we going to query the database? No. Not yet. Oh, OK. That's because we do not sell hiking shoes, so it's not using the SQL database.

It's just using the PDF, and what's interesting here is that there is no information about hiking shoes in the PDF. OK. Let's move on. Now it's referring to the previous brands we talked about, Outdoor Living and Alpine Gear: "What product types and categories are these brands associated with?"

Same: only the PDF. Outdoor Living does tents, for camping and hiking. Both, actually; both do the same. To be honest, it could have summarized that since both do the same, but it did not. "What were the sales of tents?" Oh, now it's interesting, because now we are asking for specific sales information about a specific year.

So now it's going to need to query the SQL database. We are asking for the sales of tents in 2024 by product type, including the brands associated with each. What's very interesting here is that the product type and the revenue come from the SQL database.

But the brand does not; the brand comes from the PDF. Because the previous questions retrieved the mapping between product type and brand, it's capable of adding the brand to the table here. I'm not going to do the demonstration of what happens without that context right now.

I'm going to do it at the end. I'm going to kill the program, restart it, and re-ask the exact same question, and you're going to see the difference in the answer. Hint: it's because I'm going to ask that question without the previous questions.

Remember when I said that an agent can be goal-oriented and work relentlessly until it achieves the goal? In this instance, we have not implemented that loop, so it does not have the planning capability, and it's going to fall short. It's not going to be able to say, oh, in order to answer that question, I need to look into the PDF and into the-- well, you might get lucky, to be honest.

It might do it out of luck, because sometimes it does: sometimes the planning is simple enough that it doesn't need multiple steps. But if it gets slightly complicated and you need multi-step planning, it's going to fall short. Okay. Next one. "What were the sales of Alpine Gear in 2024 by region?"

Very interesting too, because the database does not contain the brand. So here, what it does is filter with WHERE product type LIKE: it automatically generates a LIKE matching criterion based on the brand, because it knows that the brand makes family camping tents. Now we're going to generate charts, and we're going to use Code Interpreter for this.

Oh, and like I told you before, I'm going to do an experiment. I'm going to ask the last question again-- yeah, this one. And this one is most likely going to fall short. So now I'm executing the agent again.

Yes. Okay, and I'm going to ask the last question. Right. In theory, it's not going to work. Yes. Oh. What the heck? What the heck? Well, it worked. I guess it figured out that it needed to use the product information. Or maybe there is an instruction in the file search instructions saying that for sales questions requiring product information, it should go read the PDF.

It's possible. It's possible. So, yeah, I think that's what happened here. Question on the board after the report. Oh, you tried? Yeah. I might have to quit and launch again. Yeah. I know my response is different from Gary's. I got-- it gave me Alpine and Alp-- it doubled up on the second result.

It-- it what? It doubled up on the-- when you did it, it said Outdoor Living was for backpacking, and for camping tents it was Alpine Gear. But for mine-- it said I wouldn't put a little bit of hope. OK. It's just one after the other.

OK. Interesting. Yeah. It's not deterministic, so it's not perfect; it's going to make mistakes, for sure. Is that the whole eval thing? Like, I guess that's where I struggle sometimes. Yes. It is that whole-- I'm giving a breakout session on evals, by the way. OK.

Tomorrow. OK. At 12:30, I think. Or 2:00 PM. I forget. But I'm going to give a breakout session tomorrow specifically on how to evaluate agents, because, yes, it's-- It's a little tricky, because, well, the answer might be right. It's a problem. It's hard. OK. Let's move on.

Code Interpreter. So now we're going to go back to main.py, and I'm going to comment that one. OK. Every time, we keep the previous tools and just add new tools. OK. So we can also just have all the tools running, right? Yes. At the end, when I uncomment everything, all the tools will be working at the same time.

But the point of uncommenting them step by step is that it's a workshop: it's so that you understand exactly how everything works. OK. So, run. We're going to look at the instructions and compare the code interpreter instructions with the file search ones. Compare. Here, with Code Interpreter, we add visualizations.

We add a section to the instructions which explains how to do visualizations. For questions involving visualizations, we basically say, hey, use the code interpreter to generate charts. And at the end, yeah, PNG. OK. Let's move on. Main. There we go. OK. "Show sales by region as a pie chart."

Remember, in the slide I showed before, we first asked for the sales by region, and then for a pie chart. Here, we're asking for both at the same time. And it's tricky, because basically what this is doing is calling two tools.

Sometimes it's simple enough that the reasoning capability of the LLM is sufficient, in one question and answer, to say, oh, I need to call that tool and then this one. And sometimes it falls short and does not have enough reasoning power.

Then you need to use a multi-step system. OK. Here, we saved the results, so I'm going to-- OK. Here is the pie chart with our answer. I can try to zoom to show-- OK. So we have the drill-down of revenue by region.

OK. Cool. I'm going fast on this, but this is incredible. The agent is literally generating Python code to produce the pie chart. What that means is that you can generate pretty crazy diagrams; you can be pretty imaginative in the type of information that you want to display.

An anecdote: last year at this conference, I gave a talk on Code Interpreter, because at the time it was a hot topic; it was brand new. I do kitesurfing, and I had extracted one of my sessions, where, you know, you're on the water and you do tacks, you do turns, you do jumps.

I had the XML file of my session, recorded on my watch and exported. I imported it into Code Interpreter and asked it to calculate how many times I turned or jumped. It was able to read the XML, because XML is a very rich, strict, and self-describing format.

It extracted all the information and the structure, and generated the Python code to go through all the data points in the file and calculate how many turns and jumps I did and how high I jumped during my session. It's a pretty incredible tool when used right. Anyway, end of the anecdote. It shows blah, blah, blah, blah.

Okay. Next: download the JSON. I forget what this one does, to be honest. Yeah, okay. It can be useful, but let's move on. Continue asking questions about-- yeah, because there were no tools involved. Yeah, it just interpreted whatever was in the context before and produced the JSON for download.

Blah, blah, blah. What would be-- huh, interesting. It's really crazy what you can do; you can be really imaginative. By the way, one of the latest things you hear a lot about in AI is deep research. Under the hood, deep research is an agent that has those kinds of tools.

It's just that it's been developed by an army of engineers who made sure that everything works well, but that's basically what it's doing. I don't know how it's implemented, but I'm assuming it's some kind of orchestration system that mixes tools together and loops until it achieves a goal.

And it can run for hours. Anyway. "What would be the impact of a shock event: a 20% sales drop in one region?" Oh, yeah. Can you elaborate on the difference between this, which is not looping, and using AutoGen, where you can loop through the different tools it needs?

Yeah. I see that it has planning and orchestration; it's able to use several tools at the same time. Two. Yeah, two. Fine. Let me try to find an example. Say you ask a question, and you have two data sources, right? One is product, one is sales.

Imagine you ask a question for which it is obvious that you need to query one, but it's not obvious that you need to query the other. The information is in the other, but you have no hint in the phrasing of the question that you should be querying the second in order to answer the question as a whole.

It would fall short. This system would fall short, but not the AutoGen one? Yes, depending on how you configure it. And whether this one falls short also depends on the instructions you gave it, because you can hack your way through those kinds of problems: you can add instructions saying, hey, if the user asks that kind of question, you should also go look into the other data source.

But the difference with a multi-agent, multi-step planning system with a more complex topology is that you have an LLM that, every time you get an answer, looks at it and asks itself: is this answer correct? Does it actually answer the question?

And it's capable of extrapolating. It can think something like: huh, this is not answering the question. What do I have at my disposal? What more could I do to try to answer it? Maybe in the first step it did not query the second data store, but now it's going to say: actually, maybe we should go look in that data store, because the answer might be in there.

Or we should query the internet. See what I mean? And AutoGen? Yes. So you can query it, right? Yes. With AutoGen, Semantic Kernel, or other, more collaborative multi-agent systems. But today is an introductory workshop, so we won't go into those more complex systems.
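The self-checking loop described above might look roughly like this.

- It assumes the data sources are tried in a fixed order: products, then sales, then the internet.
- After each step, a critic decides whether the gathered context answers the question.
- The sources, their contents, and the keyword critic are hypothetical stand-ins. In a real system the critic would be an LLM call.

```python
from __future__ import annotations

from typing import Callable


def answer_with_reflection(
    question: str,
    sources: list[tuple[str, Callable[[str], str]]],
    is_sufficient: Callable[[str, str], bool],
) -> tuple[str, list[str]]:
    """Query sources in order. After each one, a critic checks whether the
    gathered context answers the question; escalate only if it does not."""
    context = ""
    consulted: list[str] = []
    for name, query in sources:
        context += query(question) + "\n"
        consulted.append(name)
        if is_sufficient(question, context):
            break
    return context, consulted


# Hypothetical sources, tried in order: products, then sales, then the web.
sources = [
    ("products", lambda q: "Tent A: beginner, 2-person"),
    ("sales", lambda q: "Tent A: 120 units sold last quarter"),
    ("internet", lambda q: "competitor listings"),
]


# Stand-in critic: a real system would ask an LLM "does this answer the question?"
def critic(question: str, context: str) -> bool:
    return "units sold" in context


context, consulted = answer_with_reflection(
    "How well do our beginner tents sell?", sources, critic
)
print(consulted)  # ['products', 'sales']
```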

That's a workshop of its own, just looking at multi-agent systems. Yes? So you said that sometimes the answer is not obvious, or the question, the prompt itself, doesn't point to the right set of data. Yes, that's exactly what we were saying. That's where a multi-agent system comes in.

And a coordinator, when you use the word coordinator, that's actually one of the patterns; we call it a topology. It's one of the topologies of multi-agent systems, and you can build such a topology with AutoGen. Heads up, though: it's not trivial. Not trivial at all. The hardest question in multi-agent systems is, in my opinion, how to specify the definition of done.
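A coordinator topology with an explicit definition of done can be sketched as follows.

- The `Coordinator` class, its keyword router, the two specialist agents, and the "stop after two results" predicate are hypothetical simplifications, not AutoGen's API.
- The point is that the stopping condition has to be written down somewhere, which is exactly the hard part.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Coordinator:
    """Hypothetical coordinator topology: route each subtask to a specialist
    agent, and stop as soon as an explicit definition of done holds."""
    specialists: dict[str, Callable[[str], str]]
    route: Callable[[str], str]                # subtask -> specialist name
    is_done: Callable[[dict[str, str]], bool]  # the "definition of done"
    results: dict[str, str] = field(default_factory=dict)

    def run(self, subtasks: list[str]) -> dict[str, str]:
        for task in subtasks:
            if self.is_done(self.results):
                break
            self.results[task] = self.specialists[self.route(task)](task)
        return self.results


coordinator = Coordinator(
    specialists={
        "products": lambda t: f"product facts for: {t}",
        "sales": lambda t: f"sales figures for: {t}",
    },
    # Naive keyword routing; a real coordinator would use an LLM here.
    route=lambda t: "sales" if "sales" in t else "products",
    # Hypothetical definition of done: stop once two subtasks have results.
    is_done=lambda r: len(r) >= 2,
)
results = coordinator.run(
    ["list beginner tents", "sales of beginner tents", "compare with last year"]
)
print(list(results))  # ['list beginner tents', 'sales of beginner tents']
```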

So we did not open it. Did we? No, we did not. OK. So this is a simulation: "Impact of a 20% sales drop in North America on global sales distribution," as a percentage of global sales. Oh, yeah. Because North America's sales are dropping here, its share obviously goes down, while the share of the other regions goes up.
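The arithmetic behind that result is simple. If North America's sales drop by 20%, the global total shrinks, so every other region's share (its sales divided by the new total) rises even though its own sales are unchanged. The regional figures below are made-up illustration values, not the workshop's data.

```python
from __future__ import annotations


def shares(sales: dict[str, float]) -> dict[str, float]:
    """Each region's percentage of global sales."""
    total = sum(sales.values())
    return {region: 100 * value / total for region, value in sales.items()}


def apply_shock(sales: dict[str, float], region: str, drop: float) -> dict[str, float]:
    """Return a copy of `sales` with one region reduced by `drop` (0.2 = 20%)."""
    shocked = dict(sales)
    shocked[region] = sales[region] * (1 - drop)
    return shocked


# Made-up illustration figures, not the workshop's data.
baseline = {"North America": 500.0, "Europe": 300.0, "Asia-Pacific": 200.0}
shocked = apply_shock(baseline, "North America", 0.20)

before, after = shares(baseline), shares(shocked)
for region in baseline:
    print(f"{region}: {before[region]:.1f}% -> {after[region]:.1f}%")
# North America: 50.0% -> 44.4%
# Europe: 30.0% -> 33.3%
# Asia-Pacific: 20.0% -> 22.2%
```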

That's a pretty cool example. "What if the shock event was 50%?" I'm going to skip that one, because it's pretty obvious what it's going to do. Next: "Which regions have sales above or below their range?" Oh, yeah, interesting. So maybe the previous question was... no, I don't think so.

I think it's... Huh. Do we have a problem? A connectivity problem, maybe? We'll launch it again. OK. Let me just... OK.

I want to quickly show you grounding with Bing Search. I'm going to skip ahead to that one because it's important: very often you want to add internet search capabilities. So I'm going to uncomment this and use the other instructions file. Ah. What did I do?

OK. Here we go. The last one, code interpreter multilingual, we'll skip. It's important because it shows how to configure the code interpreter when you want to work with non-English languages: specific encodings, specific fonts, that kind of thing. But we're going to skip it for today.

"Resource not found"? Huh. Well, that's interesting. Did you have that problem? Did you get to that point? Is it working for you? I have that error. You have the same? Yeah. Maybe something changed. OK. I'm not sure what happened.

It may be because Build was recent and a lot of APIs have changed; maybe that one changed too? I'm not sure. Anyway, here's what would have happened. Let's look at the questions. OK. So here, "What beginner tents do we sell?" queries our product PDFs. "What beginner tents do our competitors sell? Include prices." needs to go query the internet.

You need to go find out which competitors are out there, what they sell, and for how much. So that would use the Bing grounding tool, et cetera. Same logic as before. And I'm going to wrap up and go back to my slides. OK. But just to finish my sentence:

So, one, two, three: Bing grounding is just one more tool. Just like the database query tool and the vector-database file-indexing tool, it is one more source of data that the agent can use to make informed decisions, or rather, informed reports. So we've seen how to do more with function calling, using a bunch of very powerful tools.
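To illustrate "just one more tool", here is a minimal sketch of a tool registry where web search sits alongside the database query and document search tools.

- The `ToolSpec` class, tool names, descriptions, and stub functions are hypothetical.
- Real agent SDKs have their own tool-definition formats.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical tool definition: a name, a description the model reads
    when choosing a tool, and the function that does the work."""
    name: str
    description: str
    run: Callable[[str], str]


def make_registry(*specs: ToolSpec) -> dict[str, ToolSpec]:
    return {spec.name: spec for spec in specs}


def tool_menu(registry: dict[str, ToolSpec]) -> str:
    """Render the tool list as text the model can choose from."""
    return "\n".join(f"- {s.name}: {s.description}" for s in registry.values())


# Stub implementations; adding web search is just one more entry.
registry = make_registry(
    ToolSpec("query_sales_db", "Run a SQL query over sales data.", lambda q: "rows"),
    ToolSpec("search_product_docs", "Search indexed product documents.", lambda q: "passages"),
    ToolSpec("web_search", "Search the internet, e.g. for competitor prices.", lambda q: "results"),
)
print(tool_menu(registry))
```

Because the agent picks tools from their descriptions, a question mentioning competitor prices can be routed to web search with no special-case code.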

And yeah, that's Azure AI Agent Service. The UI today was very simple. It's always a question when you do workshops like this: do we go with a web UI, which is complicated and adds React and other web stuff that some people might not be familiar with? This one is bare-bones: it's just a CLI, the bare minimum of code, focused on making sure you understand how to build such an application.

That matters because it's a whole new paradigm, and building these applications is not trivial. And as you mentioned, there's the question of evaluation. When it comes to evaluating a plain LLM on question answering, there have been many frameworks out there for some time now. But when it comes to evaluating agents, it's a whole new world again.

Not only are you evaluating one answer, you need to evaluate a whole conversation. Plus, because the agent calls tools, you need to evaluate whether it selected the right tools, that is, the accuracy of tool selection. Anyway, we're going to see all of this tomorrow during the breakout session, where I'm going to introduce the Azure AI Evaluation SDK and its agent evaluation capabilities, which are fascinating.
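Here is a rough illustration of scoring tool selection. This is a hand-rolled sketch, not the Azure AI Evaluation SDK's API.

- For each turn, it compares the tools the agent was expected to call with the tools it actually called.
- It then averages the scores over the whole conversation.
- The labelled turns are hypothetical.

```python
from __future__ import annotations


def tool_selection_scores(expected: list[str], called: list[str]) -> dict[str, float]:
    """Set-based precision/recall of the tools an agent called versus the
    tools it should have called in one turn (order and repeats ignored)."""
    exp, got = set(expected), set(called)
    hits = len(exp & got)
    # Convention chosen here: if no tools were called, precision is 1.0;
    # if no tools were expected, recall is 1.0.
    precision = hits / len(got) if got else 1.0
    recall = hits / len(exp) if exp else 1.0
    return {"precision": precision, "recall": recall}


def conversation_scores(turns: list[tuple[list[str], list[str]]]) -> dict[str, float]:
    """Average the per-turn scores over a whole conversation."""
    per_turn = [tool_selection_scores(exp, got) for exp, got in turns]
    return {
        key: sum(score[key] for score in per_turn) / len(per_turn)
        for key in ("precision", "recall")
    }


# Hypothetical labelled conversation: (expected tools, tools actually called).
turns = [
    (["search_product_docs"], ["search_product_docs"]),
    (["search_product_docs", "web_search"], ["search_product_docs"]),
]
print(conversation_scores(turns))  # {'precision': 1.0, 'recall': 0.75}
```

In this example, low recall flags the missed web search on the competitor question, even though every tool the agent did call was appropriate.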

That QR code has a bunch of additional resources, and I'll point back to my contact info if you want it. Do you have questions? We have three minutes. Oh, no, one minute, sorry. Yes, you first. The tools used by agents: do they have to be part of the Azure ecosystem?

No, you can mix. When you create an agent in Azure AI Foundry, you have a bunch of pre-made tools that are readily available; you just click on one and configure it. Which ones exactly, I can't remember off the top of my head. You would have to go look, to be honest.

But the tools we defined today are client side: we define them in code. Well, except Bing grounding. You declare it client side, but the actual Bing grounding call happens on the server side. The SQL query, though, is client side. So we actually had a mix of both today.
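The client-side/server-side split can be sketched as a dispatcher that executes only the tools the client owns.

- The tool names and the `handle_tool_call` helper are hypothetical.
- Treating a server-side tool call as "nothing to run locally" is a simplifying assumption about how such calls surface to the client.

```python
from __future__ import annotations

from typing import Callable, Optional

# Hypothetical split. Client-side tools run in our own process...
CLIENT_TOOLS: dict[str, Callable[[str], str]] = {
    "query_sales_db": lambda sql: f"executed locally: {sql}",
}
# ...while server-side tools (like Bing grounding) are only declared by the
# client; the service performs the actual call.
SERVER_TOOLS = {"bing_grounding"}


def handle_tool_call(name: str, arguments: str) -> Optional[str]:
    """Run client-side tools locally; return None for server-side tools,
    since there is nothing to execute on the client for them."""
    if name in CLIENT_TOOLS:
        return CLIENT_TOOLS[name](arguments)
    if name in SERVER_TOOLS:
        return None
    raise KeyError(f"unknown tool: {name}")


print(handle_tool_call("query_sales_db", "SELECT region, SUM(revenue) FROM sales GROUP BY region"))
print(handle_tool_call("bing_grounding", "beginner tents prices"))  # None
```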

So I know the agent service in AI Foundry is crazy right now. Why should we use the agent service versus just the model serving endpoint for, like, GPT-4o or GPT-4.1? If we can instantiate the tools in the application itself, do we need to use the agent service inside Azure at all?

Or can we just stick with the regular old LLM approach? You do not need to use it. Like I said earlier, Azure AI Agent Service, which is what I used for everything I showed today, is just a managed AI system. It's managed in the sense that, like most developers, every time you build an AI application these days you need a vector database, you need conversations, you need to remember and store them, and you need to search the web.

Those are the basic features that every single AI application out there needs, or most of them anyway. Azure AI Agent Service makes this super easy and manages everything for you. But no, you do not have to use it. You can use the bare-bones LLM completion API from two years ago and do whatever you want with it.

It's just easier. You have a full-blown API, and it manages persistence for you.
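To make "it manages persistence" concrete, here is a minimal sketch of what you take on yourself with a bare completion API: loading history, calling the model, and saving both sides of the turn.

- The `ConversationStore` class and the JSON-file layout are hypothetical.
- `fake_complete` is a stand-in; a real app would call an actual model endpoint there.

```python
from __future__ import annotations

import json
import tempfile
from pathlib import Path
from typing import Callable


class ConversationStore:
    """Hypothetical minimal persistence: one JSON file per conversation
    thread, holding a list of {"role": ..., "content": ...} messages."""

    def __init__(self, directory: Path) -> None:
        self.directory = directory
        self.directory.mkdir(parents=True, exist_ok=True)

    def _path(self, thread_id: str) -> Path:
        return self.directory / f"{thread_id}.json"

    def load(self, thread_id: str) -> list[dict[str, str]]:
        path = self._path(thread_id)
        return json.loads(path.read_text()) if path.exists() else []

    def append(self, thread_id: str, role: str, content: str) -> None:
        messages = self.load(thread_id)
        messages.append({"role": role, "content": content})
        self._path(thread_id).write_text(json.dumps(messages))


def chat_turn(store: ConversationStore, thread_id: str, user_text: str,
              complete: Callable[[list[dict[str, str]]], str]) -> str:
    """One turn against a bare completion function: persist the user message,
    send the full history, persist the reply."""
    store.append(thread_id, "user", user_text)
    reply = complete(store.load(thread_id))
    store.append(thread_id, "assistant", reply)
    return reply


# Fake model for illustration: reports how many messages it was sent.
def fake_complete(messages: list[dict[str, str]]) -> str:
    return f"got {len(messages)} message(s)"


with tempfile.TemporaryDirectory() as tmp:
    store = ConversationStore(Path(tmp))
    first = chat_turn(store, "thread-1", "Which region sells the most?", fake_complete)
    second = chat_turn(store, "thread-1", "And by product?", fake_complete)
    history = store.load("thread-1")

print(first, "|", second, "|", len(history))  # got 1 message(s) | got 3 message(s) | 4
```

This covers only conversation storage. A managed service would also take care of things like vector search and server-side tools, which you would otherwise wire up yourself.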
