Designing AI-Intensive Applications - swyx

Chapters

0:0 Conference Welcome and Overview0:42 Conference Logistics and Growth

1:47 Audience Preferences and Survey

2:22 Innovations in AI Engineering (MCP and Chatbots)

2:58 Evolution of AI Engineering (Past Talks)

3:50 Simplicity in AI Engineering

4:17 AI Engineering as a Developing Field

5:23 Seeking the "Standard Model" in AI Engineering

6:2 Candidate Standard Models in AI Engineering

9:26 Human Input vs. AI Output (AI News Example)

11:5 SPADE Model for AI-Intensive Applications

12:29 Call to Action for Conference Attendees

Transcript

- - Okay. Hi everyone, welcome to the conference, how are you doing? Excellent. Usually I open these conferences with a small little talk to introduce what's going on and then give you a little update on where the state of AI engineering is and how we put together the conference for you.

This is one of those combined talks. I'm trying to answer every single question you have about the conference, about AI news, about where this is all going, and we'll just dive right in. Okay, so, 3,000 of you, all of you registered last minute. Thank you for that stress. I actually can't quantify this.

I call this the Gini coefficient for the AI organizer stress. This is compared to last year. It is, please just buy tickets earlier. Like, I mean, you know you're going to come, just do it. Okay. We also like to use this conference as a way to track the evolution of AI engineering.

That's, those are the tracks for last year. We've just doubled every single track for you. So, basically, it's basically, you know, like double the value for whatever you get here, and I think, like, you know, I think this is as much concurrency as we want to do. Like, I know, I hear that people have decision fatigue and all that totally, but also we try to cover all of AI, so deal with it.

We also pride ourselves in doing well by being more responsive than other conferences like NeurIPS and being more technical than other conferences like TED or whatever, what have you. So, we asked you what you wanted to hear about. These are the surveys. We tried all sorts of things. We tried computer using agents.

We tried AI and crypto. It's always a fun one. And, but you guys told us what you wanted, and we put it in there. For all, for more data, we would actually like you to finish out our survey with surveys not done. So, if you want to head to that URL, we will present the results in full tomorrow.

We would love all of you to fill it out so we can get a representative sample of what you want, and they'll inform us next year. Okay. You know, I think the other thing about AI engineering is that we also have been innovating as engineers, right? We're the first conference to have an MCP.

We're the first conference to have an MCP talk accepted by MCP. Shout out to Sam Julian from Ryder for working with us on the official chatbot, and Quinn and John from Daily for working with us on the official VoiceBot, as well as Elizabeth Treichen from Vappy. I need to give her a shout out because she originally helped us prototype the VoiceBot as well.

So, we're trying to constantly improve the experience. The other thing I think I want to emphasize as well is, like, these are the talks that I give. In 2023, the very first AIE, I talked about the three types of AI engineer. In 2024, I talked about how AI engineering was becoming more multidisciplinary, and that's why we started the World's Fair with multiple tracks.

In 2025, in New York, we talked about the evolution and the focus on agent engineering. So, where are we now in sort of June of 2025? That's where we're going to focus on. I think we've come a long way, regardless. Like, you know, people used to make fun of AI engineering, and I anticipated this.

We used to be low status. People just derived GPT wrappers. And look at all the GPT wrappers now. All of you are rich. So, we're going to hear from some of these folks in the room, and thank you for sponsoring, as well. But, you know, I think the other thing that's also super interesting is that, like, you should-- the consistent lesson that we hear is to not overcomplicate things.

From Anthropic on the Latent Space podcast, we hear from Eric Stuntz about how they beat a sweep bench with just a very simple scaffold. Same about deep research from Greg Brockman, who you're going to hear later on in the sort of closing keynotes. As well as AMP code. Where's the AMP folks here?

AMP? AMP? AMP? I think they're probably back in the other room. But also, you know, there's a sort of emperor has no clothes. Like, there's-- it's still a very early field, and I think the AI engineers in the room, like, should be very encouraged by that. Like, there's still a lot of alpha to mine.

If you watch back all the way to the start of this conference, we actually compared this moment a lot to the time when sort of physics was in full bloom. Like, this is the Solvay conference in 1927 when Einstein, Marie Curie, and all the other household names in physics were all gathered together, and that's what we're trying to do for this conference.

We've gathered the entire-- the best sort of AI engineers in the world and researchers and all that to build and push the frontier forward. The thesis is that there's-- this is the time. This is the right time to do it. I said that two and a half years ago, still true today.

But I think, like, there's a very specific time when, like, basically, what people did in that time of the formation of an industry is that they set out all the basic ideas that then lasted for the rest of that industry. So this is the standard model in physics, and there was a very specific period in time from, like, the '40s to the '70s where they figured it all out, and the next 50 years we haven't really changed the standard model.

So the question that I want to phrase here is, what is the standard model in AI engineering? Right? We have standard models in the rest of engineering, right? Everyone knows ETL. Everyone knows MVC. Everyone knows CRUD. Everyone knows MapReduce. And I've used those things in, like, building AI applications.

And, like, it's pretty much like, yes, RAG is there, but I heard RAG is dead. I don't know. You guys can tell me. This day is, like, long context-filled RAG. The other day, fine-tuning kills RAG. I don't know. But I don't think-- I definitely don't think it's the full answer.

So what other standard models might emerge to help us guide our thinking? And that's really what I want to push you guys to. So there are a few candidates, standard models in AI engineering. I'll pick out a few of these. I don't have time to talk about all of them.

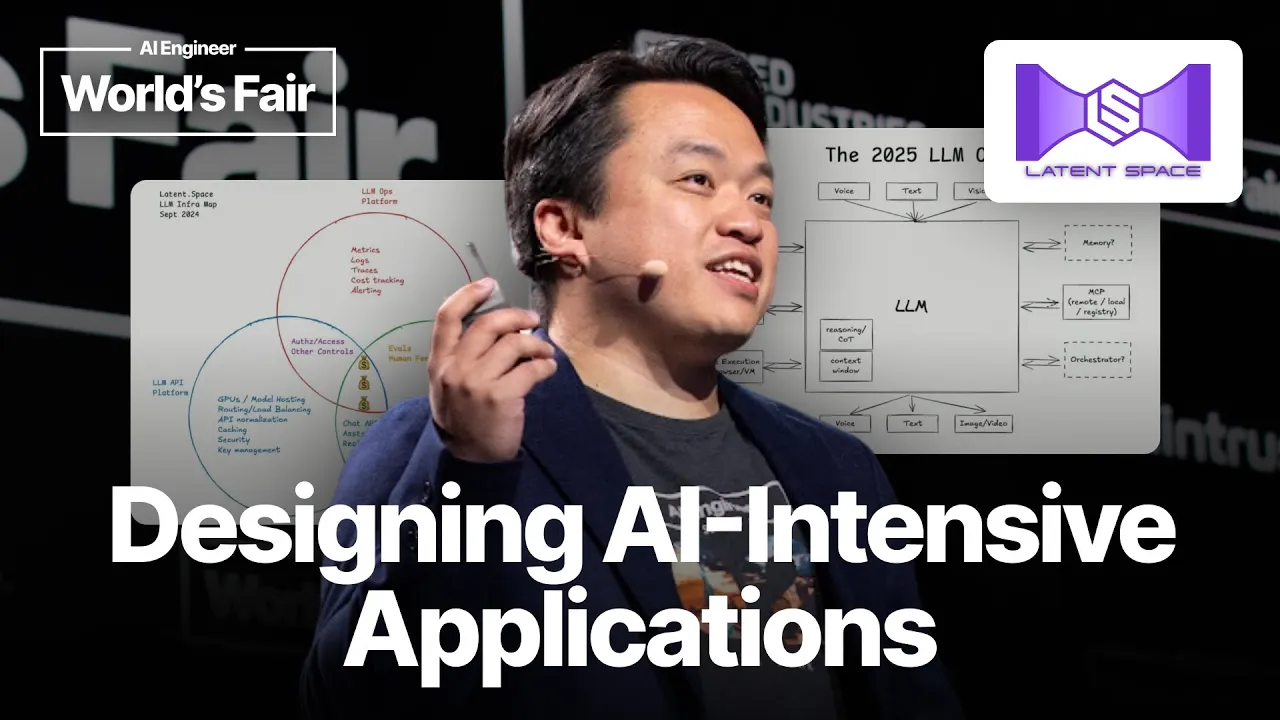

But definitely listen to the DSPy talk from Omar later, tomorrow. So we're going to cover a few of these. So first is the LMOS. This is one of the earliest standard models, basically, from Karpavi in 2023. I have updated it for 2025 for multimodality, for the standard set of tools that have come out, as well as MCP, which has become the default protocol for connecting with the outside world.

Second one would be the LNSDLC, Software Development Lifecycle. I have two versions of this, one with the intersecting concerns of all the tooling that you buy. By the way, this is all on the Latent Space blog, if you want. And I'll tweet out the slides, so-- and it's live stream, so whatever.

But I think, for me, the most interesting insight in the aha moment when I was talking to Ankur of Braintrust, who is going to be keynoting tomorrow, is that, you know, the early parts of the SDLC are increasingly commodity, right? LLM's kind of free, you know, monitoring kind of free, and RAG kind of free.

Obviously, there's-- it's just free tier for all of them, and you only get-- start paying. But like, when you start to make real money from your customers, it's when you start to do evals, and you start to add in security, orchestration, and do real work. That is real hard engineering work.

And I think that's-- those are the tracks that we've added this year. And I'm very proud to, you know, I guess, push AI engineering along from demos into production, which is what everyone always wants. Another form of standard model is building effective agents. Our last conference, we had Barry, one of the co-authors of Building Effective Agents from Anthropic, give an extremely popular talk about this.

I think that this is now at least the received wisdom for how to build an agent. And I think, like, that is one definition. OpenAI has a different definition. And I think we're just going to continue to iterate. I think Dominic yesterday released another improvement on the agents SDK, which builds upon the Swarm concept that the OpenAI is pushing.

The way that I approach sort of the agent standard model has been very different. So you can refer to my talk from the previous conference on that. I'm basically trying to do a descriptive top-down model of what people use, the words people use to describe agents, like intent, you know, control flow, memory planning, and tool use.

So there's all these, like, really, really interesting things. But I think that the thing that really got me is, like, I don't actually use all of that to build AI news. By the way, who here reads AI news? I don't know if there's, like-- yeah? Oh, my god, like, there's half of you.

Thanks. It's a really good tool I built for myself. And, you know, hopefully now over 70,000 people are reading along as well. And the thing that really got me was Sumith. At the last conference, you know, he's the lead of PyTorch. And he says he reads AI news, he loves it, but it is not an agent.

And I was like, what do you mean it's not an agent? I call it an agent, and you should call it an agent. But he's right. It's actually-- it's actually-- I'm going to talk a little bit about that. But, like, why does it still deliver value, even though it's, like, a workflow?

And, like, you know, is that still interesting to people? Right? Like, why do we not brand every single track here-- voice agents, you know, like, workflow agents, computer use agents? Like, why is every single track in this conference not an agent? Well, I think, basically, we want to deliver value instead of arguable terminology.

So the assertion that I have is that it's really about human input versus valuable AI output. And you can sort of make a mental model of this and track the ratio of this, and that's more interesting than arguing about definitions of workflow versus agents. So, for example, in the co-pilot era, you had sort of, like, a debounce input of, like, every few characters that you type that maybe you'll do in autocomplete.

In ChatGPT, every few queries that you type, you'll maybe output responding query. It starts to get more interesting with the reasoning models with, like, a 1 to 10 ratio. And then, obviously, with, like, the new agents, now it's, like, more sort of deep research, Notebook LM. By the way, Ryza Martin, also speaking on the product management track, she's incredible on talking about the story of Notebook LM.

The other really interesting angle, if you want to take this mental model to stretch it, is the 0 to 1, the ambient agents. With no human input, what kind of interesting AI output can you get? So, to me, that's more a useful discussion about input versus output than what is a workflow?

What is an agent? How agentic is your thing versus not? Talking about AI news, so, you know, it is like a bunch of scripts in a trench code. And I realized I've written it three times. I've written it for the Discord scrape. I've written it for the Reddit scrape.

I've written it for the Twitter scrape. And basically, it's just-- it's always the same process. You scrape it, you plan, you recursively summarize, you format, and you evaluate. And yeah, that's the three kids in a trench coat. And that's really what it is. I run it every day, and we improve it a little bit, but then I'm also running this conference.

So if you generalize it, that actually starts to become an interesting model for building AI-intensive applications, where you start to make thousands of AI calls to serve a particular purpose. So you sync, you plan, and you sort of parallel process. You analyze and sort of reduce that down to-- from many to one.

And then you deliver the contents to the user, and then you evaluate. And to me, like, that conveniently forms an acronym, S-P-A-D-E, which is really nice. There's also sort of interesting AI engineering elements that fit in there. So you can process all these into a knowledge graph. You can turn these into, like, structured outputs.

And you can generate code as well. So for example, you know, ChatGPT with Canvas, or Claude with artifacts, is a way of just delivering the output as a code artifact instead of just text output. And I think it's like a really interesting way to think about this. So this is my mental model so far.

I wish I had the space to go into it. But ask me later. This is what I'm developing right now. But I think what I would really emphasize is, you know, I think, like, there's all sorts of interesting ways to think about what the standard model is, and whether it's useful for you in taking your application to the next step of, like, how do I add more intelligence to this in a way that's useful and not annoying?

And for me, this is it. OK, so I've thrown a bunch of standard models in here. But that's just my current hypothesis. I want you at this conference, in all your conversations with each other and with the speakers, to think about what the new standard model for AI engineering is.

What can everyone use to improve their applications? And I guess, ultimately, build products that people want to use, which is what Laurie mentioned at the start. So I'm really excited about this conference. It's such an honor and a joy to get it together for you guys. And I hope you enjoy the rest of the conference.

Thank you so much. Thank you so much. Thank you. Thank you. Thank you. Thank you. Thank you.