NER Powered Semantic Search in Python

Chapters

0:00 NER Powered Semantic Search

1:19 Dependencies and Hugging Face Datasets Prep

4:18 Creating NER Entities with Transformers

7:00 Creating Embeddings with Sentence Transformers

7:48 Using Pinecone Vector Database

11:33 Indexing the Full Medium Articles Dataset

15:09 Making Queries to Pinecone

17:01 Final Thoughts

Transcript

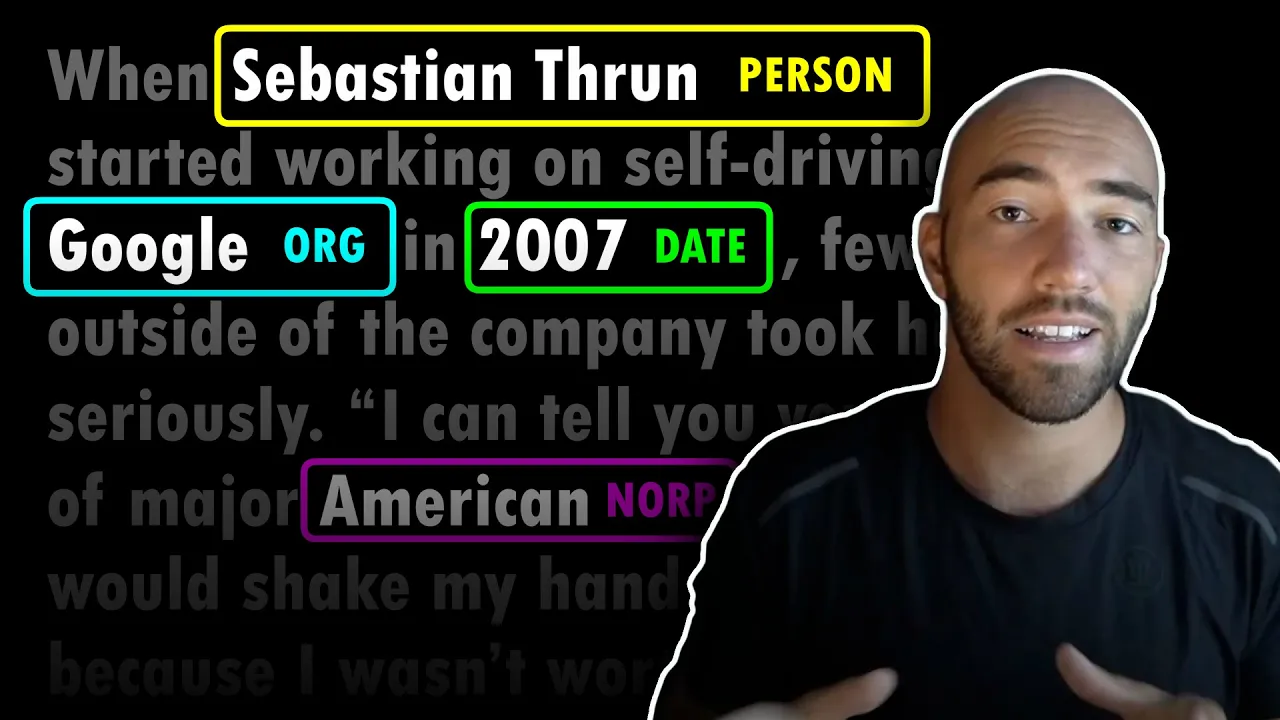

Today we're going to go through an example of using named entity recognition alongside vector search. This is a really interesting way of making sure our search is focused on exactly what we're looking for. For example, if we are searching through articles for something to do with Tesla, maybe some news about Tesla and full self-driving, we can use this to restrict the search scope for "full self-driving" to articles, or parts of articles, that contain the named entity Tesla.

Now, I think this is a really interesting use case, and it can definitely help make search more specific. So let's jump into the example. We're working from this example page on Pinecone, pinecone.io/examples/nersearch. Ashok thought of this and put it all together, and I think it's a really cool example.

So we'll work through the code. To get started, I'm going to come over to Colab here and install a few dependencies: sentence-transformers, pinecone-client, and datasets. Okay, so that will just install everything. Great, and then we can download the dataset that we're going to be using.

So from datasets we import load_dataset. We are going to be using this dataset here, the Medium articles dataset. I'll search for it right here. Okay, it contains a ton of articles straight from Medium. To use it, we take the name of the dataset from here and load it as a Hugging Face dataset.

We set data_files to the Medium articles CSV, and we want the train split. Then we have a look at the head after converting it to a pandas DataFrame with to_pandas. It'll just take a moment to download. Okay, and I'll zoom out a little bit so you can see what we have here.

So we have title, text, url, and a few other things in here. Obviously the text is going to be the main part for us. What we will also do is drop any rows that have empty values, which is the dropna here, and then we sample 50,000 of these articles at random, with a random state of 32.
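As a small illustration of the dropna and sample step, here is a sketch on a toy DataFrame. The five rows are made up for the example (the real dataset is much larger and the video samples 50,000 rows); only `dropna`, `sample`, and the random state of 32 come from the walkthrough.

```python
import pandas as pd

# Toy stand-in for the articles table; the values are hypothetical.
df = pd.DataFrame(
    {
        "title": ["A", "B", None, "D", "E"],
        "text": ["alpha", None, "gamma", "delta", "epsilon"],
    }
)

# Drop every row that has a missing value in any column.
clean = df.dropna()

# Sample at random with a fixed seed so the same rows come back each time.
# The video samples 50,000 rows; 2 is used here because the toy table is tiny.
sample = clean.sample(2, random_state=32)
again = clean.sample(2, random_state=32)

print(len(clean), sample.equals(again))
```

Fixing `random_state` is what makes the sample reproducible across runs and machines with the same pandas version.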

Essentially, this is so you can get the same 50,000 articles that I get here. Okay, cool. Now, there are a few things we could do when putting the embeddings together, because we're going to have to create embeddings for every single article here.

What we could do is split each article into parts, because our embedding models can only handle so many words at one time, and then embed all those different parts. But in this case we can usually get an idea of what the article will talk about based on the title and, usually, the introduction.

So what we'll do is actually take the first 1,000 characters of the text, which you can see here, and then we join the article title and the text and keep all of that in the title_text feature. We'll go to df.head again, and maybe we can just have a look at title_text, so let's do that.
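A minimal sketch of the truncate-and-join step on a one-row toy DataFrame. The row contents and the ". " separator between title and text are assumptions made for illustration; the 1,000-character cut and the `title_text` column follow the description above.

```python
import pandas as pd

# One hypothetical article whose body is longer than 1,000 characters.
df = pd.DataFrame({"title": ["Why Mars"], "text": ["x" * 1500]})

# Keep only the first 1,000 characters of each article body.
df["text"] = df["text"].str[:1000]

# Join title and truncated text; the ". " separator is an assumption.
df["title_text"] = df["title"] + ". " + df["text"]

print(len(df["text"][0]), len(df["title_text"][0]))
```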

Okay, so we have this. Now the next thing we want to do is initialize our NER model. How do we do that? We're going to be using Hugging Face for this, so I'll just copy the code in. We have all of this, which is an NER pipeline with an NER model.

So we have this dslim/bert-base-NER model for named entity recognition. We have our tokenizer and the model itself, and then we just load all of that into our pipeline. We need to select our device, so if we have a GPU available we will want to use it, because it will be much faster.
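The pipeline setup described here could look roughly like the sketch below. It assumes the model is `dslim/bert-base-NER` on the Hugging Face Hub and that entity pieces are merged with `aggregation_strategy="max"`; neither detail is spelled out in the transcript. The function name `build_ner_pipeline` is hypothetical, and the import sits inside it so nothing is downloaded until it is called.

```python
MODEL_ID = "dslim/bert-base-NER"  # assumed model ID on the Hugging Face Hub


def build_ner_pipeline(device=-1):
    """Return a token-classification pipeline for named entity recognition.

    device: -1 runs on CPU, 0 selects the first CUDA GPU.
    """
    # Imported here so the heavy dependency is only needed when this runs.
    from transformers import (
        AutoModelForTokenClassification,
        AutoTokenizer,
        pipeline,
    )

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForTokenClassification.from_pretrained(MODEL_ID)
    return pipeline(
        "ner",
        model=model,
        tokenizer=tokenizer,
        aggregation_strategy="max",  # assumption: merge word pieces into whole entities
        device=device,
    )
```

Calling `build_ner_pipeline()` downloads the model weights on first use, so it may take a moment.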

So I'm going to import torch, and what we're going to do is say device equals cuda if a CUDA device is available, which is torch.cuda.is_available, and otherwise we're just going to use the CPU. Now, if you are on Colab, what you can do to make sure you are using a GPU is go to Runtime, Change runtime type, and change this to GPU.
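The device check is a one-liner with PyTorch. The sketch below wraps it in a hypothetical helper, `pick_device`, that also falls back to the CPU when torch is not installed; that fallback is an addition for convenience, not something from the video.

```python
def pick_device() -> str:
    """Return "cuda" when a CUDA GPU is visible to PyTorch, else "cpu"."""
    try:
        import torch
    except ImportError:
        # Assumption: without PyTorch installed we can only report CPU.
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print(device)
```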

Okay, so mine wasn't set to GPU, so now I need to change it, save, and rerun everything. I'll go ahead and do that. Okay, so that all just reran, and what I'm going to do is print the device here so that we can see that we are in fact using CUDA, hopefully.

Okay, cool. Then here we will run all this, and that will download and initialize the model as well, so it may take a moment. After that, let's try it on a quick example. We have "London is the capital of England and the United Kingdom", so we would expect a few named entities to be in there. Let's run that and see what we get back.

Okay, with this, maybe I need to write a torch device here instead. Okay, cool. So here we have a few things. We have a location, which is the entity type, for London. That is definitely true. Location again for England, and location again for United Kingdom. All great, so that's definitely working. Let's move on to creating our embeddings.
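To make the shape of that output concrete, here is a small sketch that pulls the entity type and text out of an aggregated NER result. The `sample` records are hand-written to mirror the three entities mentioned above, not real model output; real results also carry `score`, `start`, and `end` fields. The `entity_group` key assumes the pipeline was built with an aggregation strategy, and `entity_pairs` is a hypothetical helper name.

```python
def entity_pairs(results):
    """Return (entity type, entity text) pairs from one text's NER results."""
    return [(r["entity_group"], r["word"]) for r in results]


# Hand-written records in the format of an aggregated NER pipeline result.
sample = [
    {"entity_group": "LOC", "word": "London"},
    {"entity_group": "LOC", "word": "England"},
    {"entity_group": "LOC", "word": "United Kingdom"},
]

print(entity_pairs(sample))
```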

So to create those embeddings we will need a retriever model. To initialize that, we're going to be using the sentence-transformers library, and it will look something like this: from sentence_transformers import SentenceTransformer, and then we initialize this model here. It's a pretty good sentence transformer model that's been trained on a lot of examples, so the performance is generally really good.

Okay, now we can see the model format here. We have a max sequence length of 128 tokens and a word embedding dimension of 768, so that's how big the sentence embeddings, the sentence vectors, will be. We can use this model to create our embeddings, but we need something to store them in, and for that we're going to be using Pinecone. So we will initialize that, and for that we will need to do this.
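As a rough sketch of the Pinecone setup that this part of the video walks through: read the embedding size from the retriever, create the index if it does not exist, and return a handle to it. This assumes the older v2-style `pinecone-client` interface (`pinecone.init`, `pinecone.create_index`, `pinecone.list_indexes`, `pinecone.Index`); newer client versions use a different interface. The helper name `ensure_index` and the index name `ner-search` are assumptions.

```python
def ensure_index(pc, index_name, retriever, metric="cosine"):
    """Create `index_name` if it is missing, then return a handle to it.

    pc: the `pinecone` module after `pinecone.init(...)` (v2-style client, assumed).
    retriever: a SentenceTransformer, used only to read the embedding size.
    """
    # Ask the model for its vector size instead of hard-coding 768.
    dimension = retriever.get_sentence_embedding_dimension()
    if index_name not in pc.list_indexes():
        pc.create_index(index_name, dimension=dimension, metric=metric)
    return pc.Index(index_name)


# Usage sketch (API_KEY and ENVIRONMENT are placeholders you must supply):
# import pinecone
# pinecone.init(api_key=API_KEY, environment=ENVIRONMENT)
# index = ensure_index(pinecone, "ner-search", retriever)
# index.describe_index_stats()  # a new index should report zero vectors
```

Checking `list_indexes` first means the cell can be rerun without failing on an index that already exists.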

So we import pinecone and initialize our connection to Pinecone. For that we need an API key, which is free, and we can get it from app.pinecone.io. We need to log in there. You probably just have one project in there, which will probably be your default project with your name on it. Go into that, go to API keys, press copy on your API key, and paste it in here. I've put my API key into a variable called API key, and with that we can initialize the connection.

Now we'll create a new index called NER search, and we do that with pinecone.create_index. There are a few things we need here: the index name and the dimensionality, so dimension. This has to match the retriever model that we just created, which has an embedding dimension, and we actually have that up here: it's 768. But we're not going to hard-code it, we're just going to get it from the model with get_sentence_embedding_dimension. If we have a look at what that gives us, it should be 768. Yeah. We also want to use the cosine similarity metric, and then that will go ahead and create our index.

After it's been created, we connect to it. So we do pinecone.Index and just use the index name again, and then we can describe the index to see what is in there, which should be nothing. Okay, cool. We have the dimensionality, and you can see that the index is empty, as we haven't added anything to it yet.

So the next thing is preparing all of our data to be added to Pinecone so that we can do this search. What we're going to do first is initialize this extract named entities function, which takes a batch of text and extracts the named entities for each of those text records. Let's initialize that, and then have a look at how it works. So we call extract named entities, and in there we pass the data that we have, which is df title_text. Maybe we'll turn this into a list, yeah, let's do that. So df title_text, and I want to go for, let's say, the first three items, and we'll turn it into a list if it isn't already one. Okay, cool. For the first one we get Data, Christmas, Anonymous, America, Light Switch, and so on. You can see we're getting those entities extracted from the text, so that is working.

What we can do now is loop through the full dataset, or the full 50,000 that we sampled. Let me walk you through all this. We're going to be doing everything in batches of 64. That's to avoid overwhelming our GPU, which will be encoding everything in batches of 64, and also because when we send these requests to Pinecone we can't send too many, or too large, requests at any one time. With 64 we're pretty safe. We find the end of the batch, so we're going in batches of 64 from i to i_end. Obviously, when you get to the end of the dataset the batch will probably be smaller, because the dataset probably doesn't divide perfectly into batches of 64.

Then we extract the batch, generate the embeddings, and extract the named entities using our function. There may be duplicates, because we might have the same named entity a few times in a single sentence or a single paragraph. We don't need to keep those, because we're just going to be filtering, so we only need one instance of each named entity. To do that, we deduplicate our entities here and convert the result back into a list. Then we drop title_text from our batch, because we don't need it at this point: we've already extracted our named entities, and that's all we care about at the moment. From there we create our metadata, which is what we store in a dictionary-like format in Pinecone, create our unique IDs, which are just a count, add everything to the upsert list, and upsert.

So we can run that, and it will possibly take a little while. I may have to deal with something quickly here: the entities can contain a list, so probably the best way to deal with that is within the function. Let's come up here and remove the duplicates there, so ne equals list(set(ne)). Then we don't have to do it down here, so I can remove that, and we'll just call this, I suppose, batch named entities. Okay, cool. Let's try and run that again. Great, that looks like it will work. It will take a little bit of time, so I will leave that to run, and when it's complete we'll go through actually making queries and see how that works.

Okay, so that is done. I've just run it on another, faster computer, so we should be able to describe the index stats here and see 50,000 items. The one thing is that I need to refresh the connection, so let me do that quickly. We'll come up here, I'm going to run this again, and I'm also going to run this without the create index. Come down here, run this, and here we go: we have 50,000 vectors in there now.

What we want to do now is use this function here, which is going to search through Pinecone. We have our query, we extract the named entities from our query, and we embed our query. Then we query with our query vector, which is this xq. We return the top 10 most similar matches, we include the metadata for those matches, and, importantly for the named entity filtering, we filter for named entities that are within the list that we have here. So let's run that. This will just return all the metadata that we have there, so the titles that we might want.

Then we simply make a few queries. "What are the best places to visit in Greece" will be our first query, and we get these titles here: budget-friendly holidays, what's the best summer destination, Greece, exploring Greece, Santorini island. They all seem like pretty relevant articles to me. Let's ask a few more questions. "What are the best places to visit in London": run this, and we get a few more. Pretty relevant again, I think. And then let's try one slightly different query: "Why SpaceX wants to build a city on Mars". Here we go. For sure, I think all of these are very relevant articles to be returned.

Okay, so that's it for this example, covering vector search with this named entity filtering component, just to try and improve our results and make sure we are searching within a space that contains whatever it is we are looking for, or in other words making our search scope more specific. I hope this has been interesting and useful. Thank you very much for watching, and I will see you again in the next one. Bye.
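As a recap of the indexing loop and the filtered query walked through above, here is a minimal sketch. It is written against plain callables and a duck-typed `index` so it runs without any services: `embed` stands in for the retriever, `extract_entities` for the NER function, and `index` for a Pinecone index with `upsert` and `query` methods. The function names and the `named_entities` metadata field are assumptions, not taken from the video.

```python
def dedupe(entities):
    """Drop repeated entities; order does not matter for filtering."""
    return list(set(entities))


def index_in_batches(records, embed, extract_entities, index, batch_size=64):
    """Embed, tag, and upsert `records` (dicts with a "title_text" key) in batches.

    embed: callable mapping a list of strings to a list of vectors.
    extract_entities: callable mapping a list of strings to a list of entity lists.
    index: any object with an `upsert(vectors=...)` method.
    """
    for i in range(0, len(records), batch_size):
        i_end = min(i + batch_size, len(records))  # the last batch may be short
        batch = records[i:i_end]
        texts = [r["title_text"] for r in batch]
        vectors = embed(texts)
        entities = [dedupe(e) for e in extract_entities(texts)]
        # Metadata keeps every field except title_text, plus the entities.
        metadata = [
            {**{k: v for k, v in r.items() if k != "title_text"}, "named_entities": ne}
            for r, ne in zip(batch, entities)
        ]
        ids = [str(n) for n in range(i, i_end)]  # a running count as unique IDs
        index.upsert(vectors=list(zip(ids, vectors, metadata)))


def search(query, embed, extract_entities, index, top_k=10):
    """Query the index, keeping only matches that share an entity with the query."""
    ne = dedupe(extract_entities([query])[0])
    xq = embed([query])[0]
    return index.query(
        vector=xq,
        top_k=top_k,
        include_metadata=True,
        filter={"named_entities": {"$in": ne}},  # metadata filter on entities
    )
```

The `$in` filter is what narrows the search scope: a vector is only eligible if its stored entity list contains at least one entity found in the query.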