From this post, I learned that Cognition, the company behind Devin, created DeepWiki. They’re calling it a “Deep Research for GitHub.” They generated explainer-style wiki pages for the 30,000 most popular GitHub repos.

According to Silas Alberti:

- 30k repos already indexed

- processed 4 billion+ lines of code

- the indexing alone cost $300k+ in compute

Some interesting points he makes in his thread:

- indexing a single repo costs $12 on average

- models are very good at understanding code locally. the hard part is understanding the global structure of the codebase.

- DeepWiki breaks down a codebase into a hierarchy of high-level systems and then generates a wiki page for every system

- one interesting signal that we’re able to leverage is the commit history. who tends to touch which files together? you can represent this as a graph.
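To make the commit-history point concrete, here is a minimal sketch of one way to read it: count how often two files change in the same commit and treat those counts as edge weights in a graph. This is not DeepWiki's actual implementation, which the thread does not describe. The function name `co_change_graph` and the sample commits are made up for illustration.

```python
from collections import Counter
from itertools import combinations


def co_change_graph(commits):
    """Build a weighted co-change graph from commit history.

    `commits` is an iterable where each item lists the file paths touched
    by one commit. The result maps an (a, b) file pair to the number of
    commits that touched both files. Hypothetical helper, not DeepWiki code.
    """
    edges = Counter()
    for files in commits:
        # Sort and de-duplicate so each pair has one canonical ordering.
        for a, b in combinations(sorted(set(files)), 2):
            edges[(a, b)] += 1
    return edges


# Made-up history: each inner list is the set of files in one commit.
commits = [
    ["api/routes.py", "api/schemas.py"],
    ["api/routes.py", "api/schemas.py", "README.md"],
    ["core/engine.py", "core/cache.py"],
    ["api/routes.py", "core/engine.py"],
]

graph = co_change_graph(commits)
strongest_pair, weight = graph.most_common(1)[0]
print(strongest_pair, weight)
```

Pairs with high weights are candidates for belonging to the same high-level system, even when they live in different directories. In a real repo the commit lists could come from something like `git log --name-only`.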

You can also do Q&A on each project after clicking into it. I looked at a handful of examples and can see how valuable this is.

A few I briefly looked at:

- https://deepwiki.com/pandas-dev/pandas

- https://deepwiki.com/langchain-ai/langgraph

- https://deepwiki.com/BerriAI/litellm

- https://deepwiki.com/modelcontextprotocol/modelcontextprotocol
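The links above all seem to follow the same shape: the GitHub `owner/repo` path with `deepwiki.com` as the host. Assuming that pattern holds (I only inferred it from these four links, and a repo that has not been indexed may not have a page), a small helper can convert a GitHub URL. The function name `deepwiki_url` is my own.

```python
from urllib.parse import urlparse


def deepwiki_url(github_url):
    """Map a GitHub repo URL to its presumed DeepWiki URL.

    Assumes the deepwiki.com/<owner>/<repo> pattern seen in the links
    above; it does not check that the page actually exists.
    """
    parts = urlparse(github_url)
    if parts.netloc.lower() not in ("github.com", "www.github.com"):
        raise ValueError(f"not a GitHub URL: {github_url}")
    segments = [s for s in parts.path.split("/") if s]
    if len(segments) < 2:
        raise ValueError(f"expected an owner/repo path: {github_url}")
    owner, repo = segments[0], segments[1]
    # Clone URLs often end in .git, which is not part of the repo name.
    if repo.endswith(".git"):
        repo = repo[:-4]
    return f"https://deepwiki.com/{owner}/{repo}"


print(deepwiki_url("https://github.com/pandas-dev/pandas"))
```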

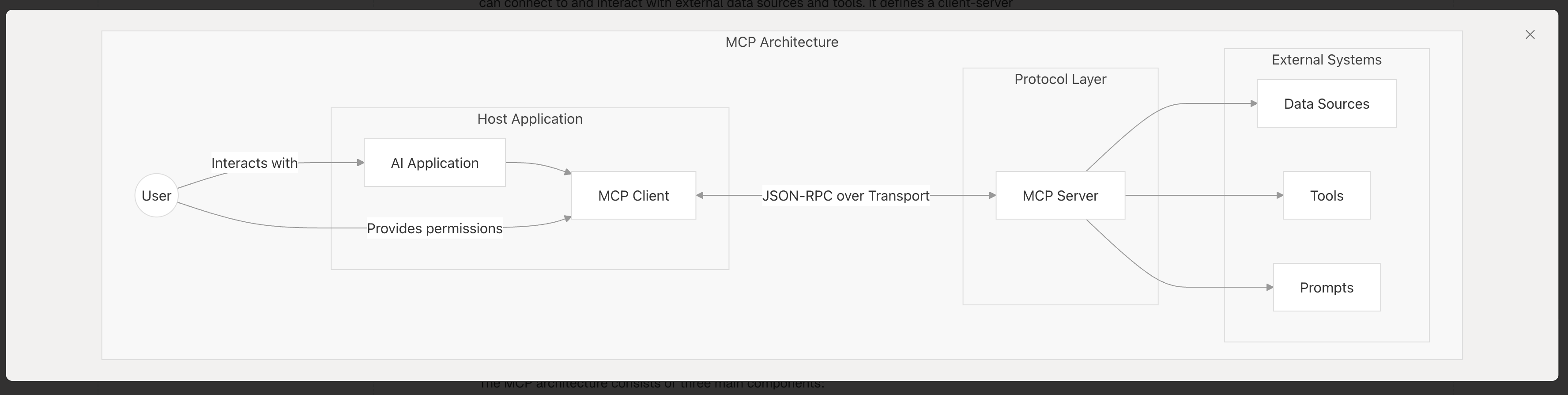

The generated diagrams are nice:

A lot of MCP servers are already in the top 30k repos: